Abstract

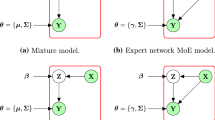

We present a flexible nonparametric generative model for multigroup regression that detects latent common clusters of groups. The model builds on two techniques that are now standard in the statistical estimation literature, the Dirichlet process (DP) and the Generalized Linear Model (GLM), and we therefore name it “Infinite MultiGroup Generalized Linear Models” (iMG-GLM). We present two versions of the core model. First, in iMG-GLM-1, we place a DP prior on the groups while modeling the response-covariate densities via GLMs, which allows the model to capture latent clusters of groups by identifying groups with similar densities. The model ensures different densities for different clusters of groups in the multigroup setting. Second, in iMG-GLM-2, we model the posterior density of a new group using the latent densities of the clusters inferred from previous groups as the prior. This spares the model from having to store the entire data of previous groups. Posterior inference for iMG-GLM-1 uses variational inference, and that for iMG-GLM-2 uses a simple Metropolis-Hastings algorithm. We demonstrate iMG-GLM’s superior accuracy in comparison with well-known competing methods, such as the Generalized Linear Mixed Model (GLMM), Random Forest, and Linear Regression, on two real-world problems.
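To make the clustering-of-groups idea concrete, the following is a minimal sketch of a generative process of the kind the abstract describes. It is not the authors' implementation. It assumes a Gaussian-response GLM with identity link, represents the DP prior through the Chinese restaurant process, and uses a Gaussian base measure for the regression coefficients. The function names (`crp_assignments`, `simulate`) and all parameter values are hypothetical and chosen only for illustration.

```python
import random


def crp_assignments(num_groups, alpha, rng):
    """Assign groups to clusters with a Chinese restaurant process.

    alpha > 0 is the DP concentration parameter (assumed value, not from the paper).
    """
    assignments = []
    counts = []  # counts[k] = number of groups already placed in cluster k
    for _ in range(num_groups):
        # An existing cluster k is chosen with weight counts[k];
        # a brand-new cluster is opened with weight alpha.
        weights = counts + [alpha]
        k = rng.choices(range(len(weights)), weights=weights)[0]
        if k == len(counts):
            counts.append(1)
        else:
            counts[k] += 1
        assignments.append(k)
    return assignments


def simulate(num_groups=8, points_per_group=20, alpha=1.0, noise_sd=0.1, seed=0):
    """Draw cluster labels, per-cluster coefficients, and per-group data."""
    rng = random.Random(seed)
    z = crp_assignments(num_groups, alpha, rng)
    num_clusters = max(z) + 1
    # One (intercept, slope) pair per cluster, drawn from a Gaussian base
    # measure (an assumption made for this sketch).
    coefs = [(rng.gauss(0, 2), rng.gauss(0, 2)) for _ in range(num_clusters)]
    data = []
    for g in range(num_groups):
        b0, b1 = coefs[z[g]]  # groups in the same cluster share coefficients
        xs = [rng.uniform(-1, 1) for _ in range(points_per_group)]
        ys = [b0 + b1 * x + rng.gauss(0, noise_sd) for x in xs]
        data.append((xs, ys))
    return z, coefs, data


z, coefs, data = simulate()
print("cluster label per group:", z)
```

Groups that receive the same label share one response-covariate density, which is the latent structure that inference would try to recover from the data alone. The sketch covers only the forward (generative) direction; it does not implement variational inference or the Metropolis-Hastings step.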

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Sk, M.I., Banerjee, A. (2016). Automatic Detection of Latent Common Clusters of Groups in MultiGroup Regression. In: Perner, P. (ed.) Machine Learning and Data Mining in Pattern Recognition. MLDM 2016. Lecture Notes in Computer Science, vol. 9729. Springer, Cham. https://doi.org/10.1007/978-3-319-41920-6_19

DOI: https://doi.org/10.1007/978-3-319-41920-6_19

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-41919-0

Online ISBN: 978-3-319-41920-6

eBook Packages: Computer Science, Computer Science (R0)