Abstract

We propose a convex formulation of the correspondence problem between two images with respect to an energy function measuring data consistency and spatial regularity. To this end, we formulate the general correspondence problem as the search for a minimal two-dimensional surface in \(\mathbb {R}^4\). We then use tools from geometric measure theory and introduce 2-vector fields as a representation of two-dimensional surfaces in \(\mathbb {R}^4\). We propose a discretization of this surface formulation that gives rise to a convex minimization problem and compute a globally optimal solution using an efficient primal-dual algorithm.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

- Correspondence Problem

- Geometric Measure Theory

- Spatial Regularity

- Efficient Primal-dual Algorithm

- Minimal Surface Problem

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

The establishment of spatially dense correspondence is one of the central computational challenges in computer vision with a wide range of applications including stereo disparity estimation [1, 2], optical flow estimation [3], shape matching [4] and medical image registration [5–7]. Correspondence estimation is often cast as an energy minimization problem including a (generally) non-convex data consistency term and a spatial regularizer. Although such optimization problems have been intensively studied in computer vision, to date an algorithm that finds a global optimum in polynomial time is not known.

1.1 Problem Statement: Diffeomorphic Matching

We consider a general correspondence estimation problem where the aim is to compute an optimal diffeomorphic image matching defined as follows. Let \(\varOmega \subset \mathbb {R}^2\) denote the image plane, i.e. an open simply-connected subset of \(\mathbb {R}^2\). We describe the data consistency between points on both images as a map \(g:\varOmega \times \varOmega \rightarrow \mathbb {R}_{\ge 0}\), where g(p, q) measures the consistency between brightness, color, depth or some high level features at point \(p\in \varOmega \) in image 1 and at point \(q\in \varOmega \) in image 2. We define the desired correspondence between both images as the optimal solution to the constrained minimization problem

Here \({{\mathrm{Diff}}}^+(\varOmega ,\varOmega )\) is the set of all orientation-preserving diffeomorphisms from \(\varOmega \) to \(\varOmega \) and \(df(p)\in \mathbb {R}^{2\times 2}\) denotes the Jacobian (or more general the differential) of f at p.  measures the deviation from isometry, \({{\mathrm{Id}}}\in \mathbb {R}^{2\times 2}\) being the identity matrix. Solving (1) therefore leads to a diffeomorphic transformation of \(\varOmega \) favoring data consistency via the term g(p, f(p)) and spatial regularity (local isometry) via W(df(p)), with \(\epsilon \in \mathbb {R}_{\ge 0}\) determining the trade-off.

measures the deviation from isometry, \({{\mathrm{Id}}}\in \mathbb {R}^{2\times 2}\) being the identity matrix. Solving (1) therefore leads to a diffeomorphic transformation of \(\varOmega \) favoring data consistency via the term g(p, f(p)) and spatial regularity (local isometry) via W(df(p)), with \(\epsilon \in \mathbb {R}_{\ge 0}\) determining the trade-off.

1.2 Related Work

While the correspondence model (1) is not the only conceivable choice, most works on correspondence finding propose similar energies that include data consistency and a spatial regularizer. Moreover, we believe that the proposed solution via geometric measure theory can be generalized to other classes of cost functions. Due to the non-convexity of the data term coupled with the spatial regularizer, such problems have evaded many attempts to determine global optima.

Formally, problem (1) differs from optical flow formulations as pioneered by Horn and Schunck [3] in that we do not search for a displacement field \(v:\varOmega \rightarrow \mathbb {R}^2\) but rather directly for a pairing of corresponding points \(f:\varOmega \rightarrow \varOmega \). The commonly used coarse-to-fine linearization strategies in optical flow estimation [8–10] often provide high-quality solutions in practice, yet they cannot guarantee optimal solutions.

To our knowledge, optimal solutions to such correspondence problems only exist in the one-dimensional setting (the stereo problem) as pioneered in the spatially discrete setting by Ishikawa [2] and in the spatially continuous setting by Pock et al. [11]. The latter framework has been generalized to higher dimensions [12], yet respective convex relaxations only provide approximate solutions with no (apriori) optimality guarantees.

We would like to stress that our proposed method is not related to the work of Vaillant et al. [13] that also uses currents and 2-forms: Apparently [13] solves a different problem not including a arbitrary non-convex data term. Moreover it involves optimizing a surfaces of codimension 1 while we tackle the minimal surface problem of codimension 2. It is exactly the codimension 2 which makes our problem challenging.

In this paper, we propose a convex energy for the correspondence problem in the framework of geometric measure theory to which we can find the globally optimal solution. Since this theory is not commonly used in computer vision, we will briefly review the most relevant concepts such as m-vectors, differential forms and currents in Sects. 3 and 4. For a more detailed presentation, we refer the reader to standard textbooks [14, 15].

1.3 Contribution

-

1.

In Sect. 2, we prove that the correspondence problem (1) is equivalent to a minimal surface problem in higher dimension. Subsequently, we express this minimal surface problem as an optimization problem over 2-vector fields.

-

2.

In Sect. 5, we propose a discretization of this minimal 2-vector field problem which gives rise to a convex optimization problem. The discrete problem can be optimized with standard convex optimization methods that converge to a globally optimal solution.

-

3.

In Sects. 3 and 4 we select and lay out the mathematical concepts necessary for the proposed method that are normally embedded into the complex theory of geometric measure theory. We also derive Lemmas 3 and 4. They do not exist in standard textbooks, but are crucial for the numerical implementation.

-

4.

In Sect. 6.2, we derive a solution to the mass norm’s proximity operator. This proximity operator is necessary for the numerical optimization of the discrete problem detailed in Sects. 6 and 7. Additionally we derive a discrete formulation of the boundary operator for 2-vector fields.

2 Optimal Correspondences and Minimal Surfaces

In this section, we transform the original correspondence problem into an equivalent optimization problem, where we minimize over 2-vector fields (introduced in Sects. 3 and 4) on the product space \(\varOmega \times \varOmega \). The transformation consists of two steps:

The first step is to turn the correspondence problem into a minimal surface problem. Instead of optimizing directly over sets of diffeomorphisms, we will optimize over subsets of the product space \(\varOmega \times \varOmega =\varOmega ^2\). In the space of diffeomorphisms \(f:\varOmega \rightarrow \varOmega \) are represented by their graphs \(\{(p,f(p))|p\in \varOmega \}\subset \varOmega ^2\), which are 2-dimensional surfaces embedded into \(\mathbb {R}^4\). Let the projections \(\pi _1, \pi _2:\varOmega ^2\rightarrow \varOmega \) be defined by \(\pi _1(p,q)=p, \pi _2(p,q)=q\) for any \((p,q)\in \varOmega ^2\) and denote by \(\pi _{1|S}\), \(\pi _{2|S}:S\rightarrow \varOmega \) the restrictions of \(\pi _1, \pi _2\) to some set \(S\subset \varOmega ^2\). Now we can reformulate (1) as the following minimal surface problem:

where we define \(w:\varOmega ^2\rightarrow \mathbb {R}\) by \(w(p) = g(\pi _1(p), \pi _2(p))+\epsilon \) for any \(p\in \varOmega ^2\). Note that W(df(p)) from (1) is now implicitely included in the surface integral \(\int _{S} dp\) of (2). Indeed both optimization problems are equivalent:

Proposition 1

Let \(f^*\) be a minimizer of (1), then it’s graph \(\{(p,f(p))|p\in \varOmega \}\) is a minimizer of (2). If \(S\subset \varOmega ^2\) is a minimizer of (2), then \(\pi _{1|S}^{-1}\circ \pi _{2|S}:\varOmega \rightarrow \varOmega \) is a minimizer of (1).

Proof

For any feasible \(f:\varOmega \rightarrow \varOmega \) of (1) let \(S(f)=\{(p,f(p))|p\in \varOmega \}\) be its graph. Then \(h:\varOmega \rightarrow \varOmega ^2, h(p) = (p,f(p))\) is a chart of S(f). Now expand the surface integral and w:

So for any feasible f of (1) there is a feasible S(f) of (2), such that the energy of f with respect to (1) is equalt to the energy of S(f) with respect to (2).

On the other hand, for any feasible \(S\subset \varOmega ^2\) of (2) the function \(f_S=\pi _{1|S}^{-1}\circ \pi _{2|S}:\varOmega \rightarrow \varOmega \) is a feasible solution to (1). Note that it holds  and

and

\(\square \)

The second step of our approach is to represent the surfaces \(S\subset \varOmega ^2\) by their tangent planes. From differential geometry we know that we can parameterize some 2-dimensional surface embedded into \(\varOmega ^2\) by a diffeomorphic map \(u: U\rightarrow \varOmega ^2\), where U is an open subset of \(\mathbb {R}^2\).Footnote 1 The directional derivatives \(u_x=\frac{\partial {u}}{\partial {x}}, u_y=\frac{\partial {u}}{\partial {y}}:U\rightarrow \mathbb {R}^4\) yield tangent vectors that span the tangent planes of S, i.e. \(u_x(u^{-1}(p)), u_y(u^{-1}(p))\in \mathbb {R}^4\) span the tangent space \(\mathcal {T}_pS\) of S at \(p\in S\). The weighted area of S can be evaluated by \(\mathcal {A}_w(S) = \int _U w(u(p))\mathcal {A}(u_x(p),u_y(p))dp\), where \(\mathcal {A}(u_x(p),u_y(p))\) is the area of the parallelogram spanned by \(u_x(p),u_y(p)\). Now define the vector fields \(t_x, t_y:\varOmega ^2\rightarrow \mathbb {R}^4\) by

Using the 2-dimensional Hausdorff measure \(\mathcal {H}^2\) (see Sect. 4) we can evaluate the weighted area of S by \(\mathcal {A}_w(S) = \int _{\varOmega ^2} w(p)\mathcal {A}(t_x(p),t_y(p))d\mathcal {H}^2p\). The important observation is that the integral directly depends neither on S nor on the chart u. The key idea is that the two vector fields \(t_x, t_y\) define the shape, area and boundary of S and we can rewrite the minimal surface problem (2) as an optimization over two vector fields on \(\varOmega ^2\):

Keep in mind, that this is only a loose definition of the optimization problem that we will actually solve. We will state a more refined definition in Sect. 5 that makes heavy use of the tools of geometric measure theory. Since these tools are not common in computer vision, we will introduce them in the following.

We start with the introduction m-vectors and alternating forms that are used to represent our two vector fields in Sect. 3. In Sect. 4 we will discuss differential forms and currents. We will glue those concepts together and propose a refined form of (6) in Sect. 5. The latter can be discretized into a convex minimization problem. This discretization will be presented in Sect. 6. Finally we will show how to optimize the convex optimization problem in Sect. 7.

3 m-Vectors and m-Covectors

As indicated in optimization problem (6) we want to optimize over two vector fields. At each point we need to measure the area of the parallelogram of both vectors, their boundary and orientation and make sure that both vectors are linearly independent. Especially the latter constraint will make this optimization problem hardly tractable, if we use two separate vector fields. Instead we will use more sophisticated concepts, namely simple m-vectors, m-vectors and m-covectors.Footnote 2

3.1 m-Vectors

Loosely speaking, simple m-vectors represent an oriented m-dimensional subspace of \(\mathbb {R}^n\) spanned by m linearly independent vectors plus some positive area. The area is defined by the parallelotope of these vectors. The set of m-vectors is the vector space obtained by the linear extension of the set of simple m-vectors. m-covectors are the elements of the dual space to the space of m-vectors. We will first give a formal definition of (simple) m-vectors and then discuss the relevant concepts.

Definition 1

(Simple m -Vectors and m -Vectors [15, p. 23]). Let \(m,n\in \mathbb {N}, m\le n\).

-

1.

Define an equivalence relation \(\sim \) on \((\mathbb {R}^n)^m\), such that for any \((v_1, \ldots , v_m)\in (\mathbb {R}^n)^m\) and any \(\alpha \in \mathbb {R}\) it is

$$\begin{aligned} (v_1, \ldots , \alpha v_i, \ldots , v_j, \ldots , v_m)&\sim (v_1, \ldots , v_i, \ldots , \alpha v_j, \ldots , v_m)\text {,}\end{aligned}$$(7)$$\begin{aligned} (v_1, \ldots , v_i, \ldots , v_j, \ldots , v_m)&\sim (v_1, \ldots , v_i+\alpha v_j, \ldots , v_j, \ldots , v_m)\text { and}\end{aligned}$$(8)$$\begin{aligned} (v_1, \ldots , v_i, \ldots , v_j, \ldots , v_m)&\sim (v_1, \ldots , v_j, \ldots , -v_i, \ldots , v_m)\text {.} \end{aligned}$$(9)Denote the equivalence class of any \((v_1, \ldots , v_m)\in (\mathbb {R}^n)^m\) with respect to \(\sim \) by \(v_1\wedge \ldots \wedge v_m\) and call it a simple m-vector.

-

2.

Consider the vector space of all linear combinations of simple m-vectors and define the equivalence relation \(\approx \) on this vector space by

$$\begin{aligned} \alpha (v_1\wedge v_2\wedge \ldots \wedge v_m)&\approx (\alpha v_1)\wedge v_2\wedge \ldots \wedge v_m~\text { and}\end{aligned}$$(10)$$\begin{aligned} (v_1\wedge v_2\wedge \ldots \wedge v_m)+(v'_1\wedge v_2\wedge \ldots \wedge v_m)&\approx (v_1+v'_1)\wedge v_2\wedge \ldots \wedge v_m \end{aligned}$$(11)for any \(v'_1, v_1, \ldots , v_m\in \mathbb {R}^n, \alpha \in \mathbb {R}\). Denote this vector space modulo \(\approx \) by \(\varLambda _m\mathbb {R}^n\) and call any element of \(\varLambda _m\mathbb {R}^n\) an m-vector. Additionally define \(\varLambda _0\mathbb {R}^n=\mathbb {R}\).

\(\cdot \wedge \cdot \) is called the wedge product. It will be generalized later in Definition 5. For the time being, let us take a closer look at simple m-vectors and make a first observation:

Observation

The m-vector \(\omega \in \varLambda _m\mathbb {R}^n\) is simple if and only if there exist \(v_1, \ldots , v_m\in \mathbb {R}^n\) such that \(\omega =v_1\wedge \ldots \wedge v_m\).

Let \(e_1, \ldots , e_n\in \mathbb {R}^n\) be the oriented orthonormal basis of \(\mathbb {R}^n\) pointing in the standard direction. We call this basis the standard basis of \(\mathbb {R}^n\). This basis induces an orthonormal basis \(\{e_{i_1}\wedge \ldots \wedge e_{i_m}\}_{i_1<\ldots <i_m}\subset \varLambda _m\mathbb {R}^n\) of \(\varLambda _m\mathbb {R}^n\). Let us call this basis the standard basis of \(\varLambda _m\mathbb {R}^n\). It directly follows that  . We can write any m-vector \(\omega \) as a weighted sum of basis m-vectors: \(\omega =\sum _{i_1<\ldots <i_m}\omega _{i_1\cdots i_m}e_{i_1\cdots i_m}\), where \(\{\omega _{i_1\cdots i_m}\in \mathbb {R}\}_{i_1<\ldots <i_m}\) are the coefficients or coordinates (with respect to the standard basis).

. We can write any m-vector \(\omega \) as a weighted sum of basis m-vectors: \(\omega =\sum _{i_1<\ldots <i_m}\omega _{i_1\cdots i_m}e_{i_1\cdots i_m}\), where \(\{\omega _{i_1\cdots i_m}\in \mathbb {R}\}_{i_1<\ldots <i_m}\) are the coefficients or coordinates (with respect to the standard basis).

Notation

For some indexed set of vectors \(\{v_i\}_i\) we abbreviate the wedge product by \(v_{i_1\cdots i_m}=v_{i_1}\wedge \ldots \wedge v_{i_m}\). For example \(e_{132}=e_1\wedge e_3\wedge e_2\). Additionally we will abbreviate the decomposition of some m-vector \(\omega \) into basis vectors by \(\omega =\sum _\sigma \omega _\sigma e_\sigma \).

Definition 2

For any two vectors \(\omega =\sum _\sigma \omega _\sigma e_\sigma , \xi =\sum _\sigma \xi _\sigma e_\sigma \in \varLambda _m\mathbb {R}^n\) we define the standard inner product \(\langle {\cdot , \cdot } \rangle :\varLambda _m\mathbb {R}^n\times \varLambda _m\mathbb {R}^n\rightarrow \mathbb {R}\) of \(\varLambda _m\mathbb {R}^n\) by \(\langle {\omega , \xi } \rangle = \sum _\sigma \omega _\sigma \xi _\sigma \) and define the Euclidean norm \(\Vert {\cdot } \Vert _{2}:\varLambda _m\mathbb {R}^n\rightarrow \mathbb {R}\) on \(\varLambda _m\mathbb {R}^n\) by \(\Vert {\omega } \Vert _{2}=\sqrt{\langle {\omega ,\omega } \rangle }\) for any \(\omega \in \varLambda _m\mathbb {R}^n\).

Observation

Whenever the m-vector \(\omega \) is simple, then for every decomposition \(\omega =v_1\wedge \ldots \wedge v_m, v_1, \ldots , v_m\in \mathbb {R}^n,\) it holds that the m-dimensional volume of the parallelotope spanned by \(v_1, \ldots , v_m\) is exactly \(\Vert {\omega } \Vert _{2}\). It directly follows the important property that \(\omega =0\) if and only if \(v_1, \ldots , v_m\) are not linearly independent.

If the simple m-vector \(\omega =v_1\wedge \ldots \wedge v_m\ne 0\), then \(v_1, \ldots , v_m\) is an oriented basis of the m-dimensional subspace \(V\subset \mathbb {R}^n\). For any orthonormal basis \(w_1, \ldots , w_m\) of V with the same orientation it holds:

In other words, there is a one-to-one correspondence between simple m-vectors and oriented m-dimensional subspaces (also called oriented m-planes through 0) of \(\mathbb {R}^n\) with an associated positive volume. Furthermore we can set all simple m-vectors of unit-length and all oriented m-dimensional subspaces of \(\mathbb {R}^n\) into a one-to-one correspondence. These concepts are illustrated in Fig. 1.

Examples of simple 2-vectors in \(\mathbb {R}^3\): From Definition 1 it follows that the 2-vectors \(v_1\wedge v_2=2v_1\wedge {\frac{1}{2}}v_2=-v_1\wedge -v_2\in \varLambda _2\mathbb {R}^3\) are identical. This is in line with the geometric interpretation of the corresponding vector pairs \((v_1, v_2), (2v_1, {\frac{1}{2}}v_2), (-v_1, -v_2)\): All three span the same 2-dimensional subspace of \(\mathbb {R}^2\), induce the same orientation on this subspace and their parallelogram has the same area. On the other hand \(v_1\wedge v_2\ne v_2\wedge v_1=-(v_1\wedge v_2)\), since the orientations of the corresponding subspaces are inverted.

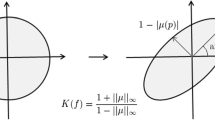

3.2 The Mass Norm

What is the difference between m-vectors and simple m-vectors? Indeed up to \(\mathbb {R}^3\) all m-vectors are simple. The most basic example of a non-simple m-vector is \(e_{12}+e_{34}\in \varLambda _2\mathbb {R}^4\) (i.e. \(=(e_1\wedge e_2)+(e_3\wedge e_4)\)). This 2-vector cannot be decomposend into a single wedge product (\(v_1\wedge v_2\)) between two vectors in \(\mathbb {R}^4\). Interestingly the two subspaces spanned by \(e_{12}\) and \(e_{34}\) intersect only at the origin and therefore \(e_{12}+e_{34}\) does not represent a “simple subspace”. Now the important observation is that while the areas of the two parallelograms spanned by \(e_{12}\) and \(e_{34}\) add up to 2 the Euclidean norm \(\Vert {e_{12}+e_{34}} \Vert _{2}=\sqrt{2}\) is strictly smaller. This is a problem because an optimization of 2-vectors in \(\mathbb {R}^4\) with respect to the Euclidean norm would naturally prefer non-simple 2-vectors while the minimal surfaces we are looking for are represented by simple 2-vectors. The solution is the so-called mass norm. Its definition is based on duality, so we will introduce it along the definition of m-covectors. Recall that the dual of some real vector space V is the set of all real linear functions \(f:V\rightarrow \mathbb {R}\) on that space.

Definition 3

( m -Covectors). The dual space \((\varLambda _m\mathbb {R}^n)^*\) of \(\varLambda _m\mathbb {R}^n\) is called the space of m-covectors and is denoted by \(\varLambda ^m\mathbb {R}^n\). We write \(\langle {\phi , \omega } \rangle =\phi (\omega )\in \mathbb {R}\) for any \(\omega \in \varLambda _m\mathbb {R}^n,\phi \in \varLambda ^m\mathbb {R}^n\).

Observation

\(\varLambda ^m\mathbb {R}^n=(\varLambda _m\mathbb {R}^n)^*=\varLambda _m(\mathbb {R}^{n*})\) holds, such that the dual basis \(e_1^*, \ldots , e_n^*\in \mathbb {R}^{n*}\) induces the dual inner product \(\langle {\cdot , \cdot } \rangle \) and the dual Euclidean norm \(\Vert {\cdot } \Vert _{2*}\) on \(\varLambda ^m\mathbb {R}^n\).

Definition 4

(Mass Norm and Comass Norm). The comass norm denoted by \(\Vert {\cdot } \Vert _*\) is the norm on \(\varLambda ^m\mathbb {R}^n\) defined for any \(\phi \in \varLambda ^m\mathbb {R}^n\) by

The mass norm denoted by \(\Vert {\cdot } \Vert \) is the norm on \(\varLambda ^m\mathbb {R}^n\) defined for any \(\omega \in \varLambda _m\mathbb {R}^n\) by

Lemma 1

For any \(\omega \in \varLambda _m\mathbb {R}^n\) it holds

Continuing the discussion from above, Lemma 1 gives us the intuition why the mass norm is the right choice: If some m-vector is not simple, its mass norm will be strictly larger than its Euclidean norm and from the decomposition identity of Eq. (15) we can deduce that the mass norm \(\Vert {e_{12}+e_{34}} \Vert =2\) has the “correct” value.

3.3 Grassmann Algebra

Recall that the gradient of a scalar field is a vector field and the divergence of a vector field is a scalar field. In the next section we will apply integration and differentiation to m-vector fields, which will require us to change the “order” of the m-vector field in a similar fashion. Therefor we will now introduce the Grassmann algebra, the wedge product and the interior product.

Definition 5

(Grassmann Algebra). The Grassmann algebra (or exterior algebra) of \(\mathbb {R}^n\), denoted by the \(\varLambda _*\mathbb {R}^n\), is the union of \(\varLambda _m\mathbb {R}^n\), i.e. \(\varLambda _*\mathbb {R}^n=\bigcup _m \varLambda _m\mathbb {R}^n\), together with the exterior multiplication (or wedge product) \(\wedge :\varLambda _*\mathbb {R}^n\times \varLambda _*\mathbb {R}^n\rightarrow \varLambda _*\mathbb {R}^n\). The exterior product of two simple vectors \((v_1\wedge \ldots \wedge v_m)\in \varLambda _m\mathbb {R}^n\) and \((u_1\wedge \ldots \wedge u_k)\in \varLambda _k\mathbb {R}^n\) is defined by

and is linearly extended to all m-vectors in \(\varLambda _*\mathbb {R}^n\). Define the Grassmann algebra \(\varLambda ^*\mathbb {R}^n\) with respect to covectors analoguously.

Definition 6

(Interior Product). Let \(\phi \in \varLambda ^m\mathbb {R}^n\) and \(\omega \in \varLambda _p\mathbb {R}^n\), if \(p\le m\), the interior product \(\omega \rfloor \phi \in \varLambda ^{m-p}\mathbb {R}^n\) is an alternating \(m-p\) form defined by \(\langle {\omega \rfloor \phi , \xi } \rangle = \langle {\phi , \xi \wedge \omega } \rangle \) for any \(\xi \in \varLambda _{m-p}\mathbb {R}^n\). If \(p\ge m\), the interior product \(\omega \lfloor \phi \in \varLambda _{p-m}\mathbb {R}^n\) is an \(p-m\) vector defined by \(\langle {\theta , \omega \lfloor \phi } \rangle = \langle {\phi \wedge \theta , \omega } \rangle \) for any \(\theta \in \varLambda ^{p-m}\mathbb {R}^n\).

4 Differential Forms and Currents

Denote the tangent space of \(\mathbb {R}^n\) at point \(p\in \mathbb {R}^n\) by \(\mathcal {T}_{p}\mathbb {R}^n\) and its dual space by \(\mathcal {T}_{p}\mathbb {R}^{n*}\). We can construct Grassman algebras from both spaces because they can be identified with \(\mathbb {R}^n\). Denote the standard basis of \(\mathcal {T}_{p}\mathbb {R}^n\) by \(\partial _1, \ldots , \partial _n\in \mathcal {T}_{p}\mathbb {R}^n\) and the standard basis of \(\mathcal {T}_{p}\mathbb {R}^{n*}\) by \(dx_1, \ldots , dx_n\in \mathcal {T}_{p}\mathbb {R}^{n*}\).

4.1 Differential Forms

Definition 7

( m -Vector Fields and Differential m -Forms). An m -vector field on \(\mathbb {R}^n\) is a function \(\omega :\mathbb {R}^n\rightarrow \varLambda _m\mathcal {T}_{p}\mathbb {R}^n\). Any m-vector field \(\omega \) on \(\mathbb {R}^n\) can be uniquely decomposed into \(\omega (p)=\sum _\sigma \omega _\sigma (p) \partial _\sigma \), where \(\omega _\sigma :\mathbb {R}^n\rightarrow \mathbb {R}\) are \(\omega \)’s coefficient functions.

A differential m-form \(\phi \) on \(\mathbb {R}^n\) is a field of m-covectors of \(\mathcal {T}_{p}\mathbb {R}^n\), i.e. \(\phi :\mathbb {R}^n\rightarrow \varLambda ^m\mathcal {T}_{p}\mathbb {R}^n\), such that each coefficient function \(\phi _\sigma :\mathbb {R}^n\rightarrow \mathbb {R}\) of \(\phi (p)=\sum _\sigma \phi _\sigma (p) dx_\sigma \) is differentiable. For simplicity we will call differential m-forms just m -forms. We denote the set of all m-forms on \(\mathbb {R}^n\) by \(\mathcal {D}^m\mathbb {R}^n = \{\phi :\mathbb {R}^n\rightarrow \varLambda ^m\mathcal {T}_{p}\mathbb {R}^n\}\).

The support of \(\phi \) is the closure of \(\{p\in \mathcal {M}|\phi (p)\ne 0\}\) and is denoted by \({{\mathrm{spt}}}\phi \).

Definition 8

(Exterior Derivative). Let \(\phi (p)=\sum _\sigma \phi _\sigma (p) dx_\sigma \in \mathcal {D}^m\mathbb {R}^n\). The exterior derivative \(d\phi \in \mathcal {D}^{m+1}\mathbb {R}^n\) of \(\phi \) is defined by

Lemma 2

([15] Lemma 6.1.8). Let \(\phi \in \mathcal {D}^m\mathbb {R}^n, \theta \in \mathcal {D}^p\mathbb {R}^n\), it holds \(d(\phi \wedge \theta )=(d\phi )\wedge \theta +(-1)^m\phi \wedge (d\theta )\).

4.2 Currents

Definition 9

(Currents). We denote the dual space of \(\mathcal {D}^m\mathbb {R}^n\) by \(\mathcal {D}_m\mathbb {R}^n=(\mathcal {D}^m\mathbb {R}^n)^*\). The elements of \(\mathcal {D}_m\mathbb {R}^n\) are called m -currents.

We will mostly use currents that are constructed from measures and m-vector fields. We start by defining 0-currents induced by measures:

Definition 10

(Measure Induced Currents). Let \(\mu \) be a measure on \(\mathbb {R}^n\), we define the associated current \(\mu \in \mathcal {D}_0\mathbb {R}^n\) induced by measure \(\mu \) by

for any \(\phi \in \mathcal {D}^0\mathbb {R}^n\). (The meaning of \(\mu \) will be directly clear from the context.)

The only measure we will use is the Hausdorff measure (see [16, p. 9]):

Definition 11

(Hausdorff Measure). The m -dimensional Hausdorff measure \(\mathcal {H}^m\) of some set \(A\subset \mathbb {R}^n\) is defined by

where \(S_j\) are n-dimensional balls and \(\alpha _m\) is the volume of the m-dimensional unit ball.

Define the Hausdorff measure restricted to some \(B\subset \mathbb {R}^n\) by \(\mathcal {H}^m_B(\cdot )=\mathcal {H}^m(\cdot \cap B)\).

We combine currents with vector fields and differential forms by:

Definition 12

Let \(T\in \mathcal {D}_k\mathbb {R}^n\), \(\xi \in \mathcal {D}^l\mathbb {R}^n\) and \(\omega \) an l-vector field on \(\mathbb {R}^n\), we define \((T\lfloor \xi )\in \mathcal {D}_{k-l}\mathbb {R}^n\) and \((T\wedge \omega )\in \mathcal {D}_{k+l}\mathbb {R}^n\) by

It directly follows from Definition 12 that we can combine some measure \(\mu \) and an m-vector \(\omega \) on \(\mathbb {R}^n\) to an m-current \(\mu \wedge \omega \in \mathcal {D}_m\mathbb {R}^n\) that evaluates on any m-form \(\phi \) to

Definition 13

(Partial Derivative). Let \(T\in \mathcal {D}_k\mathbb {R}^n\), its partial derivative \(D_{x_i}T\in \mathcal {D}_k\mathbb {R}^n\) is again a k-current defined, sucht that for any \(\phi \in \mathcal {D}^{k}\mathbb {R}^n\) it holds: \((D_{x_i}T)(\phi ) = -T(\frac{\partial {\phi }}{\partial {x_i}})\text {.}\)

Definition 14

(Boundary). The boundary \(\partial T\) of some k-current \(T\in \mathcal {D}_k\mathbb {R}^n\) is again a current in \(\mathcal {D}_{k-1}\mathbb {R}^n\) of dimension \(k-1\) defined, such that for any \(\phi \in \mathcal {D}^{k-1}\mathbb {R}^n\) it holds \(\partial T(\phi ) = T(d\phi )\text {.}\)

Definition 15

(Mass). The mass \(\mathbf {M}(T)\in \mathbb {R}_{\ge 0}\) of some \(T\in \mathcal {D}_m\mathbb {R}^n\) is defined by \(\mathbf {M}(T) = \sup \{T(\phi )| \phi \in \mathcal {D}^m\mathbb {R}^n, \sup _{p\in \mathbb {R}^n} \Vert {\phi (p)} \Vert ^*\le 1\}\text {.}\)

Observation

If S is an (oriented) m-dimensional surface and \(\omega \) is an m-vector field induced by the tangent planes of S, we can associate with S a current \(T_S=\mathcal {H}^m\wedge \omega \). The important insight from geometric measure theory is that the notion of mass and boundary of a current coincides exactly with the area and boundary definition from differential geometry. I.e. \(\mathbf {M}(T_S)=\mathcal {A}(S)\), \(\partial T_S = T_{\partial S}\) and \(\mathbf {M}(\partial T_S)=\mathcal {A}(\partial S)\).

In the next section, we will cast our optimization problem as an optimization over the set of currents induced by 2-vectors and we need to numerically compute their boundary. We will now introduce a general notion of divergence that will eventually lead to the crucial Lemma 4, which enables us to discretize the boundary operator as a matrix-vector multiplication.

Definition 16

(Divergence). The divergence \({{\mathrm{div}}}\omega \) of some differentiable m-vector field \(\omega \) on \(\mathbb {R}^n\) is an \(m-1\) vector field defined by \({{\mathrm{div}}}\omega = \sum _{i=1}^n \frac{\partial {\omega }}{\partial {x_i}}\lfloor dx_i\text {.}\)

Indeed this is a generalization of the known divergence of (differentiable) 1-vector fields \(\omega =\sum _j\omega _j \partial _j\) since \({{\mathrm{div}}}\omega =\sum _{i,j}\frac{\partial {\omega _j}}{\partial {x_i}} \partial _j\lfloor dx_i =\sum _{i}\frac{\partial {\omega _i}}{\partial {x_i}}\).

Lemma 3

Let \(\omega \) be a differentiable m-vector field on \(\mathbb {R}^n\) and let \(T\in \mathcal {D}_0\mathbb {R}^n\), then \(\partial (T\wedge \omega )=-T\wedge {{\mathrm{div}}}\omega -\sum _i(D_{x_i} T)\wedge (\omega \lfloor dx_i)\text {.}\)

Lemma 4

Let \(U\subset \mathbb {R}^n\) be open, \(\mathbf {n}_{\partial U}\) the 1-form associated to the outward-pointing unit vector orthogonal to \(\partial U\) and \(\omega \) an differentiable m-vector field, it holds that \(\partial (\mathcal {H}_U^n\wedge \omega ) = -\mathcal {H}_U^n\wedge {{\mathrm{div}}}\omega +(-1)^{m}\mathcal {H}^{n-1}_{\partial U}\wedge (\omega \lfloor \mathbf {n}_{\partial U})\text {.}\)

5 Minimal 2-Vector Problem

Using the tools introduced in previous sections, we will now propose a formulation of the minimal surface problem (2) that is based on 2-vector fields of minimal mass. Let \(S\subset \varOmega ^2\) be a surface satisfiying the constraints of (2). Obviously \(\pi _{1|S}^{-1}:\varOmega \rightarrow \varOmega ^2\) is a chart of S. Define the two vector fields \(t^S_x, t^S_y:\varOmega ^2\rightarrow \mathcal {T}_p\mathbb {R}^4\) by

The wedge product of \(t^S_x, t^S_y\) is a 2-vector field we will denote by \(\omega _S=t^S_x\wedge t^S_y:\varOmega ^2\rightarrow \varLambda _2\mathcal {T}_p\mathbb {R}^4\). Now the important observation is that we can write the area of S weighted by the data term w as the mass of the current \(\mathcal {H}_{S}^2\wedge w\wedge \frac{\omega _S}{\Vert {\omega _S} \Vert }\in \mathcal {D}_2\mathbb {R}^4\):

This allows us to modify (2) into

While we now have an objective based on the integration of some 2-vector field we still have several problems: we still optimze over \(S\subset \varOmega ^2\), the measure \(\mathcal {H}_{S}^2\) depends on S and \(\omega _S\) jumps when entering S from \(\varOmega ^2\setminus S\). We will overcome these problems by the observation that we can approximate any \(\omega _S\) by a differentiable 2-vector field \(\omega :\varOmega ^2\rightarrow \varLambda _2\mathcal {T}_p\mathbb {R}^4\) (i.e. a 2-vector field whose coefficient functions are differentiable). We are thus able to state our final optimization problem:

The constraint on the divergence of \(\omega \) directly comes from Lemma 4. Although the infimum is not attained in the continuous setting, this formulation has several key benefits. If we discretize Problem (25) as described in Sect. 6, it has the following properties:

-

1.

The search space of differentiable 2-vector fields is convex.

-

2.

The objective is convex.

-

3.

The divergence \({{\mathrm{div}}}\) is a linear operator leading to a convex constraint.

-

4.

The projections \(\pi _1, \pi _2\) are linear operators leading to convex constraints.

In summary, the discrete version of (25) is a convex optimization problem, which can be optimized with standard methods that converge to a globally optimal solution. On the downside we cannot prove that the optimal 2-vector field of (25) is simple. While our intuition and experiments suggest that this holds, it is still an open question and a formal proof would be a major challenge and is well beyond the scope of this paper.

6 Discretization

We would like to discretize all normal 2-currents in \(\mathbb {R}^n\) that have the form \(\mathcal {H}^n\wedge \omega \), where \(\omega \) is a differentiable 2-vector field with support on the open set \(P\subset \mathbb {R}^n\). We will do this by sampling \(\omega \) on the integer grid.

Let \(w_1, \ldots , w_n\in \mathbb {N}\), define \(P=\{1, \ldots , w_1\}\times \cdots \times \{1, \ldots , w_n\}\subset \mathbb {N}^n\subset \mathbb {R}^n\), then a 2-vector field sampled at points P is a function \(\omega :P\rightarrow \varLambda _2\mathcal {T}_{p}\mathbb {R}^n\) and we have a discrete representation of any such \(\omega \) via the coefficient functions \(\alpha _{ij}:P\rightarrow \mathbb {R}, i<j,\), such that \(\omega (p)=\sum _{i<j}\alpha _{ij}(p)\partial _{ij}\), for any \(p\in P\). For the relevant case of \(n=4\), the space of all 2-vectors is represented by the six scalar functions \(\alpha _{12}, \alpha _{13}, \alpha _{14}, \alpha _{23}, \alpha _{24}, \alpha _{34}: P\rightarrow \mathbb {R}\) or just by \(\alpha :P\rightarrow \mathbb {R}^6\).

6.1 Divergence

We define the partial derivatives of \(\omega \) using finite differences by \(\frac{\partial {\omega }}{\partial {x_i}}=\omega (p+\partial _i)-\omega (p)\). Now fix some point \(p_0\in P\), denote \(\alpha ^0=\alpha (p_0)\) and \(\alpha ^k=\alpha (p_0+\partial _k)\) and let \(\delta ^k=\alpha ^k-\alpha ^0\). It is \(\frac{\partial {\omega (p_0)}}{\partial {x_k}}=\sum _{i<j}\delta ^k_{ij}\partial _{ij}\). Expanding Definition 16 and some calculations yield

For \(n=4\), the discrete divergence operator expands to

6.2 Mass Norm

The primal-dual algorithm for convex optimization used in Sect. 7 solves in each step a minimization problem that depends on the current point in the search space. This minimization problem is described by the so-called proximity operator \({{\mathrm{prox}}}_f:V\rightarrow V\) defined by

where \(f:V\rightarrow \mathbb {R}\cup \{\infty \}\) is a convex function on the normed vector space V. In this section we will derive a solution of the proximity operator \({{\mathrm{prox}}}_{\frac{c}{L}\Vert {\cdot } \Vert }: \varLambda _2\mathcal {T}_p\mathbb {R}^4\rightarrow \varLambda _2\mathcal {T}_p\mathbb {R}^4\), where \(c,L\in \mathbb {R}_{>0}\) are positive constants. Each 2-vector \(\phi =\sum _{i<j}\phi _{ij}\partial _{ij}\in \varLambda _2\mathbb {R}^4\) can be represented by a skew-symmetric matrix \(S(\phi )\in \mathbb {R}^{4\times 4}\), defined as

Clearly \(\Vert {\phi } \Vert _{2}={\frac{1}{\sqrt{2}}}\Vert {S(\phi )} \Vert _{\mathrm {F}}\), where \(\Vert {\cdot } \Vert _{\mathrm {F}}\) is the Frobenius norm.

Let \(U(\phi ),T(\phi )\in \mathbb {R}^{4\times 4}\) be two matrices where \(U(\phi )\) is orthogonal and \(T(\phi )\) has the form

such that \(S(\phi ) = U(\phi )T(\phi )U(\phi )^\top \). Such a decomposition of \(S(\phi )\) is called a (real) Schur decomposition. From linear algebra we know, that such a decomposition always exists and \(T_{12}i, -T_{12}i, T_{34}i, -T_{34}i\) are the purely imaginary eigenvalues of \(S(\phi )\).

Interestingly, \(\Vert {\phi } \Vert ={\frac{1}{2}}\Vert {T(\phi )} \Vert _{\mathrm {1}}\). This allows us to express the proximity operator as

The objective in the last line is identical to the well known \(\Vert {\cdot } \Vert _{\mathrm {1}}\)-prox operator thus

7 Optimization

Let \(c\in \mathbb {R}^n, b\in \mathbb {R}^m\) and \(A\in \mathbb {R}^{m\times 6n}\) where \(m,n\in \mathbb {N}\). After discretization we can find the optimum of (25) by solving an optimization problem of the following form:

where \(X_i\in \mathbb {R}^6\) is the i-th column of matrix \(X\in \mathbb {R}^{6\times n}\) that is the reshaped vector x. These \(X_i\) represent the 2-vector at point p by its six coefficients. A, b represent the discrete divergence and projection operators and constraints. The system can be transformed into the primal-dual framework by defining the functions \(F:\mathbb {R}^m\rightarrow \overline{\mathbb {R}}, G:\mathbb {R}^N\rightarrow \mathbb {R}\) by

Clearly the primal problem

has the same optimimum and optimizer as Problem (33), but is now a convex unconstrained problem, that can be directly solved by the primal-dual algorithm [17–19]. It is also possible to use ADMM for the optimization.

Examples of optimal solutions computed by the proposed method: we computed correspondences between two pais of images. In the first row we see that the energy function prefers isometric transformations. The rotation between the two images is correctly recovered. In the second row we add a quite large translation. In both cases we see that the proposed method generates solutions that are dense and continuous in both directions.

8 Experiments

In this Section we will present typical correspondence maps that we computed with the discretization of our proposed optimization problem. Since the minimal surface is represented by a discrete 2-vector field on the discrete product space \(\varOmega ^2\) corresponding points might not be unique. We will take the center of gravity to construct the map \(f:\varOmega \rightarrow \varOmega \): Let \(x:\varOmega ^2\rightarrow \varLambda _2\mathcal {T}_p\mathbb {R}^4\) be the optimal 2-vector field, \(p\in \varOmega \), then define f by \(f(p) = \sum _{q\in \varOmega } \Vert {x(p,q)} \Vert q\). We define the map in the other direction analoguously.

In Fig. 2 we depict two examples of optimal correspondences computed by the proposed approach. We used the distance in color space for the data term. Boundary conditions ensure that the boundary of one image is mapped to the boundary of the other image. The correspondence map f is visualized by the transformation vectors \(t:\varOmega \rightarrow \mathbb {R}^2\), such that \(f={{\mathrm{Id}}}+t\). These vectors are then color coded using the HSV color space.

9 Conclusion

We proposed a method for computing regularized dense correspondences between two images. The key idea is to rephrase the correspondence estimation in the framework of geometric measure theory. To this end, we first introduce a fairly general correspondence cost function on the space of diffeomorphisms which combines a generally non-convex data term with a smoothness regularizer that favors isometries. Secondly, we turn this problem into a minimal surface problem in higher dimension which can be written as an optimization over 2-vector fields. The latter problem can be discretized as a convex optimization problem for which we can efficiently compute an optimal solution using (for example) a primal-dual algorithm.

References

Veksler, O.: Stereo matching by compact windows via minimum ratio cycle. IEEE Int. Conf. Comput. Vis. (ICCV). 1, 540–547 (2001)

Ishikawa, H.: Exact optimization for Markov random fields with convex priors. IEEE Trans. Pattern Anal. Mach. Intell. 25(10), 1333–1336 (2003)

Horn, B.K., Schunck, B.G.: Determining optical flow. In: 1981 Technical Symposium East, International Society for Optics and Photonics, pp. 319–331 (1981)

Bronstein, A.M., Bronstein, M.M., Kimmel, R.: Generalized multidimensional scaling: a framework for isometry-invariant partial surface matching. Proc. Nat. Acad. Sci. 103(5), 1168–1172 (2006)

Modersitzki, J.: Numerical Methods for Image Registration. Oxford University Press, Oxford (2003)

Ashburner, J.: A fast diffeomorphic image registration algorithm. Neuroimage 38(1), 95–113 (2007)

Vercauteren, T., Pennec, X., Perchant, A., Ayache, N.: Diffeomorphic demons: efficient non-parametric image registration. NeuroImage 45(1), S61–S72 (2009)

Alvarez, L., Weickert, J., Sánchez, J.: Reliable estimation of dense optical flow fields with large displacements. Int. J. Comput. Vision 39(1), 41–56 (2000)

Memin, E., Perez, P.: Hierarchical estimation and segmentation of dense motion fields. Int. J. Comput. Vision 46(2), 129–155 (2002)

Brox, T., Bruhn, A., Papenberg, N., Weickert, J.: High accuracy optical flow estimation based on a theory for warping. In: Pajdla, T., Matas, J.G. (eds.) ECCV 2004. LNCS, vol. 3024, pp. 25–36. Springer, Heidelberg (2004)

Pock, T., Cremers, D., Bischof, H., Chambolle, A.: Global solutions of variational models with convex regularization. SIAM J. Imaging Sci. 3(4), 1122–1145 (2010)

Lellmann, J., Strekalovskiy, E., Koetter, S., Cremers, D.: Total variation regularization for functions with values in a manifold. In: IEEE International Conference on Computer Vision (ICCV), Sydney, Australia, December 2013

Vaillant, M., Glaunès, J.: Surface matching via currents. In: Christensen, G.E., Sonka, M. (eds.) IPMI 2005. LNCS, vol. 3565, pp. 381–392. Springer, Heidelberg (2005)

Federer, H.: Geometric Measure Theory. Springer, Heidelberg (1969)

Krantz, S.G., Parks, H.R.: Geometric Integration Theory. Springer, Heidelberg (2008)

Morgan, F.: Geometric Measure Theory: A Beginner’s Guide. Academic Press, Cambridge (2000)

Pock, T., Cremers, D., Bischof, H., Chambolle, A.: An algorithm for minimizing the piecewise smooth Mumford-Shah functional. In: IEEE International Conference on Computer Vision (ICCV), Kyoto, Japan (2009)

Chambolle, A., Pock, T.: A first-order primal-dual algorithm for convex problems with applications to imaging. J. Math. Imaging Vis. 40(1), 120–145 (2011)

Pock, T., Chambolle, A.: Diagonal preconditioning for first order primal-dual algorithms in convex optimization. In: 2011 IEEE International Conference on Computer Vision (ICCV), pp. 1762–1769. IEEE (2011)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2016 Springer International Publishing AG

About this paper

Cite this paper

Windheuser, T., Cremers, D. (2016). A Convex Solution to Spatially-Regularized Correspondence Problems. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds) Computer Vision – ECCV 2016. ECCV 2016. Lecture Notes in Computer Science(), vol 9906. Springer, Cham. https://doi.org/10.1007/978-3-319-46475-6_52

Download citation

DOI: https://doi.org/10.1007/978-3-319-46475-6_52

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-46474-9

Online ISBN: 978-3-319-46475-6

eBook Packages: Computer ScienceComputer Science (R0)