Abstract

3D shape reconstruction with multi-view stereo (MVS) relies on a robust evaluation of photo consistencies across images. The robustness is ensured by isolating surface albedo and scene illumination from the shape recovery, i.e. shading and colour variation are regarded as a nuisance in MVS. This yields a gap in the qualities between the recovered shape and the images used. We present a method to address it by jointly estimating detailed shape, illumination and albedo using the initial shape robustly recovered by MVS. This is achieved by solving the multi-view inverse rendering problem using the geometric and photometric smoothness terms and the normalized spherical harmonics illumination model. Our method allows spatially-varying albedo and per image illumination without any prerequisites such as training data or image segmentation. We demonstrate that our method can clearly improve the 3D shape and recover illumination and albedo on real world scenes.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

3D reconstruction from multiple images is becoming a basic tool in various applications, e.g. CAD model creation [1] and 3D mapping [2] thanks to softwares based on structure-from-motion (SfM) [3–5] followed by multi-view stereo (MVS) [6–8]. 3D reconstruction typically begins with SfM from imagery. MVS is then used to reconstruct surface while finding more correspondences via pixel/feature matching. Since this is a non-trivial task as real images are taken under a variety of conditions, photo consistencies among images are robustly computed [9, 10] in order to suppress the appearance changes among images. Such matching schemes work well for recovering distinctive objects but often fail with weakly textured objects.

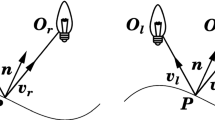

In contrast to MVS, Shape from Shading (SfS) [11] recovers surface normals from a single image by solving the inverse problem of image rendering formed as a function of surface normal, albedo and shading. Since shading directly represents (high frequency) shape information under the smooth illumination, SfS can extract fine and detailed surface normal from shading variation. Although SfS requires surface albedo and/or shading information, recent approach solves this by using additional prerequisite [12] or multiple images captured under different illuminations, i.e. Photometric Stereo (PS) [13, 14].

For these reasons, MVS and SfS are complementary to each other and several methods that fuse MVS and SfS have been proposed [15–19]. The main idea is to use MVS (or RGBD images) as an initial shape (depth and normal) and refine it by applying SfS techniques. Table 1 summarizes the relationships of the closely related methods based on multiple-view images. Previous methods can estimate albedo and illumination under limited conditions such as uniform albedo [20], the existence of known illumination images [21] and constant illumination among images [22].

Contribution. In this paper, we propose a method to reconstruct detailed shape only from images via multi-view inverse rendering (MVIR). To achieve this, we design cost function based on rendering term and regularization terms on shape and albedo (Sect. 3). The cost function consists of the rendering term that can handle temporally varying illumination, the geometric smoothness term that refines shape while preserving sharp edges and the photometric smoothness term (Sect. 3.2) that constrains albedo among multiple images. The proposed method is able to jointly estimate shape, albedo and illumination without any prerequisites and can be applied to images which capture complex objects under different illumination conditions (such as day/night images).

2 Related Work

In this section, we describe fundamental works on MVS, photometric methods (SfS and PS) and their combinations.

Multi-view Stereo. MVS in principle recovers depth by robustly measuring photo consistencies among images, e.g. SSD, NCC [9] and descriptor distances [10]. The typical MVS approach recovers depth for reference images [23, 24] by optimizing the cost function that consists of a data term (photo consistencies) and smoothness (regularization) terms [25, 26]. The scene is recovered by merging depth maps and determining surfaces that impact the final quality of surface [27–30].

Other popular approaches directly reconstruct points or patches in 3D space [7, 31] by locally evaluating photo consistencies and conservatively expanding the reconstruction. This approach is robust for recovering complex objects as it suffers less from depth discontinuity or occlusions but will not work on weakly-textured objects. Jancosek and Pajdla [8] improves on this by introducing a modified graph-cut based surface reconstruction. Bao et al. [32] on the other hand solves this by fusing pre-trained semantic shape information. All-in-one MVS softwares [7, 8, 33] were, indeed, a breakthrough as they can provide 3D models with impressive quality when many photographs are taken in good conditions, e.g. using many images taken by DSLR cameras under a consistent lighting [9, 34].

Although up-to-date MVS methods are well-balanced in accuracy, robustness and computational costs, the surface quality (accuracy and completeness) can be further improved by taking into account illumination and albedo encoded in the images.

Photometric Methods. Horn [11] introduced Shape from Shading that estimates the surface normal by decomposing shading information encoded in the image. This is achieved by solving the inverse rendering function formulated in a simplified form. Since SfS is an ill-posed problem, early SfS algorithms assume uniform albedo and known illumination [35, 36]. Recently proposed methods loosen these conditions by extending the rendering model [37] and relaxing the albedo restriction under general illumination [38].

Using multiple images taken under different lighting conditions can also relax the conditions in SfS: Photometric Stereo (PS). Woodham [14] demonstrated that surface normal can be recovered from images captured at a fixed viewpoint under varying calibrated illuminations. Basri and Jacobs [39] extended this to more natural (uncalibrated) illumination by representing the illumination as low order spherical harmonics. Hernandez et al. [40] further relaxed the fixed view point condition by using visual hull information. This method used surface normals on object silhouettes to estimate direction of illumination for multi-view images.

Combination. Photometric methods in general prefer less-textured surface captured under different illuminations whereas MVS requires well-textured surfaces. Combining these two complementary approaches can produce higher quality 3D shape.

Wu et al. [20] presented a method to refine the initial shape obtained from MVS while taking care of ambient occlusion artifacts. They achieved this by modelling the inverse image rendering function using low order spherical harmonics. As summarized in Table 1, this method requires uniform surface albedo and constant (global) illumination among images in order to separate shading and albedo.

Shan et al. [21] showed that cloudy images can be a clue to decompose albedo and shading from images, i.e. using multiple cloudy images captured under ambient illumination as the source of albedo. They used an initial shape from MVS, decomposed surface albedo, and recovered other images with varying illumination on top of the estimated albedo. Shi et al. [41] introduced a method based on PS: it first recovers the initial shape by MVS, transfer images for “a reference camera” and apply uncalibrated PS using internet photos. This method refines shape and recovers temporally-varying illumination by altering the estimation procedures from the reference view point.

With the advent of low cost RGB-D sensors, several photometric-based techniques are introduced to fill the quality gap between the RGB image and depth map [42–45]. Barron and Malik [42] showed that a single RGB-D image can be used to compute a refined shape, albedo and scene illumination by using pre-trained mixtures of gaussians. Other works refine the depth by assuming uniform albedo [43, 44] or multiple albedo (with image segmentation) [45] under a low order spherical harmonics model.

Most recently, Zollhöfer et al. [22] proposed a novel shape refinement technique by formulating the inverse rendering function including color and surface normals under the volumetric representation. In [22], surface refinement is performed in a voxel space in the form of optimizing TSDF that has a significant advantage on its memory efficiency and computational cost. Note that depths of each view are mapped into the voxel space and color values are cast to the voxels. As each voxel can have only a single color value, the method [22] is (so-far) incapable to deal with per-image illumination (Table 1).

Our Method. Our method jointly estimates the detailed shape, illumination and albedo using multiple images with no additional requirement such as cloudy images [21], pre-trained GM [42] or image segmentation [45]. Our formulation also enables to estimate spatially-varying albedo and temporally-varying (per image) illumination in contrast to [20, 22]. The refined high quality shape, albedo and illumination can be also used for various applications, e.g. visualization of textured models and re-lighting images in high quality (Sect. 4).

3 MVIR Under Arbitrary Illumination and Albedo

In this section, we describe the proposed method for jointly estimating shape, albedo and illumination. We describe the pre-processing steps (Sect. 3.1) followed by our cost formulation of multi-view inverse rendering (Sect. 3.2) with the details of each regularization term and conclude with discussions. Figure 1 shows an overview of our method.

3.1 Pre-processing

Camera Poses and Initial Shape Estimation.

The camera poses of input images and initial shape were estimated using structure from motion and multi-view stereo. In our experiment, we used VisualSFM [5, 46] followed by CMPMVS [8] or MVE [6] but other methods can also be used, e.g. Theia [47].

Visibility Calculation.

The visibility of every mesh vertex in the initial shape is computed from ray-triangle intersections between the sight rays and all the surface meshes. A sight ray is a ray that starts from a vertex to each camera. If a vertex is located in the view frustum of a camera and its sight ray is not occluded by any other mesh, we regard the camera as visible from the vertex. We adopt octree space partitioning to reduce calculation cost [48].

Surface Subdivision. The surface mesh model must have sufficiently high resolution in order to recover the fine and detailed shape. We subdivide the surface meshes until the maximum size of each triangular face observed in the visible images becomes smaller than a threshold (we set 2 for images of VGA size, then proportionally increase to 9 for the images of \(3072 \times 2048\) pixels). We used the state-of-the-art adaptive subdivision method [49].

3.2 Joint Estimation of Surface, Illumination and Albedo

Using the pre-processed information described above, we build the cost function for joint estimation of shape, albedo and scene illumination.

The following is a list of variables to be estimated:

-

\(D \in \mathbb {R}^{n}\) is the 3D vertex displacement where n is the total number of vertices. To stabilize our optimization process, the displacement of each vertex i is constrained to the initial normal direction, i.e. \(D^i \in \mathbb {R}^{1}\).

-

\(R \in \mathbb {R}^{n \times 3}\) is the vertex albedo. For each vertex, we express the components in RGB color space (\(R^i \in \mathbb {R}^{3} = (R_R, R_G, R_B)\)).

-

\(L \in \mathbb {R}^{p \times 12}\) is the scene illumination matrix where p is the total number of cameras. \(L^c \in \mathbb {R}^{12} = (L_0, \ldots , L_8, L_R, L_G, L_B)\) is the illumination basis of c-th camera. To simplify the optimization process, we represent scene illumination as a combination of a single spherical harmonics basis and RGB color scales. \(L_0, \ldots , L_8\) are the coefficients of the 2nd-order spherical harmonics basis while \(L_R\), \(L_G\) and \(L_B\) are the scales of red, green and blue channels (details are given below).

We next describe the cost terms \(E_{ren}\), \(E_{gsm}\) and \(E_{psm}\).

Rendering Term.

The first term \(E_{ren}\) is the rendering term that measures image pixel errors between input and rendered images.

\({\hat{I}}^{i,c} \in \mathbb {R}^{3}\) is the RGB values of pixel i in the input image c and \(I^{i,c} \in \mathbb {R}^{3}\) is the rendered values. V(i) indicates the visible camera set for i-th vertex which is pre-computed in Sect. 3.1. The rendering function \(I^{i,c}\) is defined as multiplication of albedo \((R_R,R_G,R_B)\) and shading \(S \in \mathbb {R}^{1}\) which is a function of the scene illumination L and the vertex displacement D:

This rendering function assumes a simple Lambertian and ignore any other complex reflection component. Similar assumptions were also made in other works [20, 22].

The shading S is computed by using spherical harmonics illumination model [50],

where \(N^i \in \mathbb {R}^{3} = (N_x, N_y, N_z)\) is the normal vector computed using D by averaging normals of adjacent faces at the i-th vertex. The spherical harmonics illumination model assumes that light sources are located at infinity (environmental lights) [20]. This illumination model is particularly suitable for compactly representing complex illumination and is commonly used in the state-of-the-art shape refinement methods [12, 17, 22, 42].

In order to suppress the number of parameters in the optimization, we assume that illumination distribution is common among the RGB channels. This enables us to estimate the spherical harmonics basis \((L_0, \ldots , L_8)\) and the relative scales \(L_R\) and \(L_B\) only when setting \(L_{G}=1\). We chose the green channel to be 1 because it is known as most sensitive in the RGB color representation [51, 52].

Notice that our formulation allows the estimation of both per image illumination and spatially varying albedo which clearly differs from [20, 22]. The limitation is that our rendering function does not model shadow, ambient occlusion or other reflection components explicitly (see also Sect. 4). Their effects are absorbed as albedo variation.

Geometric Smoothness Term.

We apply the geometric smoothness term \(E_{gsm}\):

This term computes an approximated local surface curvature at each vertex using its neighboring vertices. In detail, \(l^i\) is the average edge length between adjacent vertices and its centroid. \(K(D^i, P^i)\) is a distance between the vertex and the local plane P computed by weighted least squares [53] using adjacent vertices of the i-th vertex. The distance K is normalized with \(l^i\) so that this smoothness cost is independent of the scene scale. The weight \(w_{1}\) for the local plane P is computed from the pixel value differences:

A(i) is a set of adjacent vertices of i-th vertex. This smoothness term with the weighted plane fitting encourages the surface to be flat while preserving sharp edges detected on images.

Photometric Smoothness Term.

As our cost function allows for spatially-varying albedo on the surface mesh vertices, there are ambiguities when separating albedo from shading and illumination in the image rendering function, i.e. \(R \cdot SL\). To regularize this ambiguity, we follow the approach often used in intrinsic decomposition [42, 54, 55], i.e. vertices having similar albedo should have similar color values in each input image. We establish the photometric smoothness term \(E_{psm}\) on albedo as:

\(w_{i,j}\) is a weight controlling the balance between texture and shading:

The weight \(w_{2}\) is computed using chromaticities between the input and rendered images:

We measure the differences by the angles of L2 normalized RGB vectors, similar to [22, 55]. However, using this weight alone cannot resolve non-chromatic textures such as checker-board patterns. To suppress the effect of non-chromatic textures, we use an additional weight \(w_{3}\) based on pixel intensities (L2 norm of RGB vector):

Setting a large value for \(k_3\) makes \(w_3\) sensitive to the non-chromatic (intensity) changes. Since this prevents the refinement using shading information, we set \(k_3\) to be smaller than \(k_2\) in the weight \(w_{i,j}\). The weight \(w_{i,j}\) then effectively recognizes that the smooth variation is induced by shading (changes of surface normals) on the same material and the photometric smoothness positively contributes to recover it. In contrast, the sharp variation is detected as material change and the photometric smoothness does not influence on the shape recovery. It is important to note that the multi-view constraints appear on the regularization terms (Eqs. 5 and 7) because their weights are computed using the visible camera set V(i) (Eqs. 6 and 8). Figure 2 visualizes the effect of our photometric smoothness term on a toy example.

Effect of our photometric smoothness term. We render a typical CG model that includes a few objects with both sharp and smooth albedo variations as a toy example (a). (b) and (c) shows the weights as heat maps. The photometric smoothness contributes for shape recovery (large weight, blue) and does not contribute (small weight, red). The weight using chromaticity difference only (b) cannot detect non-chromatic changes, e.g. checker board like patterns on the top-left cube and bottom-right ball. Our weight using both chromaticity and intensity differences (c) successfully detects those sharp albedo changes while recognizing the smooth variation as it is induced by shading (change of surface normals). (Color figure online)

Illumination Scale Ambiguity.

MVIR has uncertainty to the illumination scale ambiguity. This is a similar phenomenon to the scale ambiguity found in SfM. To fix this in the optimization, we select a dominant camera which has the largest view frustum and constrain its illumination basis \(L^d\) to \(\Vert L_0, \ldots , L_8\Vert = 1\) and \(L_R = L_B = 1\). The resulted illuminations are relative to the illumination of the dominant camera. Notice that the choice of the dominant camera does not change property of cost function (the cost is simply biased) apart from numerical computation issue (same as bundle adjustment).

3.3 Discussions

Why Can We Deal both Spatially-Varying Albedo and Per-Image Illumination?

It is interesting to note the following physical conditions that exist in the MVIR problem. According to the per image illumination model, a surface point (vertex) appears to have different RGB values on each image. However, the shape and albedo at this surface point should be unique [56]. This phenomenon is implemented in such a way that mesh vertices share variables for shape and albedo among the multiple views in the rendering term (Eq. 2). Furthermore, the rendering term, indeed, contributes to decompose albedo and shading because the wrong shape (shading) induces inconsistencies for the RGB values rendered across multiple views and, accordingly, the cost (error) increases. Obviously, a standard MVS does not take into account the smoothness constraint in this way. Although our cost function is non-convex, our experience shows the optimization is highly feasible thanks to stable initial shapes provided by recent SfM and MVS.

Benefit of Combining SfS and MVS.

MVIR naturally takes into account photo consistencies among multiple views. It is beneficial, for example, to resolve bas-relief ambiguity. Figure 3 shows a toy example of demonstrating it. Starting from a uniform initial value, our MVIR without multi-view constraint which is similar to a single-view SfS method fails to recover shape correctly due to the bas-relief ambiguity (Fig. 3(a)). Our MVIR using only multi-view constraint (21 images) without photometric smoothness term which is close to MVS also fails to recover the details because the surface texture is not distinctive (Fig. 3(a)). Our MVIR recovers the shape successfully (Fig. 3(c)). This is a simple and classic example but clearly shows advantage w.r.t. the single-view based technique [42] and the per-view refinement [41].

Benefit of combining SfS and MVS. This toy example shows that bas-relief ambiguity is a nuisance in a single-view SfS but is not when combining with multi-view stereo. A single-view SfS recovered the relief reversely (a) due to depth uncertainty. MVS without the photometric smoothness recovered the distorted surface (b) due to the lack of distinctive features. Thanks to the multiple-view constraint, our MVIR using multiple images is able to recover the shape (c) similar to ground truth shape (d).

4 Experiments

In this section, we first describe the implementation details and show experiments on both synthetic and real-world datasets in comparison with baseline approaches.

4.1 Implementation Details

The proposed MVIR was implemented using C# with external dependencies: Ceres Solver [57], OpenCV [58] and Armadillo [59]. We tested our method on a standard Windows 10 desktop PC consisted of Intel i7 CPU and 64 GB of memory. We conducted with no GPU optimization. Our software is online at [60].

Throughout the experiments, we used the same values for the parameters \(\beta =2\), \(k_1=10\), \(k_2=10\) and \(k_3=100\). Only the geometric smoothness weight \(\alpha \) is modified.

When visualizing our estimated albedo, we re-compute their values that firmly satisfy our rendering function (Eq. 3) and we take the median of re-computed values when the vertex is seen from multiple images. This post-processing results sharper albedo. Notice that this is necessary for visualization and does not influence in the optimization process.

The synthetic dataset: Joyful Yell. As the original shape of CG data (a) has a constant albedo, we colored (b) and rendered (c) using the CG software [61].

4.2 Qualitative and Quantitative Evaluation Using Synthetic Dataset

To evaluate the performance of the proposed method numerically, we used a publicly available CG model: The Joyful Yell (Fig. 4). As the original shape has a constant albedo, we colored it by using the CG software [61]. To simulate various illumination conditions, the illumination is generated such that each image has a single color but randomly generated light sources. The object is assumed to have complete Lambertian surface. We generated 37 input images of \(2048 \times 1536\) pixels by using CG rendering software [61]. For this dataset, we set \(\alpha =0.1\).

Figures 5 and 6 show the results for qualitative evaluation. The resulted shapes (Fig. 6(c) show that our method recovers both fine structures (hairs, ears and clothes) and smooth bodies. In contrast, CMPMVS [8] (Fig. 6(b)) is unsuccessful at especially recovering textureless parts (forehead and cheeks).

The resulted illumination map (Fig. 5(a)) shows that our method successfully recovered the relative color variation and distribution of scene illuminations among the images. Furthermore, the albedo (Fig. 5(b)) is overall estimated successfully but not identical to the ground truth colors. This is because the ambient occlusions and inter-reflections are represented as albedo variations [21, 22].

Although the estimated albedo has some errors due to the simplified rendering function, the proposed joint estimation is beneficial for shape refinement. This is demonstrated in the quantitative evaluation w.r.t. the ground truth values in Table 2. We evaluate the average of depth errors (RMSE) and the average of angular errors computed on every image. This evaluation shows a clear improvement when compared with the input shape by CMPMVS [8].

Despite of feasible initial shape from MVS, our cost function may be failed to converge to the global minimum because the cost function is non-convex. In that case, we can re-compute surface mesh [33] with smoothing and then apply our MVIR again. This simple method already gives additional improvement.

4.3 Evaluation on the Internet Photo Dataset

We demonstrated the performance of the proposed MVIR using internet photos which have significantly different illuminations across images. We used the internet photosFootnote 1 at a specific landmark: Trevi Fountain [62]. We used the 57 images of various sizes and set \(\alpha =0.3\).

Figure 7 shows examples of input images, illuminations and albedo estimated by our MVIR. Notice that the input images were taken under various illumination conditions and our formulation (which allows per image illumination) successfully recovered relative illuminations and detailed shape (Fig. 8(c)) when compared with CMPMVS (a) and MVE (b).

We also compared the proposed MVIR with the MVS method that uses many images. We performed MVE using 414 images which are obtained by retrieving similar images from Rome16K dataset [63] by matching compact image descriptors [64]. The resulted shape by MVE using 414 images (Fig. 9(a)) gives some improvement when compared to MVE with 57 images (Fig. 8(b)) but the proposed MVIR using 57 images (Fig. 9(b)) is yet more detailed, e.g. arch, cornice. Notice that our MVIR shines more when estimation of high quality shape is required only using a limited number of images, e.g. photos from a place that can be rarely visiting (e.g. Mars) or already lost by natural damages/desasters.

Examples of estimated illumination and albedo on the Joyful Yell. (a) Each triplet shows the input image (left), ground-truth illumination (middle) and the estimated illumination (right). We set the global scale of illumination to 0.4 for visualizing the estimated illuminations. (b) Albedo estimated by the proposed method. (c) Ground-truth albedo from the same viewpoint.

Estimated shapes on the Joyful Yell. Our MVIR recovers fine details (clothes and hairs) as well as smooth body (forehead and cheeks) (c) when compared with CMPMVS [8] (b).

Using the results of MVIR, we generated synthesized images under arbitrary illuminations as an example of applications. The effect of the light source/position change is clearly visible as shading (Fig. 10(b)), e.g. the half dome behind the statue. As the synthesized images (Fig. 10(b and c)) are very realistic, they can be potentially beneficial for machine learning approaches that require many images, e.g. deep learning.

We also experimented on the Yorkminster in 1DSfM dataset [65]. In similar manner as the DiTrevi experiments, using 9 images, we estimated initial mesh by CMPMVS and performed the MVIR. Figure 11 shows the input images, illuminations and albedo estimated by the proposed MVIR. Figure 12 shows the refined shape by the MVIR (c) in comparison with CMPMVS (a) and MVE (b). Notice that the MVIR (c) recovers a flat facade without losing the fine and sharp structures.

4.4 Computational Complexity

The variables in the cost function (Eq. 1) are \( a N_v + b N_c\) for \(N_v\) vertices and \(N_c\) images. a is 4 (1 displacement and 3 albedo) and b is 11 (9 spherical harmonics (SH) basis and 2 color scales). For example, in the DiTrevi experiment (Sect. 4), the number of variables is 4, 130, 417. Total processing time of MVIR with 57 images is 12 h (Fig. 9(b)) and MVE with 414 images took 8 h (Fig. 9(a)). The cost is optimized using a standard non-linear least squares minimizer [57]. Computational efficiency can be improved by numerical approximation and hardware acceleration [22].

4.5 Comparison with Other Methods

We compared with a state-of-the-art method for joint estimation of shape, albedo and illumination using a single RGB-D image [42]. Although this method uses a single view only and the comparison with multi-view setup is not quite fair, we chose it because their code is the only one publicly available in this field of study. For producing the RGB-D input, we selected an image from the Trevi Fountain dataset and computed its depth map using the initial shape of CMPMVS. Our result (Fig. 13(b)) clearly shows the advantage of recovering with multiple-view images when compared with (Fig. 13(b)).

Examples of re-lighting on the Trevi Fountain dataset. We generated the synthesized image (b) at the target view point (a) by re-lighting using the estimated albedo and synthesized illumination. We also re-light (c) by using the illumination of reference view (d). Notice that our re-lighting can represent shading and shadow variation by using estimated high quality shape and albedo information.

We also compared our method with Wu et al. [20] which assumes a uniform albedo using their dataset. Figure 13(c and d) shows the qualitative result of shape refinement on the Angel dataset. Notice that our method (d) recovers more detailed shape when compared with (c) [20].

4.6 Failure Cases

Our method cannot handle non-lambertian reflection and more complex illumination model. Figure 14 shows a typical failure case: the surface has a strong specularity (Fig. 14(b)). Notice that the MVIR still works fairly well for the other parts where the specularity is not too strong, thanks to the geometric smoothness term.

5 Conclusion

We have presented a multi-view inverse rendering method for joint estimation of shape, albedo and illumination. We used the shape obtained from MVS as an initial input and incorporated shape-from-shading in our formulation. The detailed shape, albedo and illumination can be obtained by solving multi-view inverse rendering problem using the geometric and photometric smoothness terms and normalized spherical harmonics illumination model. Experimental results show that our method successfully recovers on not only the synthetic dataset but also real-world dataset under various albedo and illumination conditions.

Notes

- 1.

The gamma calibration using a fixed value (2.2 as in [56]) on the internet photos gave no additional improvement in our preliminary experiments. However, the calibration of nonlinearity will be beneficial when it is performed precisely.

References

Biehler, J., Fane, B.: 3D Printing with Autodesk: Create and Print 3D Objects with 123D, AutoCAD and Inventor, 1st edn. Que Publishing Company, Seattle (2014)

Anguelov, D., Dulong, C., Filip, D., Frueh, C., Lafon, S., Lyon, R., Ogale, A., Vincent, L., Weaver, J.: Google street view: capturing the world at street level. Computer 6, 32–38 (2010)

Moulon, P., Monasse, P., Marlet, R., et al.: Openmvg. an open multiple view geometry library. https://github.com/openMVG/openMVG

Snavely, N., Seitz, S., Szeliski, R.: Modeling the world from internet photo collections. IJCV 80(2), 189–210 (2008)

Wu, C., Agarwal, S., Curless, B., Seitz, S.M.: Multicore bundle adjustment. In: CVPR, pp. 3057–3064. IEEE (2011)

Fuhrmann, S., Langguth, F., Goesele, M.: MVE - a multi-view reconstruction environment. In: EG GCH (2014)

Furukawa, Y., Ponce, J.: Accurate, dense, and robust multiview stereopsis. PAMI 32(8), 1362–1376 (2010)

Jancosek, M., Pajdla, T.: Multi-view reconstruction preserving weakly-supported surfaces. In: CVPR, pp. 3121–3128. IEEE (2011)

Furukawa, Y., Hernndez, C.: Multi-view stereo: a tutorial. Found. Trends Comput. Graph. Vis. 9(1–2), 1–148 (2013)

Tola, E., Lepetit, V., Fua, P.: Daisy: an efficient dense descriptor applied to wide-baseline stereo. PAMI 32(5), 815–830 (2010)

Horn, B.K.P.: Obtaining shape from shading information. In: Shape from Shading, pp. 123–171. MIT Press, Cambridge (1989)

Barron, J.T., Malik, J.: Shape, albedo, and illumination from a single image of an unknown object. In: CVPR, pp. 334–341. IEEE (2012)

Ikehata, S., Wipf, D., Matsushita, Y., Aizawa, K.: Photometric stereo using sparse bayesian regression for general diffuse surfaces. PAMI 36(9), 1816–1831 (2014)

Woodham, R.J.: Photometric method for determining surface orientation from multiple images. Opt. Eng. 19(1), 121–171 (1980)

Blake, A., Zisserman, A., Knowles, G.: Surface descriptions from stereo and shading. Image Vis. Comput. 3(4), 183–191 (1985)

Jin, H., Cremers, D., Wang, D., Prados, E., Yezzi, A., Soatto, S.: 3-d reconstruction of shaded objects from multiple images under unknown illumination. IJCV 76(3), 245–256 (2008)

Park, J., Sinha, S.N., Matsushita, Y., Tai, Y.W., Kweon, I.S.: Multiview photometric stereo using planar mesh parameterization. In: ICCV, pp. 1161–1168. IEEE (2013)

Samaras, D., Metaxas, D., Fua, P., Leclerc, Y.G.: Variable albedo surface reconstruction from stereo and shape from shading. In: CVPR, vol. 1, pp. 480–487. IEEE (2000)

Wu, C., Liu, Y., Dai, Q., Wilburn, B.: Fusing multiview and photometric stereo for 3d reconstruction under uncalibrated illumination. IEEE Trans. Visual Comput. Graphics 17(8), 1082–1095 (2011)

Wu, C., Wilburn, B., Matsushita, Y., Theobalt, C.: High-quality shape from multi-view stereo and shading under general illumination. In: CVPR, pp. 969–976. IEEE (2011)

Shan, Q., Adams, R., Curless, B., Furukawa, Y., Seitz, S.M.: The visual turing test for scene reconstruction. In: 3DV, pp. 25–32. IEEE (2013)

Zollhöfer, M., Dai, A., Innman, M., Wu, C., Stamminger, M., Theobalt, C., Nießner, M.: Shading-based refinement on volumetric signed distance functions. ACM Trans. Graph. 34(4), 96:1–96:14 (2015)

Okutomi, M., Kanade, T.: A multiple-baseline stereo. PAMI 15(4), 353–363 (1993)

Goesele, M., Curless, B., Seitz, S.M.: Multi-view stereo revisited. In: CVPR, vol. 2, pp. 2402–2409. IEEE (2006)

Kolmogorov, V., Zabih, R.: Multi-camera scene reconstruction via graph cuts. In: Heyden, A., Sparr, G., Nielsen, M., Johansen, P. (eds.) ECCV 2002, Part III. LNCS, vol. 2352, pp. 82–96. Springer, Heidelberg (2002)

Campbell, N.D.F., Vogiatzis, G., Hernández, C., Cipolla, R.: Using multiple hypotheses to improve depth-maps for multi-view stereo. In: Forsyth, D., Torr, P., Zisserman, A. (eds.) ECCV 2008, Part I. LNCS, vol. 5302, pp. 766–779. Springer, Heidelberg (2008)

Fuhrmann, S., Goesele, M.: Floating scale surface reconstruction. ACM Trans. Graph. 33(4), 46:1–46:11 (2014)

Kutulakos, K.N., Seitz, S.M.: A theory of shape by space carving. IJCV 38(3), 199–218 (2000)

Labatut, P., Pons, J.P., Keriven, R.: Efficient multi-view reconstruction of large-scale scenes using interest points, delaunay triangulation and graph cuts. In: ICCV, pp. 1–8. IEEE (2007)

Vogiatzis, G., Hernández, C., Torr, P.H., Cipolla, R.: Multiview stereo via volumetric graph-cuts and occlusion robust photo-consistency. PAMI 29(12), 2241–2246 (2007)

Bleyer, M., Rhemann, C., Rother, C.: Patchmatch stereo-stereo matching with slanted support windows. BMVC 11, 1–11 (2011)

Bao, S.Y., Chandraker, M., Lin, Y., Savarese, S.: Dense object reconstruction with semantic priors. In: CVPR, pp. 1264–1271. IEEE (2013)

Kazhdan, M., Bolitho, M., Hoppe, H.: Poisson surface reconstruction. In: Proceedings of the Fourth Eurographics Symposium on Geometry Processing, vol. 7 (2006)

Vu, H.H., Labatut, P., Pons, J.P., Keriven, R.: High accuracy and visibility-consistent dense multiview stereo. PAMI 34(5), 889–901 (2012)

Ikeuchi, K., Horn, B.K.: Numerical shape from shading and occluding boundaries. Artif. Intell. 17(13), 141–184 (1981)

Zhang, R., Tsai, P.S., Cryer, J.E., Shah, M.: Shape-from-shading: a survey. PAMI 21(8), 690–706 (1999)

Forsyth, D.A.: Variable-source shading analysis. IJCV 91(3), 280–302 (2011)

Johnson, M.K., Adelson, E.H.: Shape estimation in natural illumination. In: CVPR, pp. 2553–2560. IEEE (2011)

Basri, R., Jacobs, D.: Photometric stereo with general, unknown lighting. In: CVPR, vol. 2, pp. 374–381. IEEE (2001)

Hernandez, C., Vogiatzis, G., Cipolla, R.: Multiview photometric stereo. PAMI 30(3), 548–554 (2008)

Shi, B., Inose, K., Matsushita, Y., Tan, P., Yeung, S.K., Ikeuchi, K.: Photometric stereo using internet images. In: 3DV, vol. 1, pp. 361–368. IEEE (2014)

Barron, J.T., Malik, J.: Intrinsic scene properties from a single rgb-d image. In: CVPR, pp. 17–24. IEEE (2013)

Han, Y., Lee, J.Y., Kweon, I.S.: High quality shape from a single rgb-d image under uncalibrated natural illumination. In: ICCV, pp. 1617–1624. IEEE (2013)

Wu, C., Zollhfer, M., Niener, M., Stamminger, M., Izadi, S., Theobalt, C.: Real-time shading-based refinement for consumer depth cameras. In: SIGGRAPH Asia (2014)

Yu, L.F., Yeung, S.K., Tai, Y.W., Lin, S.: Shading-based shape refinement of rgb-d images. In: CVPR, pp. 1415–1422. IEEE (2013)

Wu, C.: VisualSFM: a visual structure from motion system (2011). http://homes.cs.washington.edu/~ccwu/vsfm

Sweeney, C.: Theia Multiview Geometry Library: Tutorial & Reference. University of California, Santa Barbara

Meagher, D.: Geometric modeling using octree encoding. Comput. Graph. Image Process. 19(2), 129–147 (1982)

Kobbelt, L.: \(\sqrt{3}\)-subdivision. In: SIGGRAPH, pp. 103–112. ACM (2000)

Ramamoorthi, R., Hanrahan, P.: An efficient representation for irradiance environment maps. In: SIGGRAPH, pp. 497–500. ACM (2001)

Bayer, B.E.: Color imaging array (20 1976) US Patent 3,971,065

Kang, H.R.: Computational Color Technology. Spie Press, Bellingham (2006)

Eberly, D.: Least Squares Fitting of Data. Magic Software, Chapel Hill (2000)

Horn, B.K.P.: Determining lightness from an image. Comput. Graph. Image Process. 3(4), 277–299 (1974)

Jeon, J., Cho, S., Tong, X., Lee, S.: Intrinsic image decomposition using structure-texture separation and surface normals. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014, Part VII. LNCS, vol. 8695, pp. 218–233. Springer, Heidelberg (2014)

Laffont, P.Y., Bousseau, A., Paris, S., Durand, F., Drettakis, G.: Coherent intrinsic images from photo collections. ACM Trans. Graphics 31(6), 202:1–202:11 (2012)

Agarwal, S., Mierle, K., et al.: Ceres solver. http://ceres-solver.org

Bradski, G., et al.: The openCV library. Doct. Dobbs J. 25(11), 120–126 (2000)

Sanderson, C.: Armadillo: an open source C++ linear algebra library for fast prototyping and computationally intensive experiments. Technical report, NICTA (2010)

Project webpage (software, dataset). http://www.ok.ctrl.titech.ac.jp/~torii/project/mvir/

Blender - A 3D Modelling and Rendering Package. Blender Foundation, Blender Institute, Amsterdam (2015)

Havlena, M., Torii, A., Pajdla, T.: Efficient structure from motion by graph optimization. In: Daniilidis, K., Maragos, P., Paragios, N. (eds.) ECCV 2010, Part II. LNCS, vol. 6312, pp. 100–113. Springer, Heidelberg (2010)

Li, Y., Snavely, N., Huttenlocher, D.P.: Location recognition using prioritized feature matching. In: Daniilidis, K., Maragos, P., Paragios, N. (eds.) ECCV 2010, Part II. LNCS, vol. 6312, pp. 791–804. Springer, Heidelberg (2010)

Jégou, H., Douze, M., Schmid, C., Pérez, P.: Aggregating local descriptors into a compact image representation. In: CVPR, pp. 3304–3311. IEEE (2010)

Wilson, K., Snavely, N.: Robust global translations with 1DSfM. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014, Part III. LNCS, vol. 8691, pp. 61–75. Springer, Heidelberg (2014)

Acknowledgments

This work was partly supported by JSPS KAKENHI Grant Number 25240025 and 15H05313.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2016 Springer International Publishing AG

About this paper

Cite this paper

Kim, K., Torii, A., Okutomi, M. (2016). Multi-view Inverse Rendering Under Arbitrary Illumination and Albedo. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds) Computer Vision – ECCV 2016. ECCV 2016. Lecture Notes in Computer Science(), vol 9907. Springer, Cham. https://doi.org/10.1007/978-3-319-46487-9_46

Download citation

DOI: https://doi.org/10.1007/978-3-319-46487-9_46

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-46486-2

Online ISBN: 978-3-319-46487-9

eBook Packages: Computer ScienceComputer Science (R0)