Abstract

Virtual Reality (VR) headsets occlude a significant portion of human face. The real human face is required in many VR applications, for example, video teleconferencing. This paper proposes a wearable camera setup-based solution to reconstruct the real face of a person wearing VR headset. Our solution lies in the core of asymmetrical principal component analysis (aPCA). A user-specific training model is built using aPCA with full face, lips and eye region information. During testing phase, lower face region and partial eye information is used to reconstruct the wearer face. Online testing session consists of two phases, (i) calibration phase and (ii) reconstruction phase. In former, a small calibration step is performed to align test information with training data, while the later uses half face information to reconstruct the full face using aPCA-based trained-data. The proposed approach is validated with qualitative and quantitative analysis.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Visualization is an important pillar for multimedia computing. The devices used for visualizing multimedia contents can be broadly categorized into (i) non-wearable computer devices and (ii) wearable computer devices. Non-wearable computer technologies employ two-dimensional (2-D) and/or three dimensional (3-D) displays/technologies for multimedia content rendering [13]. Three dimensional (3-D) technologies offer one more dimension for visualizing the data flow and the interplay of programs in complex multimedia applications. However, in past few years, this trend is shifting from non-wearable visualization to wearable visualization experience [12, 15]. The wearable devices used for watching multimedia content are commonly known as virtual reality (VR) headsets and/or head mounted displays (HMD). These virtual reality (VR) headsets are becoming popular because of cheaper prices, more immersive experience, high quality, better screen resolution, low latency and better control. According to statistics, around 6.7 million people have used VR headsets in year 2015 and it is expected to grow to 43 million users in year 2016 and 171 million users in year 2018 [31]. These headsets, for instance, Oculus rift, Play station VR, Gear VR, HTC vive, google cardboard, etc. [7] (see Fig. 1) are being used in many research and industrial applications, for example, medical simulation [21], gaming [8, 24], 3D movie experience and scientific visualizations [23], etc. The integration of multimedia content inside VR headset is an interesting trend in the development of more immersive experience. The research community in wearable virtual reality field has been developing various hardware and software solutions to solve different issues related to virtual reality [18, 27]. One of the major issues related to VR headsets is that they occlude half of the human face (upper face region) and to reconstruct the full human face is the main contribution of this research work.

We propose an optical sensor-based solution to address this issue. Our solution consists of a wearable camera setup where two cameras are used along with a VR headset as shown in Fig. 2. One camera is facing toward the lower face region (lips region) and the other camera is used to capture the side view of the eye-region. The full face is reconstructed using optical information of lower face region and partial eye region. We have used a google cardboard for our prototypic solution that can be extended to any VR headset.

Reconstructing a full face while wearing a VR headset is not an easy task [6]. The only efforts so far are done by Hao Li et al. [18] and Xavier et al. [3]. The former used an RGB-D camera and eight strain sensors to animate facial feature movements. They animate the facial movements through an avatar however they do not reconstruct the real face of a person wearing a VR headset. On the other hand, the later created a real-looking 3D human face model, where they trained a system to learn the facial expressions of the user from the lower part of the face only, and used it to change the model accordingly during testing. Compared to Hao Li et al., they came up with 3D face model of a person rather than using a 3D animated face. They have estimated the upper face information (eye region) based on lower face information (mouth region). But in literature, there is no direct correlation between upper and lower face regions [2, 34], hence estimating one based on the other is not a good practice. Furthermore, their training model is limited to few (e.g. six) discrete facial expression. However, in theory, human facial expressions are continuous and a combination of different expressions with intermediary emotions [10, 20, 35]. Hence, reconstructing a human face based on few emotions can be problematic.

Considering the above-mentioned limitations, we have revisited the same question (face reconstruction while wearing a VR headset) with an innovative approach, where both upper and lower face information is considered during training and testing phases. We have used an asymmetrical principal component analysis algorithm (aPCA) [26] to reconstruct an original face using lips and eye information. The lips information is used to estimate lower facial expression and eye information is used for the upper facial expression. The proposed approach is validated with qualitative and quantitative evaluation. To the best of our knowledge, we are among the first to consider this problem.

The rest of the paper is disposed as following. Section 2 presents related work for face reconstruction under occlusion. Section 3 gives a description of asymmetrical principal component analysis (aPCA) model. This section further describes training and testing phases. In Sect. 4, a qualitative and quantitative analysis is done on our proposed approach. Section 5 presents the discussion and limitations. Conclusion is presented in Sect. 6.

2 Background and Related Work

Full face Reconstruction of a person wearing VR headset is required in many multimedia applications. The most prominent advantage is in video teleconferencing applications. Face reconstruction while using VR headset has been less studied because headset obstructs a significant portion of human face. The closest works to our research are from Hao et al. [18] and Xavier et al. [3]. Hao et al. have developed a real time facial animation system that augments a VR display with strain gauges and a head-mounted RGB-D camera for facial performance capture in virtual reality. Xavier et al. have recently proposed a solution to reconstruct a real human face by, (i) building a 3D texture model of a person, (ii) building an expression shape model, (iii) projecting a 3D face model on occluded face and (iv) finally combining 3D face model with occluded test image/video. In this section, we further present previously developed optical techniques for face reconstruction.

Optical systems confront occluded faces very often due to the use of accessories, such as scarf or sunglasses, hands on the face, the objects that persons carry, and external sources that partially occlude the camera view. Different computer vision techniques have been developed to counter face occlusion problems [16]. Texture based face reconstruction technique first detects the occluded region of the face and then run a recovery process to reconstruct the original face [11, 19]. The recovery stage exploits the prior knowledge of the face and non-occluded part of the input image to restore the full face image. Furthermore, different model-based techniques have been proposed which exploit both shape and texture model of the face to reconstruct the original face [22, 29]. These techniques extract the facial feature points of the input face, fit the face model, and detect occluded region according to these facial feature points.

Principal Component Analysis (PCA) has been a fundamental technique for face reconstruction, for example, simple-PCA [17], FR-PCA [28], Kernal-PCA [4] and FW-PCA [9]. It starts by training the non-occluded face images by creating an eigenspace based on full image pixel intensities and/or some selective samples of pixel intensities. During testing phase, the occluded image is mapped to eigenspace and the principle component coefficients are restored by iteratively optimizing the error between the original image and the reconstructed image from eigenspace. The above-mentioned PCA based methods are successful if the occluded regions are not larger than the face region. However, the main challenge in our work is that the VR headset occludes a significant portion of a user’s face (nearly whole upper face), preventing effective face reconstruction from traditional PCA techniques. Hence, there is a need of an alternative method.

3 Extended Asymmetrical Principal Component Analysis (aPCA)

Asymmetrical principal component analysis (aPCA) has been previously used for video encoding and decoding [30]. In this work, we have extended aPCA for full face reconstruction for VR applications. Our algorithm consists of two phases, (i) training phase and (ii) testing phase. In training phase, we build an aPCA model by using the full frame and two half frames video sequences. Here, the full frame refers to full face of a person without VR headset and half frames refer to lips and eye regions. A person-specific aPCA based training model is built by synchronously recording full frame and half frames videos. The training model consists of mean faces and eigenspaces for full and half frames. The full frame eigenspace is created by using eigenvalues from half frames. The components spanning this space are called pseudo principal components and this space has the same size as a full frame. In this work, a user-specific training model is constructed for each individual, where each individual is asked to perform certain facial expressions during training session. During testing phase, a person wears a VR headset along with a wearable camera setup. A short calibration step is performed to align test half frames with trained half frames. When a new half frame is presented to a trained model, its own weights are found by projecting the new half frames onto the collection of trained half frame eigenspaces. These new weights with full-frame mean face and full-frame eigenspace are used to reconstruct the original face with all facial deformations (more prominently eyes and mouth deformation).

3.1 Training Phase

A user-specific training model is build based on three synchronous video sequences. Our wearable training setup is shown in Fig. 3(a). Our training setup consists of two cameras denoted by Fc and Sc. Fc captures the full face and lips region of a person as show in Fig. 4a and c, respectively. Sc captures the side region of an eye as shown in Fig. 4b. Let ff, \(hf_{l}\) and \(hf_{e}\) denote the full face, lips region and eye region information, respectively. A Full frame (ff) training is performed by exploiting information from half frames (\(hf_{l}\) and \(hf_{e}\)).

Let, \(I_{e}\) and \(I_{l}\) be the intensity values of the eye and lips regions, respectively. The combined intensity matrix \(I_{hf}\) is denoted by,

The mean \(I_{hfo}\) is calculated as,

where, N is the total number of training frames. The mean is then subtracted from each basis in the training data, ensuring that the data is zero-centered.

Mathematically, PCA is an optimal transformation of an input data in the form of least square error sense. Due to space constraint, we direct readers to [26, 30] for more details. To this end, we need to find out the eigen vectors of the covariance matrix (\(\hat{I}_{hf}\) \(\hat{I}_{hf}^{T}\)). This can be done by singular value decomposition (SVD) [33]. SVD is a factorization method which divides a square matrix into three matrices,

where, V = [\(b_{1}, b_{2}\,...\,b_{N}\)] is a matrix of an eigen vector and variable \(b_{n}\) corresponds to the eigen vector. The eigen space for the half frame \(\phi _{hf}\) = [\(\phi _{hf}^{1}\) ... \(\phi _{hf}^{N}\)] is constructed by multiplying V with the \(\hat{I}_{hf}\),

The half frame coefficients (\(\alpha _{hf}\)) from training frames are calculated as,

Similarly, for full frame intensity values \(I_{ff}\), we follow Eqs. 2 and 3 to get,

The eigen space for the full frame \(\phi _{ff}\) = [\(\phi _{ff}^{1}\,\)...\(\,\phi _{ff}^{N}\)] is constructed by multiplying the eigen vector from half frame V = [\(b_{1}\), \(b_{2}\) ... \(b_{N}\)] with the \(\hat{I}_{ff}\). This eigen space is spanned by half frame components. The component spanning this space are called pseudo principal components; information where not all the data is a principal component. This space has the same size as of full frame.

The full and half frame Eigen spaces and mean intensities values are saved and are used in the online testing session.

3.2 Testing Phase

A VR display is attached to a wearable camera setup as shown in Fig. 3(b). The full frame (ff) is no more active during the testing phase. The testing phase is sub-divided into calibration and reconstruction phase.

Calibration Phase: In the start of the testing phase a manual calibration step is performed. The camera position during the training phase can be inconsistent with the camera position during the testing phase. To align test half-frame with trained half-frame, we propose the following calibration step.

The mean half frames from the training phase and the first half-frames from the testing phase are used for the calibration. Figure 5 (top row) shows half frames from the training phase and Fig. 5 (bottom row) shows half frames from the testing phase. We manually learn the feature parameters for both trained and test frames by using lips and eyes feature points. Let,

The center coordinates \(c_{l}\) are calculated as,

The \(w_{l}\), \(h_{l}\) and \(c_{l}\) are graphically shown in Fig. 6b (top row). The in-plane rotational angle \(\theta _{l}\) is calculated as,

The angle calculation \(\theta _{l}\) is graphically shown in Fig. 6c (top row). The scale \(s_{l}\), rotation \(R_{l}\) and translation \(t_{l}\) between the trained and test lips frames are calculated as,

A similar procedure is applied to calculate scale \(s_{e}\), rotation \(R_{e}\) and translation \(t_{e}\) between the trained and test eyes frames as given below,

Reconstruction Phase: During testing phase, half frame information is only available (see Fig. 5 (bottom row)). This half frame information along with trained model from training phase are used to reconstruct the original face of a person with respective facial deformation. The first step is to adjust the test half frames according to calibration step. Let, \(I_{hfe}\) and \(I_{hfl}\) are the test half frames, then \(\bar{I}_{hfe}\) and \(\bar{I}_{hfl}\) are calculated as,

The calibrated test half frames (\(\bar{I}_{hf}\) = [\(\bar{I}_{hfe}\) \(\bar{I}_{hfl}\)]) are then subtracted from the mean half frames (Eq. 2),

where, superscript t denotes the test phase. The coefficients (\(\alpha _{hf}^{t}\)) are calculated by using half frame Eigen space \(\phi _{hf}\) (Eq. 5),

The entire frame containing full face information is constructed by using following equation,

where, \(I_{ffo}\) is taken from Eq. 7, \(\alpha _{hf}^{t}\) from Eq. 18 and \(\phi _{ff}\) from Eq. 9. The M is a selected number of principal components used for reconstruction (\(M < N\)). The N is the total number of frames available for training and M is the number of most significant eigen images. In our experiment, N is around 1000 frames and M is 25 frames. The qualitative and quantitative results are presented in evaluation section.

4 Evaluation

We have performed an experiment with five subjects. For each subject, a training session of two minutes is done using our wearable setup as shown in Fig. 3(a). Each participant is requested to perform different (but natural) facial expressions, e.g. neutral, happy, sad, eye-blink, etc. Three synchronous video sequences of eye, mouth and full face regions are recorded as shown in Fig. 4. An offline training (Sect. 3.1) is performed for each individual using the recorded sequences. During testing session, a person wears a VR headset along with our wearable setup as shown in Fig. 3(b). A full face of a participant is reconstructed using two half frames according to the details in Sect. 3.2. Qualitative and Quantitative analyses are performed on our proposed approach. Qualitative analysis measures the reconstruction quality of a human face. Whereas, quantitative analysis measures the accuracy of our proposed approach.

4.1 Qualitative Analysis

The 75 % of data acquired during the training session is used for training and remaining 25 % of data is used for validation and testing purpose. Please note that we cannot do proper analysis with test data as upper face region is completely occluded by the VR display. The data is qualitatively analyzed with three different scenarios; (i) When just mouth information is used as a half frame during training (similar to [3]), (ii) when just eye information is used as a half frame during training and (iii) when both eye and mouth are used as a half frame during training. Figure 7 shows qualitative results on three users given the above-mentioned three scenarios. Left most face is the original face, second is the reconstructed face from mouth information (scenario i), third is the reconstructed face from the eye information (scenario ii) and the last is the reconstructed face from both mouth and eye information (scenario iii). The results clearly show that facial mimic is not just dependent on mouth area, facial mimic of a person can be modelled accurately by modeling the mouth and eye regions. The qualitative results on the test data is shown in Fig. 8.

4.2 Quantitative Analysis

We have performed two types of quantitative analysis;

-

1.

Shape based quantitative analysis.

-

2.

Appearance based quantitative analysis.

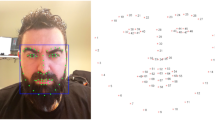

In shape based quantitative analysis, we have compared the differences between the original facial feature points and the reconstructed facial feature points. We have used constrained local model (CLM) [5] to capture these facial feature points. Figure 9 shows the CLM on the human face. The left side of Fig. 9 shows the original face frame and the right side shows the reconstructed face frame. The shape based analysis is performed on 25 % of validation data for each individual and the results are presented in Table 1 according to the following equation.

where, s, \({\overline{s}}\), N and F refer to spatial locations of original facial feature points, spatial locations of reconstructed facial feature points, number of facial feature points and number of frames, respectively. For this work, N= 66 and F = 250–500. The shape analysis results show a small difference between the facial points of original and reconstructed face with an average difference of 1.5327 pixels.

In appearance based quantitative analysis, we have compared the intensity differences between the reconstructed face and the original test face. We have used the mouth region for comparison as upper face region is occluded during real testing. Appearance quality is measured through peak signal to noise ration (PSNR). This ratio depends on the mean square error (\(mse_{app}\)) between the original and reconstructed face. \(mse_{app}\) and PSNR is calculated according to:

where, h and v are the horizontal and vertical resolution of the frames, respectively. \(I_{j}\) is the original test face and \(\bar{I}_{j}\) is the reconstructed face.

where 255 is the maximum value for the pixel intensity. The results are presented in Table 2. A higher PSNR value means that there is a low difference in pixel intensity between the original and reconstructed faces.

5 Discussion

The qualitative and quantitative results show the validity of proposed aPCA-based approach. The qualitative results are presented in the form of reconstructed face frames, whereas quantitative results yield high PSNR and low difference in facial feature points. The PSNR values greater than 40 is considered good [25] and our experiment yields between 48–75 PSNR values. Similarly, shape-based analysis give good results with maximum difference of 2.34 (pixels).

We plan to use the reconstructed face of a wearer in virtual reality (VR) based video teleconferencing application. We will use our embodied telepresence agent (ETA) [14] along with a VR headset for teleconferencing purpose. The application scenario is shown in Fig. 10 and will be considered in future work. In this work, we have used two half frames for face reconstruction. However, this work could be simplified with just one half frame information with some compromise on results.

We have cut a portion of a cardboard for an eye camera. The eye camera is mounted smartly which does not affect significantly the virtual reality experience. The eye camera is mounted externally for this work but this work can be extended by integrating small camera inside VR displays, for example in other works, such as [1, 27]. Furthermore, we have developed our own wearable setup for cameras. However, initially, we have mounted two cameras on google cardboard for testing purpose as shown in Fig. 11. There were two issue with this setup, (i) increase in weight and (ii) normalization issue between training and testing video sequences. These issues will be considered in future work.

This version of the work uses manual calibration step. In our future work, we plan to automate the calibration step by developing feature point localization technique. Furthermore, a problem with PCA is that it is very sensitive to light. To counter this problem, we are working to use edge feature information [32]. The half frame image will be converted to an edge map using a sobel filter (see Fig. 12) and the magnitude values of an edge image will be used to train the full face. During testing phase, the half frame edge map will be used to reconstruct the full face of a person. This work is in progress and will be considered in future publication.

6 Conclusion

We have proposed a novel technique for face reconstruction, when face is occluded by virtual reality (VR) headset. Full face reconstruction is based on asymmetrical principal component analysis (aPCA) framework. The aPCA framework exploits lips and eye appearance information for full face reconstruction. We have estimated the upper face expressions by partial eye information and lower face expression by lips information. This version uses appearance information for face modeling. In future, we plan to use shape based (or feature based) technique for full face reconstruction.

References

Fove eyetracker, January 2016. http://www.getfove.com/

Bentsianov, B., Blitzer, A.: Facial anatomy. Clin. Dermatol. 22(1), 3–13 (2004)

Burgos-Artizzu, X.P., Fleureau, J., Dumas, O., Tapie, T., LeClerc, F., Mollet, N.: Real-time expression-sensitive hmd face reconstruction. In: SIGGRAPH Asia 2015 Technical Briefs, p. 9. ACM (2015)

Chakrabarti, A., Rajagopalan, A., Chellappa, R.: Super-resolution of face images using kernel pca-based prior. IEEE Trans. Multimedia 9(4), 888–892 (2007)

Cristinacce, D., Cootes, T.F.: Feature detection and tracking with constrained local models. In: BMVC, vol. 2, p. 6 (2006)

Ekenel, H.K., Stiefelhagen, R.: Why is facial occlusion a challenging problem? In: Tistarelli, M., Nixon, M.S. (eds.) ICB 2009. LNCS, vol. 5558, pp. 299–308. Springer, Heidelberg (2009). doi:10.1007/978-3-642-01793-3_31

VR Headset, January 2016. http://www.wareable.com/headgear/the-best-ar-and-vr-headsets

Hoberman, P., Krum, D.M., Suma, E.A., Bolas, M.: Immersive training games for smartphone-based head mounted displays. In: 2012 IEEE Virtual Reality Short Papers and Posters (VRW), pp. 151–152. IEEE (2012)

Hosoi, T., Nagashima, S., Kobayashi, K., Ito, K., Aoki, T.: Restoring occluded regions using fw-pca for face recognition. In: 2012 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pp. 23–30. IEEE (2012)

Hupont, I., Baldassarri, S., Cerezo, E.: Facial emotional classification: from a discrete perspective to a continuous emotional space. Pattern Anal. Appl. 16(1), 41–54 (2013)

Hwang, B.W., Lee, S.W.: Reconstruction of partially damaged face images based on a morphable face model. IEEE Trans. Pattern Anal. Mach. Intell. 25(3), 365–372 (2003)

Jäckel, D.: Head-mounted displays. In: Proceedings of RTMI, pp. 1–8 (2013)

Javidi, B., Okano, F.: Three-Dimensional Television, Video, and Display Technologies. Springer, Heidelberg (2002)

Khan, M., Li, H., Rehman, S.: Telepresence mechatronic robot (tebot): toward the design and control of socially interactive bio-inspired system. J. Intell. Fuzzy Syst., Special Issue: Multimedia in Technology Enhanced Learning (2016)

Kimura, S., Fukuomoto, M., Horikoshi, T.: Eyeglass-based hands-free videophone. In: Proceedings of the 2013 International Symposium on Wearable Computers, pp. 117–124. ACM (2013)

Kuo, C.J., Lin, T.G., Huang, R.S., Odeh, S.F.: Facial model estimation from stereo/mono image sequence. IEEE Trans. Multimedia 5(1), 8–23 (2003)

Leonardis, A., Bischof, H.: Robust recognition using eigenimages. Comput. Vis. Image Underst. 78(1), 99–118 (2000)

Li, H., Trutoiu, L., Olszewski, K., Wei, L., Trutna, T., Hsieh, P.L., Nicholls, A., Ma, C.: Facial performance sensing head-mounted display. ACM Trans. Graph. (Proceedings SIGGRAPH 2015) 34(4), 47 (2015)

Lin, D., Tang, X.: Quality-driven face occlusion detection and recovery. In: IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2007, pp. 1–7. IEEE (2007)

Liu, L., et al.: Vibrotactile rendering of human emotions on the manifold of facial expressions. J. Multimedia 3(3), 18–25 (2008)

McCloy, R., Stone, R.: Virtual reality in surgery. BMJ 323(7318), 912–915 (2001)

Mo, Z., Lewis, J.P., Neumann, U.: Face inpainting with local linear representations. In: BMVC, pp. 1–10 (2004)

Reinhard Friedl, M.: Virtual reality and 3d visualizations in heart surgery education. In: The Heart Surgery Forum, vol. 2001, p. 03054 (2002)

Schild, J., LaViola, J., Masuch, M.: Understanding user experience in stereoscopic 3d games. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pp. 89–98. ACM (2012)

Sderstrm, U.: Very low bitrate video communication: a principal component analysis approach. Ph.D. thesis, Ume Unviersity (2008)

Söderström, U., Li, H.: Asymmetrical principal component analysis: theory and its applications to facial video coding. Effective Video Coding for Multimedia Applicationss, Dr. Sudhakar Radhakrishnan (ed.) (2011). InTech, ISBN: 978-953-307-177-0

Stengel, M., Grogorick, S., Eisemann, M., Eisemann, E., Magnor, M.A.: An affordable solution for binocular eye tracking and calibration in head-mounted displays. In: Proceedings of the 23rd Annual ACM Conference on Multimedia Conference, pp. 15–24. ACM (2015)

Storer, M., Roth, P.M., Urschler, M., Bischof, H.: Fast-robust PCA. In: Salberg, A.-B., Hardeberg, J.Y., Jenssen, R. (eds.) SCIA 2009. LNCS, vol. 5575, pp. 430–439. Springer, Heidelberg (2009)

Tu, C.-T., Lien, J.-J.J.: Facial occlusion reconstruction: recovering both the global structure and the local detailed texture components. In: Mery, D., Rueda, L. (eds.) PSIVT 2007. LNCS, vol. 4872, pp. 141–151. Springer, Heidelberg (2007)

Soderstrom, U., Li, H.: Asymmetrical principal component analysis for video coding. Electron. Lett. 44, 276–277 (2008)

V.R. Users, January 2016. http://www.statista.com/statistics/426469/active-virtual-reality-users-worldwide/

Vincent, O., Folorunso, O.: A descriptive algorithm for sobel image edge detection. In: Proceedings of Informing Science & IT Education Conference (InSITE), vol. 40, pp. 97–107 (2009)

Wall, M.E., Rechtsteiner, A., Rocha, L.M.: Singular value decomposition and principal component analysis. In: Berrar, D.P., Dubitzky, W., Granzow, M. (eds.) A Practical Approach to Microarray Data Analysis, pp. 91–109. Springer, US (2003)

Waters, K.: A muscle model for animation three-dimensional facial expression. ACM SIGGRAPH Comput. Graph. 21, 17–24 (1987). ACM

Whissell, C.: The dictionary of affect in language. Emot. Theor. Res. Exp. 4(113–131), 94 (1989)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Khan, M.S.L., Réhman, S.U., Söderström, U., Halawani, A., Li, H. (2016). Face-Off: A Face Reconstruction Technique for Virtual Reality (VR) Scenarios. In: Hua, G., Jégou, H. (eds) Computer Vision – ECCV 2016 Workshops. ECCV 2016. Lecture Notes in Computer Science(), vol 9913. Springer, Cham. https://doi.org/10.1007/978-3-319-46604-0_35

Download citation

DOI: https://doi.org/10.1007/978-3-319-46604-0_35

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-46603-3

Online ISBN: 978-3-319-46604-0

eBook Packages: Computer ScienceComputer Science (R0)