Abstract

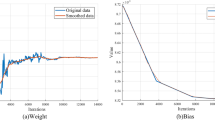

Model combination nearly always improves the performance of machine learning methods, and averaging the predictions of multiple models further decreases the error rate. To obtain multiple high-quality models more quickly, this article proposes a novel deep network architecture called the “Fissionable Deep Neural Network” (FDNN). Instead of just adjusting the weights in a fixed-topology network, FDNN contains multiple branches with shared parameters and multiple Softmax layers. During training, the model divides until it becomes multiple separate models. FDNN not only reduces computational cost but also overcomes the interference in convergence between branches and gives branches that have fallen into a poor local optimum an opportunity to re-learn. It improves the performance of neural networks on supervised learning, as demonstrated on the MNIST and CIFAR-10 datasets.
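The idea of several branches that share parameters, each ending in its own Softmax layer, with the branch predictions averaged, can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the single shared ReLU layer, the layer sizes, the random weights, and the function names (`branch_predictions`, `averaged_prediction`) are all assumptions made for illustration, and the fission step itself is not shown because the abstract does not specify it.

```python
import numpy as np

def softmax(logits):
    # Subtract the row-wise max for numerical stability.
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def branch_predictions(x, shared_w, branch_ws):
    # Shared trunk (assumed): one ReLU layer whose weights every branch reuses.
    hidden = np.maximum(x @ shared_w, 0.0)
    # Each branch has its own output weights and its own softmax layer.
    return [softmax(hidden @ w) for w in branch_ws]

def averaged_prediction(x, shared_w, branch_ws):
    # Model combination: average the per-branch class probabilities.
    return np.mean(branch_predictions(x, shared_w, branch_ws), axis=0)

# Hypothetical sizes: 4 samples, 8 features, 16 hidden units, 10 classes, 3 branches.
rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))
shared_w = rng.normal(size=(8, 16))
branch_ws = [rng.normal(size=(16, 10)) for _ in range(3)]

probs = averaged_prediction(x, shared_w, branch_ws)
predicted_classes = probs.argmax(axis=1)
```

Because every branch outputs a valid probability distribution, their average is one as well, so `probs` can be used directly for classification. The shared trunk is computed once per input, which is where a multi-branch design of this kind would save computation compared with running fully separate models.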

Copyright information

© 2016 Springer International Publishing AG

About this paper

Cite this paper

Tan, D., Wu, J., Zheng, H., Yin, Y., Liu, Y. (2016). Fissionable Deep Neural Network. In: Hirose, A., Ozawa, S., Doya, K., Ikeda, K., Lee, M., Liu, D. (eds) Neural Information Processing. ICONIP 2016. Lecture Notes in Computer Science, vol 9950. Springer, Cham. https://doi.org/10.1007/978-3-319-46681-1_44

DOI: https://doi.org/10.1007/978-3-319-46681-1_44

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-46680-4

Online ISBN: 978-3-319-46681-1

eBook Packages: Computer Science, Computer Science (R0)