Abstract

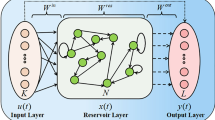

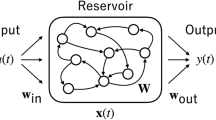

The echo state network is a framework for temporal data processing tasks such as recognition, identification, classification, and prediction. In its dynamical reservoir, the network generates spatiotemporal dynamics that reflect the history of an input sequence; in its readout, it constructs a mapping from the input sequence to the output sequence. In a conventional dynamical reservoir consisting of sparsely connected neuron units, more neurons are required to realize longer time delays. In this study, we introduce dynamic synapses into the dynamical reservoir to control its nonlinearity and time constant. We apply the echo state network with dynamic synapses to several benchmark tasks. The results show that dynamic synapses are effective in improving performance on time series prediction tasks.
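
For orientation, the reservoir-plus-readout structure described in the abstract can be sketched as follows. This is a minimal conventional echo state network, not the authors' model: it omits dynamic synapses, whose equations are not given here. The tanh units, the reservoir size, the connection density, the spectral radius, the ridge parameter, the sine-wave task, and all function names are assumptions chosen only for illustration.

```python
import numpy as np

def make_reservoir(n_units=100, density=0.1, spectral_radius=0.9, seed=0):
    """Build a sparse random reservoir (all parameter values are assumptions)."""
    rng = np.random.default_rng(seed)
    W = rng.uniform(-1.0, 1.0, (n_units, n_units))
    W *= rng.random((n_units, n_units)) < density      # keep ~10% of connections
    W *= spectral_radius / np.max(np.abs(np.linalg.eigvals(W)))  # rescale
    W_in = rng.uniform(-0.5, 0.5, n_units)              # input weights
    return W_in, W

def run_reservoir(u, W_in, W):
    """Drive the reservoir with a scalar input sequence and collect its states."""
    x = np.zeros(W.shape[0])
    states = np.empty((len(u), W.shape[0]))
    for t, u_t in enumerate(u):
        x = np.tanh(W @ x + W_in * u_t)                 # state depends on input history
        states[t] = x
    return states

def train_readout(states, targets, ridge=1e-6):
    """Fit linear readout weights by ridge regression; only these are trained."""
    A = states.T @ states + ridge * np.eye(states.shape[1])
    return np.linalg.solve(A, states.T @ targets)

# Toy task (an assumption, not one of the paper's benchmarks):
# predict the next sample of a sine wave.
signal = np.sin(0.2 * np.arange(1200))
u, y = signal[:-1], signal[1:]

W_in, W = make_reservoir()
states = run_reservoir(u, W_in, W)

washout, split = 100, 800                               # discard the initial transient
W_out = train_readout(states[washout:split], y[washout:split])

pred = states[split:] @ W_out
nrmse = np.sqrt(np.mean((pred - y[split:]) ** 2) / np.var(y[split:]))
print(f"test NRMSE: {nrmse:.2e}")
```

In this sketch the recurrent weights stay fixed after initialization and only the readout is fitted, so the available memory depends on the reservoir itself. That is the limitation the abstract points to when it notes that a conventional reservoir needs more neurons to realize longer time delays.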

Acknowledgments

This work was partially supported by JSPS KAKENHI Grant Numbers 16K00326 (GT) and 26280093 (KA).

Copyright information

© 2016 Springer International Publishing AG

About this paper

Cite this paper

Mori, R., Tanaka, G., Nakane, R., Hirose, A., Aihara, K. (2016). Computational Performance of Echo State Networks with Dynamic Synapses. In: Hirose, A., Ozawa, S., Doya, K., Ikeda, K., Lee, M., Liu, D. (eds) Neural Information Processing. ICONIP 2016. Lecture Notes in Computer Science, vol 9947. Springer, Cham. https://doi.org/10.1007/978-3-319-46687-3_29

DOI: https://doi.org/10.1007/978-3-319-46687-3_29

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-46686-6

Online ISBN: 978-3-319-46687-3

eBook Packages: Computer Science, Computer Science (R0)