Abstract

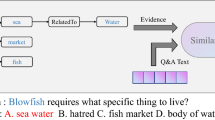

Recognizing Textual Entailment (RTE) plays an important role in NLP applications such as question answering and information retrieval. Most previous work either trains classifiers on elaborately designed features and lexical similarity, or brings distant supervision and reasoning techniques into the RTE task. However, these approaches are hard to generalize because of the complexity of feature engineering, and they are prone to cascading errors and data sparsity. To alleviate these problems, some work uses LSTM-based recurrent neural networks with word-by-word attention to recognize textual entailment. Nevertheless, this work does not make full use of a knowledge base (KB) to help reasoning. In this paper, we propose a deep neural network architecture called Multi-task Knowledge Assisted LSTM (MKAL), which aims to conduct implicit inference with the assistance of a KB and uses predicate-to-predicate attention to detect entailment between predicates. In addition, our model applies a multi-task architecture to further improve performance. The experimental results show that our proposed method achieves competitive results compared to previous work.
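As a rough illustration of the two ingredients named above, the following sketch shows generic dot-product soft attention between one hypothesis-side vector and several premise-side vectors, together with a weighted multi-task loss. It is not the MKAL formulation, which the abstract does not specify: the scoring function, the toy vectors, the function names, and the `aux_weight` parameter are all assumptions made for illustration.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def attend(query, keys):
    """Soft attention: score each key against the query, then average the keys.

    Dot-product scoring is an assumption; the paper may score differently.
    """
    weights = softmax([dot(query, k) for k in keys])
    dim = len(keys[0])
    context = [sum(w * k[i] for w, k in zip(weights, keys)) for i in range(dim)]
    return weights, context

def multitask_loss(main_loss, aux_loss, aux_weight=0.5):
    """Main-task loss plus a weighted auxiliary loss (aux_weight is hypothetical)."""
    return main_loss + aux_weight * aux_loss

# Toy vectors standing in for premise and hypothesis predicate representations.
premise_predicates = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
hypothesis_predicate = [1.0, 0.0]
weights, context = attend(hypothesis_predicate, premise_predicates)
```

Here the first and third premise vectors receive equal, larger weights because they overlap with the hypothesis vector, while the second receives the smallest weight. In a trained model the vectors would come from the LSTM states rather than being fixed by hand.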

Acknowledgements

We would like to thank our three anonymous reviewers for their helpful advice on various aspects of this work. This research was supported by the National Key Basic Research Program of China (No. 2014CB340504) and the National Natural Science Foundation of China (Nos. 61375074 and 61273318). The contact authors for this paper are Baobao Chang and Zhifang Sui.

Copyright information

© 2016 Springer International Publishing AG

About this paper

Cite this paper

Sha, L., Li, S., Chang, B., Sui, Z. (2016). Recognizing Textual Entailment via Multi-task Knowledge Assisted LSTM. In: Sun, M., Huang, X., Lin, H., Liu, Z., Liu, Y. (eds) Chinese Computational Linguistics and Natural Language Processing Based on Naturally Annotated Big Data. NLP-NABD CCL 2016. Lecture Notes in Computer Science, vol 10035. Springer, Cham. https://doi.org/10.1007/978-3-319-47674-2_24

DOI: https://doi.org/10.1007/978-3-319-47674-2_24

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-47673-5

Online ISBN: 978-3-319-47674-2

eBook Packages: Computer Science, Computer Science (R0)