Abstract

In this paper, we propose a method to recognize the 6DoF pose of multiple objects simultaneously. One good solution to recognize them is applying a Hypothesis Verification (HV) algorithm. This type of algorithm evaluates consistency between an input scene and scene hypotheses represented by combinations of object candidates generated from the model based matching. Its use achieves reliable recognition because it maximizes the fitting score between the input scene and the scene hypotheses instead of maximizing the fitting score of an object candidate. We have developed a more reliable HV algorithm that uses a novel cue, the naturalness of an object’s layout (its physical reasoning). This cue evaluates whether the object’s layout in a scene hypothesis can actually be achieved by using simple collision detection. Experimental results show that using the physical reasoning have improved recognition reliability.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

3D object recognition and 6DoF pose estimation from depth data is one of the fundamental techniques for scene understanding, bin-picking for industrial robots, and semantic grasping for partner robots.

A Model-based Matching (MM) method is generally used to recognize 6DoF of target objects. The MM detects 6DoF pose parameters having high fitting score exceeding a predefined threshold.

However, this method sometimes detects false positives on surfaces having a high fitting score. The reasons are the occurrence of a pseudo surface shared by multiple objects and the presence of objects having parts similar to those of the target object. This is the essential problem of the MM approaches because they detect objects on basis of local consistency such as the surface of object model and the partial regions of the input scene data.

One good solution to solve this problem is applying the Hypothesis Verification (HV) algorithm shown in Fig. 1. HV is an algorithm for scene understanding that uses the MM algorithm as a module for generating object candidates. This algorithm simultaneously recognizes the 6DoF poses of multiple objects by matching an input scene and the scene hypotheses represented by combinations of object candidates generated by the MM with low thresholds. Using this algorithm enables reliable recognition. This is because it maximizes the consistency calculated from global information, such as consistency between the input scene and the scene hypothesis, instead of maximizing the local consistency.

Using the HV algorithm helps to achieve reliable recognition; however, it sometimes detects spatially overlapping objects. This is because it evaluates 2.5D consistency, the similarity of scene point cloud and rendered scene hypothesis (2.5D point cloud or depth image), but it does not consider volumetric information behind point cloud data.

In this research, we have enhanced the reliability of the HV algorithm by using a novel cue, the naturalness of an object’s layout (its physical reasoning). This cue evaluates whether the object’s layout in the scene hypothesis can actually be achieved. Our HV algorithm evaluates not only the shape consistency between an input scene and a scene hypothesis but also the physical reasoning of the scene hypothesis.

The contributions of this work are the following.

-

1.

We developed a method to detect multiple 3D objects in highly cluttered scenes using the HV algorithm, which uses two types of cues, the physical reasoning of the object’s layout and the shape consistency.

-

2.

We developed and propose a simple and fast collision detector using the Sphere Set Approximation method [16].

-

3.

We demonstrated that, when using a well-known dataset, our method outperforms the state-of-the-art HV method from the viewpoint of recognition performance and processing time.

2 Related Work

In this section, we will introduce the algorithm of the HV method and various kinds of model matching methods as an object hypothesis generator of the HV method.

Model matching: This method detects the 6DoF parameter (object candidate) of each object in the scene by matching features obtained from each object model. The model matching role in HV is to detect object candidate with no undetected positives, but where false positives are allowed. The HV method does this by using various model matching approaches as the object candidate generator [1].

There are various kind of features for 6DoF estimation. 3D feature generated from local point cloud around a keypoint [6, 14] is generally used. SHOT [14] feature encodes the distribution of surface normal. RoPS [6] feature encodes the statistics (central moments and Shannon entropy) of projected local point clouds onto 2D planes. When the shape of target object is smooth or flat, it is effective extending the region for feature description. OUR-CVFH [2] feature encodes the semi-global surface structures, such as smooth segments. Using RGB information helps to enhance the uniqueness of the feature. The methods [5, 15] use such multimodal information. Recently, learned features such as those using decision forest [4] and deep learning [8] have been proposed, and they can deliver robust detection result.

HV method: This approach simultaneously recognize multiple objects by calculating consistency between the scene hypotheses and the input scene. The scene hypothesis is generated by combining object candidates obtained by the model matching method. The HV method regards the multiple object recognition problem as a combinatorial optimization problem of object candidates. The important thing here is what kind of information is suitable for calculating scene consistency. Methods [7, 10, 13] used depth data in order to evaluate shape consistency. Papazov and Burschka [11] and Aldoma et al. [3] minimizes the number of scene points described by multiple object candidates. Aldoma et al. [3] also used similarity of normal vector direction in addition to the depth similarity. The method [1] have used not only shape information but also color information if it is available.

3 Proposal of HV Method Using Shape Consistency and Physical Reasoning

3.1 Overview

The proposed method consists of main two modules: a hypothesis generation module and a verification module. It’s same as general HV algorithm described by Fig. 1.

First of all, in the hypothesis generation module, object hypotheses \(H=\{h_{i}, \ldots , h_{n}\}\) are generated by using the model matching module. All object models \(M=\{M_{i}, \ldots ,M_{m}\}\) stored in the library are matched to the input point cloud data S. Object hypothesis \(h_i\) is given by the pair \((M_i, T_i)\), where \(T_i\) represents the 6DoF parameter of object \(M_i\) in the scene S. The scene hypothesis generator generates scene hypothesis hs(X) by combining a number of object candidates. X is a bit string with length of n. The bit indicating 1 means a corresponding object candidate is selected as the scene hypothesis.

In the verification module, the similarity between S and hs(X) is calculated. The proposed method solves the combinatorial optimization problem, which explores the scene hypothesis with the highest similarity value by selecting arbitrary bits from X. Therefore, the size of the solution space is \(2^n\). The proposed method optimizes a fitness function consisting of the physical reasoning and the shape consistency. Each component is explained in the following subsections.

3.2 Physical Reasoning: \(f_P(X)\)

This term, shown in Eq. 1, evaluates the physical reasoning of scene hypothesis hs(X). In particular, this term evaluates whether an intersection occurs in the object hypotheses of hs(X).

In this equation, function \(C(h_i, h_j)\) is a collision detector. If an intersection occurs between the object hypotheses \(h_i\) and \(h_j\), this function returns 1. The term R indicates all combinations of valid object hypotheses (without any identical pairs occurring) in hs(X).

In order to evaluate intersections occurring in object hypotheses, we used collision models for each object. Figure 2 shows the proposed collision models. They comprise two types of spheres, outer spheres (red) and inner spheres (gray). The outer spheres represent circumscribed spheres of the object shapes; inner spheres represent sets of spheres approximating object shapes.

Using these models enables fast collision detection, because a sphere is the simplest primitive for computing collisions. If two spheres intersect, the following condition (the distance between the centers of two spheres) < (sum of sphere radii) is satisfied.

Collisions in paired object candidates are detected by carrying out the following two sequential steps.

Step 1. Detecting collisions in outer spheres

Step 2. Detecting collisions in the inner spheres of \(h_i\) and \(h_j\)

Step 2 is skipped if no intersections occur in the outer spheres, but is executed otherwise. Using such a two-stage decision enables fast calculation to be achieved even if scene hypotheses include many object candidates. If intersections occur in spheres, the paired object candidates are regarded as unnatural. This means that the paired object candidates \(h_i\) and \(h_j\) are spatially overlapped.

Figure 3 shows the result of collision detection. (a) shows the overview of the input scene. (b) shows the collision models of a scene hypothesis with 13 object candidates. Spatial intersection did not occur with the white models, but it did occur with the colored models. In this case, this scene hypothesis has the low physical reasoning value \(f_P(X)\). (c) shows the actual object candidates of this scene hypothesis. Green numbers indicates the index of each candidate.

In order to generate inner spheres, applying the method proposed in [16] is one practical solution. However, the inner spheres used in the work reported in this paper are manually generated.

3.3 Shape Consistency: \(f_S(X)\)

This term, shown in Eq. 2, evaluates the shape consistency of the hypothesis scene hs(X). In particular, an image similarity between the depth image of input scene S and that of hs(X) is calculated.

Here, \( I_S(i)\) and \(I_{hs}(i)\) represent the i th pixel value of the depth image of S and hs(X). Value N represents the number of pixels that have a depth value of either \( I_S(i)\) or \(I_{hs}(i)\). Function \(Sim( I_S(i), I_{hs}(i) )\) returns

where \(th_{d}\) is the depth similarity threshold. This value is adjusted by considering the quality of input depth data.

3.4 Optimization

The proposed method solves the combinatorial optimization that maximizes function F(X) by modifying bit string X. The cost function is defined as Eq. 4.

where w means the weighting value of two terms. The effect of value w on recognition performance is described in Sect. 4.2. We solved this optimization by using the Genetic Algorithm (GA). The chromosome is defined as the bit string X and the fitness value is the value of cost function F(X).

The GA parameters we used in experiments are as follows: The number of population was 200, Crossover rate was 97 %, Mutation rate was 3 %. When crossover occurred, then two individuals are generated by swapping randomly chosen bits of the parent. When mutation occurred, then a new individual is generated by switching a randomly chosen bit of a randomly chosen chromosome. Initial individuals are generated by turning on randomly chosen bits (valid bits). The optimal number of valid bits are experimentally decided (see Sect. 4.2).

4 Experiments

4.1 Dataset

We evaluated the recognition performance of the proposed HV algorithm. In order to evaluate the method’s versatility, two kinds of datasets (Laser Scanner and Kinect) with different data quality are used.

Laser Scanner [9]: This dataset consists of 50 scenes, which are captured by a laser scanner, and five object models. Each object in the scene has ground truth data as the transformation matrix.

Kinect Dataset [3]: This dataset consists of 50 scenes, which are captured by a Kinect sensor, and 35 CAD models of household objects. This dataset also has ground truth data as the transformation matrix.

In order to evaluate the HV performance while ignoring the performance of the model matching method as the object candidate generator, we used artificially generated object candidates (test data elements). Test data elements were prepared by combining ground truth and automatically generated object candidates that represent randomly chosen object models and ground truth transformations which are affected by noise for the translation in the range of ±10 [mm] and ±5 [cm] for Laser scanner dataset and Kinect dataset). As for the Laser scanner dataset, we selected 38 scene which do not include model “rhino” for generating test data elements. Because full 3D surface model of it is not provided. We generate one test data element per scene. As for the Kinect dataset, we selected especially complicated scene data including 12 closely placed objects (see Fig. 3). We prepared 30 test data elements for this scene.

4.2 Parameters

There are some parameters that affect recognition performance. In this section, we discuss the optimal value of each parameter.

Weighted value w : This is the most significant parameter for recognition performance. In the experiment we conducted for this, we explored the optimal w value by iterating a recognition test while changing the value w in the range [0,1]. The recognition performance for the Kinect dataset is shown in Fig. 4. This figure shows recall/precision values and the number of undetected/false positives for each value w. In our experiment, recall is computed by (# of detected true positive) / (# of all true positive), precision rate is computed by (# of detected true positive) / (# of detected object candidate).

\(w = 0\): In this case, the proposed HV algorithm does not take into account the physical reasoning of the object’s layout. As a result, many false positives were detected. Specifically, about 15.5 objects per scene were detected. (Each scene contained 12 objects.)

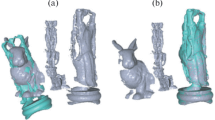

\(w > 0\): In this case, the proposed HV algorithm takes the physical reasoning into account. The number of false positives per scene was less than 1. However, when the value w was larger than 0.4, the recall rate was decreased. A typical recognition result for this case is shown in Fig. 5.

In this figure, (a) shows the recognition result obtained using \(w=0.7\), and (b) shows the ground truth (the correct scene hypothesis). In this case, there were two objects that were not detected. The common point these objects share is that they appear small in a depth image, which means that the image similarity would not be improved much if they were detected. As a result, they were frequently not detected.

The result shown in Fig. 4 seems to indicate that \(w = 0.3\) is the optimal value.

Depth threshold \(th_{d}\): This parameter is used for calculating the shape consistency \(f_S(X)\). This parameter should be adjusted by taking into account the accuracy of the depth data acquired from the sensor. In the experiment we conducted for this, the parameters we used were 1.0 mm for the Laser Scanner dataset and 1.5 mm for the Kinect dataset.

Valid bits for initial bit strings: One important parameter is the number of valid bits on the initial bit string. In the experiment we conducted for this, we investigated the recognition performance (F-measure and Processing time) while changing the valid bit ratio (indicated as 1) on the initial bit strings. F-measure is computed by (2 \(\cdot \) precision \(\cdot \) recall)/(precision \(+\) recall). Results are shown in Fig. 6.

The figure results confirm that the processing time lineally increased when the valid bit ratio was increased. When the valid bit ratio is larger than 5 %, the recognition performance reached its peak. Therefore, we used 5 % as the ratio for the experiments described below.

4.3 Recognition Performance

In order to evaluate the performance of the proposed method, we carried out a recognition experiment for two datasets. In these we compared the following three methods.

-

1.

GHV [3]

-

2.

Proposed HV (S)

-

3.

Proposed HV (S+P)

To describe these methods specifically, GHV is the HV algorithm proposed by Aldoma et al. at ECCV2012, HV (S) is the proposed HV algorithm using only shape consistency for evaluating the effectiveness of the proposed physical reasoning, and HV (S+P) is the proposed HV algorithm using shape consistency and physical reasoning with \(w = 0.3\). All three methods were implemented by using the Point Cloud Library [12]. All experiments were performed on a desktop computer with an Intel Core i7-6700 3.40 GHz CPU and 16 GB RAM.

Example recognition results obtained with the proposed method are shown in Fig. 7. Initial object candidates are shown in (a). In (a), 100 objects rendered as a surface are illustrated; the red points represent input point clouds. (b) shows recognition result. A scene hypotheses having maximum score are shown. Overview of the input scene is shown in Fig. 3(a).

Table 1 shows the recognition performance for the Laser Scanner dataset. For the method HV(S), its precision rate is lower than its recall rate because some object hypotheses are falsely detected. Such object hypotheses are spatially overlapped (see Fig. 8(a)). This problem has been solved by taking physical reasoning into account. As a result, the precision rate of HV(S+P) was higher than that of HV(S).

Table 2 shows the recognition performance for the Kinect dataset. This dataset poses greater problems than the Laser Scanner dataset because of its noise, point cloud sparseness, the number of objects in the scene (12 objects), and the size of the model library (35 objects). The best precision rate obtained was that for the GHV method, but the proposed method showed almost the same performance. In terms of processing time, however, the proposed method’s performance was four times faster than that of the GHV method.

5 Conclusion

We have developed and in this paper proposed a novel Hypothesis Verification (HV) algorithm that can recognize the 6 DoF parameters of multiple objects. Our method optimizes the layout of the objects in the scene by using two cues. One is the shape consistency for evaluating similarity between the input scene and the scene hypothesis representing a candidate for the object’s layout. The other is a novel cue, the layout’s physical reasoning. It evaluates the physical naturalness of the object’s layout by using simple and fast collision detection. We have demonstrated that using the physical reasoning is an effective way to improve precision rate. We also confirmed that the proposed method shows higher reliability than that of the state-of-the-art method.

References

Aldoma, A., Tombari, F., Prankl, J., Richtsfeld, A., di Stefano, L., Vincze, M.: Multimodal cue integration through hypotheses verification for RGB-D object recognition and 6DOF pose estimation. In: IEEE International Conference on Robotics and Automation (ICRA), pp. 2104–2111 (2013)

Aldoma, A., Tombari, F., Rusu, R.B., Vincze, M.: OUR-CVFH – oriented, unique and repeatable clustered viewpoint feature histogram for object recognition and 6DOF pose estimation. In: Pinz, A., Pock, T., Bischof, H., Leberl, F. (eds.) DAGM/OAGM 2012. LNCS, vol. 7476, pp. 113–122. Springer, Heidelberg (2012). doi:10.1007/978-3-642-32717-9_12

Aldoma, A., Tombari, F., Stefano, L., Vincze, M.: A global hypotheses verification method for 3D object recognition. In: Fitzgibbon, A., Lazebnik, S., Perona, P., Sato, Y., Schmid, C. (eds.) ECCV 2012. LNCS, vol. 7574, pp. 511–524. Springer, Heidelberg (2012). doi:10.1007/978-3-642-33712-3_37

Tejani, A., Tang, D., Kouskouridas, R., Kim, T.-K.: Latent-class hough forests for 3D object detection and pose estimation. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014. LNCS, vol. 8694, pp. 462–477. Springer, Heidelberg (2014). doi:10.1007/978-3-319-10599-4_30

Drost, B., Ilic, S.: 3D object detection and localization using multimodal point pair features. In: Second International Conference on 3D Imaging, Modeling, Processing, Visualization and Transmission (3DIMPVT), pp. 9–16 (2012)

Guo, Y., Sohel, F.A., Bennamoun, M., Lu, M., Wan, J.: Rotational projection statistics for 3D local surface description and object recognition. Int. J. Comput. Vis. 105(1), 63–86 (2013)

Hashimoto, M., Sumi, K., Usami, T.: Recognition of multiple objects based on global image consistency. In: Proceedings of the British Machine Vision Conference (BMVC), pp. 1–10 (1999)

Kehl, W., Milletari, F., Tombari, F., Ilic, S., Navab, N.: Deep learning of local RGB-D patches for 3D object detection and 6D pose estimation. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9907, pp. 205–220. Springer, Heidelberg (2016). doi:10.1007/978-3-319-46487-9_13

Mian, A.S., Bennamoun, M., Owens, R.: Three-dimensional model-based object recognition and segmentation in cluttered scenes. IEEE Trans. Pattern Anal. Mach. Intell. 28(10), 1584–1601 (2006)

Narayanan, V., Likhachev, M.: PERCH: perception via search for multi-object recognition and localization. In: IEEE International Conference on Robotics and Automation (ICRA), pp. 5052–5059 (2016)

Papazov, C., Burschka, D.: An efficient RANSAC for 3D object recognition in noisy and occluded scenes. In: Kimmel, R., Klette, R., Sugimoto, A. (eds.) ACCV 2010. LNCS, vol. 6492, pp. 135–148. Springer, Heidelberg (2011). doi:10.1007/978-3-642-19315-6_11

Rusu, R.B., Cousins, S.: 3D is here: Point cloud library (PCL). In: IEEE International Conference on Robotics and Automation (ICRA), pp. 1–4 (2011)

Sui, Z., Jenkins, O.C., Desingh, K.: Axiomatic particle filtering for goal-directed robotic manipulation. In: IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 4429–4436 (2015)

Tombari, F., Salti, S., Stefano, L.: Unique signatures of histograms for local surface description. In: Daniilidis, K., Maragos, P., Paragios, N. (eds.) ECCV 2010. LNCS, vol. 6313, pp. 356–369. Springer, Heidelberg (2010). doi:10.1007/978-3-642-15558-1_26

Tombari, F., Salti, S., di Stefano, L.: A combined texture-shape descriptor for enhanced 3D feature matching. In: 18th IEEE International Conference on Image Processing (ICIP), pp. 809–812 (2011)

Wang, R., Zhou, K., Snyder, J., Liu, X., Bao, H., Peng, Q., Guo, B.: Variational sphere set approximation for solid objects. Vis. Comput. 22(9–11), 612–621 (2006)

Acknowledgements

This work was partially supported by Grant-in-Aid for Scientific Research (C) 26420398.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Akizuki, S., Hashimoto, M. (2016). Physical Reasoning for 3D Object Recognition Using Global Hypothesis Verification. In: Hua, G., Jégou, H. (eds) Computer Vision – ECCV 2016 Workshops. ECCV 2016. Lecture Notes in Computer Science(), vol 9915. Springer, Cham. https://doi.org/10.1007/978-3-319-49409-8_51

Download citation

DOI: https://doi.org/10.1007/978-3-319-49409-8_51

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-49408-1

Online ISBN: 978-3-319-49409-8

eBook Packages: Computer ScienceComputer Science (R0)