Abstract

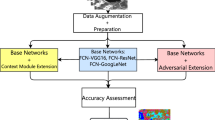

As machine learning techniques grow more complex, so does their demand for training data, and deep learning for image recognition is no exception. In some domains, training images are expensive or difficult to collect. When training images are scarce, researchers naturally turn to synthetic methods of generating new training imagery. We evaluate several training data augmentation methods for improving the performance of a Convolutional Neural Network (CNN) on fine-grained aircraft classification. We conclude that randomly scaling training imagery significantly improves performance. We also find that drawing random occlusions on top of training images confers a similar improvement in our problem domain. Further, these two effects appear to be approximately additive, with our results demonstrating a 45.7% reduction in test error relative to a baseline of basic horizontal flipping and cropping.
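The three augmentations named in the abstract (random scaling, random occlusion, and the baseline of horizontal flipping and cropping) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names, the scale range (0.7 to 1.3), the maximum occlusion size (30% of each side), the crop size (56 pixels), and the order of operations are all assumptions chosen for illustration, and the images are assumed to be `uint8` arrays of shape height x width x channels.

```python
import numpy as np


def random_scale(img, rng, low=0.7, high=1.3):
    """Rescale by a random factor (nearest neighbour), keeping the canvas size.

    The scale range is an illustrative assumption, not a value from the paper.
    """
    h, w = img.shape[:2]
    s = rng.uniform(low, high)
    new_h, new_w = max(1, int(round(h * s))), max(1, int(round(w * s)))
    # Map each output pixel back to its nearest source pixel.
    rows = np.minimum((np.arange(new_h) / s).astype(int), h - 1)
    cols = np.minimum((np.arange(new_w) / s).astype(int), w - 1)
    scaled = img[rows][:, cols]
    # Center-crop (if enlarged) or zero-pad (if shrunk) to the original size.
    out = np.zeros_like(img)
    ch, cw = min(h, new_h), min(w, new_w)
    sy, sx = (new_h - ch) // 2, (new_w - cw) // 2
    dy, dx = (h - ch) // 2, (w - cw) // 2
    out[dy:dy + ch, dx:dx + cw] = scaled[sy:sy + ch, sx:sx + cw]
    return out


def random_occlusion(img, rng, max_frac=0.3):
    """Draw one filled rectangle at a random position over a copy of the image.

    Rectangle size and the flat grey fill are illustrative assumptions.
    """
    h, w = img.shape[:2]
    oh = rng.integers(1, max(2, int(h * max_frac) + 1))
    ow = rng.integers(1, max(2, int(w * max_frac) + 1))
    y = rng.integers(0, h - oh + 1)
    x = rng.integers(0, w - ow + 1)
    out = img.copy()
    out[y:y + oh, x:x + ow] = rng.integers(0, 256)  # assumes uint8 pixels
    return out


def flip_and_crop(img, rng, crop=56):
    """Baseline: random horizontal flip followed by a random square crop."""
    h, w = img.shape[:2]
    if rng.random() < 0.5:
        img = img[:, ::-1]
    y = rng.integers(0, h - crop + 1)
    x = rng.integers(0, w - crop + 1)
    return img[y:y + crop, x:x + crop]


def augment(img, rng):
    """Apply scaling and occlusion on top of the flip-and-crop baseline."""
    return flip_and_crop(random_occlusion(random_scale(img, rng), rng), rng)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8)
    print(augment(image, rng).shape)
```

In a training loop, a function like `augment` would typically be applied afresh to each image every epoch, so the network rarely sees exactly the same pixels twice.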

Acknowledgements

This work was supported by the Air Force Research Lab’s Sensors Directorate, Layered Sensing Exploitation Division. The views expressed in this work are those of the authors, and do not reflect the official policy or position of the United States Air Force, Department of Defense, or the U.S. Government. This document has been approved for public release.

Copyright information

© 2016 Springer International Publishing AG

About this paper

Cite this paper

Mash, R., Borghetti, B., Pecarina, J. (2016). Improved Aircraft Recognition for Aerial Refueling Through Data Augmentation in Convolutional Neural Networks. In: Bebis, G., et al. Advances in Visual Computing. ISVC 2016. Lecture Notes in Computer Science, vol 10072. Springer, Cham. https://doi.org/10.1007/978-3-319-50835-1_11

DOI: https://doi.org/10.1007/978-3-319-50835-1_11

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-50834-4

Online ISBN: 978-3-319-50835-1

eBook Packages: Computer Science, Computer Science (R0)