Abstract

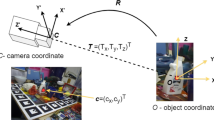

In this paper, we present a novel framework that drives automatic robotic grasping by matching camera-captured RGB-D data against 3D meshes on which grasping knowledge is pre-defined for each object type. The framework consists of two modules: (1) pre-defining grasping knowledge on 3D meshes for each type of object shape, and (2) automatic robotic grasping by matching RGB-D data with the pre-defined 3D meshes. In the first module, we scan 3D meshes of typical object shapes and pre-define grasping regions on the surface of each 3D shape; these regions serve as prior knowledge for guiding automatic robotic grasping. In the second module, for each RGB-D image captured by a depth camera, we recognize the 2D shape of the object with an SVM classifier and then segment the object from the background using the depth data. Next, we propose a new algorithm that matches the segmented RGB-D shape with the pre-defined 3D meshes to guide the robot's self-localization and grasping automatically. Our experimental results show that the proposed framework is particularly useful for guiding camera-based robotic grasping.
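To make the depth-based segmentation step more concrete, here is a minimal sketch. It assumes a simple seeded region-growing rule on depth values, which the abstract does not confirm as the authors' method; the function name `segment_by_depth`, the seed pixel, and the tolerance `tol` are hypothetical and chosen only for illustration.

```python
from collections import deque


def segment_by_depth(depth, seed, tol=0.05):
    """Grow a region from `seed` over 4-connected pixels.

    A neighbor joins the region when its depth differs from the pixel
    it was reached from by at most `tol` (an assumed threshold, in the
    same units as the depth map).
    """
    rows, cols = len(depth), len(depth[0])
    region = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and (nr, nc) not in region:
                if abs(depth[nr][nc] - depth[r][c]) <= tol:
                    region.add((nr, nc))
                    queue.append((nr, nc))
    return region


# Toy depth map (meters): a near object in front of a far background.
depth = [
    [2.0, 2.0, 2.0, 2.0],
    [2.0, 0.8, 0.8, 2.0],
    [2.0, 0.8, 0.9, 2.0],
    [2.0, 2.0, 2.0, 2.0],
]
mask = segment_by_depth(depth, seed=(1, 1), tol=0.15)
print(sorted(mask))
```

In a pipeline like the one described, the seed could plausibly come from the location where the classifier detects the object, and the resulting mask would select the RGB-D points passed on to the mesh-matching stage; both of these connections are assumptions rather than details stated in the abstract.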

Acknowledgment

The work described in this paper was supported by the Natural Science Foundation of China under Grant Nos. 61672273, 61272218, and 61321491, and by the Science Foundation for Distinguished Young Scholars of Jiangsu under Grant No. BK20160021.

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Zhou, Y. et al. (2017). Visual Robotic Object Grasping Through Combining RGB-D Data and 3D Meshes. In: Amsaleg, L., Guðmundsson, G., Gurrin, C., Jónsson, B., Satoh, S. (eds) MultiMedia Modeling. MMM 2017. Lecture Notes in Computer Science, vol 10132. Springer, Cham. https://doi.org/10.1007/978-3-319-51811-4_33

DOI: https://doi.org/10.1007/978-3-319-51811-4_33

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-51810-7

Online ISBN: 978-3-319-51811-4

eBook Packages: Computer Science, Computer Science (R0)