Abstract

The estimation of mixture models is a well-known approach for cluster analysis, and several criteria have been proposed to select the number of clusters. In this paper, we consider mixture models using side-information, which gives the constraint that some data in a group originate from the same source. The usual criteria are then not suitable. An EM (Expectation-Maximization) algorithm has previously been developed to jointly determine the model parameters and the data labelling for a given number of clusters. In this work we adapt three usual criteria, namely the Bayesian information criterion (BIC), the Akaike information criterion (AIC), and the normalized entropy criterion (NEC), so that they take the side-information into consideration. One simulated problem and two real data sets have been used to show the relevance of the modified versions and to compare the criteria. The efficiency of both the EM algorithm and the criteria, for selecting the right number of clusters while getting a good clustering, depends on the amount of side-information. Since side-information is mainly useful when the clusters overlap, the best criterion is the modified BIC.

Keywords

- Mixture Model

- Akaike Information Criterion

- Bayesian Information Criterion

- Gaussian Mixture Model

- Cluster Label

1 Introduction

The goal of clustering is to determine a partition rule of data such that observations in the same cluster are similar to each other. A probabilistic approach to clustering is based on mixture models [4]. It is considered that the sample is composed of a finite number of sub-populations which are all distributed according to an a priori parameterized probability density function [11]. If the parameter set of the mixture is known, then the unknown labels can be estimated and a partition of the sample is obtained. Thus the clustering result depends on the number of sub-populations and on the estimated parameters given this number. In the context of clustering via finite mixture models the problem of choosing a particular number of clusters is important.

In this work, the clustering problem is a semi-supervised learning problem using side-information, which is given as constraints between data points. These constraints indicate whether points were generated by the same source. Spatiotemporal data whose statistical properties do not depend on time are a good example of such a context. Since all the measures originating from the same position in space are realizations of the same random variable, it is known that they belong to the same cluster, and a constraint has to be imposed. An example is the temperature in a given town in a given month. The temperatures measured in different years can be considered as different realizations of the same random variable. A group is then formed by the values for a given town over the different years. Using the temperature measures in different towns, the aim of the clustering problem is to group similar towns, considering the values of the different years for one town as one group. Note that the number of samples in a group is not fixed. Clustering of time series is another example of clustering data with side-information. All points in a series have to be assigned to the same cluster and thus they form a group.

Mixture models using side-information have been introduced previously. In [14], an algorithm was proposed for determining the parameters of a Gaussian mixture model when the number of components in the mixture is known a priori. In [9], the clustering of processes was proposed for any mixture model, with a specific focus on obtaining a partition of the data. However, as in classical clustering problems, the main difficulty is to choose the number of clusters, since it is usually not known a priori.

In this paper, criteria are proposed for assessing the number of clusters in a mixture-model clustering approach with side-information. The side-information defines constraints that group some points together. Because the usual criteria do not consider the side-information in the data, they are not suitable. We propose to modify three well-known criteria and to compare the efficiency of each adapted version with that of the original version. We also compare the criteria with one another.

This paper is organized as follows. Section 2 introduces notations and describes the method for jointly determining the parameters of the mixture model and the cluster labels, with the constraint that some points are in the same group, for a number of clusters given a priori. Section 3 is devoted to the criteria. To take the side-information into consideration, adjustments have been made to the Bayesian information criterion (BIC), the entropy criterion (NEC), and the Akaike information criterion (AIC). The results using one example on simulated data and two examples on real data are reported in Sect. 4. A comparison with the classical criteria demonstrates the relevance of the modified versions. The efficiency of the criteria is compared and the influence of the group size is discussed. We conclude the paper in Sect. 5.

2 Clustering with Side-Information

2.1 Data Description

The objects to be classified are considered as a sample composed of N observations \(\mathcal {X}=\{s_n \}_{n=1..N}\). Each observation \(s_n\) is a group \(s_n=\{x_i^n \}_{i=1..|s_n|}\) which is a small subset of \(|s_n|\) independent data points that are known to belong to a single unknown class. An example of a data set with 9 observations is given in Fig. 1.

It is considered that the sample is composed of K sub-populations which are all distributed according to an a priori statistical parameterized law. The latent cluster labels are given by \(\mathcal {Z}=\{z_{n}\}_{n=1..N}\) where \(z_{n}=k\) means that the \(n^{th}\) realization originates from the \(k^{th}\) cluster, which is modeled by the statistical law with parameter \(\theta _{k}\). The \(|s_n|\) points \(x_i^n\) in the group n are assigned to the same cluster \(z_{n}\). Thus the constraints between data points are satisfied.

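In summary, the observation set and the cluster label set in the case of data with side-information are respectively given by:

$$\begin{aligned} \mathcal {X}=\{s_n \}_{n=1..N} \quad \text {with} \quad s_n=\{x_i^n \}_{i=1..|s_n|} \end{aligned}$$(1)

and

$$\begin{aligned} \mathcal {Z}=\{z_{n}\}_{n=1..N} \end{aligned}$$(2)

Let us define \(\mathcal {X'}\) as the same observation set with the side-information removed. \(\mathcal {X'}\) is composed of \(N'\) points with \(N'= \sum _{n=1}^N {|s_n|}\):

$$\begin{aligned} \mathcal {X'}=\{x_i^n \}_{i=1..|s_n|,\; n=1..N} \end{aligned}$$(3)

and the cluster label set \( \mathcal {Z'}\) is:

$$\begin{aligned} \mathcal {Z'}=\{z_i^n \}_{i=1..|s_n|,\; n=1..N} \end{aligned}$$(4)

in which \(z_{i}^n\) is not constrained to be equal to \(z_{j}^n\).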

2.2 Mixture Model

The mixture model approach to clustering [12] assumes that data are from a mixture of a number K of clusters in some proportions. The density function of a sample s is written as:

$$\begin{aligned} f(s|\pmb {\theta }_K) = \sum _{k=1}^{K} \alpha _k f_k(s|\theta _k) \end{aligned}$$(5)

where \(f_k({s|\theta _k})\) is the density function of the component k, \(\alpha _k\) is the probability that a sample belongs to class k: \(\alpha _k = P(Z=k)\), \(\theta _k\) is the model parameter value for the class k, and \(\pmb {\theta }_K\) is the parameter set \(\pmb {\theta }_K= \{\theta _k,\alpha _k\}_{k=1..K}\).

The maximum likelihood approach to the mixture problem, given a data set and a number of clusters, consists of determining the parameters that maximize the log-likelihood:

$$\begin{aligned} L_\mathcal {X}(\pmb {\theta }_K) = \log f(\mathcal {X}|K,\pmb {\theta }_K) \end{aligned}$$

in which \(\mathcal {X}\) is the data set, K is the number of clusters and \(\pmb {\theta }_K\) is the parameter set to be determined.

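Due to the constraint given by the side-information, and the independence of points within a group, we get

$$\begin{aligned} f_k(s_n|\theta _k) = \prod _{i=1}^{|s_n|} f_k(x_i^n|\theta _k) \end{aligned}$$

Then

$$\begin{aligned} L_\mathcal {X}(\pmb {\theta }_K) = \sum _{n=1}^{N} \log \left( \sum _{k=1}^{K} \alpha _k \prod _{i=1}^{|s_n|} f_k(x_i^n|\theta _k) \right) \end{aligned}$$(6)

This expression can be compared to the log-likelihood for the same observation set with the side-information removed

$$\begin{aligned} L_\mathcal {X'}(\pmb {\theta '}_K) = \sum _{n=1}^{N} \sum _{i=1}^{|s_n|} \log \left( \sum _{k=1}^{K} \alpha '_k f_k(x_i^n|\theta '_k) \right) \end{aligned}$$(7)

in which \(\mathcal {X'}\) is the data set and \(\pmb {\theta '}_K =\{\theta '_k,\alpha '_k\}_{k=1..K}\) is the parameter set.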
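To make the difference between the two log-likelihoods concrete, here is a minimal sketch for a mixture of univariate Gaussians. The function names, the one-dimensional setting and the toy values are assumptions made for illustration only; they are not taken from the paper. With side-information each group contributes one mixture term built from the product of its point densities, whereas without it each point contributes its own mixture term.

```python
import math

def normal_pdf(x, mean, var):
    """Density of a univariate Gaussian N(mean, var) at x."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)

def loglik_with_side_info(groups, alphas, means, variances):
    """Sum over groups of log(sum_k alpha_k * prod_i f_k(x_i))."""
    total = 0.0
    for group in groups:
        mix = 0.0
        for a, m, v in zip(alphas, means, variances):
            mix += a * math.prod(normal_pdf(x, m, v) for x in group)
        total += math.log(mix)
    return total

def loglik_without_side_info(groups, alphas, means, variances):
    """Same data with the grouping dropped: every point is its own observation."""
    singletons = [[x] for group in groups for x in group]
    return loglik_with_side_info(singletons, alphas, means, variances)

# Hypothetical toy data: three groups of unequal size.
groups = [[-1.1, -0.9, -1.3], [1.0, 1.2], [0.8, 1.1, 0.9, 1.4]]
params = dict(alphas=[0.5, 0.5], means=[-1.0, 1.0], variances=[0.5, 0.5])
print(loglik_with_side_info(groups, **params))
print(loglik_without_side_info(groups, **params))
```

The plain product of densities can underflow for large groups; a log-domain version would be preferable in practice.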

A common approach for optimizing the parameters of a mixture model is the expectation-maximization (EM) algorithm [3]. This is an iterative method that produces a set of parameters that locally maximizes the log-likelihood of a given sample, starting from an arbitrary set of parameters.

A modified EM algorithm has been proposed in [9]. Its aim is to take the side-information into consideration, as in [14], and to obtain a hard partition, as in [4]. It repeats an estimation step (E step), a classification step, and a maximization step (M step). The algorithm is as follows.

- Initialize the parameter set \(\pmb {\theta }_K^{(0)}\)

- Repeat from \(m=1\) until convergence:

  - E step: compute the posterior probability \(c_{nk}^{(m)}\) that the \(n^{th}\) group originates from the \(k^{th}\) cluster according to

    $$\begin{aligned} c_{nk}^{(m)}= & {} p(Z_n=k|s_n,\pmb {\theta }_K^{(m-1)}) = \frac{ \alpha _{k}^{(m-1)} \prod _{i=1}^{|s_n|} f_{k}( x_i^n| {\theta }_k^{(m-1)})}{\sum _{r=1}^{K} \alpha _{r}^{(m-1)} \prod _{i=1}^{|s_n|} f_{r}(x_i^n| {\theta }_r^{(m-1)})} \end{aligned}$$(8)

  - Classification step: determine the cluster label set \(\mathbf {Z}^{(m)}\): choose \(z_n^{(m)}=k\) corresponding to the largest value \(c_{nk}^{(m)}\)

  - M step: determine the parameters \( {\theta }_k^{(m)}\) that maximize the log-likelihood for each class k and compute the new value of \(\alpha _k^{(m)}\) for each k.
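The three steps above can be sketched as follows for univariate Gaussian components. This is a simplified illustration rather than the implementation of [9]: the function names, the initialization by user-supplied means, the handling of empty clusters, and the estimate of \(\alpha_k\) as the fraction of groups assigned to cluster k are all assumptions.

```python
import math

def log_normal_pdf(x, mean, var):
    """Log-density of a univariate Gaussian N(mean, var) at x."""
    return -0.5 * (math.log(2.0 * math.pi * var) + (x - mean) ** 2 / var)

def e_step(groups, alphas, means, variances):
    """Posterior c[n][k] that group n originates from cluster k."""
    posteriors = []
    for group in groups:
        # log(alpha_k) + sum_i log f_k(x_i): log of the E-step numerator
        logs = [math.log(a) + sum(log_normal_pdf(x, m, v) for x in group)
                for a, m, v in zip(alphas, means, variances)]
        top = max(logs)  # subtract the maximum before exponentiating
        weights = [math.exp(value - top) for value in logs]
        norm = sum(weights)
        posteriors.append([w / norm for w in weights])
    return posteriors

def classification_step(posteriors):
    """Hard label per group: index of the largest posterior."""
    return [max(range(len(row)), key=row.__getitem__) for row in posteriors]

def m_step(groups, labels, alphas, means, variances, min_var=1e-6):
    """Re-estimate each cluster from the points of the groups assigned to it."""
    new_alphas, new_means, new_vars = [], [], []
    for k in range(len(alphas)):
        points = [x for g, z in zip(groups, labels) if z == k for x in g]
        if not points:  # assumption: an empty cluster keeps its old parameters
            new_alphas.append(alphas[k])
            new_means.append(means[k])
            new_vars.append(variances[k])
            continue
        mean = sum(points) / len(points)
        var = sum((x - mean) ** 2 for x in points) / len(points)
        new_alphas.append(sum(1 for z in labels if z == k) / len(groups))
        new_means.append(mean)
        new_vars.append(max(var, min_var))  # floor avoids degenerate variances
    total = sum(new_alphas)
    return [a / total for a in new_alphas], new_means, new_vars

def fit(groups, init_means, init_var=1.0, max_iter=100):
    """Iterate E, classification and M steps until the partition is stable."""
    k = len(init_means)
    alphas, means, variances = [1.0 / k] * k, list(init_means), [init_var] * k
    labels = None
    for _ in range(max_iter):
        new_labels = classification_step(e_step(groups, alphas, means, variances))
        if new_labels == labels:  # partition unchanged: converged
            break
        labels = new_labels
        alphas, means, variances = m_step(groups, labels, alphas, means, variances)
    return alphas, means, variances, labels

# Hypothetical toy data: two groups around -5 and two groups around +5.
groups = [[-5.1, -4.9, -5.0], [-5.2, -4.8], [5.0, 5.1, 4.9], [4.8, 5.2]]
alphas, means, variances, labels = fit(groups, init_means=[-1.0, 1.0])
print(labels, means)
```

Working with sums of log-densities instead of products keeps the E step stable when groups contain many points. Like any EM variant, the result depends on the initialization, so several starts would normally be compared.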

This algorithm provides an optimal parameter set \(\pmb {\theta }^*_K\) for a given number K. We define \(L^*(K)\) as the corresponding log-likelihood

$$\begin{aligned} L^*(K) = L_\mathcal {X}(\pmb {\theta }^*_K) \end{aligned}$$(9)

In the case without side-information using the initial set \(\mathcal {X}'\), we get a similar optimal log-likelihood \(L'^*(K)\). The maximum likelihood increases with the model complexity, as recalled in [7]. The maximized log-likelihood \(L'^*(K)\) is therefore an increasing function of K, and it cannot be used as a selection criterion for choosing the number K.

In the case of data with side-information, the maximized log-likelihood \(L^*(K)\) is also an increasing function of K, and thus it is not suitable as a selection criterion for choosing the number of clusters.

3 Criteria

The aim of a criterion is to select a model in a set. The criterion should measure the model’s suitability which balances the model fit and the model complexity depending on the number of parameters in the model.

In the case of data without side-information, there are several information criteria that help to support the selection of a particular model or clustering structure. However, specific criteria may be more suitable for particular applications [7].

In this paper we have chosen three usual criteria and have adapted them to data with side-information. The bases of the criteria are briefly described below and their modified versions are given.

3.1 Criterion BIC

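The criterion BIC [13] is a widely used likelihood criterion penalized by the number of parameters in the model. It is based on the selection of a model from a set of candidate models \(\{M_m\}_{m=1,\ldots ,M}\) by maximizing the posterior probability:

$$\begin{aligned} P(M_m|\mathcal {X}) = \frac{P(\mathcal {X}|M_m)\, P(M_m)}{P(\mathcal {X})} \end{aligned}$$

where \(M_m\) corresponds to a model of dimension \(r_m\) parameterized by \({\phi }_m\) in the space \({\varPhi }_m\).

Assuming that \(P(M_1)=P(M_m), \forall m=1,\ldots ,M\), the aim is to maximize \(P(\mathcal {X}|M_m)\) which can be determined from the integration of the joint distribution:

$$\begin{aligned} P(\mathcal {X}|M_m) = \int _{{\varPhi }_m} P(\mathcal {X}|{\phi }_m, M_m)\, P({\phi }_m|M_m)\, d{\phi }_m \end{aligned}$$

An approximation of this integral can be obtained by using the Laplace approximation [10]. Neglecting error terms, it is shown that

$$\begin{aligned} \log P(\mathcal {X}|M_m) \approx \log P(\mathcal {X}|\hat{\phi }_m, M_m) - \frac{r_m}{2} \log (n) \end{aligned}$$

where \(\hat{\phi }_m\) denotes the maximum likelihood estimate of \({\phi }_m\) and n the number of observations.

The criterion BIC is derived from this expression. It corresponds, up to a factor of \(-2\), to the approximation of \(\log P(\mathcal {X}|M_m)\) and is then given by

$$\begin{aligned} \text {BIC}(K) = -2\, L'^*(K) + r \log (N') \end{aligned}$$

where \(L'^*(K)\) and \(N'\) are respectively the maximized log-likelihood and the number of points in the set \(\mathcal {X'}\) defined by relation (3) for the case of no side-information, and r is the number of free parameters. The model, i.e. the number of clusters, to be selected has to minimize this criterion.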

For the case of data with side-information, described by the set \(\mathcal {X}\) given by relation (1), the criterion has to be adapted. We suggest using the modified BIC (BICm) criterion:

$$\begin{aligned} \text {BICm}(K) = -2\, L^*(K) + r \log (N) \end{aligned}$$

Note that the criterion does not depend directly on the total number of points \(\sum _{n=1}^N {|s_n|}\) but on the number of groups N. The maximum log-likelihood \(L^*(K)\), which takes the positive constraints into account, is also computed differently from the one used in the classical criterion.
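The effect of counting groups instead of points can be illustrated with a small sketch. It assumes the standard BIC form, minus twice the maximized log-likelihood plus the number of free parameters times the logarithm of the number of observations, where the number of observations is the number of groups N for BICm and the number of points \(N'\) for BIC. The function names and the log-likelihood values passed to `select_k` are hypothetical.

```python
import math

def penalized_criterion(max_loglik, n_params, n_obs):
    """BIC-type criterion: -2 * log-likelihood + n_params * log(n_obs)."""
    return -2.0 * max_loglik + n_params * math.log(n_obs)

def select_k(max_logliks, n_obs, n_params_of):
    """Return the K minimizing the criterion.

    max_logliks maps each candidate K to its maximized log-likelihood;
    n_params_of maps K to the number of free parameters.
    """
    return min(
        max_logliks,
        key=lambda k: penalized_criterion(max_logliks[k], n_params_of(k), n_obs),
    )

# Penalty terms for K = 4 bivariate Gaussian components (23 free parameters)
# with 40 groups of 30 points, i.e. 1200 points in total.
print(penalized_criterion(0.0, 23, 40))    # penalty when counting groups
print(penalized_criterion(0.0, 23, 1200))  # penalty when counting points

# Made-up log-likelihood values, only to show how the selection is called.
example = {1: -100.0, 2: -50.0, 3: -49.5}
print(select_k(example, n_obs=40, n_params_of=lambda k: 6 * k - 1))
```

Because N is smaller than \(N'\) whenever groups contain more than one point, the penalty per parameter is lighter in the group-based version, while the log-likelihood term is the one computed on groups.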

3.2 Criterion AIC

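Another frequently used information criterion to select a model from a set is the criterion AIC proposed in [1]. This criterion is based on the minimization of the Kullback-Leibler distance between the model and the truth \(M_0\):

$$\begin{aligned} d_{KL}(M_0, M_m) = \int P(\mathcal {X}|M_0) \log \frac{P(\mathcal {X}|M_0)}{P(\mathcal {X}|M_m)}\, d\mathcal {X} \end{aligned}$$

The criterion AIC to be minimized in the case of data without side-information takes the form:

$$\begin{aligned} \text {AIC}(K) = -2\, L'^*(K) + 2r \end{aligned}$$

To take into account that some points arise from the same source, we propose to replace \(L'^*(K)\) by \(L^*(K)\). Then the modified AIC (AICm) is given by:

$$\begin{aligned} \text {AICm}(K) = -2\, L^*(K) + 2r \end{aligned}$$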
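As a short illustration, the sketch below assumes the standard AIC form (minus twice the maximized log-likelihood plus twice the number of free parameters) applied to the group-based log-likelihood. The function names are hypothetical. It also compares the penalty per parameter with that of a BIC-type criterion based on the number of groups, which exceeds 2 as soon as there are more than about 7.4 groups.

```python
import math

def aic_m(max_loglik_groups, n_params):
    """AIC-type criterion on the group-based log-likelihood: -2 L + 2 r."""
    return -2.0 * max_loglik_groups + 2.0 * n_params

def penalty_per_parameter(n_groups):
    """Cost of one extra free parameter under each criterion."""
    return {"AICm": 2.0, "BICm": math.log(n_groups)}

# With 40 groups, a BIC-type penalty per parameter is log(40), larger than 2.
print(penalty_per_parameter(40))
print(aic_m(-50.0, 11))
```

The lighter AIC penalty is consistent with a tendency to select more clusters than a BIC-type criterion on the same fitted models.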

3.3 Criterion NEC

The normalized entropy criterion (NEC) was proposed in [5] to estimate the number of clusters arising from a mixture model. It is derived from a relation linking the likelihood and the classification likelihood of a mixture.

In the case of a data set without side-information \(\mathcal {X'}\), the criterion to be minimized in order to assess the number of clusters of the mixture components is defined as:

$$\begin{aligned} \text {NEC}(K) = \frac{E'^*(K)}{L'^*(K) - L'^*(1)} \end{aligned}$$

where \(E'^*(K)\) denotes the entropy term which measures the overlap of the mixture components.

Because this criterion cannot decide between one cluster and more than one cluster, a procedure that consists in setting NEC(1) = 1 was proposed in [2].

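In the case of a data set with side-information, the computation of the entropy and log-likelihood terms has to be modified. Since \(\sum _{k=1}^{K}c_{nk}=1\), we can rewrite \(L_\mathcal {X}(\pmb {\theta }_K)\) given by relation (6) as:

$$\begin{aligned} L_\mathcal {X}(\pmb {\theta }_K) = \sum _{n=1}^{N} \sum _{k=1}^{K} c_{nk} \log \left( \sum _{r=1}^{K} \alpha _r \prod _{i=1}^{|s_n|} f_r(x_i^n|\theta _r) \right) \end{aligned}$$

The expression of \(c_{nk}\) for data with side-information is given by:

$$\begin{aligned} c_{nk} = \frac{\alpha _k \prod _{i=1}^{|s_n|} f_k(x_i^n|\theta _k)}{\sum _{r=1}^{K} \alpha _r \prod _{i=1}^{|s_n|} f_r(x_i^n|\theta _r)} \end{aligned}$$

Thus the log-likelihood can be expressed as:

$$\begin{aligned} L_\mathcal {X}(\pmb {\theta }_K) = \sum _{n=1}^{N} \sum _{k=1}^{K} c_{nk} \log \frac{\alpha _k \prod _{i=1}^{|s_n|} f_k(x_i^n|\theta _k)}{c_{nk}} \end{aligned}$$

and can be rewritten as

$$\begin{aligned} L_\mathcal {X}(\pmb {\theta }_K) = C_\mathcal {X}(\pmb {\theta }_K) + E_\mathcal {X}(\pmb {\theta }_K) \end{aligned}$$

with

$$\begin{aligned} C_\mathcal {X}(\pmb {\theta }_K) = \sum _{n=1}^{N} \sum _{k=1}^{K} c_{nk} \log \left( \alpha _k \prod _{i=1}^{|s_n|} f_k(x_i^n|\theta _k) \right) \end{aligned}$$

and

$$\begin{aligned} E_\mathcal {X}(\pmb {\theta }_K) = - \sum _{n=1}^{N} \sum _{k=1}^{K} c_{nk} \log c_{nk} \end{aligned}$$

Thus we propose to use the criterion given by:

$$\begin{aligned} \text {NECm}(K) = \frac{E^*(K)}{L^*(K) - L^*(1)} \end{aligned}$$

where \(E^*(K)= E_\mathcal {X}(\pmb {\theta }^*_K)\) and \(L^*(K)\) is given by (9).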
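The split of the group-based log-likelihood into a classification term and an entropy term can be checked numerically. The sketch below assumes univariate Gaussian components, the entropy defined as minus the sum of \(c_{nk} \log c_{nk}\) over groups and clusters, and a criterion equal to the entropy divided by the log-likelihood gain over the one-cluster model. Function names and toy values are hypothetical.

```python
import math

def log_normal_pdf(x, mean, var):
    """Log-density of a univariate Gaussian N(mean, var) at x."""
    return -0.5 * (math.log(2.0 * math.pi * var) + (x - mean) ** 2 / var)

def decompose(groups, alphas, means, variances):
    """Return (L, C, E): log-likelihood, classification term, entropy term."""
    loglik = classif = entropy = 0.0
    for group in groups:
        # log(alpha_k * prod_i f_k(x_i)) for each cluster k
        log_joint = [math.log(a) + sum(log_normal_pdf(x, m, v) for x in group)
                     for a, m, v in zip(alphas, means, variances)]
        top = max(log_joint)
        log_mix = top + math.log(sum(math.exp(v - top) for v in log_joint))
        loglik += log_mix
        for value in log_joint:
            c = math.exp(value - log_mix)  # posterior c_nk of the group
            classif += c * value
            if c > 0.0:  # 0 * log(0) is taken as 0
                entropy -= c * math.log(c)
    return loglik, classif, entropy

def nec_m(entropy_k, loglik_k, loglik_1):
    """Entropy divided by the log-likelihood gain over one cluster (K > 1)."""
    return entropy_k / (loglik_k - loglik_1)

# Hypothetical toy data and parameters.
groups = [[-1.1, -0.9], [1.0, 1.2, 0.8], [0.1]]
L, C, E = decompose(groups, [0.4, 0.6], [-1.0, 1.0], [0.5, 0.5])
print(L, C + E, E)
```

The ratio is undefined for K = 1, where the denominator vanishes; the text above mentions the convention NEC(1) = 1 of [2] for the original criterion.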

4 Results

The performances of the three criteria have been assessed using simulated problems, which are composed of Gaussian distributions, and two real problems using iris data and climatic data.

First of all, the relevance of the adapted version of the three criteria is shown. Then the performances of the criteria are compared in different situations in order to select the best one.

4.1 Comparison of the Original and Adapted Versions of the Three Criteria

In order to compare the original criteria with the modified version, we have used a mixture of four two dimensional Gaussian components. The observation data have been generated with the following parameter values

\(N=40\) (10 groups per cluster) and \(|s_n|=30\; \forall n\). Consequently the total number of points is equal to 1200.

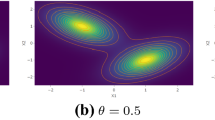

Examples of data sets are given in Fig. 2, with a variance equal to 1.2, 0.7, 0.3 and 0.03. Note that the groups are not shown in this figure, so only the points are visible.

The data set was modeled by a Gaussian mixture. Thus the model fits the data when the number of clusters is equal to 4. For a given number of clusters K, the parameters of the Gaussian mixture were estimated according to the algorithm described in Sect. 2.2. The parameters to be estimated are the proportions of each class, the mean vector, and the variance-covariance matrix, in dimension 2. Thus the total number of parameters is equal to \(6K-1\). Then the value of each criterion was computed using the initial expression and the modified expression. The number of clusters was selected for each of the six criteria (BIC, AIC, NEC, BICm, AICm, NECm). This experiment has been repeated 200 times in order to estimate the percent frequency of choosing a K-component mixture for each criterion. This experiment has been done for 6 different values of \(\sigma ^2\): 0.01, 0.03, 0.1, 0.3, 0.7, 1.2. The results are reported in Table 1.
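The parameter counts used in this paper follow from simple bookkeeping, sketched below. The function names are hypothetical. A Gaussian mixture has \(K-1\) free proportions, one mean vector per component, and either a full symmetric covariance matrix or, when the variables are assumed independent, one standard deviation per dimension.

```python
def n_params_full(n_clusters, dim):
    """Free parameters with full covariance matrices."""
    proportions = n_clusters - 1
    means = n_clusters * dim
    covariances = n_clusters * dim * (dim + 1) // 2  # symmetric matrices
    return proportions + means + covariances

def n_params_diag(n_clusters, dim):
    """Free parameters with independent variables (diagonal covariance)."""
    return (n_clusters - 1) + 2 * n_clusters * dim

for k in (1, 2, 3, 4):
    # dimension 2, full covariance: 6K - 1
    print(k, n_params_full(k, 2), 6 * k - 1)
```

The diagonal version gives \(9K-1\) in dimension 4 and \(13K-1\) in dimension 6, which are the counts used for the Iris and climatic data.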

Comparing the criteria BIC and BICm, it can be observed that BIC selects the correct number of clusters only when the clusters do not overlap (\(\sigma ^2 < 0.3\)), whereas BICm is efficient even when the clusters are not obviously separated. Comparing the criteria AIC and AICm, it can be observed that when the clusters do not overlap AIC is slightly better than AICm, but when the clusters are not obviously separated AICm remains efficient, contrary to AIC. Comparing the criteria NEC and NECm, NEC selects only one class when the clusters are not well separated, whereas NECm selects 2 or 3 classes, the true value being 4. Thus NECm performs better than NEC.

This experiment shows that the modified criteria are better suited to the data with side-information than the original criteria.

Comparing the three modified criteria, one observes that the best modified criterion is BICm and the worst results are obtained with NECm. With BICm over 99% success is obtained in all cases. AICm has a slight tendency to overestimate the number of clusters, while NECm has a tendency to underestimate it. This conclusion is in accordance with the results given in [7], obtained with the classical criteria for mixture-model clustering without side-information. It is also mentioned there that for mixtures of normal distributions, BIC performs very well. In [5], it is mentioned that NEC is efficient when some cluster structure exists. Since the cluster structure is not obvious in this experiment, this criterion has low performance.

4.2 Influence of the Amount of Side-Information

The efficiency of the modified criteria has been studied in relation to the overlap of the clusters and to the amount of side-information, which depends directly on the number of points in the groups. For that purpose, a mixture of three two-dimensional Gaussian components has been used, with the following parameter values

The number of points can vary from group to group, but for this study we set the same number for all groups. We have used 4 different values for the triplet \((a,N, |s_n|)\). The total number of points given by the product \(N \, |s_n|\) was always equal to 750. Thus when the number of groups (N) decreases, the number of points in each group (\(|s_n|\)) increases and the amount of side-information increases. An example of data is given in Fig. 3 for \(a=2, N=150, |s_n|=5\).

A Gaussian mixture was used as the model. For a given number of clusters K, the \(6K-1\) parameters of the Gaussian mixture were estimated according to the algorithm described in Sect. 2.2. The number of clusters was determined using each criterion. This experiment has been repeated 200 times in order to estimate the percent frequency of choosing a K-component mixture for each criterion. The results for the four cases and for each of the criteria BICm, AICm and NECm are given in Table 2.

The results confirm the conclusions obtained in the previous section: the best results are obtained with BICm and that holds for any amount of side-information. Comparing the four cases, when the side-information increases, or when the cluster overlapping decreases, it is easier to determine the right number of clusters.

4.3 Iris Data Set

The Iris flower data set is a multivariate data set, available on the web (archive.ics.uci.edu). This is a well-known database to be found in the pattern recognition literature (for example [6]). Four features were measured from each sample: the length and the width of the sepals and petals, in centimetres. The data set contains 3 classes of 50 instances each, where each class refers to a type of iris plant. The data set only contains two clusters with rather obvious separation. One of the clusters contains Iris setosa, while the other cluster contains both Iris virginica and Iris versicolor and is not obviously separable.

Side-information has been added to the original data. Groups have been randomly built within each class, for a given number of groups per class. Five values have been used: 50, 25, 16, 10 and 6, corresponding respectively to \(|s_n|\) approximately equal to 1, 2, 3, 5, 8. The case with 50 groups corresponds to the case without side-information (only one observation per group).
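The text does not detail how the groups were drawn, so the following is only one plausible way to build them: shuffle the samples of a class and cut them into a given number of groups whose sizes differ by at most one. The function name and the use of integer indices in place of the real measurements are assumptions.

```python
import random

def build_groups(samples, n_groups, rng):
    """Randomly partition samples into n_groups groups of near-equal size."""
    shuffled = list(samples)
    rng.shuffle(shuffled)
    base, extra = divmod(len(shuffled), n_groups)
    groups, start = [], 0
    for g in range(n_groups):
        size = base + (1 if g < extra else 0)  # first `extra` groups get one more
        groups.append(shuffled[start:start + size])
        start += size
    return groups

rng = random.Random(0)
one_class = list(range(50))  # stand-in for the 50 samples of one Iris class
for n_groups in (50, 25, 16, 10, 6):
    sizes = [len(g) for g in build_groups(one_class, n_groups, rng)]
    print(n_groups, min(sizes), max(sizes))
```

With 50 samples per class this gives group sizes of 1, 2, 3 or 4, 5, and 8 or 9, in line with the approximate values quoted above.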

For the clustering, a Gaussian mixture was used as the model. In order to reduce the number of parameters to be estimated we have considered that the random variables are independent. Thus, for a given number of clusters equal to K, the number of parameters r is equal to \(9K-1\), corresponding to 4K for the mean vectors, 4K for the standard deviations, and \(K-1\) for the cluster probabilities. The number of clusters was selected using each of the three proposed criteria (BICm, AICm, and NECm). The experiment has been repeated 20 times for each value of K for estimating the percent frequency of choosing K clusters. The classification results for a realization with 10 groups are reported in Fig. 4. The probability of correct classification in the case of 3 clusters has also been estimated for studying the influence of the number of groups on the classification rate. The estimated value of this probability and the mean of the selected number of clusters are given in Table 3. The results of the percent frequency of choosing a K-component mixture are given in Table 4.

The results in Table 3 show that the probability of correct classification is quite good when the number of clusters is known. However, the results in Table 4 show that the determination of the right number of clusters is quite difficult. As previously, the best results are obtained with the criterion BICm whatever the value of K. The results with the criteria AICm and NECm are not good. Because the clusters Iris virginica and Iris versicolor are not obviously separable, NECm almost always gives two clusters.

4.4 Climatic Data

Climatic data in France are available on the public website donneespubliques.meteofrance.fr. We have used the average of the daily maximum and minimum temperatures and the cumulative rainfall amounts for the months of January and July in 109 available towns. Then each measure for each town is a realization of a random variable of dimension 6. We have used the data for the years 2012 to 2015, and have assumed that the random variable for a given town has the same distribution within all years. Then we can consider that 4 realizations are available for each random variable. It also means that the number of points for each town (group) is equal to 4. We have used a Gaussian mixture model for the clustering algorithm and have considered that the random variables are independent in order to limit the number of parameters to be estimated. Thus, for a given number of clusters equal to K, the number of parameters r is equal to \(13K-1\).

The selected number of clusters with the criterion BICm is 6, although the criterion values for K equal to 5, 6 and 7 are very similar.

The values of the mixture model parameters are reported in Table 5. The class labels with respect to the longitude and latitude are reported in Fig. 5. We can see different towns with climates which are rather semi-continental (1), continental (2), maritime and warm (3), maritime (4), Mediterranean (5), and mountainous (6). In comparison with the results in [8], it can be observed that the different clusters appear more homogeneous, thanks to a larger number of variables (6 instead of 4) and to a larger number of points in each group (4 instead of 3).

5 Conclusion

This paper addresses the problem of assessing the number of clusters in a mixture model for data with the constraint that some points arise from the same source. To select the number of clusters, usual criteria are not suitable because they do not consider the side-information in the data. Thus we have proposed suitable criteria which are based on usual criteria.

The Bayesian information criterion (BIC), the Akaike information criterion (AIC) and the entropy criterion (NEC) have been modified to take the side-information into account. Instead of using the total number of points, the criteria use the number of groups, and instead of using the log-likelihood of the points, they use the log-likelihood of the groups.

The relevance of the modified versions of the criteria with respect to the original versions has been shown through a simulated problem. The performance of the proposed criteria in relation to the number of points per group has been studied using simulated data and using the Iris data set. According to the obtained results, we conclude that BICm is the best performing criterion. NECm has a tendency to underestimate the number of clusters and AICm tends to overestimate it. These results are similar to former results on the original criteria, in the case without side-information [7]. The side-information is relevant for overlapping clusters, whereas NEC is suitable for well-separated components. Thus the modified criterion NECm was not efficient for the experimental data. Finally, the EM algorithm and the modified criterion BICm were used for the classification of climatic data.

The proposed approach for clustering with side-information can handle a variable number of points within each group. For example, in the Iris problem the number of points in each group could be different, and in the climatic problem the number of years for each town could be different.

This clustering approach can be used to only cluster available data. It can also be used as a learning stage, for afterwards classifying new groups. A new group could be classified according to the log-likelihood, and thus associated to a sub-population described by the parameters of the corresponding mixture component. Thus it is important to estimate correctly both the number of clusters and the parameters of the component model. Then it would be necessary to define a criterion that takes into account these two items to assess an estimated mixture model.
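Classifying a new group with a fitted model could look like the sketch below, again for univariate Gaussian components. The exact score is an assumption: the text only says that the classification is made according to the log-likelihood, and here the log of the cluster proportion is added to the sum of the point log-densities, mirroring the classification step of the algorithm of Sect. 2.2. Function names and parameter values are hypothetical.

```python
import math

def log_normal_pdf(x, mean, var):
    """Log-density of a univariate Gaussian N(mean, var) at x."""
    return -0.5 * (math.log(2.0 * math.pi * var) + (x - mean) ** 2 / var)

def classify_group(group, alphas, means, variances):
    """Return the index of the component with the highest group score."""
    scores = [math.log(a) + sum(log_normal_pdf(x, m, v) for x in group)
              for a, m, v in zip(alphas, means, variances)]
    return max(range(len(scores)), key=scores.__getitem__)

# Hypothetical fitted mixture with two components.
alphas, means, variances = [0.5, 0.5], [-5.0, 5.0], [1.0, 1.0]
print(classify_group([4.9, 5.2, 4.7], alphas, means, variances))
```

All points of the new group are scored jointly, so a group is assigned as a whole even when some of its points, taken alone, would be closer to another component.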

References

[1] Akaike, H.: A new look at the statistical model identification. IEEE Trans. Autom. Control 19(6), 716–723 (1974)

[2] Biernacki, C., Celeux, G., Govaert, G.: An improvement of the NEC criterion for assessing the number of clusters in a mixture model. Pattern Recogn. Lett. 20(3), 267–272 (1999)

[3] Celeux, G., Govaert, G.: A classification EM algorithm for clustering and two stochastic versions. Comput. Stat. Data Anal. 14(3), 315–332 (1992)

[4] Celeux, G., Govaert, G.: Gaussian parsimonious clustering models. Pattern Recogn. 28, 781–793 (1995)

[5] Celeux, G., Soromenho, G.: An entropy criterion for assessing the number of clusters in a mixture model. J. Classif. 13(2), 195–212 (1996)

[6] Duda, R.O., Hart, P.E.: Pattern Classification and Scene Analysis. Wiley, Hoboken (1973)

[7] Fonseca, J.R., Cardoso, M.G.: Mixture-model cluster analysis using information theoretical criteria. Intell. Data Anal. 11(1), 155–173 (2007)

[8] Grall-Maës, E., Dao, D.: Assessing the number of clusters in a mixture model with side-information. In: Proceedings of 5th International Conference on Pattern Recognition Applications and Methods (ICPRAM 2016), Rome, Italy, pp. 41–47, 24–26 February 2016

[9] Grall-Maës, E.: Spatial stochastic process clustering using a local a posteriori probability. In: Proceedings of IEEE International Workshop on Machine Learning for Signal Processing (MLSP 2014), Reims, France, 21–24 September 2014

[10] Lebarbier, E., Mary-Huard, T.: Le critère BIC: fondements théoriques et interprétation. Research report, INRIA (2006)

[11] McLachlan, G., Peel, D.: Finite Mixture Models. Wiley, Hoboken (2000)

[12] McLachlan, G., Basford, K.: Mixture models. Inference and applications to clustering. In: Statistics: Textbooks and Monographs, vol. 1. Dekker, New York (1988)

[13] Schwarz, G.: Estimating the dimension of a model. Ann. Stat. 6, 461–464 (1978)

[14] Shental, N., Bar-Hillel, A., Hertz, T., Weinshall, D.: Computing Gaussian mixture models with EM using side-information. In: Proceedings of 20th International Conference on Machine Learning. Citeseer (2003)

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Grall-Maës, E., Dao, D.T. (2017). Criteria for Mixture-Model Clustering with Side-Information. In: Fred, A., De Marsico, M., Sanniti di Baja, G. (eds) Pattern Recognition Applications and Methods. ICPRAM 2016. Lecture Notes in Computer Science, vol 10163. Springer, Cham. https://doi.org/10.1007/978-3-319-53375-9_3

DOI: https://doi.org/10.1007/978-3-319-53375-9_3

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-53374-2

Online ISBN: 978-3-319-53375-9

eBook Packages: Computer Science, Computer Science (R0)