Abstract

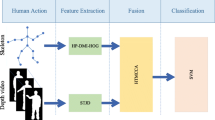

This paper proposes a novel human action recognition method based on decision-level fusion of skeleton and depth sequences. First, a state-of-the-art descriptor, relative body part locations (RBPL), is adopted to represent the skeleton. The original RBPL uses all available joints, which may introduce redundancy or noise. We therefore propose an adaptive optimal joint selection model, applied before RBPL, that selects joints for each action according to the distance they travel, thereby reducing redundant joints. We then use dynamic time warping to handle temporal misalignment and adopt a kernel-based extreme learning machine (KELM) for action recognition. Second, an efficient feature descriptor, depth motion maps-based discriminative local binary patterns (DMM-disLBP), is constructed to describe depth sequences, and KELM is again used for classification. Finally, we present an effective decision fusion scheme for action recognition based on the maximum sum of the decision values from the skeleton and depth maps. Compared with the baseline methods, we improve performance using either skeleton or depth information alone, and achieve state-of-the-art average recognition accuracy on the public MSR Action3D dataset using the proposed fusion strategy.
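
Two of the steps named in the abstract, joint selection by traveled distance and fusion by maximum summed decision value, can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the top-k selection rule, the per-sequence (rather than per-action) computation, and the toy numbers are all assumptions made for illustration, and the decision values would in practice come from the two KELM classifiers.

```python
import math

def traveled_distance(sequence, joint):
    """Sum of frame-to-frame Euclidean displacements of one joint.

    `sequence` is a list of frames; each frame is a list of (x, y, z) joints.
    """
    return sum(
        math.dist(prev[joint], curr[joint])
        for prev, curr in zip(sequence, sequence[1:])
    )

def select_joints(sequence, k):
    """Keep the k joints that travel farthest (assumed top-k rule)."""
    n_joints = len(sequence[0])
    ranked = sorted(
        range(n_joints),
        key=lambda j: traveled_distance(sequence, j),
        reverse=True,
    )
    return sorted(ranked[:k])

def fuse_decisions(skeleton_scores, depth_scores):
    """Return the class index whose summed decision value is largest."""
    summed = [s + d for s, d in zip(skeleton_scores, depth_scores)]
    return max(range(len(summed)), key=summed.__getitem__)

# Toy sequence: 3 frames, 3 joints; joint 0 is static, joint 2 moves most.
frames = [
    [(0, 0, 0), (0, 0, 0), (0, 0, 0)],
    [(0, 0, 0), (1, 0, 0), (0, 3, 0)],
    [(0, 0, 0), (2, 0, 0), (0, 6, 0)],
]
selected = select_joints(frames, k=2)  # keeps joints 1 and 2

# Toy per-class decision values from the two classifiers (3 classes).
fused_label = fuse_decisions([0.2, 0.5, 0.3], [0.6, 0.1, 0.3])  # class 0
```

In this toy case the skeleton scores alone would pick class 1 and the depth scores alone class 0; summing the two lets the more confident classifier dominate the fused decision.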

Notes

- 1. The authors do not name their method. For ease of reference, we call it RBPL (Relative Body Part Locations).

- 2.

- 3. The diagram of RBPL is reproduced from [1].

References

Vemulapalli, R., Arrate, F., Chellappa, R.: Human action recognition by representing 3D skeletons as points in a Lie group. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 588–595 (2014)

Xia, L., Chen, C.C., Aggarwal, J.: View invariant human action recognition using histograms of 3D joints. In: 2012 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pp. 20–27. IEEE (2012)

Ye, M., Zhang, Q., Wang, L., Zhu, J., Yang, R., Gall, J.: A survey on human motion analysis from depth data. In: Grzegorzek, M., Theobalt, C., Koch, R., Kolb, A. (eds.) Time-of-Flight and Depth Imaging. Sensors, Algorithms, and Applications. LNCS, vol. 8200, pp. 149–187. Springer, Heidelberg (2013). doi:10.1007/978-3-642-44964-2_8

Du, Y., Wang, W., Wang, L.: Hierarchical recurrent neural network for skeleton based action recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1110–1118 (2015)

Li, W., Zhang, Z., Liu, Z.: Action recognition based on a bag of 3D points. In: 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pp. 9–14. IEEE (2010)

Yang, X., Zhang, C., Tian, Y.: Recognizing actions using depth motion maps-based histograms of oriented gradients. In: Proceedings of the 20th ACM International Conference on Multimedia, pp. 1057–1060. ACM (2012)

Chen, C., Jafari, R., Kehtarnavaz, N.: Action recognition from depth sequences using depth motion maps-based local binary patterns. In: 2015 IEEE Winter Conference on Applications of Computer Vision (WACV), pp. 1092–1099. IEEE (2015)

Althloothi, S., Mahoor, M.H., Zhang, X., Voyles, R.M.: Human activity recognition using multi-features and multiple kernel learning. Pattern Recogn. 47, 1800–1812 (2014)

Liu, T., Pei, M.: Fusion of skeletal and STIP-based features for action recognition with RGB-D devices. In: Zhang, Y.-J. (ed.) ICIG 2015. LNCS, vol. 9218, pp. 312–322. Springer, Heidelberg (2015). doi:10.1007/978-3-319-21963-9_29

Liu, Z., Zhang, C., Tian, Y.: 3D-based deep convolutional neural network for action recognition with depth sequences. Image Vis. Comput. 55, 93–100 (2016)

Müller, M.: Information Retrieval for Music and Motion, vol. 2. Springer, Heidelberg (2007)

Huang, G.B., Zhou, H., Ding, X., Zhang, R.: Extreme learning machine for regression and multiclass classification. IEEE Trans. Syst. Man Cybern. Part B: Cybern. 42, 513–529 (2012)

Guo, Y., Zhao, G., Pietikäinen, M.: Discriminative features for texture description. Pattern Recogn. 45, 3834–3843 (2012)

Wang, J., Liu, Z., Wu, Y., Yuan, J.: Mining actionlet ensemble for action recognition with depth cameras. In: 2012 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1290–1297. IEEE (2012)

Evangelidis, G., Singh, G., Horaud, R.: Skeletal quads: human action recognition using joint quadruples. In: ICPR 2014-International Conference on Pattern Recognition (2014)

Theodorakopoulos, I., Kastaniotis, D., Economou, G., Fotopoulos, S.: Pose-based human action recognition via sparse representation in dissimilarity space. J. Vis. Commun. Image Represent. 25, 12–23 (2014)

Vieira, A.W., Nascimento, E.R., Oliveira, G.L., Liu, Z., Campos, M.F.: On the improvement of human action recognition from depth map sequences using space-time occupancy patterns. Pattern Recogn. Lett. 36, 221–227 (2014)

Shen, X., Zhang, H., Gao, Z., Xue, Y., Xu, G.: Human behavior recognition based on axonometric projections and PHOG feature. J. Comput. Inf. Syst. 10, 3455–3463 (2014)

Zhu, Y., Chen, W., Guo, G.: Fusing multiple features for depth-based action recognition. ACM Trans. Intell. Syst. Technol. (TIST) 6, 18 (2015)

Acknowledgement

This work is supported in part by the Beijing Natural Science Foundation (No. 4142051).

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Ni, H., Liu, H., Wang, X., Qian, Y. (2017). Action Recognition Based on Optimal Joint Selection and Discriminative Depth Descriptor. In: Lai, SH., Lepetit, V., Nishino, K., Sato, Y. (eds) Computer Vision – ACCV 2016. ACCV 2016. Lecture Notes in Computer Science, vol 10112. Springer, Cham. https://doi.org/10.1007/978-3-319-54184-6_17

DOI: https://doi.org/10.1007/978-3-319-54184-6_17

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-54183-9

Online ISBN: 978-3-319-54184-6

eBook Packages: Computer Science, Computer Science (R0)