Abstract

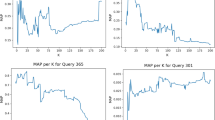

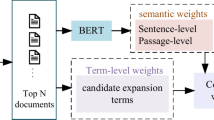

Pseudo-relevance feedback (PRF) is an effective technique for improving retrieval performance by updating the query model using the top-retrieved documents. Previous work shows that estimating the effectiveness of feedback documents can substantially affect PRF performance. Following recent studies on the theoretical analysis of PRF models, in this paper we introduce a new constraint, which states that documents containing more informative terms for PRF should have higher relevance scores. Furthermore, we provide a general iterative algorithm that can be applied to any PRF model to ensure that the proposed constraint is satisfied. To this end, the algorithm computes the feedback weights of terms and the relevance scores of feedback documents simultaneously. To study the effectiveness of the proposed algorithm, we modify the log-logistic feedback model, a state-of-the-art PRF model, as a case study. Our experiments on three TREC collections demonstrate that the modified log-logistic model significantly outperforms competitive baselines, with up to \(12\%\) MAP improvement over the original log-logistic model.
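The abstract does not spell out the update rules, so the following is only a rough sketch of what a joint, iterative estimation of term weights and document scores could look like. It assumes a simple mutual-reinforcement scheme: term weights are recomputed from the current document scores, document scores are recomputed from the current term weights, and both are renormalised each round. The function name `iterative_prf`, the `log1p` term-frequency damping, and the normalisation are illustrative assumptions, not the paper's algorithm or its modified log-logistic model.

```python
import math


def _normalize(values):
    """Scale non-negative values to sum to 1 (uniform if they sum to 0)."""
    total = sum(values)
    if total == 0:
        return [1.0 / len(values)] * len(values)
    return [v / total for v in values]


def iterative_prf(doc_term_freqs, initial_doc_scores, iterations=50, tol=1e-9):
    """Hypothetical joint estimation of term weights and document scores.

    doc_term_freqs: list of dicts (term -> count), one per feedback document.
    initial_doc_scores: starting relevance scores, e.g. first-pass retrieval scores.
    Returns (term_weights, doc_scores), each normalised to sum to 1.
    """
    doc_scores = _normalize(list(initial_doc_scores))
    term_weights = {}
    for _ in range(iterations):
        # Term step (assumed form): a term gains weight from every document
        # containing it, in proportion to that document's current score.
        raw = {}
        for score, tf in zip(doc_scores, doc_term_freqs):
            for term, count in tf.items():
                raw[term] = raw.get(term, 0.0) + score * math.log1p(count)
        total = sum(raw.values())
        if total == 0:
            break  # no usable term evidence; keep the current estimates
        term_weights = {t: w / total for t, w in raw.items()}

        # Document step (assumed form): a document gains score from the
        # current weights of the terms it contains.
        new_scores = _normalize([
            sum(term_weights[t] * math.log1p(c) for t, c in tf.items())
            for tf in doc_term_freqs
        ])

        # Stop once the document scores no longer change noticeably.
        delta = max(abs(a - b) for a, b in zip(new_scores, doc_scores))
        doc_scores = new_scores
        if delta < tol:
            break
    return term_weights, doc_scores


if __name__ == "__main__":
    docs = [{"jaguar": 3, "cat": 2}, {"jaguar": 1, "car": 1}, {"stock": 1}]
    weights, scores = iterative_prf(docs, [1.0, 1.0, 1.0])
    print(sorted(weights.items(), key=lambda kv: -kv[1]))
    print(scores)
```

Under these assumptions, a document that shares highly weighted terms with other feedback documents ends up with a higher score than one whose terms appear nowhere else, which is the general behaviour the proposed constraint asks for.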

Acknowledgements

This work was supported in part by the Center for Intelligent Information Retrieval. Any opinions, findings and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect those of the sponsor.

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Ariannezhad, M., Montazeralghaem, A., Zamani, H., Shakery, A. (2017). Iterative Estimation of Document Relevance Score for Pseudo-Relevance Feedback. In: Jose, J., et al. Advances in Information Retrieval. ECIR 2017. Lecture Notes in Computer Science, vol 10193. Springer, Cham. https://doi.org/10.1007/978-3-319-56608-5_65

DOI: https://doi.org/10.1007/978-3-319-56608-5_65

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-56607-8

Online ISBN: 978-3-319-56608-5

eBook Packages: Computer Science (R0)