Abstract

A brain-computer interface (BCI) provides an alternative way for locked-in patients to interact with the environment based solely on neural activity. A major drawback of independent BCIs is their small number of commands: usually only two are available. This greatly limits the usefulness and applicability of BCIs in real-life scenarios. A potential avenue for increasing the number of independent BCI commands is to modulate brain activity with music, in regions such as the orbitofrontal cortex. By quantifying oscillatory signatures such as the alpha rhythm over the frontal cortex, we can gain a better understanding of the effect of music at the cortical level. Just as desynchronization patterns during motor imagery are comparable to those during real movement, imagining music elicits a response from the auditory cortex as if the sound were actually heard. We propose an experimental paradigm to train subjects to elicit discriminative brain activation patterns when imagining high and low music scales. Each trial of listening to music (high or low scale) was followed by imagery of the same scale. In this three-subject experiment, the independent BCI achieved over 70% accuracy, and high and low music listening and imagination showed similar distributions of Discriminative Brain Patterns (DBP), as shown in Fig. 3. This pilot study opens an avenue for increasing the number of BCI commands, especially for independent BCIs, whose commands are currently very limited. It also provides a potential channel for music composition.

Fig. 1. Sound stimuli of the high and low scales, composed with the online NoteFlight software. (1) The high music scale; (2) the low music scale.

1 Introduction

Brain-computer interface (BCI) technologies provide an alternative means of interacting with the environment that is independent of peripheral nerves and muscles and based solely on neural activity [1]. BCI technologies hold great potential to improve the autonomy and quality of life of people with severe and multiple motor disabilities, such as locked-in syndrome. Typically, users operate a BCI by consciously eliciting distinct, reproducible patterns of activity in particular brain regions through mental tasks. A decoding system detects these patterns and translates them into commands for controlling external devices (e.g., a computer cursor, robotic hands, or rehabilitation devices) [2].

Among the various BCI systems developed to date [3], independent BCIs without external stimulation have received considerable interest [4,5,6]. Because of their independence from external stimuli, BCI systems based solely on cognitive tasks such as motor imagery [7], slow cortical potentials [8], sensorimotor rhythms [9], and somatosensory attention orientation [10] have shown promise not only for communication and control but also for neurorehabilitation [11, 12]. However, these independent BCI systems still have many drawbacks; in particular, the number of control commands is very limited, generally only two. There is also the challenge of “BCI illiteracy”: BCI control does not work for a non-negligible portion of users (an estimated 15 to 30%) [13]. Increasing the currently limited number of BCI commands by introducing novel BCI modalities would significantly improve the information transfer rate [14], and a wider range of BCI modalities would make BCI suitable for a greater number of users.
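To make the link between the number of commands and the information transfer rate concrete, the sketch below implements the bits-per-selection formula given by Wolpaw et al. [14], B = log2 N + P log2 P + (1 − P) log2((1 − P)/(N − 1)), for N equiprobable commands selected with accuracy P. The function names are hypothetical, and returning 0 at or below chance accuracy is a common convention (an assumption here), not part of the formula itself.

```python
import math


def wolpaw_itr_bits(n_classes: int, accuracy: float) -> float:
    """Bits per selection for N equiprobable commands at accuracy P (Wolpaw et al. formula)."""
    n, p = n_classes, accuracy
    if p <= 1.0 / n:
        # Convention (assumption): at or below chance, report no transferred information.
        return 0.0
    bits = math.log2(n) + p * math.log2(p)
    if p < 1.0:
        # Errors are assumed to be spread evenly over the N - 1 wrong commands.
        bits += (1.0 - p) * math.log2((1.0 - p) / (n - 1))
    return bits


def itr_bits_per_minute(n_classes: int, accuracy: float, seconds_per_selection: float) -> float:
    """Scale bits per selection to bits per minute."""
    return wolpaw_itr_bits(n_classes, accuracy) * 60.0 / seconds_per_selection


# Same 75% accuracy, two vs. four commands (illustrative numbers, not results of this study)
print(round(wolpaw_itr_bits(2, 0.75), 3))  # 0.189
print(round(wolpaw_itr_bits(4, 0.75), 3))  # 0.792
```

In this illustration, going from two to four commands at the same accuracy raises the information per selection from about 0.19 to about 0.79 bits, which is why additional independent commands matter even when accuracy does not improve.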

Brain responses to music stimulation have opened a new window for studying music at the cognitive level. Music can potentially be used to modulate activity in brain structures such as the orbitofrontal cortex [15]. By quantifying oscillatory signatures such as the alpha rhythm over the frontal cortex, we can gain a better understanding of the effect of music at the cortical level [16]. Just as desynchronization patterns during motor imagery are comparable to those during real movement [17], imagining music elicits a response from the auditory cortex as if the sound were actually heard [18]. Building on current EEG studies of music [16, 19, 20], we propose a novel paradigm that trains subjects to elicit distinguishable brain patterns when imagining low- and high-pitched piano music scales. The EEG activation patterns elicited by music imagery are analyzed and translated into control signals for an independent BCI system.

2 Methods

Subjects

Three healthy subjects (two male, one female; mean age 23) were recruited for the study. All three were naïve to BCI, had normal hearing and normal or corrected-to-normal vision, and none reported a diagnosis of any neurological disorder or hearing impairment. This study was approved by the Ethics Committee of the University of Waterloo, and all subjects gave written informed consent before participating in the experiment.

Sound Stimuli

Two sound stimuli covering different pitch ranges (high and low scales) were presented to the subjects. The stimuli were composed with the online NoteFlight software (www.noteflight.com). The high scale consists of a full 8-note ascending scale from C6 to C7. The low scale consists of the same 8-note ascending scale, but from C2 to C3. Both scales were generated electronically by the NoteFlight piano simulator in 2/4 time at a tempo of 120 quarter notes per minute. See Fig. 1 for the music sheet.
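For reference, the fundamental frequencies spanned by the two stimuli can be computed directly. This is a minimal sketch under stated assumptions: the 8-note C-to-C scales are taken to be C major, tuned in 12-tone equal temperament with A4 = 440 Hz and MIDI-style note numbering (C4 = 60); none of this is specified in the text. The function name is hypothetical.

```python
# Semitone offsets of an ascending C major scale (assumption: the 8-note scales are C major).
MAJOR_SCALE_STEPS = [0, 2, 4, 5, 7, 9, 11, 12]


def c_major_scale_hz(octave: int, a4_hz: float = 440.0) -> list:
    """Fundamental frequencies (Hz) of the ascending scale from C<octave> to C<octave + 1>.

    Assumes 12-tone equal temperament with A4 = 440 Hz and MIDI numbering (C4 = 60, A4 = 69).
    """
    c_midi = 12 * (octave + 1)  # MIDI number of C in this octave, e.g. C6 = 84, C2 = 36
    return [a4_hz * 2 ** ((c_midi + step - 69) / 12) for step in MAJOR_SCALE_STEPS]


QUARTER_NOTE_S = 60.0 / 120  # 120 quarter notes per minute -> 0.5 s per quarter note

high = c_major_scale_hz(6)  # C6 ... C7
low = c_major_scale_hz(2)   # C2 ... C3
print(f"high: {high[0]:.1f} to {high[-1]:.1f} Hz; low: {low[0]:.1f} to {low[-1]:.1f} Hz")
# high: 1046.5 to 2093.0 Hz; low: 65.4 to 130.8 Hz
```

Under these assumptions the two scales sit four octaves apart, a factor of 16 in fundamental frequency. The note values on the sheet are not given in the text, so only the quarter-note duration implied by the tempo is computed.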

EEG Recording

EEG signals were recorded with the g.Nautilus system (g.tec, Austria), using a 32-channel wireless EEG cap with active gel-based electrodes placed according to the extended 10/20 system. The reference electrode was placed on the right earlobe and the ground electrode on the forehead. The signals were sampled at 250 Hz with 24-bit resolution, and a 60 Hz notch filter was applied to the raw signals during recording.
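The notch filter applied during recording can be approximated offline with SciPy, for example when re-processing data exported without it. This is a sketch only: the quality factor of 30 (a notch roughly 2 Hz wide) is an assumed value, not the amplifier's actual setting, and a causal `lfilter` is used to mimic filtering during acquisition.

```python
import numpy as np
from scipy.signal import iirnotch, lfilter

FS_HZ = 250.0  # sampling rate stated in the text


def notch_60hz(raw: np.ndarray, fs: float = FS_HZ, quality: float = 30.0) -> np.ndarray:
    """Causal 60 Hz IIR notch applied along the last (time) axis.

    quality=30 is an assumption; the g.Nautilus filter parameters are not given.
    """
    b, a = iirnotch(60.0, quality, fs=fs)
    return lfilter(b, a, raw, axis=-1)


t = np.arange(0, 5.0, 1.0 / FS_HZ)
mains = np.sin(2 * np.pi * 60.0 * t)  # simulated line noise
alpha = np.sin(2 * np.pi * 10.0 * t)  # simulated 10 Hz rhythm
eeg = np.vstack([mains, alpha])       # two synthetic "channels"
clean = notch_60hz(eeg)               # 60 Hz removed, 10 Hz essentially untouched
```

With a 250 Hz sampling rate the Nyquist frequency is 125 Hz, so the 60 Hz mains component lies well inside the recorded band and must be removed explicitly.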

Experimental Procedure

Subjects were seated in a comfortable chair approximately 0.5 m from a 23-inch Dell computer monitor. A built-in speaker beneath the monitor, facing the subject, was used to present the auditory stimuli. Subjects were asked to sit still and keep ocular and facial movements to a minimum. Each subject performed a total of 160 trials in 4 runs and rested for 1–2 min between runs.

Each run consisted of 40 trials performed in pairs: a trial of listening to either the high or the low music scale was followed by a trial of auditory imagination of the scale just presented (high scale listening was followed by high scale imagination, and low scale listening by low scale imagination). The 20 pairs in each run were presented in random order.
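The pairing scheme can be expressed as a short trial-sequence generator. This is a hedged sketch: it assumes each run contains equal numbers of high and low pairs (10 + 10), which the text does not state explicitly; only the random order of the 20 pairs is given. The function name is hypothetical.

```python
import random


def make_run(n_pairs: int = 20, seed=None):
    """Trial list for one run; each listening trial is followed by imagery of the same scale.

    Assumption: equal numbers of high and low pairs per run (10 + 10).
    """
    rng = random.Random(seed)
    scales = ["high"] * (n_pairs // 2) + ["low"] * (n_pairs - n_pairs // 2)
    rng.shuffle(scales)  # randomize the order of the pairs
    trials = []
    for scale in scales:
        trials.append(("listen", scale))
        trials.append(("imagine", scale))
    return trials


session = [make_run(seed=run) for run in range(4)]  # 4 runs x 40 trials
print(sum(len(run) for run in session))  # 160
```

Randomizing whole pairs rather than single trials keeps every imagination trial directly after the listening trial it recalls, which is the property the paradigm relies on.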

At the beginning of the first trial of a pair, a white cross (“+”) appeared at the center of a black screen. After 3 s, either the high or the low music scale was played to the subject. At 5.2 s, the screen turned black and the subject relaxed for 2 s, followed by an additional random rest period of 0 to 2 s to avoid adaptation. The second trial of the pair followed the same procedure, except that a faint ‘pop’ cue sound was played for 0.2 s to prompt the subject to begin imagining the scale heard in the previous trial. This procedure is shown in Fig. 2.

Fig. 2. Experimental protocol. During the mental task period, subjects perform either a music listening task or a music imagination task. Each music listening trial is followed by the corresponding music imagination trial, i.e., high scale listening is followed in the next trial by high scale imagination, and likewise for the low scale. After each music imagination trial, the next music listening trial is chosen at random.

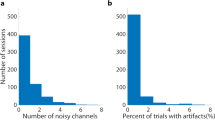
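The trial timing can be summarized as an event list per trial. This sketch rests on assumptions not stated in the text: onsets are measured from the appearance of the fixation cross, the imagery trial's ‘pop’ cue occurs at 3 s in place of the music, and the extra rest is drawn uniformly from 0 to 2 s. The function and constant names are hypothetical.

```python
import random

RELAX_S = 2.0       # relaxation period after the screen turns black
JITTER_MAX_S = 2.0  # upper bound of the additional random rest


def trial_events(task: str, rng: random.Random):
    """(onset_s, event) pairs for one trial, relative to trial start.

    Assumptions: the 'pop' cue replaces the music at 3 s, and the extra rest is uniform on [0, 2] s.
    """
    if task not in ("listen", "imagine"):
        raise ValueError(f"unknown task: {task}")
    cue = "play scale" if task == "listen" else "0.2 s pop cue, begin imagery"
    jitter = rng.uniform(0.0, JITTER_MAX_S)
    return [
        (0.0, "white fixation cross"),
        (3.0, cue),
        (5.2, "black screen, relax"),
        (5.2 + RELAX_S + jitter, "end of trial"),
    ]


rng = random.Random(0)
durations = [trial_events("imagine", rng)[-1][0] for _ in range(1000)]
print(f"{min(durations):.2f} to {max(durations):.2f} s")
```

Under these assumptions every trial lasts between 7.2 and 9.2 s; the random tail varies the interval between consecutive stimuli so that subjects cannot anticipate the next onset.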

EEG Data Processing

EEG signals were visually inspected, and trials contaminated by muscle or eye movement activity were removed; at least 35 out of 40 trials remained. The artifact-free EEG was re-referenced to the common average. A time-frequency decomposition of each trial on each EEG channel was then computed to construct a spatio-spectral-temporal representation for each predefined mental task. The decomposition was evaluated every 200 ms by convolving the signal with Hanning-tapered sinusoidal basis functions whose number of cycles changed linearly with frequency, to achieve appropriate time and frequency resolution. The R² index between mental tasks was calculated on these spatio-spectral-temporal representations and used to locate the EEG channel components that discriminate between the two corresponding mental tasks. The Discriminative Brain Pattern (DBP) was defined as a topographic plot of the R² index averaged over the mental task time interval and over a given frequency band, such as alpha [8 13] Hz or beta [13 26] Hz.
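The re-referencing and time-frequency steps can be sketched with NumPy as follows. This is an illustration, not the study's code: the function names, the default ramp from 3 to 10 cycles, and the amplitude normalization are assumptions; the text only specifies a 200 ms step, a Hanning taper, and a cycle count that changes linearly with frequency.

```python
import numpy as np

FS_HZ = 250.0  # sampling rate from the EEG Recording section


def common_average_reference(eeg: np.ndarray) -> np.ndarray:
    """Re-reference (n_channels, n_samples) EEG to the instantaneous mean of all channels."""
    return eeg - eeg.mean(axis=0, keepdims=True)


def tf_power(x, freqs, fs=FS_HZ, step_s=0.2, cycles=None):
    """Power of one channel at each frequency, evaluated every step_s seconds.

    Each basis function is a complex sinusoid under a Hanning taper spanning cycles[i] periods,
    so windows shorten as frequency rises. For an evenly spaced frequency grid, the default
    ramps the cycle count linearly from 3 to 10 (assumed values).
    Returns (n_freqs, n_steps); entries whose window would overrun the signal are NaN.
    """
    x = np.asarray(x, dtype=float)
    freqs = np.asarray(freqs, dtype=float)
    if cycles is None:
        cycles = np.linspace(3.0, 10.0, len(freqs))
    step = int(round(step_s * fs))  # 200 ms -> 50 samples at 250 Hz
    centers = np.arange(0, len(x), step)
    power = np.full((len(freqs), len(centers)), np.nan)
    for i, (f, n_cyc) in enumerate(zip(freqs, cycles)):
        n = int(round(n_cyc * fs / f))  # window length in samples
        taper = np.hanning(n)
        # Scaled so that a unit-amplitude sinusoid at f yields amplitude close to 1
        kernel = taper * np.exp(-2j * np.pi * f * np.arange(n) / fs) * 2.0 / taper.sum()
        analytic = np.convolve(x, kernel, mode="same")
        half = n // 2
        valid = (centers >= half) & (centers < len(x) - half)
        power[i, valid] = np.abs(analytic[centers[valid]]) ** 2
    return power


t = np.arange(0, 4.0, 1.0 / FS_HZ)
p = tf_power(np.cos(2 * np.pi * 20.0 * t), [10.0, 20.0, 30.0])  # peak expected in the 20 Hz row
```

Letting the cycle count grow with frequency keeps low-frequency windows short enough for useful time resolution while giving high-frequency estimates enough cycles for stable power.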

Algorithm and Performance Evaluation

Spatial filtering was used both to reduce the number of channels and to enhance feature discrimination between mental tasks. The spatial filters were determined with the Common Spatial Pattern (CSP) procedure, which has been extensively validated for BCI. The log-variances of the first three and last three spatially filtered components were used as the feature vector, and linear discriminant analysis (LDA) was used for classification. During the online experiment, the spatial filters and LDA parameters were retrained before every trial: each trial was classified with a model trained on the 40 trials of the previous run plus the trials preceding it in the same run.
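A compact NumPy/SciPy sketch of the CSP, log-variance, and LDA pipeline described above is given below. It follows the standard textbook formulation (trace-normalized covariances, generalized eigenproblem, two-class LDA with pooled covariance and equal priors); it is not the authors' implementation, and all function names, including the `training_indices` helper for the retraining scheme, are hypothetical.

```python
import numpy as np
from scipy.linalg import eigh


def csp_filters(class_a, class_b, n_pairs=3):
    """CSP filters from band-passed trials shaped (n_trials, n_channels, n_samples).

    Returns (2 * n_pairs, n_channels): the first and last n_pairs solutions of
    C_a w = lambda (C_a + C_b) w.
    """
    def mean_cov(trials):
        # Trace-normalized spatial covariance, averaged over trials
        return np.mean([t @ t.T / np.trace(t @ t.T) for t in trials], axis=0)

    c_a, c_b = mean_cov(class_a), mean_cov(class_b)
    _, vecs = eigh(c_a, c_a + c_b)  # eigenvalues returned in ascending order
    picks = np.r_[np.arange(n_pairs), np.arange(-n_pairs, 0)]
    return vecs[:, picks].T


def log_var_features(trials, filters):
    """Log variance of each spatially filtered component: (n_trials, 2 * n_pairs)."""
    projected = np.einsum("fc,ncs->nfs", filters, trials)
    return np.log(projected.var(axis=2))


def lda_fit(features, labels):
    """Two-class LDA with pooled covariance and equal priors; labels are 0/1."""
    m0 = features[labels == 0].mean(axis=0)
    m1 = features[labels == 1].mean(axis=0)
    centered = np.vstack([features[labels == 0] - m0, features[labels == 1] - m1])
    pooled = centered.T @ centered / (len(features) - 2)
    w = np.linalg.solve(pooled, m1 - m0)
    b = -w @ (m0 + m1) / 2.0  # decision threshold midway between the class means
    return w, b


def lda_predict(features, w, b):
    return (features @ w + b > 0).astype(int)


def training_indices(run, trial, trials_per_run=40):
    """Hypothetical helper for the retraining scheme: all trials of run `run - 1` plus the
    trials preceding `trial` in run `run` (0-based indices, run >= 1)."""
    previous = range((run - 1) * trials_per_run, run * trials_per_run)
    current = range(run * trials_per_run, run * trials_per_run + trial)
    return list(previous) + list(current)
```

The first and last CSP components maximize the variance of one class relative to the other, so their log-variances are the most discriminative features; some implementations additionally normalize the variances by their sum before taking the logarithm.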

Because the most discriminative frequency bands are highly subject-dependent, the following candidate bands were evaluated: lower alpha [8 10] Hz (α−), upper alpha [10 13] Hz (α+), lower beta [13 20] Hz (β−), upper beta [20 26] Hz (β+), alpha [8 13] Hz (α), beta [13 26] Hz (β), alpha-beta [8 26] Hz (αβ), and [10 16] Hz (η, which in our experience works well for some subjects). A fourth-order Butterworth filter was applied to the raw EEG signals before CSP spatial filtering. A 10 × 10-fold cross-validation was used to evaluate BCI performance across the frequency bands and to select a sub-optimal frequency band.
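The band search can be organized as below: a Butterworth band-pass for each candidate band and 10 repetitions of shuffled 10-fold cross-validation. This is a sketch under assumptions: `score_fn` is a hypothetical user-supplied callback (for example, CSP + LDA trained on the band-passed training trials), the folds are not stratified by class, and zero-phase filtering is used for offline evaluation.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS_HZ = 250.0

# Candidate bands from the text (Hz)
BANDS = {
    "alpha-": (8, 10), "alpha+": (10, 13), "beta-": (13, 20), "beta+": (20, 26),
    "alpha": (8, 13), "beta": (13, 26), "alpha-beta": (8, 26), "eta": (10, 16),
}


def bandpass(eeg, band, fs=FS_HZ, order=4):
    """Zero-phase Butterworth band-pass along the last axis.

    SciPy's order-4 band-pass design has 8 poles; whether the paper's 'fourth-order' refers
    to this parameter is an assumption. An online system would need causal sosfilt instead.
    """
    sos = butter(order, band, btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, eeg, axis=-1)


def repeated_kfold(n_trials, n_splits=10, n_repeats=10, seed=0):
    """Yield (train_idx, test_idx) for n_repeats shuffles of n_splits-fold cross-validation."""
    rng = np.random.default_rng(seed)
    for _ in range(n_repeats):
        folds = np.array_split(rng.permutation(n_trials), n_splits)
        for k in range(n_splits):
            train = np.concatenate([folds[j] for j in range(n_splits) if j != k])
            yield train, folds[k]


def select_band(score_fn, n_trials, bands=BANDS):
    """Mean CV accuracy per band; score_fn(band, train_idx, test_idx) -> accuracy is hypothetical."""
    means = {
        name: float(np.mean([score_fn(band, tr, te) for tr, te in repeated_kfold(n_trials)]))
        for name, band in bands.items()
    }
    return max(means, key=means.get), means
```

Averaging over 100 train/test splits reduces the variance of the accuracy estimate for each band; because only this fixed set of bands is searched, the selected band is a practical choice rather than a guaranteed optimum.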

3 Results

Discriminative Brain Pattern Between Low and High Music in Listening and Imagination

Fig. 3 presents the R² value distribution in spatial-spectral-temporal space for music listening and imagination in subject s1. The discriminative information was mainly concentrated over the frontal cortex and lay in the 20 to 35 Hz frequency range.

Fig. 3. R² value distribution in spatial-spectral-temporal space for subject s1. (1) R² distribution across the frequency and spatial domains in the music listening task (R² averaged over the third to fifth seconds from the beginning of the trial); the color bar indicates R² values. (2) Topoplot of R² averaged between 25 and 30 Hz (Discriminative Brain Pattern) for the music perception task. (3) R² distribution across the frequency and spatial domains in the music imagination task. (4) Topoplot of R² averaged between 25 and 30 Hz for the music imagination task. (Color figure online)
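The R² maps and the DBP averaging behind Fig. 3 can be sketched as follows. The text does not define the R² index; here it is assumed to be the squared point-biserial correlation between feature values and class membership, a common choice in BCI research. The defaults of the averaging function mirror the values in the Fig. 3 caption (25 to 30 Hz, third to fifth second). Function names are hypothetical.

```python
import numpy as np


def r_squared(class_a, class_b):
    """Squared point-biserial correlation between features and class membership.

    class_a, class_b: arrays shaped (n_trials, ...), e.g. trials x channels x freqs x times.
    Returns an array shaped (...) with values in [0, 1]. Treating this as the paper's
    'R2 index' is an assumption.
    """
    x = np.concatenate([class_a, class_b], axis=0).astype(float)
    y = np.r_[np.ones(len(class_a)), np.zeros(len(class_b))]
    y = y.reshape((-1,) + (1,) * (x.ndim - 1))  # broadcast labels over feature dimensions
    xc = x - x.mean(axis=0)
    yc = y - y.mean()
    r = (xc * yc).sum(axis=0) / np.sqrt((xc ** 2).sum(axis=0) * (yc ** 2).sum())
    return r ** 2


def discriminative_brain_pattern(r2, freqs, times, band=(25.0, 30.0), window=(3.0, 5.0)):
    """Average an (n_channels, n_freqs, n_times) R2 map over a band and time window.

    Returns one value per channel, ready for a topographic plot.
    """
    f_mask = (freqs >= band[0]) & (freqs <= band[1])
    t_mask = (times >= window[0]) & (times <= window[1])
    return r2[:, f_mask][:, :, t_mask].mean(axis=(1, 2))
```

Because R² is bounded between 0 and 1 and does not depend on the sign of the difference, it is convenient for locating where two conditions differ; the sign of the underlying correlation would be needed to tell which condition has more power.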

Classification Performance of the Potential Music Imagination BCI System

For the listening and imagination classes, EEG signals were extracted from the mental task period; for the idle-state class, the segment from the first to the third second after the beginning of the trial was extracted. Table 1 summarizes the classification results: the second column gives high vs. low pitch during music listening, the third column high vs. low pitch during music imagination, and the fourth column idle state vs. imagination state.
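Converting these windows into sample indices is straightforward at 250 Hz. In this sketch the idle window (1 to 3 s) comes from the text, while ending the task window at 5.2 s, when the screen turns black, is an assumption; epochs are assumed to be aligned to trial onset. The names are hypothetical.

```python
import numpy as np

FS_HZ = 250  # sampling rate in Hz

# Windows in seconds from trial onset; the 5.2 s end of the task window is an assumption.
WINDOWS_S = {"idle": (1.0, 3.0), "task": (3.0, 5.2)}


def window_samples(window, fs=FS_HZ):
    """Convert a (start_s, stop_s) window into a [start, stop) sample slice."""
    start_s, stop_s = window
    return slice(int(round(start_s * fs)), int(round(stop_s * fs)))


def extract(epochs, name, fs=FS_HZ):
    """Cut a named window from epochs shaped (n_trials, n_channels, n_samples)."""
    return epochs[..., window_samples(WINDOWS_S[name], fs)]


epochs = np.zeros((35, 32, 2300))  # e.g. 35 retained trials, 32 channels, 9.2 s at 250 Hz
print(extract(epochs, "idle").shape, extract(epochs, "task").shape)  # (35, 32, 500) (35, 32, 550)
```

Taking the idle segment from the same trials as the task segment means the idle-versus-imagination comparison is not confounded by differences in recording time or electrode contact between trials.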

4 Discussion

In this preliminary study, we have shown that an independent BCI based on music imagination is feasible after music perception training. Classification of low versus high music imagination yielded an average accuracy of 73.5%. In this proof-of-concept investigation, the subjects first listened to the music and were then asked to imagine the music they had just heard (either the high or the low scale) in the following trial. This was done to guide and train the subjects in their music imagery.

As shown for subject s1, the oscillatory differences between high and low music listening were located over the frontal cortex, and the reactive frequency range was concentrated between 20 and 35 Hz. The activation patterns in music imagination were similar to those in music listening. This suggests that subjects’ music imagination can be trained through guided music listening followed by imagined recall of the music just heard. Unexpectedly, the oscillatory difference between high and low music listening was located over the frontal cortex rather than the auditory cortex [18]; data from more subjects will be needed to explain this discrepancy.

As this is a pilot study, more subjects will be recruited to solidify this finding. The event-related potentials (ERPs) elicited by the different music stimuli and by the instructing cue will be analyzed further to localize the brain activation sources, and ERP features will also be investigated for discrimination analysis. Subjects’ musical experience and background may also play a significant role in their ability to control a music imagination BCI, as may their engagement with the music and personal preferences [16]. These factors will be taken into account in future data collection and analysis. The ultimate goal is to enable subjects to control a BCI with music imagery alone, without a music listening cue before the imagery.

This study has several limitations. First, there is no control over the quality of the subjects’ music imagery. Second, the subjects’ motivation level can skew the results: if a subject was not motivated, the effect would be smaller [16].

In summary, the current work found a reactive frequency range concentrated between 20 and 35 Hz. The activation patterns in music imagination were found to be similar to those in music listening.

5 Conclusion

In this work, we have shown that imagination of low and high scale music can be discriminated from EEG signals and, with music-listening-guided training, could potentially be translated into a modality for independent BCI control. A music imagination based BCI system could expand the variety of BCI commands and holds potential for music composition solely through brain activity.

References

1. Vallabhaneni, A., Wang, T., He, B.: Brain–computer interface. In: He, B. (ed.) Neural Engineering, pp. 85–121. Springer, Boston (2005)

2. He, B., Gao, S., Yuan, H., Wolpaw, J.R.: Brain–computer interfaces. In: He, B. (ed.) Neural Engineering, pp. 87–151. Springer, Boston (2013)

3. Hassanien, A.E., Azar, A.T. (eds.): Brain-Computer Interface: Current Trends and Applications, vol. 74. Springer International Publishing, Cham (2015)

4. Lesenfants, D., Habbal, D., Lugo, Z., Lebeau, M., Horki, P., Amico, E., Pokorny, C., Gómez, F., Soddu, A., Müller-Putz, G., Laureys, S., Noirhomme, Q.: An independent SSVEP-based brain-computer interface in locked-in syndrome. J. Neural Eng. 11(3), 35002 (2014)

5. Kaufmann, T., Holz, E.M., Kübler, A.: Comparison of tactile, auditory, and visual modality for brain-computer interface use: a case study with a patient in the locked-in state. Front. Neurosci. 7(7 July), 1–12 (2013)

6. Allison, B., McFarland, D., Schalk, G., Zheng, S., Jackson, M., Wolpaw, J.: Towards an independent brain–computer interface using steady state visual evoked potentials. Clin. Neurophysiol. 119(2), 399–408 (2008)

7. Kevric, J., Subasi, A.: Comparison of signal decomposition methods in classification of EEG signals for motor-imagery BCI system. Biomed. Sig. Process. Control 31, 398–406 (2017)

8. Mensh, B.D., Werfel, J., Seung, H.S.: BCI competition 2003—data set Ia: combining gamma-band power with slow cortical potentials to improve single-trial classification of electroencephalographic signals. IEEE Trans. Biomed. Eng. 51(6), 1052–1056 (2004)

9. Kübler, A., Nijboer, F., Mellinger, J., Vaughan, T.M., Pawelzik, H., Schalk, G., McFarland, D.J., Birbaumer, N., Wolpaw, J.R.: Patients with ALS can use sensorimotor rhythms to operate a brain-computer interface. Neurology 64(10), 1775–1777 (2005)

10. Yao, L., Sheng, X., Zhang, D., Jiang, N., Farina, D., Zhu, X.: A BCI system based on somatosensory attentional orientation. IEEE Trans. Neural Syst. Rehabil. Eng. 4320(1), 1 (2016)

11. Ang, K.K., Guan, C., Chua, K.S.G., Ang, B.T., Kuah, C., Wang, C., Phua, K.S., Chin, Y.Z., Zhang, H.: Clinical study of neurorehabilitation in stroke using EEG-based motor imagery brain-computer interface with robotic feedback. In: 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBC 2010 (2010)

12. Ramos-Murguialday, A., Broetz, D., Rea, M., Yilmaz, Ö., Brasil, F.L., Liberati, G., Marco, R., Garcia-Cossio, E., Vyziotis, A., Cho, W., Cohen, L.G., Birbaumer, N.: Brain-machine-interface in chronic stroke rehabilitation: a controlled study. Ann. Neurol. 74(1), 100–108 (2014)

13. Vidaurre, C., Blankertz, B.: Towards a cure for BCI illiteracy. Brain Topogr. 23(2), 194–198 (2010)

14. Wolpaw, J.R., Birbaumer, N., McFarland, D.J., Pfurtscheller, G., Vaughan, T.M.: Brain–computer interfaces for communication and control. Clin. Neurophysiol. 113, 767–791 (2002)

15. Koelsch, S.: Brain correlates of music-evoked emotions. Nat. Rev. Neurosci. 15(3), 170–180 (2014)

16. Schaefer, R.S., Vlek, R.J., Desain, P.: Music perception and imagery in EEG: alpha band effects of task and stimulus. Int. J. Psychophysiol. 82, 254–259 (2011)

17. Höller, Y., Bergmann, J., Kronbichler, M., Crone, J.S., Schmid, E.V., Thomschewski, A., Butz, K., Schütze, V., Höller, P., Trinka, E.: Real movement vs. motor imagery in healthy subjects. Int. J. Psychophysiol. 87(1), 35–41 (2013)

18. Zatorre, R.J., Halpern, A.R.: Mental concerts: musical imagery and auditory cortex. Neuron 47(1), 9–12 (2005)

19. Vlek, R.J., Schaefer, R.S., Gielen, C.C.A.M., Farquhar, J.D.R., Desain, P.: Shared mechanisms in perception and imagery of auditory accents. Clin. Neurophysiol. 122(8), 1526–1532 (2011)

20. Reck Miranda, E., Sharman, K., Kilborn, K., Duncan, A.: On harnessing the electroencephalogram for the musical braincap. Comput. Music J. 27(2), 80–102 (2003)

Acknowledgement

We thank all volunteers for their participation in the study. This work is supported by the University Starter Grant of the University of Waterloo (No. 203859).

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Chen, M.L., Yao, L., Jiang, N. (2017). Music Imagery for Brain-Computer Interface Control. In: Schmorrow, D., Fidopiastis, C. (eds) Augmented Cognition. Enhancing Cognition and Behavior in Complex Human Environments. AC 2017. Lecture Notes in Computer Science(), vol 10285. Springer, Cham. https://doi.org/10.1007/978-3-319-58625-0_21

DOI: https://doi.org/10.1007/978-3-319-58625-0_21

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-58624-3

Online ISBN: 978-3-319-58625-0

eBook Packages: Computer Science, Computer Science (R0)