Abstract

Scenarios such as smart homes and their devices require novel ways of interacting. In this work we explore and develop a model for interacting with a smart home in a natural, intuitive and easy-to-learn manner, without special sensors or other controllers, based on the interpretation of complex-context images captured with an ordinary camera. We use artificial intelligence and computer vision techniques to recognize action icons in an uncontrolled environment and to identify the user's interaction gestures. Our model connects with well-known computational platforms, which communicate with devices and other residential functions. Preliminary tests demonstrated that our model meets these objectives, working under different conditions of light, distance and environment.

1 Introduction

Forms of interaction between humans and computers have evolved to reach new and diverse objects and environments of our day-to-day life. Technological advances, such as device miniaturization and wireless communication tools, have enabled the design of applications for smart homes, making everyday activities simpler, more comfortable and more intuitive. Residential automation consists of a set of sensors, equipment, services and diverse technological systems, integrated to meet basic needs of security, communication, energy management and housing comfort [1,2,3].

These scenarios demand more dynamic, natural and easy-to-learn forms of interaction. Several works present novel interaction solutions based on voice commands, facial expressions, and the capture and interpretation of gestures, the latter being the focus of this work. Systems that use free gestures interpret actions that people naturally perform to communicate, giving users an easier and more intuitive way to interact and reducing the cognitive load of information, training and learning [4]. Researchers in Human-Computer Interaction have considered this type of interaction relevant since the earliest investigations [5].

Therefore, we propose an interaction model for smart homes that requires no sensors or controllers and is based on artificial intelligence and computer vision techniques. These methods are applied to recognize interaction actions with targets dynamically distributed in a residence, using images captured by an ordinary camera, in a manner similar to a touch area. This approach aims at a cheaper, more efficient and simpler way of interacting with a smart home and its electric devices, using representative symbols and artificial intelligence techniques to recognize the user's interactions. Our work connects these techniques with well-known computational platforms, which communicate with devices and other residential functions.

This work is organized as follows: the next section presents related work in residential automation. Our model, the techniques used and the methodology are explained in the Methodology section. Experiments and results are shown in the Experiments and Results section. Finally, we conclude by discussing the results and possible future improvements and research directions.

2 Related Work

Gestures can be captured through special devices, such as sensors, accelerometers and gloves. These accessories facilitate interpretation, but they increase the total cost of the system [6]. In this sense, the work of Bharambe et al. [7] presents a system composed of an accelerometer to capture the gestures, a microcontroller responsible for identifying the collected information, and an infrared transmitter and receiver. This approach is limited in range, and the infrared transmitter and receiver must be fully aligned.

In contrast to the use of special accessories, methods based on computer vision require only a camera to capture images and automatically interpret complex scenes [8]. For example, Dey et al. [9] proposed a system in which gestures are captured and interpreted using a smartphone camera. A binary signal is generated from the captured image, analyzed graphically and transmitted via Bluetooth to a control board responsible for activating the devices in the residence.

Also among computer-vision-based methods, Pannase [10] developed a study focused on quickly detecting hand gestures. It uses algorithms for segmentation and for detecting finger positions, and finally classifies the gesture with a neural network. This study, however, supports only a limited number of gestures, and the hand must be positioned exactly in front of the camera.

In this work, we propose a model of interaction between a human and a residential environment using gestures, dispensing with special sensors or other devices and providing a more natural and intuitive way to control the functions of a smart home, for example switching electronic devices and lamps on and off, or opening and closing doors and windows. The system must be able to operate in both daytime and nighttime conditions; for this, a webcam was adapted to capture infrared light.

The next section presents the main characteristics of the proposed model.

3 Methodology

For the development of this project, artificial intelligence and computer vision techniques were applied to complex-context images in order to identify the interaction targets and the user's actions in the environment.

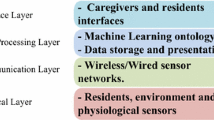

Our model is composed as presented in Fig. 1. An application on a main computer executes all procedures involving the artificial intelligence and computer vision techniques. These procedures are applied to images captured by a webcam located in the environment and pointed at where the target icons are placed. The user can arrange the action icons in the environment as desired. The application on the main computer identifies whether a user interaction has occurred. If so, the corresponding action command is sent to the control board, which is connected to the devices and other interactive functions of the residence.
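
The flow in Fig. 1 can be summarized as a per-frame loop on the main computer: check each recognized icon for an interaction and, if one occurred, send that icon's command to the control board. The Python sketch below only illustrates this data flow under stated assumptions; the `IconRegion` structure, the icon labels (`"lamp"`, `"door"`), the one-byte commands and the `send` callable are all hypothetical, since the text does not specify how commands are encoded or how the board is connected. If the board were attached over USB serial, `send` could be, for example, the `write` method of a pyserial `Serial` object.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class IconRegion:
    """An action icon located in the scene (hypothetical structure)."""
    label: str                      # class assigned by the icon classifier
    box: Tuple[int, int, int, int]  # (row0, row1, col0, col1) in the image


def process_frame(frame,
                  icons: List[IconRegion],
                  is_interaction: Callable[[object, IconRegion], bool],
                  commands: Dict[str, bytes],
                  send: Callable[[bytes], None]) -> List[str]:
    """Check every known icon in one captured frame; for each icon the user
    interacted with, send its command to the control board.
    Returns the labels of the triggered icons."""
    triggered = []
    for icon in icons:
        if is_interaction(frame, icon):
            send(commands[icon.label])  # hand the command to the board link
            triggered.append(icon.label)
    return triggered


# Usage with stand-ins for the camera frame, the detector and the board link.
sent: List[bytes] = []
icons = [IconRegion("lamp", (0, 10, 0, 10)), IconRegion("door", (0, 10, 20, 30))]
fired = process_frame(frame=None, icons=icons,
                      is_interaction=lambda f, icon: icon.label == "lamp",
                      commands={"lamp": b"L", "door": b"D"},
                      send=sent.append)
print(fired, sent)  # ['lamp'] [b'L']
```

Injecting `is_interaction` and `send` as callables keeps the decision logic on the main computer independent of both the vision method and the board's transport.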

To illustrate the operation of the proposed model, a miniature residence was used. As seen in Fig. 2, a light is switched on when the interaction with an object occurs.

3.1 The Computer Vision Model

The application on the main computer incorporates a model developed in previous research by our team. This model integrates different techniques of artificial intelligence, computer vision and image processing to detect and classify specific objects (in this case, the target interaction icons) in a complex, weakly controlled environment. From a captured image of the environment, a pre-processing step saturates red-colored pixels; visual attention is then applied to identify areas of interest in the image and discard all other visual information. In this step we modified the classic visual attention method proposed by Itti et al. [11], simplifying it to respond only to color stimuli. The areas of interest are marked as seeds, which serve as input to the segmentation process. For segmentation we used the Watershed method as developed by Klava [12]. The segmentation step generates individual elements of the target icons from the input image. These elements are classified using neural networks, and an interaction action is assigned according to the type of each icon.
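
A minimal sketch of the attention-to-segmentation steps, assuming an 8-bit RGB image as a NumPy array. It is not the team's implementation: the saliency map keeps only the broadly tuned red channel \(R = r - (g+b)/2\) of Itti et al. [11] and omits the multi-scale center-surround stage; the red-saturation pre-processing is omitted; the thresholds, the minimum seed size and the background-marker rule are illustrative assumptions; and the segmentation is a simple priority-flood form of marker-controlled watershed, not Klava's interactive implementation [12]. Classification of the resulting segments is not shown.

```python
import heapq
from collections import deque

import numpy as np

NEIGHBORS = ((1, 0), (-1, 0), (0, 1), (0, -1))  # 4-connectivity


def red_saliency(rgb: np.ndarray) -> np.ndarray:
    """Color-only saliency: broadly tuned red R = r - (g + b) / 2,
    clipped at zero and scaled to [0, 1]."""
    img = rgb.astype(np.float64)
    sal = np.clip(img[..., 0] - (img[..., 1] + img[..., 2]) / 2.0, 0.0, None)
    peak = sal.max()
    return sal / peak if peak > 0 else sal


def seed_markers(sal: np.ndarray, thresh: float = 0.5, min_size: int = 10) -> np.ndarray:
    """Label 4-connected salient areas (sal >= thresh) as seeds 1..k,
    discarding areas smaller than min_size pixels."""
    h, w = sal.shape
    labels = np.zeros((h, w), dtype=np.int32)
    k = 0
    for r0 in range(h):
        for c0 in range(w):
            if sal[r0, c0] < thresh or labels[r0, c0] != 0:
                continue
            k += 1
            labels[r0, c0] = k
            queue, area = deque([(r0, c0)]), []
            while queue:  # breadth-first flood of one salient area
                r, c = queue.popleft()
                area.append((r, c))
                for dr, dc in NEIGHBORS:
                    rr, cc = r + dr, c + dc
                    if (0 <= rr < h and 0 <= cc < w and labels[rr, cc] == 0
                            and sal[rr, cc] >= thresh):
                        labels[rr, cc] = k
                        queue.append((rr, cc))
            if len(area) < min_size:  # too small to be an icon: drop it
                for r, c in area:
                    labels[r, c] = -1  # visited, but not a seed
                k -= 1
    labels[labels < 0] = 0
    return labels


def watershed(elevation: np.ndarray, markers: np.ndarray) -> np.ndarray:
    """Marker-controlled watershed by priority flooding: unlabeled pixels are
    reached from the labeled basins in increasing order of elevation and take
    the label of the basin that reaches them first."""
    h, w = elevation.shape
    labels = markers.copy()
    heap, order = [], 0  # 'order' breaks elevation ties first-in, first-out
    for r, c in zip(*np.nonzero(labels > 0)):
        heapq.heappush(heap, (elevation[r, c], order, r, c))
        order += 1
    while heap:
        _, _, r, c = heapq.heappop(heap)
        for dr, dc in NEIGHBORS:
            rr, cc = r + dr, c + dc
            if 0 <= rr < h and 0 <= cc < w and labels[rr, cc] == 0:
                labels[rr, cc] = labels[r, c]
                heapq.heappush(heap, (elevation[rr, cc], order, rr, cc))
                order += 1
    return labels


def segment_icons(rgb: np.ndarray, bg_thresh: float = 0.1) -> np.ndarray:
    """Seeds from red saliency plus one background marker, flooded over the
    saliency gradient magnitude. Returns labels 1..k for icon candidates, 0 elsewhere."""
    sal = red_saliency(rgb)
    markers = seed_markers(sal)
    background = markers.max() + 1
    markers[(markers == 0) & (sal < bg_thresh)] = background
    gy, gx = np.gradient(sal)
    labels = watershed(np.hypot(gx, gy), markers)
    labels[labels == background] = 0
    return labels
```

Applied to a frame, `segment_icons` returns one integer label per candidate icon; each labeled region would then be cropped and passed to the classifier.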

Next, the interaction routines start, continuously capturing images and searching for possible interaction actions performed by the user. Interactions are recognized as changes in the dissimilarity coefficient between the histograms of the regions where interaction icons were found. The dissimilarity \(dSim_{cos}\) is calculated using an adaptation of the cosine similarity, defined by:

where \(n = 255\), \(h_{ini}\) corresponds to the histogram of the red channel obtained from an initial capture and \(h_{acq}\) is the histogram of the red channel acquired from subsequent captured images.
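
The equation itself is missing from this version of the text, so the sketch below assumes the common form of a cosine-based dissimilarity, \(dSim_{cos} = 1 - \sum_{i=0}^{n} h_{ini}(i)\,h_{acq}(i) / (\lVert h_{ini}\rVert\,\lVert h_{acq}\rVert)\), computed over 256-bin red-channel histograms (\(i = 0, \dots, n\) with \(n = 255\)). The authors describe their formula as an adaptation of cosine similarity, so their exact expression may differ. For non-negative histograms this value lies in [0, 1]: 0 when the icon region looks as it did in the initial capture, and 1 when its red-channel distribution no longer overlaps the initial one. The function names are illustrative.

```python
import numpy as np

N_BINS = 256  # bins i = 0..n with n = 255, one per 8-bit red intensity


def red_histogram(region: np.ndarray) -> np.ndarray:
    """Histogram of the red channel of an 8-bit RGB region (H x W x 3)."""
    red = region[..., 0].astype(np.int64).ravel()
    return np.bincount(red, minlength=N_BINS).astype(np.float64)


def cosine_dissimilarity(h_ini: np.ndarray, h_acq: np.ndarray) -> float:
    """Assumed form: 1 - cosine similarity between the two histograms."""
    norm = np.linalg.norm(h_ini) * np.linalg.norm(h_acq)
    if norm == 0:
        raise ValueError("histograms must not be empty")
    return float(1.0 - np.dot(h_ini, h_acq) / norm)


# Usage: an icon region at its initial capture, then with its top half changed.
initial = np.zeros((4, 4, 3), dtype=np.uint8)
initial[..., 0] = 200                 # uniformly red icon
covered = initial.copy()
covered[:2, :, 0] = 50                # top half now shows something darker
h_ini = red_histogram(initial)
print(cosine_dissimilarity(h_ini, red_histogram(initial)))            # 0.0
print(round(cosine_dissimilarity(h_ini, red_histogram(covered)), 4))  # 0.2929
```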

The scheme of the computer vision model is shown in Fig. 3.

3.2 The Human-House Interaction Model

The human-house interaction model is composed as previously shown in Fig. 1. The interactive icons placed in the environment were designed considering their function, simplicity and intuitive meaning. The icons were tested in diverse environments, under different lighting conditions, distances from the webcam and surrounding elements, to ensure that the computer vision model recognizes them correctly. The interactive functions were chosen for their pertinence and utility. Fig. 4 shows the five interaction icons used by our model, with their names and related functions.

It is worth mentioning that our model is extensible, and more icons can be introduced in the application.

4 Experiments and Results

The experiments were carried out in several residential environments, varying the camera position and the luminosity level, including the total absence of light. Each test consisted of attempting interactions and recording the responses obtained. The environments where the tests were performed can be seen in Fig. 5.

In total, 530 interactions were executed in lit environments, divided into 106 attempts per option. The results under these conditions are presented in Table 1 as a confusion matrix, unsuccessful interactions (UI) and individual accuracy. In addition, the total accuracy was calculated, yielding 96.04%.

In line with the objectives of this work, which include operation in nocturnal conditions, specific tests were performed for these settings. A total of 205 interactions were executed, distributed in 41 attempts per option. As before, Table 2 presents the results as a confusion matrix, unsuccessful interactions (UI) and individual accuracy. In this case, the total accuracy obtained was 96.59%.
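
The reported totals can be checked with simple arithmetic: total accuracy is the number of correctly recognized interactions divided by the number of attempts, where 530 = 5 × 106 and 205 = 5 × 41 attempts cover the five icons. Since Tables 1 and 2 are not reproduced here, the sketch below only recovers the correct counts implied by the reported percentages; the function name is ours. Because the text states that no action was triggered in place of another, the remaining attempts would all be unsuccessful interactions (UI).

```python
def implied_correct(attempts: int, reported_pct: float) -> int:
    """Return the unique number of correct interactions k for which
    100 * k / attempts rounds to reported_pct at two decimal places."""
    candidates = [k for k in range(attempts + 1)
                  if abs(100 * k / attempts - reported_pct) <= 0.005]
    if len(candidates) != 1:
        raise ValueError(f"ambiguous or inconsistent: {candidates}")
    return candidates[0]


for setting, attempts, pct in [("light", 530, 96.04), ("dark", 205, 96.59)]:
    correct = implied_correct(attempts, pct)
    print(setting, correct, attempts - correct)
# light 509 21
# dark 198 7
```

That is, 509/530 ≈ 96.04% with 21 UI in lit environments and 198/205 ≈ 96.59% with 7 UI at night.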

Fig. 6 presents a plot of interaction actions with their respective dissimilarity coefficients, obtained using Eq. 1, representing the human-house interaction value (\(\theta _{HHI}\)). Only \(\theta _{HHI}\) values above 0.8 are considered a user interaction and trigger the sending of the action command. In the test run shown in Fig. 6, twenty-nine interactions with icons of different functions were attempted, and all of them were recognized correctly.
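
The decision rule can be sketched as a threshold on the sequence of \(\theta _{HHI}\) values computed for one icon, one value per captured frame. The 0.8 threshold comes from the text; treating a run of consecutive frames above it as a single interaction, so that a hand resting on the icon does not resend the command on every frame, is an assumption, as the text does not state how repeated detections are handled.

```python
from typing import List, Sequence

THETA_HHI = 0.8  # threshold reported in the text


def interaction_events(theta: Sequence[float], threshold: float = THETA_HHI) -> List[int]:
    """Return the frame indices at which theta rises above the threshold.
    Consecutive frames above the threshold count as one interaction (assumed)."""
    events: List[int] = []
    above = False
    for i, value in enumerate(theta):
        now_above = value > threshold  # strictly above, as in the text
        if now_above and not above:
            events.append(i)
        above = now_above
    return events


print(interaction_events([0.05, 0.1, 0.93, 0.97, 0.2, 0.85, 0.8, 0.1]))  # [2, 5]
```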

The tests presented consistent results in both lighting settings and scenarios, reaching high accuracy values. Notably, no action was triggered in place of another, corroborating the efficiency of the adopted computer vision model. It is also noteworthy that unsuccessful interactions are defined as user interactions not recognized by the model, not as interaction actions executed arbitrarily; the latter would be a more problematic situation.

Lastly, the interaction actions were detected with comfortable margins, with \(\theta _{HHI}\) values close to one for the actually intended actions and close to zero for the others, demonstrating the robustness of the model.

5 Conclusions

In this work, an interaction model for smart homes based on artificial intelligence and computer vision techniques, requiring no sensors or controllers, was proposed. The proposed model was able to detect and recognize the interaction icons correctly in an uncontrolled environment, at different distances between the camera and the target icons and under different luminosity conditions, rarely requiring parameter changes or any type of calibration.

As presented in the previous section, the user's interactions were correctly identified in almost every attempt, which reinforces confidence in the proposed model.

Additionally, our model works in day and night conditions using only an ordinary camera, dispensing with special sensors or other controllers, which allows a low-cost, easy-to-learn and more natural form of interaction using only gestures. These characteristics were made possible by the use of artificial intelligence and computer vision techniques, which shift the complexity and cognitive effort to the model rather than to the users.

In conclusion, the model demonstrated satisfactory results, offering a concrete new option for controlling a residence. As future work, improvements to the interaction detection method can be investigated by applying more intelligent approaches. Furthermore, a segmentation technique with lower computational cost and better segment quality could be developed.

References

1. Chan, M., Hariton, C., Ringeard, P., Campo, E.: Smart house automation system for the elderly and the disabled. In: IEEE International Conference on Systems, Man and Cybernetics. Intelligent Systems for the 21st Century, vol. 2, pp. 1586–1589. IEEE (1995)

2. Lee, K.Y., Choi, J.W.: Remote-controlled home automation system via Bluetooth home network. In: SICE 2003 Annual Conference, pp. 2824–2829. IEEE (2003)

3. Ahmim, A., et al.: Design, implementation of a home automation system for smart grid applications. In: 2016 IEEE International Conference on Consumer Electronics (ICCE), pp. 538–539. IEEE (2016)

4. Valli, A.: Natural interaction white paper (2007). http://www.naturalinteraction.org/images/whitepaper.pdf

5. Aggarwal, J.K., Cai, Q.: Human motion analysis: a review. In: Proceedings of Nonrigid and Articulated Motion Workshop. IEEE (1997)

6. Erol, A., et al.: A review on vision-based full DOF hand motion estimation. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (CVPR Workshops). IEEE (2005)

7. Bharambe, A., et al.: Automatic hand gesture based remote control for home appliances. Int. J. Adv. Res. Comput. Sci. Softw. Eng. 5(2), 567–571 (2015)

8. Jain, A.K., Dorai, C.: Practicing vision: integration, evaluation and applications. Pattern Recogn. 30(2), 183–196 (1997)

9. Dey, S., et al.: Gesture controlled home automation. Int. J. Emerg. Eng. Res. Technol. 3, 162–165 (2015)

10. Pannase, D.: To analyze hand gesture recognition for electronic device control. Int. J. 2(1), 44–53 (2014)

11. Itti, L., et al.: A model of saliency-based visual attention for rapid scene analysis. IEEE Trans. Pattern Anal. Mach. Intell. 20(11), 1254–1259 (1998)

12. Klava, B.: Segmentação interativa de imagens via transformação watershed (Interactive image segmentation via watershed transform). Master's thesis, Instituto de Matemática e Estatística, Universidade de São Paulo (2009)

Acknowledgments

The authors would like to thank the Brazilian National Research Council (CNPq/PIBIC/UFS/PVE47492016) for the financial support provided for this research.

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Santos, B.F.L., de Andrade Santos, I.B., Guimarães, M.J.M., Benicasa, A.X. (2017). Human-House Interaction Model Based on Artificial Intelligence for Residential Functions. In: Stephanidis, C. (eds) HCI International 2017 – Posters' Extended Abstracts. HCI 2017. Communications in Computer and Information Science, vol 714. Springer, Cham. https://doi.org/10.1007/978-3-319-58753-0_51

DOI: https://doi.org/10.1007/978-3-319-58753-0_51

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-58752-3

Online ISBN: 978-3-319-58753-0

eBook Packages: Computer Science, Computer Science (R0)