Abstract

Next-generation sequencing (NGS) technologies initiated a revolution in genomics, producing massive amounts of biological data and the consequent need for adapting current computing infrastructures. Multiple alignment of genomes, analysis of variants or phylogenetic tree construction, with quadratic polynomial complexity in the best case are tools that can take days or weeks to complete in conventional computers.

Most of these analysis, involving several tools integrated in workflows, present the possibility of dividing the computational load in independent tasks allowing parallel execution. Determining adequate load balancing, data partitioning, granularity and I/O tuning are key factors for achieving suitable speedups.

In this paper we present a coarse-grain parallelization of GH caller (Genotype/Haplotype caller), a tool used in population genomics workflows that performs a probabilistic identification process to account for the frequency of variants present between population individuals. It implements a master-worker model, using the standard Message Passing Interface (MPI), and concurrently and iteratively distributes the data among the available worker processes by mapping subsets of data and leaving the orchestration to the master process. Our results show a performance gain factor of 260x using 64 processes and additional optimizations with regard to the initial non-parallelized version.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

More than a decade ago bioinformatics analysis experienced a major revolution with the introduction of NGS technologies, able to produce millions of DNA fragments at very high speed [11, 12]. Higher volumes of experimental data have a great impact in multiple areas, where an increase in the amount of observations improves the statistical power and hence the accuracy of results. Variability analysis in population genomics is an example where researchers take advantage of large-scale data volumes by incorporating more individuals and increasing DNA coverage in their experiments [16]. Originally driven by theoretical approaches, population genomics needs to test their models and validate its hypothesis using large amounts of sequencing data which require high performance technologies for its processing. In this context, population genomics has become a data-driven discipline. By contrast, there is a wide gap between data production and current processing capabilities. This divergence results in the need of providing new methods and solutions in the field of high performance computing. A typical case of variant analysis involves the identification, catalog, and map of variations at a single position (locus) in a DNA sequence among individuals, also known as single nucleotide polymorphism or SNP. The number of tools and its relation inside analytical workflows depends on each specific study [13], but we can identify three common stages illustrated in Fig. 1. First stage is performed to prepare raw input data (preprocess), followed by a main processing stage like SNP calling (process), and a final stage where results are arranged for its review.

Bioinformatics tools like Samtools [8], GATK [1] or ANGSD [7] have been mainly designed for detecting SNPs while keeping lower false discovery rates, but not for the analysis of variability. In this work we present a coarse-grain parallelization of the software Genotype/Haplotype caller (GH caller) [13]. This program is developed for the analysis of variability and implements the Roesti et al. [14] algorithm to accurately account the number of effective positions and the frequency of the variants, making this tool highly suitable for population genomics studies. We show that our parallel implementation can provide a performance advantage when using a data-intensive computing system (cluster).

The remaining of the paper is organized as follows. Section 2 reviews the original serial application GH caller. Our proposal and MPI implementation are described in Sect. 3 while its performance is analyzed in Sect. 4. Final conclusions and outlines of our future work are described in Sect. 5.

2 GH Caller

GH caller implements the Roesti algorithm [14], which is based in the algorithms proposed by Hohenhole et al. [4] and Lynch [10]. The main difference with other SNP callers is that GH caller counts the number of chromosomes that are effectively read per site, so that it makes unbiased in relation to the frequency of the variants for neutrality tests and to the calculation of the nucleotide variability.

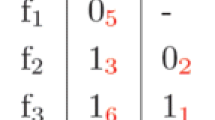

As input, GH caller takes pileup or mpileup file formats obtained from bam files [9] produced in previous steps of a variant analysis workflow. The pileup or mpileup format is a text-based format for summarizing the base calls of aligned reads to a reference sequence. This format describes the base-pair information at each chromosomal position, and one line contains the information of all the individuals at this position (locus). The output of GH caller is a fasta file with two sequences per individual and a length equal to the total length of the input sequence in mpileup.

Table 1 shows an example of sizes used as input and output data, and execution time of GH caller using these data sets. First column represents the number of individuals (bam files) used to create the mpileup input file; second column indicates the total size of the resulting mpileup. These files were obtained from 50 different bam files, and represent only one chromosome (chromosome 1) from the pig genome. Third column shows the size of the output fasta file. Last column shows the elapsed execution time using the original application. These figures show that the execution time increases with the problem size. For the case of 50 individuals and one chromosome (an input file of 283 GBytes) the time elapsed exceeds 60.000 s. It is not hard to foresee that as soon as NGS costs drop another fraction, researchers will be willing to analyze full genomes what it will easily require several days to process. These evidences make this problem worth to be analyzed in order to reduce execution times and improve resource usage.

In order to analyze the original application (serial), Fig. 2 shows the schema of how data is processed through the main flow of execution. The main steps are: First, it obtains all the information needed to apply the SNP algorithm at a given position (locus), reading a full line from the mpileup file. Next, for each locus GH caller applies the SNP calling algorithm and generates the corresponding output. Finally, all results are stored in memory and, at the end of execution, they are written to disk. Because all information is self-contained in each locus and there is no data dependency to apply the SNP calling algorithm, we can ensure that this problem is embarrassingly parallel, and hence it can be divided into components that can be executed concurrently. Next section will shows the strategy applied to parallelize this application.

3 Parallelization

The parallel implementation takes advantage of the inherent data parallelism found in the Roesti algorithm [14]. As we shown in previous section, this algorithm is applied independently in each site (locus), so this problem is embarrassingly parallel. Thanks to this property, we can split input data in groups of n loci and distribute them in chunks among the available processing units, where all the computations will be performed in parallel. The main parallelization phases are the following:

-

(1)

All processors initialize the parallel environment;

-

(2)

the main process (master) indexes the input file and maps the input data to other processes (workers);

-

(3)

the parallel computations start asynchronously on each worker. Locally, each process reads and parses a subset of data (‘Read’ and ‘Parse’), applies the base calling algorithm to these data (‘Process’), and finally writes his partial results to the final fasta output file (‘Write’);

-

(4)

if it was selected as a command line option, a synchronization point (barrier) is used to allow the master process append a reference/outgroup sequence.

Phases 1 and 3 are independent and only need synchronization, hence are performed in parallel, while phases 2 and 4 are serial and must be performed in order. The master-worker model implemented is illustrated in Fig. 3.

Domain Decomposition

Our application uses the standard Message Passing Interface (MPI) [3] and distributes the data among the available processors by mapping subsets of data (hereinafter “chunks”) in an uniform way, giving one to each worker process at a time. Index and map operations are carried out by the master process. All workers open the input file and read the part of the assigned data. Finally, they process its data using the algorithm described in the previous section (Fig. 4).

This master-worker model provides two main advantages: First, it allows the implicit parallelization of input/output operations along all workers. Second, as not all these chunks needs the same time to be processed, the master process can apply a load balancing strategy that distributes chunks dynamically across multiple workers. Futhermore, each chunk does not need to be replicated at the local memory of any other process, so memory used is released when data is written to disk: this allows GH caller to take advantage of working with data sets without size limits and use the whole memory available to the bunch of allocated processors, at the same time.

Pre-calculating Complex Operations

As described in Sect. 2, to complete the SNP calling process, we should apply the same formulas to all positions (loci). Since the scope of these operations is well-known and limited to a set of numbers, we created an array that holds a set of pre-computed results and use it as a Look-Up-Table (LUT). This approach allows to transform a set of slow complex calculations into faster memory accesses. As a result, we reduced a fraction of high cpu costs accumulated over all the SNP calling iterations.

4 Experimentation and Results

The main objective of the experiments proposed here are to evaluate the benefits obtained in our parallel approach by comparing both applications, serial and parallel versions, in terms of effective usage of variable computational resources using the same data.

Experimental Platform

The experimental platform used is a cluster composed by 8 nodes. Each node has 12 cores in 2 Intel Xeon X5660 processors with a frequency of 2.8 GHz, and 96 GBytes of RAM. The total number of available cores on this platform is 96 with 768 GBytes of RAM. These nodes are connected via 10 Gbit Ethernet links and run Scientific Linux version 6.3.

Our application was evaluated using the parallel file system Lustre, version 2.5.3 configured with 1 Metadata Server (MDS) and 2 object storage servers (OSS), which were connected to the same 10 Gbit Ethernet network. These Lustre servers manage up to 8 Object Storage Target (OST, that stores the data) with a size of 10 TBytes each. OSTs are served by two Storage Arrays (SAN) via 8 Gbit/s fiber channel links. A total of 80 TBytes of space is available to the entire cluster with a default stripe size of 1 Mbyte. We used mpicxx for OpenMPI version 1.8.8, and gcc 4.9.1 to compile all the codes, with −03 optimization enabled.

Experimental Setup

The performance of GH caller is evaluated using the execution time, speedup, and efficiency. These metrics are important because reducing execution time is the main concern to parallelize our application, speedup allows us to know how performs our solution while scales up the number of resources, and efficiency indicates how much of the resources are being used by our implementation.

First, we obtain the execution time of the original GH caller (serial) using only one core as computational resources (\(T_{1}\)). This value is used as a baseline to compare gains obtained regarding with the execution time used by our new parallel version (\(T_{N}\)). Although there are different definitions of speedup [15], we define the relative speedup obtained by the parallel program, Q, when solving a instance of instance I of size i, using NP processes as follows:

Where \(T_{(I,1)}\) is the time to solve I using program Q and 1 process. Because our application uses a master-worker scheme, this value corresponds to \(T_{I,2}\) (2 processes using 2 cores). \(T_{(I,NP)}\) is the time to solve I using program Q and NP processes.

We performed each experiment ten times and kept the average execution time. In all the experiments performed we used the case of 10 individuals, all from the chromosome 1 of the pig genome. Sizes of input and output files used in this example are show in first row of Table 1.

Execution Time and Scalability

Table 2 shows all results obtained from serial and parallel execution, scaling up the parallel implementation from 2 to 96 cores, and showing the obtained execution times, relative speedup, efficiency and gains with respect to the original version. As we can observe, execution times has been drammatically reduced thanks to the parallelization, while maintaining high efficiency figures (above \(80\%\)) until it reaches 64 processes, where the benefits of adding more resources begins to decrease.

In order to clearly show and appreciate gains obtained respect the original version, Fig. 5 plots and compares execution time of different implementations of GH caller. First column represents the original serial implementation, whereas next columns are results from parallel executions. As can be observed, using only 2 processes - a Master and a Worker - using this parallel implementation it reduces in 6x total execution time, due to improvements made in SNP algorithm internal calculations, parallelization of input/output tasks, and use of buffers to reduce total number of input/output operations. Furthermore, if we compare execution time using 64 cores, our results show a significant reduction in the time with respect to the original version, from 13,000 s to nearly 50 s. Looking at these results, we can observed that the execution time can be reduced by adding computational resources up to 260x, by using 64 cores and MPI processes. Nevertheless, these gains start to decrease when using all the available computational resources, a total of 96 cores, proving that there are some scalability issues.

In order to show plainly these issues, at Fig. 6 we plot relative speedup and efficiency. Dashed lines at these figures represent linear speedup and ideal efficiency, respectively. As described above, there are no data dependencies processing each chunk, as a result we can expect a linear speedup when scaling up the number of resources. However, we observe scalability issues using more than 64 processes, and these problems can be appreciate more clearly observing the efficiency values obtained. In fact, relative speedup obtained with 96 processes is limited to 39, clearly showing a low efficiency, with a value obtained of 42% of effectiveness. This low efficiency will be discussed in the next section.

Bottleneck Analysis

The work done in this section should distinguish the overhead introduced by each phase and identify the main reasons. First, like other analysis tools [6], we intercept the MPI calls to instrument the application. We use the MPI Parallel Environment (MPE) library [17] in our parallel application to log custom events and perform further analysis. Next, as MPE logging is accompanied by very precise time stamps, we used output log traces created by MPE in clog2 format to profile all executions and extract the execution time used in all application phases described in Sect. 3.

Figure 7 shows the total CPU time used by all processes in each phase: read the buffer from disk, parse data, apply SNP caller algorithm (process), and finally write results to disk. It clearly shows the impact of contention on the I/O system when we scale up the number of MPI processes. Ideally, with no overhead, the sum of total execution time used by all the processes in each phase should remain constant, but we can observe an overhead in both read and write phases, but it is particularly relevant at the last one. Indeed, when a limited number of processes (\(np<64\)) perform read and/or write operations, accumulated time used in all phases remains almost constant. Nevertheless, when we scale up the number of processes, the impact of I/O, specially in write operations, increases execution time more than 2x over the ideal using 96 processes.

We can conclude that when scaling up the number of MPI processes (\(np>64\)), it ends in a situation with I/O contention during the write phase. In order to mitigate this situation, that leads in a poor scalability, future work should be oriented to improve this writing phase, in a way that results optimal to the underlying distributed file system characteristics.

5 Conclusions and Future Work

In this work we have described the parallelization of GH caller using a master-worker strategy with the MPI library. We have shown that our parallel implementation can provide a performance advantage using a data-intensive computing system (cluster), reaching a performance gain factor of 260x using 64 processes and additional optimizations with regard to the initial non-parallelized version. Although execution time can considerably be reduced by adding more computational resources, we measured a poor efficiency when we scale up the number of MPI processes above a certain limit. The main reason is that our implementation is not optimized for parallel I/O and cannot take advantage of existing advanced techniques in this field, thus leading to poor performance when there are many (\(np>64\)) MPI processes writing to the same output file [2]. Future work could explore the possibility of applying different techniques already employed by specialized input/output libraries in high performance computing, like ROMIO (a MPI-IO implementation) [5].

Source code and instructions to compile are freely available to download at https://bioinformatics.cragenomica.es/projects/ghcaller.

References

Cheng, A.Y., Teo, Y.Y., Ong, R.T.H.: Assessing single nucleotide variant detection and genotype calling on whole-genome sequenced individuals. Bioinformatics 30(12), 1707–1713 (2014)

Corbett, P., et al.: Overview of the MPI-IO Parallel I/O Interface. In: Jain, R., Werth, J., Browne, J.C. (eds.) Input/Output in Parallel and Distributed Computer Systems, vol. 362, pp. 127–146. Springer, Heidelberg (1996). doi:10.1007/978-1-4613-1401-1_5

Forum, M.P.: MPI: a message-passing interface standard. Technical report, Knoxville, TN, USA (1994)

Hohenlohe, P.A., Bassham, S., Etter, P.D., Stiffler, N., Johnson, E.A., Cresko, W.A.: Population genomics of parallel adaptation in threespine stickleback using sequenced RAD tags. PLoS Genet 6(2), e1000862 (2010)

Liao, W.K., Choudhary, A.N.: Dynamically adapting file domain partitioning methods for collective I/O based on underlying parallel file system locking protocols. In: SC 2008, p. 3. IEEE/ACM (2008). http://dblp.uni-trier.de/db/conf/sc/sc2008.html#LiaoC08

Knüpfer, A., Brunst, H., Doleschal, J., Jurenz, M., Lieber, M., Mickler, H., Müller, M.S., Nagel, W.E.: The vampir performance analysis tool-set. In: Resch, M., Keller, R., Himmler, V., Krammer, B., Schulz, A. (eds.) Tools for High Performance Computing, pp. 139–155. Springer, Heidelberg (2008). doi:10.1007/978-3-540-68564-7_9

Korneliussen, T., Albrechtsen, A., Nielsen, R.: ANGSD: analysis of next generation sequencing data. BMC Bioinform. 15(1), 356 (2014)

Li, H.: A statistical framework for SNP calling, mutation discovery, association mapping and population genetical parameter estimation from sequencing data. Bioinformatics 27(21), 2987–2993 (2011)

Li, H., Handsaker, B., Wysoker, A., Fennell, T., Ruan, J., Homer, N., Marth, G., Abecasis, G., Durbin, R.: 1000 genome project data processing subgroup: the sequence alignment/map format and samtools. Bioinformatics 25(16), 2078–2079 (2009)

Lynch, M.: Estimation of nucleotide diversity, disequilibrium coefficients, and mutation rates from high-coverage genome-sequencing projects. Mol. Biol. Evol. 25(11), 2409–2419 (2008)

Mardis, E.R.: A decade’s perspective on DNA sequencing technology. Nature 470(7333), 198–203 (2011). http://dx.doi.org/10.1038/nature09796

Metzker, M.L.: Sequencing technologies - the next generation. Nat. Rev. Genet. 11(1), 31–46 (2010)

Nevado, B., Ramos-Onsins, S.E., Perez-Enciso, M.: Resequencing studies of nonmodel organisms using closely related reference genomes: optimal experimental designs and bioinformatics approaches for population genomics. Mol. Ecol. 23(7), 1764–1779 (2014)

Roesti, M., Hendry, A.P., Salzburger, W., Berner, D.: Genome divergence during evolutionary diversification as revealed in replicate lake-stream stickleback population pairs. Mol. Ecol. 21(12), 2852–2862 (2012)

Sun, X.H., Gustafson, J.L.: Toward a better parallel performance metric. Parallel Comput. 17(10–11), 1093–1109 (1991). http://dx.doi.org/10.1016/S0167-8191(05)80028-6

Wetterstrand, K.: DNA Sequencing Costs: Data from the NHGRI Genome Sequencing Program (GSP). http://www.genome.gov/sequencingcosts

Wu, C.E., Bolmarcich, A., Snir, M., Wootton, D., Parpia, F., Chan, A., Lusk, E., Gropp, W.: From trace generation to visualization: a performance framework for distributed parallel systems. In: Proceedings of SC 2000: High Performance Networking and Computing, November 2000

Acknowledgments

We would like to thank the original author of GH caller [13], Bruno Nevado, for his comments and support. We are also very grateful with Joan Jené for the support and help provided.

This work was supported by Ministerio de Ciencia y Tecnología (Spain) under project number TIN2014-53234-C2-1-R, and Ministerio de Economía y Competitividad (grant AGL2013-41834-R) to S.E.R.-O.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Navarro, J., Vera, G., Ramos-Onsins, S., Hernández, P. (2017). Improving Bioinformatics Analysis of Large Sequence Datasets Parallelizing Tools for Population Genomics. In: Desprez, F., et al. Euro-Par 2016: Parallel Processing Workshops. Euro-Par 2016. Lecture Notes in Computer Science(), vol 10104. Springer, Cham. https://doi.org/10.1007/978-3-319-58943-5_37

Download citation

DOI: https://doi.org/10.1007/978-3-319-58943-5_37

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-58942-8

Online ISBN: 978-3-319-58943-5

eBook Packages: Computer ScienceComputer Science (R0)