Abstract

Unordered graph algorithms can offer efficient resource utilization, which is advantageous for performance in a distributed setting. Unordered execution allows for parallel computation without synchronization. In unordered algorithms, work is data-driven and can be performed in any order, refining the result as the algorithm progresses. Unfortunately, a sub-optimal work ordering may lead to more time spent on correcting the results than on useful work. On HPC systems, the issue is compounded by the irregular nature of distributed graph algorithms, which makes them sensitive to the whole software/hardware stack, collectively referred to as the runtime. In this paper, we consider an example of such algorithms: Distributed Control (DC) single-source shortest paths (SSSP). DC relies on performance gains stemming from the inherent asynchrony of unordered algorithms while optimizing work ordering locally. We demonstrate that the scheduling policy of a distributed runtime can prevent effective work ordering optimization. We show that lifting and delegating some scheduling decisions to the algorithm level can result in significantly better performance. We propose that this strategy can be useful for performance engineering.


1 Introduction

Large data sets in application areas such as physical networks, social media, bioinformatics, genomics, and marketing, to name a few, are well represented by graphs and studied using graph analytics. The ever-increasing size of data forces graph analytics to be performed on distributed systems, including supercomputers. It is anticipated that the largest and most complex of such data sets, notably in precision medicine, require or will require exascale computing resources. Achieving exascale is a nontrivial undertaking that demands a concerted effort at all levels of the software/hardware stack. In order to utilize modern systems, distributed graph algorithms need to be designed to scale. Scaling in turn requires that the algorithms are designed in a manner that maximizes asynchrony. Unfortunately, supercomputing resources are notoriously inefficient for irregular applications.

Irregular applications like graph algorithms may exhibit little locality, rarely require any significant computation per memory access, and result in high-rate communication of small messages. In graph applications, work items are generated in an unpredictable pattern. This makes the performance of distributed graph algorithms dependent on the whole software/hardware stack, which includes not just the algorithm itself but all levels of the runtime and the hardware. The sensitivity to the runtime is correlated with the level of achieved asynchrony [7, 21]. Moreover, it has been shown that performance further depends on the type of input graph [11], and even on the starting point within the same input graph [5].

In this paper, we propose a way to ameliorate these issues. Our approach is motivated by the recognition that formulating an algorithm to exploit optimistic parallelism [14] is contingent upon adequate assistance from the runtime. Design choices for general-purpose runtime systems are driven by the need to support a wide range of applications at scale. Yet, for many applications, a specific interleaving of the execution of algorithm logic and runtime logic is necessary to achieve performance. While dynamic adaptive runtime systems, such as HPX-5 [10], can keep bookkeeping information to help an algorithm perform better, a mechanism that uses the application programmer’s insight could improve performance even further. Note that any distributed graph algorithm consists of three parts: work items, data structures, and an application-level scheduler. To achieve efficient optimistic parallelism, the application developer can provide an application-aware scheduling policy to be incorporated into the runtime-level scheduler. In this way, the runtime system can use the programmer’s knowledge of the particular algorithm and provide performance benefits through better scheduling. Here we propose a mechanism to do so.

Specifically, we implement a family of unordered algorithms [20] for SSSP in HPX-5, based on an earlier implementation of distributed control (DC) SSSP [21]. We have chosen SSSP because SSSP and its variation, Breadth First Search (BFS), appear as kernels in many other graph applications. These include, for example, betweenness centrality and connected components. Additionally, they are good representative problems for studying system behavior, as proposed by the Graph500 benchmark [17]. Previously, we have categorized and demonstrated the relevance of a detailed description of the runtime used to execute graph algorithms, and shown that DC is particularly sensitive to lower-level details of the runtime [6, 7]. Here we study the effects of the runtime-level scheduler and network progression. We propose to incorporate an application-level plug-in scheduler into a general-purpose asynchronous many-task runtime.

The paper is organized as follows: In Sect. 2, we briefly summarize the DC and \(\varDelta \)-stepping algorithms for SSSP. We use \(\varDelta \)-stepping for comparison. In Sect. 3, we discuss the HPX-5 scheduler and the influence of the HPX-5 runtime on graph traversal algorithms, DC in particular. Next, in Sect. 4, we introduce our refinements of the basic DC algorithm based on adaptive network progress frequency and flow control. We compare the performance of our proposed algorithm with that of other algorithms in Sect. 5. Finally, we discuss related work in Sect. 6, and we conclude in Sect. 7.

2 Background

Let us denote an undirected graph with n vertices and m edges by G(V, E). Here \(V=\{v_1, v_2,\ldots ,v_n\}\) and \(E=\{e_1, e_2,\ldots ,e_m\}\) represent the vertex set and the edge set, respectively. Each edge \(e_i\) is a triple \((v_j, v_k, w_{jk})\) consisting of two endpoint vertices and the edge weight. We assume that each edge has a nonzero cost (weight) for traversal. In the single-source shortest path (SSSP) problem, given a graph G and a source vertex s, we are interested in finding the shortest distance from s to every other vertex in the graph. In this section we briefly describe the basic Distributed Control based SSSP algorithm [21] and the \(\varDelta \)-stepping algorithm [15]. Both of these algorithms approximate the optimal work ordering of Dijkstra’s sequential SSSP algorithm [2], but each does so in a different way.

2.1 Basic Distributed Control Algorithm

DC is a work scheduling method that removes the overhead of synchronization and global data structures while providing partial ordering of work items according to a priority measure. The algorithm starts by initializing the distance map and relaxing the source vertex. In every iteration, a worker removes a work item (a vertex and distance pair) from its thread-local priority queue and relaxes the vertex targeted by the work item. Vertex relaxation checks whether the distance sent to a vertex v is better than the distance already in the distance map. If it is, it sends a relax message (work item) to every neighbor \(v_n\) of v with a new distance computed from v’s distance \(d_v\) and the weight of the edge between v and \(v_n\). A receive handler receives the messages sent from the relax function and inserts the incoming work items into the thread-local priority queue. When a handler finishes executing, it is counted as finished in termination detection. Note that there is no synchronization barrier in the algorithm. All ordering is achieved locally in thread-local priority queues, and the ordering performed at the thread level adds up to an approximation of a perfect global ordering.
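The following single-process Python sketch illustrates the structure described above. It is only a simulation under stated assumptions, not the HPX-5 implementation: the names `dc_sssp`, `send`, and `num_workers` are hypothetical, workers take turns in a round-robin loop instead of running concurrently, and vertices are assumed to be integers owned by worker `v % num_workers`.

```python
import heapq

def dc_sssp(adj, source, num_workers=2):
    """Simulate DC SSSP with one priority queue per worker and no global barrier.

    adj maps an integer vertex to a list of (neighbor, weight) pairs.
    Returns the distance map and the number of work items processed.
    """
    dist = {v: float("inf") for v in adj}
    queues = [[] for _ in range(num_workers)]  # stand-ins for thread-local queues

    def send(v, d):
        # Plays the role of the receive handler: the work item lands in the
        # priority queue of the worker that owns v (assumed ownership rule).
        heapq.heappush(queues[v % num_workers], (d, v))

    send(source, 0)
    processed = 0
    while any(queues):                 # stand-in for termination detection
        for q in queues:               # each worker takes its locally best item
            if not q:
                continue
            d, v = heapq.heappop(q)
            processed += 1
            if d < dist[v]:            # relax: keep only improving distances
                dist[v] = d
                for u, w in adj[v]:
                    send(u, d + w)
    return dist, processed

graph = {
    0: [(1, 4), (2, 1)],
    1: [(0, 4), (2, 2), (3, 5)],
    2: [(0, 1), (1, 2), (3, 8)],
    3: [(1, 5), (2, 8)],
}
distances, processed = dc_sssp(graph, source=0)
```

Because each worker orders only its own queue, some work items may be processed with distances that are later improved; the count returned as `processed` makes that extra work visible.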

2.2 \(\varDelta \)-Stepping

\(\varDelta \)-Stepping approximates the ideal priority ordering by arranging work items into distance ranges (buckets) of size \(\varDelta \) and executing buckets in order. In each epoch i, vertices with distances in the range \([i\varDelta , (i+1)\varDelta )\), contained in bucket \(B_i\), are processed asynchronously by worker threads. Within a bucket, work items are not ordered and can be executed in parallel. Processing a bucket may produce extra work for the same bucket or for successive buckets. After processing each bucket, all processes must synchronize before processing the next bucket to maintain the approximation of work item ordering. The more buckets there are (the smaller the \(\varDelta \) value), the more time is spent on synchronization. Conversely, the fewer buckets there are (the larger the \(\varDelta \) value), the more sub-optimal work the algorithm generates, because larger buckets provide less ordering. With \(\varDelta = 1\), \(\varDelta \)-stepping produces an ordering equivalent to that of Dijkstra’s algorithm (a priority queue ordering of all work items).
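The bucket structure can be sketched in a few lines of sequential Python. This is a simplified illustration, not the algorithm of [15] in full: it omits the distinction between light and heavy edges, processes each bucket in a single thread, and marks the point where a distributed implementation would synchronize only with a comment. The function name `delta_stepping` and the `epochs` counter are assumptions made for the example.

```python
from collections import defaultdict

def delta_stepping(adj, source, delta):
    """Simplified bucketed SSSP; returns the distance map and the epoch count.

    adj maps a vertex to a list of (neighbor, weight) pairs with positive weights.
    """
    dist = {v: float("inf") for v in adj}
    buckets = defaultdict(list)        # bucket index -> unordered work items
    buckets[0].append((source, 0))
    epochs = 0
    while buckets:
        i = min(buckets)               # next non-empty bucket in distance order
        epochs += 1
        # Items inside bucket i are unordered; relaxing one of them may add
        # new items to bucket i itself or to later buckets.
        while buckets.get(i):
            v, d = buckets[i].pop()
            if d < dist[v]:
                dist[v] = d
                for u, w in adj[v]:
                    nd = d + w
                    buckets[int(nd // delta)].append((u, nd))
        del buckets[i]                 # a global barrier would sit here
    return dist, epochs

graph = {
    0: [(1, 4), (2, 1)],
    1: [(0, 4), (2, 2), (3, 5)],
    2: [(0, 1), (1, 2), (3, 8)],
    3: [(1, 5), (2, 8)],
}
distances, epochs = delta_stepping(graph, source=0, delta=3)
```

Choosing a very large `delta` puts all work into one bucket, so the loop runs a single epoch with no ordering at all, which is the trade-off the paragraph above describes.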

3 Interaction of DC with the HPX-5 Scheduler

The HPX-5 runtime system is an initial implementation of the ParalleX model [8]. HPX-5 represents work as parcels. The HPX-5 runtime scheduler is responsible for executing actions associated with parcels. It is a multi-threaded, cooperative, work-stealing thread scheduler, in which heavy-weight worker threads run scheduler loops that select parcels to be executed. Specifically, each worker thread in HPX-5 maintains a last-in-first-out (LIFO) queue of parcels, with the possibility of stealing the oldest parcels from other threads. The light-weight threads executing parcels can yield, and HPX-5 maintains a separate queue for yielded threads. Parcels can be sent to particular heavy-weight scheduler threads using mail queues. Newly generated parcels may be destined for remote localities, and HPX-5 provides a transparent network layer with a robust implementation based on Photon [13] and an implementation based on the MPI interface.

Every HPX-5 worker thread running the scheduler keeps spinning until it finds a parcel to execute or it is signaled to stop. The scheduler loop is outlined in Algorithm 1. The mailboxes are given the highest priority, followed by the yield queue, followed by the LIFO queue. Next, a plug-in scheduler, an extension we discuss in more detail in Sect. 4, gets a chance to execute. Finally, when the scheduler is unable to obtain work from thread-local sources, it first attempts to progress the network and then to steal work from other scheduler threads. It is important to note that executing work in any of the steps causes the loop to start from the beginning. So, for example, all mail tasks will be processed before any LIFO queue tasks, and no network progress will be performed before all work sources that come before it in the scheduler loop are exhausted. While this approach works well for some applications, it does not work well for algorithms like DC that depend on continuous feedback for efficient scheduling.
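The priority order described above can be modeled with a toy Python class. This is a sketch under stated assumptions rather than HPX-5 code: the class `Worker`, its attributes, and the returned source labels are hypothetical, network progress is modeled as moving buffered parcels into the LIFO queue, and work stealing is omitted.

```python
from collections import deque

class Worker:
    """Toy model of one scheduler thread; names are illustrative, not HPX-5 API."""

    def __init__(self, plugin=lambda: None):
        self.mailbox = deque()   # parcels mailed to this worker
        self.yielded = deque()   # light-weight threads that yielded
        self.lifo = []           # default parcel queue (last in, first out)
        self.plugin = plugin     # work produce function; returns None when it has no work
        self.network = deque()   # stand-in for parcels waiting in transport buffers

    def progress_network(self):
        """Move every buffered parcel into the LIFO queue; return how many moved."""
        moved = len(self.network)
        while self.network:
            self.lifo.append(self.network.popleft())
        return moved

    def step(self):
        """Run one pass of the loop and report which source supplied the work."""
        if self.mailbox:
            source, task = "mail", self.mailbox.popleft()
        elif self.yielded:
            source, task = "yield", self.yielded.popleft()
        elif self.lifo:
            source, task = "lifo", self.lifo.pop()
        else:
            task = self.plugin()
            if task is None:
                # Thread-local sources are exhausted: only now touch the network.
                # Work stealing would follow here and is omitted from this sketch.
                return "network" if self.progress_network() else "idle"
            source = "plugin"
        task()
        return source

w = Worker()
w.lifo.append(lambda: None)
w.mailbox.append(lambda: None)
w.network.append(lambda: None)
trace = [w.step() for _ in range(5)]
```

In this model the parcel sitting in `network` is not seen until the mailbox and LIFO queue are empty and the plug-in returns no work, which mirrors the delayed network progress discussed in the text.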

Our DC approach draws on concepts from optimistic parallelization and self-stabilization [3]. To achieve optimistic parallelism via asynchrony, DC eliminates global synchronization barriers. However, to reduce the amount of sub-optimal work, DC performs local ordering of work items. This necessitates runtime support for quick delivery of messages so that they can be ordered as soon as possible. When we implemented the DC-based SSSP algorithm in HPX-5 with the default HPX-5 scheduler, we made several observations about the interaction between the scheduler and the algorithm. Because the scheduler does not distinguish between runtime tasks and algorithmic work items, it indiscriminately puts both task parcels and work item parcels received over the network into the current worker’s LIFO queue. It then chooses a parcel to execute from the queue, if one is available, or goes through the steps in Algorithm 1 to find and schedule one. This mixing of tasks and work items can hurt the algorithm’s performance because, at the runtime level, there is a tradeoff at any given instant between executing tasks and executing work items. For instance, we have encountered situations where most of the work is stuck in the network buffers while the scheduler tries to execute parcels from the application-level priority queue. This leaves the algorithm with fewer work items to compare and choose from, and results in dwindling priority queues for local ordering in DC even though work items are available in the transport buffers.

Based on these observations, we posit that unordered algorithms can benefit in several ways from two changes: distinguishing runtime tasks from algorithmic work items by maintaining separate data structures for them, and providing a way to supply an algorithm-specific scheduling policy as a plug-in scheduler in the runtime scheduler. First, by separating these two kinds of work, the runtime has better control over when to schedule which kind. Second, the runtime can exploit the programmer’s knowledge about algorithmic work items. For example, the application programmer can provide an ordering policy for the work items (priority for parcels containing shorter distances). Third, we note that irregular graph algorithms are communication bound rather than computation intensive. If, at any particular time, the application level does not have enough work items to work on or compare with, it can voluntarily give up control to other scheduling mechanisms, such as network progress, to fetch more work from the underlying transport. Delaying network progress until work items are exhausted eliminates the chance of propagating better work from other localities. Such interleaved execution of runtime tasks, work items, and network progress can boost the performance of an unordered algorithm.

To alleviate these issues, we extend the default HPX-5 scheduler with a provision for the application-level programmer to incorporate a configurable plug-in scheduler. The application-level plug-in scheduler consists of three parts: a work consumer, a work producer, and work stealing. In the next section, we discuss a plug-in scheduler we designed for DC, and in Sect. 5 we evaluate its performance.

4 Distributed Control with Adaptive Frequency and Flow Control

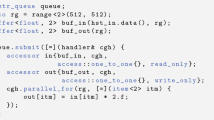

Algorithm 2 shows the pseudocode for the DC plug-in scheduler algorithm. The algorithm consists of three parts: the work produce function \(f_p\), which manages extraction of algorithmic work items from the local priority queue; the message handler, which receives tasks from other workers; and the relax function, which updates distances and generates new work. The basic task of \(f_p\) is to remove work items from the thread-level priority queue and return them to the runtime scheduler for execution, and the basic task of the relax function is to send updates to all neighbors of the vertex being relaxed. In this section, we discuss a plug-in scheduler implementation that goes beyond these basic tasks by employing flow control and adaptive frequency scheduling.

4.1 Flow Control

Local ordering in DC produces better work orderings when more work is available to order in thread-local priority queues. The runtime, however, needs to deliver messages across the network through multiple layers of implementation. This causes a tension between DC and the runtime: on the one hand, it is best to deliver the majority of work items into the DC priority queues, but, on the other hand, minimizing the number of work items that are in flight in the runtime comes at the cost of runtime overhead. We implement a flow control mechanism that allows DC to control the flow of network communication through the HPX-5 runtime using customizable parameters.

Work items are moved out from the network layers of HPX-5 when the scheduler loop in Algorithm 1 runs network progress (Line 10). The only way that control reaches Line 10 is when the work produce function returns a null work item (Line 8 in Algorithm 1). Our plug-in scheduler DC maintains an approximate measure of work items that have been sent over the network but not yet delivered. To maintain the approximation, we keep a locality-based global counter \( sync\_count \) of work items that have been sent with a request of remote completion notification. When this count grows over some threshold \( sync\_threshold \), \(f_p\) returns control back to the runtime (Line 3 of Algorithm 2).

In the Relax function, when the worker thread propagates an updated distance to the neighbors (Line 3), it checks how many asynchronous sends have been posted (Line 4). If the count has reached a particular threshold \( send\_threshold \), a send with continuation is performed and the \( sync\_count \) value is incremented to keep track of how many continuations are expected (Lines 8–9). When a call with continuation completes remotely, the continuation decrements the \( sync\_count \) value on the locality from which the original send call was made. At every send with continuation, the thread-local \( send\_count \) is reset to 0. The call with continuation is performed with the hpx_call_with_continuation HPX-5 function.

hpx_call_with_continuation takes an address addr (local or remote) and invokes the specified action action at that address. Once that action has finished executing, the continuation action c_action is invoked at the c_target address. Implementing flow control is straightforward with the semantics provided by the hpx_call_with_continuation interface, because the continuation is “fire and forget” and is handled automatically by the runtime.
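The counter logic of the flow control scheme can be sketched as a small Python class. This is a minimal model of the bookkeeping only, assuming the semantics described above: the class `FlowControl` and its method names are hypothetical, no HPX-5 calls are made, and the continuation is represented by an explicit call to `on_continuation`.

```python
class FlowControl:
    """Bookkeeping for send_count and sync_count; not tied to any runtime API."""

    def __init__(self, send_threshold, sync_threshold):
        self.send_threshold = send_threshold
        self.sync_threshold = sync_threshold
        self.send_count = 0   # thread-local in the scheme described above
        self.sync_count = 0   # locality-wide count of outstanding continuations

    def on_send(self):
        """Record one send; return True if it should carry a continuation."""
        self.send_count += 1
        if self.send_count >= self.send_threshold:
            self.send_count = 0        # reset at every send with continuation
            self.sync_count += 1       # one more continuation is expected
            return True
        return False

    def on_continuation(self):
        """Called when a remote completion notification arrives."""
        self.sync_count -= 1

    def should_yield(self):
        """True when the work produce function should return a null work item."""
        return self.sync_count > self.sync_threshold

fc = FlowControl(send_threshold=3, sync_threshold=1)
flags = [fc.on_send() for _ in range(6)]   # every third send carries a continuation
blocked = fc.should_yield()                # two continuations are outstanding
fc.on_continuation()
unblocked = not fc.should_yield()
```

When `should_yield` is true, the work produce function would hand control back to the scheduler so that network progress can run and the outstanding continuations can drain.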

4.2 Adapting Frequency of Network Progress

If the current locality keeps receiving messages and network progress keeps succeeding with an adequate number of work items received over the network, it is an indication that either the algorithm is in the middle of its execution phase or many messages are destined for the current locality. It is thus useful to keep retrieving messages from the network receive buffer and putting them into the priority queues at the algorithm level. In this way, when the algorithm gets a chance to progress, it has an ample number of work items in the priority queue to compare and choose from, which minimizes the possibility of executing sub-optimal work items.

To estimate whether network progression is succeeding, the algorithm checks the current priority queue size in the \(f_p\) function and compares it with the size seen the last time. A growing priority queue is an indication of successful network probing (Line 5). As mentioned earlier, it is better to fetch work items from the network more aggressively if network progression keeps returning many received messages. To achieve this, the algorithm maintains a thread-local counter freq. Whenever the queue size grows, the freq counter is decremented to indicate that fewer elements will be processed from the priority queue and that control will be given to the scheduler to progress the network more frequently (Line 6 in Work produce).
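A possible shape for this heuristic is sketched below in Python. Only the decrement on queue growth comes from the description above; everything else is an assumption made to obtain a complete example: the class name `AdaptiveFrequency`, the lower and upper bounds on `freq`, the increment applied when the queue does not grow, and the interpretation of `freq` as the number of work items handed out between yields.

```python
class AdaptiveFrequency:
    """Adjusts how many work items are handed out before yielding to the runtime."""

    def __init__(self, freq=8, min_freq=1, max_freq=64):
        self.freq = freq
        self.min_freq = min_freq      # assumed lower bound
        self.max_freq = max_freq      # assumed upper bound
        self.last_size = 0            # queue size seen at the previous check
        self.handed_out = 0           # items returned since the last yield

    def observe(self, queue_size):
        """Compare the queue size with the previous one and adapt freq."""
        if queue_size > self.last_size:
            # Growth suggests network progress is delivering work: yield sooner.
            self.freq = max(self.min_freq, self.freq - 1)
        else:
            # Assumption: back off when the queue is not growing.
            self.freq = min(self.max_freq, self.freq + 1)
        self.last_size = queue_size

    def should_yield(self):
        """Count one handed-out item; True when control should go to the runtime."""
        self.handed_out += 1
        if self.handed_out >= self.freq:
            self.handed_out = 0
            return True
        return False

af = AdaptiveFrequency(freq=3)
af.observe(10)   # queue grew, freq drops to 2
af.observe(20)   # queue grew again, freq drops to 1
af.observe(5)    # queue shrank, freq rises back to 2 (assumed rule)
```

The lower bound keeps the sketch from yielding before any work item is processed, which matters because progressing the network after every vertex would be too expensive.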

It is worth noting that progressing the network for every vertex processed is not a viable option, because network progress incurs much more overhead than processing a vertex. Although eager network progress can help reduce useless work by increasing the size of the priority queues, its overhead has a detrimental effect on algorithm performance.

5 Experimental Results

In this section, we evaluate several algorithms based on DC and compare their performance with the \(\varDelta \)-stepping algorithm. In the following discussion, algorithms without a plug-in scheduler carry the \( np \) subscript, algorithms that give up control to the runtime scheduler at a fixed frequency carry the \( ff \) subscript, algorithms with flow control carry the \( fc \) subscript, and algorithms with adaptive frequency for network progress carry the \( af \) subscript.

5.1 Experimental Setup

We conducted all our experiments on a Cray XC40 system. Each compute node on this system has 32 cores with a clock rate of 2.7 GHz and 64 GB of memory. For input, we used Graph500 graphs [9]. For each algorithm, we ran 4 problem instances and report the average execution time, with the standard deviation of the mean as the measure of uncertainty. We used \(\varDelta =1\) for the \(\varDelta \)-stepping algorithm. We chose the optimal number of threads for each algorithm. The graph is distributed across nodes in 1D fashion and represented with a distributed adjacency list data structure. We compiled our code with gcc 5.1 at optimization level -O3.

5.2 Comparison of \(\varDelta \)-Stepping and Five Versions of DC Algorithms

Figure 1 shows the execution time taken by different SSSP algorithms. DC, which uses the plug-in capability but has neither flow control nor the adaptive frequency heuristic, performs worse than \(DC_{np}\). Adding a fixed frequency heuristic for network progression helps \(DC_{ ff }\) perform comparably up to 8 compute nodes, but its performance deteriorates at larger scale. Although a fixed frequency heuristic is good enough at smaller scales, to achieve better scaling the algorithm needs to adjust network probing according to the work profile, which we do in \(DC_{ af }\). Compared to \(DC_{ ff }\), this heuristic worked better with the scale 24 graph input but did not perform well with the scale 25 input. In \(DC_{ ff , fc }\), we add flow control. The flow control mechanism helps \(DC_{ ff , fc }\) achieve performance almost identical to that of the \(\varDelta \)-stepping algorithm. Finally, \(DC_{ af , fc }\) performs the best. Flow control and adaptive frequency together let \(DC_{ af , fc }\) achieve better work ordering and a better balance between executing tasks and work items. Figure 1 also shows the work profiles for the different SSSP algorithms. Although \(\varDelta \)-stepping executes fewer work items, it takes longer. In contrast, \(DC_{ af , fc }\) executes more work because of sub-optimal work generation but still runs faster. This is because, with proper flow control and the adaptive frequency heuristic, \(DC_{ af , fc }\) can schedule work items efficiently and interleave runtime progress and work item execution appropriately.

5.3 Performance of \(DC_{ af , fc }\) with Various \(send\_threshold\) and \(sync\_threshold\) Values

Figure 2 illustrates how the performance of \(DC_{ af , fc }\) varies with different combinations of values for (\( send\_threshold \), \( sync\_threshold \)). The results are obtained on 64 nodes with scale 25 Graph500 input. As can be seen from the figure, a \( send\_threshold \) value of 10000 and a \( sync\_threshold \) value of 2 give the best performance for \(DC_{ af , fc }\). In this case, for every 10000 sent messages, we have issued 2 calls with continuation, which gives \(DC_{ af , fc }\) a better opportunity to progress asynchronously. During our experiments, a cursory search for good values of the (\( send\_threshold \), \( sync\_threshold \)) parameters resulted in the (200, 100) pair. Thus, we restricted our search space to the vicinity of 20000 messages and experimented with different combinations of (\( send\_threshold \), \( sync\_threshold \)) for generating 20000 messages. The execution time reaches a minimum with a \(send\_threshold\) of 10000 and a \(sync\_threshold\) of 2 and then starts increasing with larger (\( send\_threshold \), \( sync\_threshold \)) values. Although the total activity count keeps increasing, the right combination of (\( send\_threshold \), \( sync\_threshold \)) values helps to overcome the overhead of executing more work by scheduling work in a timely fashion, yielding better performance in general.
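One way to read the 20000-message budget, assuming it refers to the product of the two parameters (the text does not state this explicitly), is that both the initial pair and the best-performing pair satisfy

\[ send\_threshold \times sync\_threshold = 200 \times 100 = 10000 \times 2 = 20000. \]

Under this reading, the search keeps the product fixed and varies only how it is split between the number of sends per continuation and the number of outstanding continuations.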

6 Related Work

Nguyen and Pingali [19] have shown that the performance of algorithms for various irregular applications can improve significantly when the right scheduling policies are selected. They evaluated different synthesized schedulers for shared memory systems.

Distributed runtimes sometimes allow programmers to specify priorities. For example, Charm++ [12] lets users control the delivery order of messages by setting the queuing strategy (FIFO, LIFO) and provides two mechanisms for setting priorities (integer and bitvector) [1]. Another recent runtime, Grappa [18], maintains 4 queues: a ready worker queue, a deadline task queue, a private task queue, and a public task queue. The deadline task queue manages high priority system tasks. The Grappa scheduler allows threads to yield to tolerate communication latency and also has a provision for distributed work stealing. Although [18] mentions that programmers can direct scheduling explicitly, it is not clear how this can be done from the application level. UPC [4] provides a scheduling mechanism based on topology-aware hierarchical work stealing [16].

7 Conclusion

Unordered distributed graph algorithms enable better utilization of resources in HPC systems, but their performance is sensitive to the underlying runtime system. In this paper we have shown, using DC SSSP as an example, how to improve performance by extending the algorithm with a plug-in scheduler that bridges the application and the runtime system. The plug-in scheduler provides an algorithm-specific scheduling policy to the runtime scheduler, thus lifting some functionality of the lower levels of the stack to the algorithm level. A special provision for this feature needs to be made in the runtime system. We have implemented this in HPX-5 and have shown that the performance of DC varies with different heuristics. The plug-in scheduler is useful for improving performance.

References

1. Charm++ Documentation (2016). http://charm.cs.illinois.edu/manuals/html/charm++/10.html

2. Dijkstra, E.W.: A note on two problems in connexion with graphs. Numer. Math. 1(1), 269–271 (1959)

3. Dijkstra, E.W.: Self-stabilization in spite of distributed control. In: Dijkstra, E.W. (ed.) Selected Writings on Computing: A Personal Perspective, pp. 41–46. Springer, Heidelberg (1982)

4. El-Ghazawi, T., Carlson, W., Sterling, T., Yelick, K.: UPC: Distributed Shared-Memory Programming. Wiley, Hoboken (2003)

5. Firoz, J.S., Barnas, M., Zalewski, M., Lumsdaine, A.: The value of variance. In: 7th ACM/SPEC International Conference on Performance Engineering (ICPE). ACM (2016)

6. Firoz, J.S., Zalewski, M., Barnas, M., Kanewala, T.A., Lumsdaine, A.: Context matters: distributed graph algorithms and runtime systems. In: Platform for Advanced Scientific Computing (PASC) (2016)

7. Firoz, J.S., Kanewala, T.A., Zalewski, M., Barnas, M., Lumsdaine, A.: Importance of runtime considerations in performance engineering of large-scale distributed graph algorithms. In: Hunold, S., et al. (eds.) Euro-Par 2015. LNCS, vol. 9523, pp. 553–564. Springer, Cham (2015). doi:10.1007/978-3-319-27308-2_45

8. Gao, G., Sterling, T., Stevens, R., Hereld, M., Zhu, W.: ParalleX: a study of a new parallel computation model. In: International Parallel and Distributed Processing Symposium, pp. 1–6, March 2007

9. Graph500: Version 2 Specification (2016). https://github.com/graph500/graph500/tree/v2-spec

10. HPX-5 Runtime (2016). http://hpx.crest.iu.edu/

11. Iosup, A., Hegeman, T., Ngai, W.L., Heldens, S., Pérez, A.P., Manhardt, T., Chafi, H., Capota, M., Sundaram, N., Anderson, M., et al.: LDBC Graphalytics: A Benchmark for Large-scale Graph Analysis on Parallel and Distributed Platforms, a Technical Report (2016)

12. Kale, L.V., Krishnan, S.: Charm++: a portable concurrent object oriented system based on C++. In: Proceedings of the Eighth Annual Conference on Object-Oriented Programming Systems, Languages, and Applications, OOPSLA 1993, pp. 91–108. ACM, New York (1993)

13. Kissel, E., Swany, M.: Photon: remote memory access middleware for high-performance runtime systems. In: First Annual Workshop on Emerging Parallel and Distributed Runtime Systems and Middleware, IPDRM 2016 (2016)

14. Kulkarni, M., Pingali, K.: Scheduling issues in optimistic parallelization. In: IEEE International Parallel and Distributed Processing Symposium, IPDPS 2007, pp. 1–7. IEEE (2007)

15. Meyer, U., Sanders, P.: \(\varDelta \)-stepping: a parallelizable shortest path algorithm. J. Algorithms 49(1), 114–152 (2003)

16. Min, S.J., Iancu, C., Yelick, K.: Hierarchical work stealing on manycore clusters. In: Fifth Conference on PGAS Programming Models (2011)

17. Murphy, R.C., Wheeler, K.B., Barrett, B.W., Ang, J.A.: Introducing the graph 500 benchmark. Cray User’s Group (CUG) (2010)

18. Nelson, J., Holt, B., Myers, B., Briggs, P., Ceze, L., Kahan, S., Oskin, M.: Grappa: a latency-tolerant runtime for large-scale irregular applications. Technical Report UW-CSE-14-02-01, University of Washington (2014)

19. Nguyen, D., Pingali, K.: Synthesizing concurrent schedulers for irregular algorithms. In: Proceedings of the Sixteenth International Conference on Architectural Support for Programming Languages and Operating Systems, ASPLOS XVI, pp. 333–344. ACM, New York (2011)

20. Pingali, K., Nguyen, D., Kulkarni, M., Burtscher, M., Hassaan, M.A., Kaleem, R., Lee, T.H., Lenharth, A., Manevich, R., Méndez-Lojo, M., et al.: The tao of parallelism in algorithms. ACM SIGPLAN Not. 46(6), 12–25 (2011)

21. Zalewski, M., Kanewala, T.A., Firoz, J.S., Lumsdaine, A.: Distributed control: priority scheduling for single source shortest paths without synchronization. In: Proceedings of the Fourth Workshop on Irregular Applications: Architectures and Algorithms, pp. 17–24. IEEE (2014)

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Firoz, J.S., Zalewski, M., Barnas, M., Lumsdaine, A. (2017). Improving Performance of Distributed Graph Traversals via Application-Aware Plug-In Work Scheduler. In: Desprez, F., et al. Euro-Par 2016: Parallel Processing Workshops. Euro-Par 2016. Lecture Notes in Computer Science, vol 10104. Springer, Cham. https://doi.org/10.1007/978-3-319-58943-5_44

DOI: https://doi.org/10.1007/978-3-319-58943-5_44

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-58942-8

Online ISBN: 978-3-319-58943-5

eBook Packages: Computer Science, Computer Science (R0)