Abstract

A promising approach to improve the energy efficiency of HPC applications is to apply energy-saving techniques to different code regions according to their characteristics (blocking communication, load imbalance). Since most applications have many parallel code regions, this strategy requires extensive experimental time to find all the time-energy trade-offs for a given application. In this paper we use Design of Experiments (DoE) to (1) reduce the experimental time through a parsimonious evaluation of execution time and energy; and (2) define the Pareto front with all interesting time-energy trade-offs. We report the use of our methodology for seven benchmarks, each with interesting Pareto fronts with distinct shapes. For the MiniFE benchmark, with 25 parallel regions, we detect configurations that reduce energy by 9.27% with a non-significant penalty in runtime when compared with using the high frequency for all regions; for the Graph500 benchmark, with 17 parallel regions, we obtain a 7.0% reduction in execution time with a 2.4% increase in energy consumption when compared with running all regions at the lowest frequency.

1 Introduction

Performance has historically overshadowed energy efficiency in the HPC field. This scenario is changing, and initiatives focusing on energy efficiency, like the Green500 list [24], have gained importance. The current leader of the Green500 offers only 7.0 GFLOPS per watt. For an exascale supercomputer limited to 20 MW, the efficiency would have to be at least 50 GFLOPS per watt. Improvements must be made on both the hardware and software sides to make this leap in energy efficiency. Strategies for energy reduction in parallel applications are a step forward in addressing the problem from the software side.
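The 50 GFLOPS per watt target follows directly from dividing the exascale rate (\(10^{18}\) floating-point operations per second) by the 20 MW power budget:

\[
\frac{10^{18}\ \text{FLOPS}}{20 \times 10^{6}\ \text{W}} = 5 \times 10^{10}\ \text{FLOPS/W} = 50\ \text{GFLOPS/W}
\]

Compared with the 7.0 GFLOPS per watt quoted above, this is roughly a sevenfold improvement (\(50 / 7.0 \approx 7.1\)).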

Software energy reduction strategies can be divided into two groups: inter-node [2], acting at the system level; and intra-node, where code regions are subject to power manipulation. Usually, application idle states trigger these strategies. Opportunities appear during load imbalances [19], blocking communication phases [16, 21], inter-node communication [15], MPI operations [26], and wait states [13]. Correlating power consumption to source code has also been explored [6]. Dynamic Voltage and Frequency Scaling (DVFS) [9] is frequently used: code regions are executed at different processor frequencies, targeting energy savings with minimal or no performance loss [5].

HPC applications commonly have many parallel regions subject to frequency scaling. For example, Graph500 [18] has 17 parallel regions; MiniFE [7] has 25. Large HPC codes may have hundreds, depending on the application complexity and code size. It is unrealistic to evaluate all time-energy trade-offs considering several processor frequencies: the number of frequency combinations grows exponentially with the number of regions, and replications are necessary to account for variability. Others [5, 14] have adopted similar per-region strategies, but they use simple experimental designs meant to find a single per-region frequency combination rather than all time-energy trade-offs (details in Sect. 2).
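To give a sense of scale, the following minimal sketch counts the executions an exhaustive search would need. The region counts come from the text; the function name and the 10 seconds per execution are hypothetical values chosen only for illustration.

```python
def exhaustive_runs(regions: int, levels: int, replications: int = 1) -> int:
    """Executions needed to try every per-region frequency combination."""
    return levels ** regions * replications

# Region counts come from the text; two levels is the smallest non-trivial case.
graph500_runs = exhaustive_runs(regions=17, levels=2)
minife_runs = exhaustive_runs(regions=25, levels=2)

# Hypothetical figure for illustration only: 10 seconds per execution.
SECONDS_PER_RUN = 10
graph500_days = graph500_runs * SECONDS_PER_RUN / 86400

print(graph500_runs, minife_runs, round(graph500_days, 1))
```

Even with only two frequencies and no replication, Graph500 would need \(2^{17} = 131072\) executions and MiniFE \(2^{25} = 33554432\).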

The objective of this work is to discover all the time-energy trade-offs when adopting per-region processor frequency scaling. We tackle the explosion in experimental time with a workflow based on Design of Experiments (DoE) techniques [27], such as screening and full factorial designs, ANOVA, and main effects plots [17]. Final results are analyzed with a customized bivariate Pareto front plot that shows experimental variability. As far as we know, this is the first time such a combined framework is used to evaluate energy savings in HPC.

We report the use of our methodology for seven OpenMP benchmarks with many parallel code regions, each with interesting Pareto fronts with distinct shapes. For the MiniFE benchmark, with 25 parallel regions, we detect configurations that reduce energy by 9.27% with a non-significant penalty in runtime when compared with using the high frequency for all regions; for the Graph500 benchmark, with 17 parallel regions, we obtain a 7.0% reduction in execution time with a 2.4% increase in energy consumption when compared with running all regions at the lowest frequency.

Section 2 positions our work against related work. Section 3 presents basic concepts of DoE. Section 4 details our methodology. Section 5 presents the evaluation of seven benchmarks. Conclusions and future work appear in Sect. 6. The source code of this paper, including all data that has been used, is publicly available as an org file at https://github.com/lfgmillani/reppar2016/.

2 Related Work

There has been a lot of effort to save energy with minimal performance loss in HPC systems. We focus on application-aware strategies that consider code regions. Freeh and Lowenthal [5] propose per-phase frequency scaling in HPC applications. They define the best processor frequency combination by testing all possible frequencies one by one, sequentially and in order. This is a one-phase-at-a-time design, whose experimental time grows linearly with the number of phases and processor frequencies. Our approach differs in two main aspects. First, while they vary one factor at a time, our approach combines screening, which rapidly discovers the regions affecting the outcomes, with full factorial designs, which detect all time-energy trade-offs. One-factor-at-a-time designs capture only a small subset of such trade-offs. Second, while Freeh and Lowenthal evaluate all available frequencies, we are limited to two, because statistical data analysis lacks established tools to analyze measurements with three or more levels per factor (see Sect. 3 for details).

Laurenzano et al. [14] also propose a fine-grained approach to define the best per-loop processor frequency. They generate a series of loops configured with different CPU and memory behavior. In a system characterization step, each loop configuration is evaluated against all possible processor frequencies, ultimately defining which frequency is the best. Each loop of the real HPC application is then profiled for cache hit rates, flops, and number of memory accesses, forming a loop signature. The frequency is determined by searching the system characterization data for the point closest to the loop signature. Our methodology differs because it works directly with the application code in our screening warm-up step. Our methodology also enables the discovery of all time-energy trade-offs that belong to the Pareto front, instead of searching for a single best combination as they do. Laurenzano’s approach has been extended by Tiwari et al. [25] with Green Queue, using eight dimensions for frequency selection. Peraza et al. [20] combine power models and performance measurements, using a method that requires only one application run per frequency configuration. Such a technique makes it impossible to detect correlations between frequency configurations on different parts of the program, something we address in our method by using a full factorial design.

There are other approaches: runtime systems [21] that detect frequencies for code regions giving a good balance between performance and energy; approaches that use profile-based information [8] to find the best frequencies; and analytical [13] and prediction models [6]. Dick et al. [3] also conduct preparatory measurements with all available frequencies on a numerical simulation code to deduce the best frequencies on a per-routine basis.

3 Background on Design of Experiments

In Design of Experiments (DoE), factors are variables that can affect the outcome, such as the compiler used, the CPU architecture, the number of cores, etc. They can be quantitative or qualitative. We present a background of DoE concepts, essential for a good understanding of our experimental methodology.

Full Factorial Designs. The full factorial experimental design keeps the effects of factors orthogonal [17] when the level distribution is balanced. Orthogonality is important when analyzing experimental results, as it allows the effect of each factor to be estimated independently. With \(n\) factors, a two-level full factorial design requires \(2^n\) experiments. Since the experimental size grows exponentially with the number of factors, its adoption is infeasible with many factors. Full factorial designs enable the detection of interactions among factors. An interaction means that simultaneous changes in multiple factors have combined effects on the measured outcome, so that a factor’s effect on the outcome depends on the level of another factor. As far as we know, factor interactions have not been explored in related work (see Sect. 2), which is one of the advantages of our approach. Full factorial designs can also be generated for more levels. With \(l\) levels and \(n\) factors, this kind of design requires \(l^n\) experiments. Although possible, the use of \(l>2\) is rare in statistics since there is no rigorous statistical analysis available as of today. For that reason, we limit our methodology to two-level full factorial designs, forcing the analyst to choose two frequencies out of those available. Main effects plots can be used to analyze results obtained from factorial designs. They quantify how much each factor affects the response. The main effect of a factor is the difference between the mean responses at its two levels [1].
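As an illustration of these definitions, the sketch below enumerates a two-level full factorial design with coded levels (−1 for low, +1 for high) and estimates main and interaction effects as differences of means. The function names and the synthetic response are hypothetical; they are not taken from the paper's code.

```python
from itertools import product
from math import prod

def full_factorial(n_factors):
    """All 2**n combinations of coded levels: -1 (low) and +1 (high)."""
    return list(product((-1, 1), repeat=n_factors))

def effect(design, responses, columns):
    """Mean response where the product of the chosen columns is +1,
    minus the mean where it is -1. One column gives a main effect;
    several columns give an interaction effect."""
    high = [y for run, y in zip(design, responses)
            if prod(run[c] for c in columns) == 1]
    low = [y for run, y in zip(design, responses)
           if prod(run[c] for c in columns) == -1]
    return sum(high) / len(high) - sum(low) / len(low)

design = full_factorial(3)

# Synthetic response (an assumption for illustration): factor a matters on its
# own, while b and c only matter through their interaction.
responses = [10 + 2 * a + 1.5 * b * c for a, b, c in design]

main_a = effect(design, responses, [0])
main_b = effect(design, responses, [1])
interaction_bc = effect(design, responses, [1, 2])
print(len(design), main_a, main_b, interaction_bc)
```

In this synthetic case the main effect of b is zero, yet the b-c interaction is not. A one-factor-at-a-time design would miss that effect, whereas the full factorial design exposes it.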

Fractional and Screening Designs. The sparsity of effects principle asserts that a system is usually dominated by main effects and low-order interactions [17]. As such, identifying the factors responsible for the majority of the effect being measured does not require expensive \(2^n\) full factorial designs. This principle does not hold when there are complex interactions between the factors. Fractional factorial and screening designs require less experimental effort than full factorial designs and still give a good exploration of the configuration space. These designs can be used to screen which factors have the largest effect. While common in some sciences due to the high cost of each experiment, fractional factorial designs are not often used in parallel computing, where the preference is for one-factor-at-a-time designs or, in rare cases, full factorial designs. Fractional designs can be extremely useful when the full factorial design requires many experiments, as they can reduce experimental time. Even for a low number of factors, the number of experiments can be considerably reduced. These designs have \(2^{k-p}\) runs, where \(k\) is the number of factors and \(p\) is used to limit the experiment size, at the price of losing complex relations as \(p\) grows. Plackett-Burman (PB) designs are a kind of fractional design that is mostly used for screening [23]. The number of runs of a Plackett-Burman design is always a multiple of four. A PB design is identical to a fractional design if and only if its number of runs is a power of two. When it is not, the PB design is non-geometric. This kind of design has more complex aliasing patterns, making the analysis of interactions between factors more difficult. When there are only minor interactions, non-geometric designs can save experimental time. Screening designs with more than two levels are still an open research question in statistics. Three-level screening exists [12], but only for quantitative levels, which can be the case for processor frequency.
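To make the Plackett-Burman construction concrete, the sketch below builds the classic 12-run design for up to 11 two-level factors from cyclic shifts of its generator row plus one all-low run, then checks that the columns are balanced and pairwise orthogonal. The helper names are hypothetical. The run-count helper is only a lower bound and assumes that a design of that size is available.

```python
# Generator row of the 12-run Plackett-Burman design (coded -1 = low, +1 = high).
PB12_GENERATOR = [1, 1, -1, 1, 1, 1, -1, -1, -1, 1, -1]

def plackett_burman_12():
    """11 cyclic shifts of the generator row plus one run with every factor low."""
    k = len(PB12_GENERATOR)
    rows = [PB12_GENERATOR[-s:] + PB12_GENERATOR[:-s] for s in range(k)]
    rows.append([-1] * k)
    return rows

def is_balanced_and_orthogonal(design):
    """Each column has as many highs as lows, and every pair of columns
    has a zero dot product."""
    cols = list(zip(*design))
    balanced = all(sum(col) == 0 for col in cols)
    orthogonal = all(
        sum(a * b for a, b in zip(cols[i], cols[j])) == 0
        for i in range(len(cols))
        for j in range(i + 1, len(cols))
    )
    return balanced and orthogonal

def min_pb_runs(n_factors):
    """Smallest multiple of four that leaves room for n_factors columns
    (a design with N runs accommodates at most N - 1 factors)."""
    return 4 * ((n_factors + 4) // 4)

design = plackett_burman_12()
print(len(design), is_balanced_and_orthogonal(design))
print(min_pb_runs(17), min_pb_runs(25))
```

Under this bound, screening 17 regions needs at least 20 runs per replication and 25 regions at least 28, compared with \(2^{17}\) and \(2^{25}\) for the corresponding full factorial designs.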

4 DoE-Based Methodology to Find Time-Energy Trade-Offs

The objective of our DoE-based methodology is to find all interesting points on the Pareto front, where the energy-performance trade-off leans towards the usual HPC goal of minimal performance loss. Figure 1 gives an overview of the methodology, which is detailed in the next subsections. It starts with the screening phase (on the left), where the impact of the initial parallel code regions (from A to F) on the outcome is quickly measured. Results are evaluated with a combination of ANOVA and main effects analysis, both provided by most statistical tools. The objective is to detect which code regions significantly affect the outcome. Those which do (B, E, and F in the example) are used in the full factorial phase (right). There, all time-energy trade-offs are discovered using full factorial designs, allowing the detection of interactions among code regions. We employ Pareto plots and ANOVA to analyze the full factorial results.

4.1 Screening Parallel Code Regions, ANOVA and Main Effects Plot

The screening experimental phase uses a two-level Plackett-Burman design, attempting to identify parallel code regions that affect energy consumption and execution time. For simplicity, the parallel code regions comprise OpenMP’s parallel code blocks. These code regions are the factors, while the levels are the possible frequencies. The screening phase uses only two frequencies – low and high – out of those available in current processors. It is up to the performance analyst to define values to be considered as low and high frequencies.

Analysis of variance (ANOVA) and main effects plots [17] are used to analyze the measurements. While ANOVA gives a confidence level for which code regions affect the outcome when the frequency changes, it does not tell the magnitude or direction of such a change. Main effects plots are complementary because they cover exactly these points, allowing us to rank regions by how much they affect the outcome when the processor frequency is changed. For the second phase we select only regions that are significant according to ANOVA and whose effect is large relative to that of the other code regions.
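The two analyses can be sketched together for a balanced, orthogonal two-level design under a main-effects-only model. The code below is a simplified illustration, not the statistical tooling used in the paper. The design (a replicated half fraction for three regions) and the energy values are hypothetical.

```python
def screening_analysis(design, responses):
    """Main effect and ANOVA F statistic per factor, assuming a balanced,
    orthogonal design coded -1/+1 and a model with main effects only."""
    n, k = len(responses), len(design[0])
    grand_mean = sum(responses) / n
    ss_total = sum((y - grand_mean) ** 2 for y in responses)
    # Contrast of factor j: sum of responses at high minus sum at low.
    contrasts = [sum(run[j] * y for run, y in zip(design, responses))
                 for j in range(k)]
    ss_factors = [c * c / n for c in contrasts]   # one degree of freedom each
    df_residual = n - 1 - k
    ms_residual = (ss_total - sum(ss_factors)) / df_residual
    return [
        {"effect": 2 * c / n, "F": ss / ms_residual}
        for c, ss in zip(contrasts, ss_factors)
    ]

# Hypothetical screening data: regions A, B, C, four runs replicated twice.
half_fraction = [(-1, -1, 1), (1, -1, -1), (-1, 1, -1), (1, 1, 1)]
design = half_fraction * 2
energy = [95.2, 105.1, 94.9, 104.8, 94.8, 104.9, 95.1, 105.2]

results = screening_analysis(design, energy)
ranking = sorted(zip("ABC", results),
                 key=lambda item: abs(item[1]["effect"]), reverse=True)
for name, stats in ranking:
    print(name, round(stats["effect"], 2), round(stats["F"], 2))
```

The F statistic plays the role of the ANOVA significance test, and the signed effect gives the magnitude and direction shown in a main effects plot. Turning F into a p-value requires the F distribution with 1 and `df_residual` degrees of freedom, for instance `scipy.stats.f.sf` if SciPy is available.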

4.2 Full Factorial Design, ANOVA and Pareto Analysis

This phase considers only regions that truly affect the outcome, according to screening. The objective is to search for parallel code regions for which the processor frequency could be reduced without too much performance penalty; or regions whose execution time is not too negatively affected while offering high energy savings. We also look for parallel code region interactions when scaling frequency. Measurement variability is addressed through experimental replication, obtaining significance levels through ANOVA.

Results of this final phase are analyzed through ANOVA tests and Pareto plots. They are complementary because ANOVA tests enable a quick verification of effect interactions among parallel code regions. Average energy consumption and execution time are represented using customized Pareto plots, where each point is the result of a frequency combination for code regions. Confidence regions [11] in the time-energy space are shown around average points, quantifying experimental variability. We also define the Pareto front [4] by connecting the best time-energy trade-offs (see Figs. 3 and 4).
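Extracting the Pareto front from the averaged measurements can be sketched as follows, assuming that lower is better for both execution time and energy. The labels and numbers are hypothetical. The sketch works on average points only and ignores the confidence regions discussed above.

```python
def pareto_front(points):
    """Labels of the configurations that no other configuration dominates.
    `points` maps a label to (time, energy); lower is better for both."""
    front = []
    for label, (t, e) in points.items():
        dominated = any(
            t2 <= t and e2 <= e and (t2 < t or e2 < e)
            for other, (t2, e2) in points.items()
            if other != label
        )
        if not dominated:
            front.append(label)
    # Order the front by increasing execution time so it can be drawn as a line.
    return sorted(front, key=lambda lab: points[lab])

# Hypothetical averages: (execution time in seconds, energy in joules).
averages = {
    "HIGH": (10.0, 500.0),
    "LOW": (14.0, 430.0),
    "+-": (10.5, 460.0),
    "-+": (13.0, 480.0),
}
front = pareto_front(averages)
print(front)
```

Here the configuration `-+` is dropped because `+-` is both faster and cheaper in energy; the remaining three points form the front.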

5 Experimental Evaluation

We evaluate seven OpenMP-based benchmarks using our DoE methodology. Energy consumption is measured for the whole execution time, while we use DVFS [9] to control processor frequency. Parallel code regions (factors), identified through letters, are marked manually for evaluation. This process could be automated during compilation, for instance by defining a new region for each parallel task or loop. Our experiments use one node, although the methodology could be extended for use in a heterogeneous cluster. In that case, different regions could be used to differentiate between CPU and accelerator code for the same task. For each code region, we verify how the low and high frequency (levels) affect two outcomes: energy consumption and execution time. We present our benchmarks and the experimental platform below; a full analysis of Graph500; and global results of the six remaining benchmarks.

Case studies: Table 1 lists the OpenMP benchmarks with the number of regions, and the low and high processor frequency for each of them. BFS and Delaunay belong to PBBS [22], while the Graph500 [18] is a benchmark on its own. MiniFE, HPCCG, CoMD, and Pathfinder are part of the Mantevo [7] suite.

Experimental Platform: Experiments are executed on orion, a machine of the GPPD Team of INF/UFRGS. This machine has two Intel Xeon E5-2630 Sandy Bridge processors, with 24 cores (12 physical) and 32 GB of memory. The processor has twelve clock frequencies, from 1.2 to 2.3 GHz. Benchmarks are compiled with GCC 5.1.1, using the -O3 optimization flag. The energy consumption of the package and memory is measured through Intel’s RAPL counters [10].
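The paper does not describe how the RAPL counters are read. One common option on Linux, shown here purely as an assumption, is the powercap sysfs interface, which exposes a cumulative energy counter in microjoules per zone. The zone path and helper names below are hypothetical choices for illustration; a two-socket machine would expose more than one package zone, and the memory (DRAM) domain usually appears as a separate sub-zone.

```python
from pathlib import Path

# Assumed zone for CPU package 0; the actual layout depends on the machine.
RAPL_ZONE = Path("/sys/class/powercap/intel-rapl:0")

def read_energy_uj(zone: Path = RAPL_ZONE) -> int:
    """Current value of the cumulative energy counter, in microjoules."""
    return int((zone / "energy_uj").read_text())

def read_max_range_uj(zone: Path = RAPL_ZONE) -> int:
    """Value at which the energy counter wraps around, in microjoules."""
    return int((zone / "max_energy_range_uj").read_text())

def energy_delta_uj(before: int, after: int, max_range: int) -> int:
    """Energy consumed between two readings, allowing for at most one
    counter wrap-around between them."""
    if after >= before:
        return after - before
    return after + (max_range - before)

# Usage sketch (requires read access to the sysfs files):
#   start = read_energy_uj()
#   ... run the benchmark ...
#   joules = energy_delta_uj(start, read_energy_uj(), read_max_range_uj()) / 1e6
```

Measuring the whole execution, as done in the experiments, keeps the number of readings small. Very long runs would need periodic sampling so that no more than one wrap-around occurs between readings.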

5.1 Full Analysis of Graph500 Benchmark

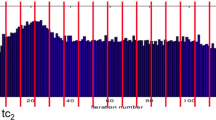

Seventeen parallel code regions (from A to Q) of the Graph500 benchmark have been manually instrumented. Table 2 shows the ANOVA results of each factor’s impact on energy (left) and time (right). The number of stars at the end of each line indicates the significance of each factor’s impact. For example, the three stars of region J indicate that a low to high frequency change has a 99.9% chance of impacting both energy and execution time. Scaling frequency on regions J and L has a 99.9% chance of affecting energy consumption, while on regions E, I and J the impact is on execution time.

Figure 2 shows the main effects plots for energy (top) and execution time (bottom). They show the magnitude of the effect when one factor changes its level from low to high. For example, region J increases energy consumption when it goes from low to high, while reducing execution time. We conclude that regions J, L and E have a non-negligible impact on energy when we upscale the processor frequency. The remaining regions could be kept at the highest processor frequency since downscaling them has no effect on energy. We observe that regions I, J and E have a smaller execution time when upscaling frequency. The remaining regions make no significant difference to execution time, at a 99% confidence level. A promising code region to act upon is I, where the main effects plot shows that upscaling brings a significant execution time reduction with only a minor increase in energy consumption, compared to the rest. Regions E, I, J and L are the only regions whose scaling affects energy consumption or execution time at a 99% confidence level (two stars). These regions were selected for the full factorial phase.

Table 3 shows the ANOVA for the full factorial experiments. Regions E, I, J and L affect execution time and energy consumption at a 99.9% confidence level. Figure 3 presents the Pareto plot showing the correlation between energy savings (on the Y axis) and execution time (on the X axis). The blue line represents the Pareto front, connecting the best time-energy trade-offs. Each point is the average of 50 executions. An ellipse around each point represents the confidence region in the bivariate space at a 99% confidence level. The HIGH and LOW labels indicate the points where all regions run at the high and low frequency, respectively. Pareto points are labeled with the corresponding high (+) and low (−) frequency configuration for the E, I, J and L parallel regions, in that order.

The Pareto front is composed of seven points: LOW, HIGH, and five region-based trade-offs. Table 4 details the region-based points against HIGH (at the left) and LOW (right). Most of the region-based points in the Pareto front, when compared to HIGH, offer more energy reduction than performance loss. The only exception is the configuration \(\texttt {(+ - - -)}\), whose execution time loss against HIGH is larger than its gain in energy consumption. Compared to LOW, the most interesting result comes from configuration \(\texttt {(+ + - -)}\): setting only regions E and I to the high frequency gives an execution time reduction of 7.0% while increasing energy consumption by 2.4%. The remaining comparisons against LOW show that runtime gains are always greater than the increase in energy. The next section shows the Pareto front results of the six other benchmarks.

5.2 Global Results Considering Remaining Benchmarks

Figure 4 depicts the detailed Pareto plots for the other benchmarks. Each one shows distinct trade-offs, detailed as follows.

BFS. The Pareto front is composed of two region-based points: \(\texttt {{-}{+}{+}}\) and \(\texttt {+{-}{-}}\). The remaining region-based points, as well as HIGH and LOW, are grouped around these two points, with no significant difference. Our methodology therefore fails to detect important trade-offs between energy and execution time for this benchmark. It discovers, however, an anomaly: two points lie above the LOW group but far from the Pareto front. These configurations, which should be avoided, provide no performance gains and have a higher energy cost.

Delaunay. Only two parallel regions were considered relevant for the full factorial. Results are similar to BFS: the two region-based points are very similar to the LOW and HIGH points, considering experimental variability. There is a significant difference in energy consumption when moving from LOW to \(\texttt {{+}{-}}\).

MiniFE. Five parallel regions were considered for MiniFE. As the Pareto plot shows, four groups of region-based points are formed: two of them around the LOW and HIGH points, and two that present other energy-performance trade-offs. Combinations below the HIGH group have interesting results. The Pareto point \(\texttt {{+}{-}{+}{+}{+}}\), for instance, reduces energy by 9.27% with a minor penalty in execution time when compared to HIGH. Another region-based point, with combination \(\texttt {{+}{+}{+}{-}{+}}\) in the HIGH group, provides a potential reduction both in time (1.64%) and in energy (1.63%), but results are unclear since its confidence region overlaps that of the HIGH point. For this benchmark, fixing all regions at the lowest frequency would bring insufficient energy benefits while causing a large slowdown. In this case, our methodology clearly captures the new trade-off.

HPCCG. The screening phase has detected only two parallel regions for this benchmark. Results show that the combination \(\texttt {{-}{+}}\) offers an energy reduction of 21.35% with a non-significant execution time penalty of only 2.04% when compared to the HIGH point. The other region-based point, \(\texttt {{+}{-}}\), shows an energy reduction of 4.21% with a 1.36% penalty in time, also non-significant. These values are based on 50 replications for each combination, and indicate a small variability in energy but a large one in execution time, probably due to the short execution time.

CoMD. Experiments with CoMD, with two parallel code regions, showed a high variability after 50 replications. The energy scale is very small, indicating that any change in frequency causes minor energy gains but large performance penalties. The region-based point \(\texttt {{+}{-}}\) offers a 1.97% energy reduction with a 4.1% slowdown when compared to HIGH. We conclude that this benchmark is unsuited to energy gains with minor performance losses.

Pathfinder. Only one region has been selected for the full factorial tests, probably indicating that this region is the compute-bound part of the benchmark. The HIGH point dominates the LOW point, forming a simple Pareto front. Running the parallel code region at the LOW frequency causes a 40% slowdown for the application with no significant gains in energy reduction.

6 Conclusion

We propose a workflow based on Design of Experiments to evaluate the time-energy trade-offs when per-region frequency scaling is adopted in HPC applications. Our approach consists of two phases: a screening phase using ANOVA and main effects plots to identify which regions deserve further investigation; and a detailed phase using full factorial designs along with ANOVA and Pareto plots for measurement analysis. We evaluated our strategy with seven OpenMP benchmarks: BFS, Delaunay, Graph500, MiniFE, HPCCG, CoMD and Pathfinder. Our DoE-based methodology enables the discovery of different time-energy trade-offs: for MiniFE, we have found region-based frequency configurations that enable a 9.27% improvement in energy with no significant change in runtime; and for Graph500, a time reduction of 7.0% with an increase of 2.4% in energy consumption, when compared with using the lowest frequency for all regions. In the other benchmarks, per-region frequency scaling resulted in little to no energy improvement when compared against using only one frequency for all regions. Another interesting result is that measurement variability makes the limits of the Pareto front unclear. The distinct Pareto front shapes might help to better understand the impact of region-based frequency scaling for each HPC application. Planned future work includes a full factorial analysis when all processor frequencies are considered. We also plan to improve the interpretation of the screening by including the time taken to execute each parallel code region.

References

[1] Box, G.E., Hunter, J.S., Hunter, W.G.: Statistics for experimenters: design, innovation, and discovery. AMC 10, 12 (2005)

[2] Cicotti, P., Tiwari, A., Carrington, L.: Efficient speed (ES): Adaptive DVFS and clock modulation for energy efficiency. In: International Conference on Cluster Computing, pp. 158–166 (2014)

[3] Dick, B., Vogel, A., Khabi, D., Rupp, M., Küster, U., Wittum, G.: Utilization of empirically determined energy-optimal CPU-frequencies in a numerical simulation code. Comput. Vis. Sci. 17(2), 89–97 (2015)

[4] Ehrgott, M.: Multicriteria Optimization. LNEMS. Springer, Heidelberg (2000)

[5] Freeh, V.W., Lowenthal, D.K.: Using multiple energy gears in MPI programs on a power-scalable cluster. In: Symposium on Principles and Practice of Parallel Programming. ACM (2005)

[6] Ge, R., Feng, X., Song, S., Chang, H.C., Li, D., Cameron, K.: Powerpack: energy profiling and analysis of high-performance systems and applications. IEEE Trans. Parallel Distrib. Syst. 21(5), 658–671 (2010)

[7] Heroux, M.A., Doerfler, D.W., Crozier, P.S., Willenbring, J.M., Edwards, H.C., Williams, A., Rajan, M., Keiter, E.R., Thornquist, H.K., Numrich, R.W.: Improving performance via mini-applications. Technical report, SAND2009-5574, Sandia (2009)

[8] Hotta, Y., Sato, M., Kimura, H., Matsuoka, S., Boku, T., Takahashi, D.: Profile-based Optimization of power performance by using dynamic voltage scaling on a PC cluster. In: IPDPS (2006)

[9] Hsu, C.H., Feng, W.: A feasibility analysis of power awareness in commodity-based high-performance clusters. In: Cluster Computing, pp. 1–10. IEEE (2005)

[10] Intel: Intel 64 and IA-32 Architectures Software Developer’s Manual - Volume 3B. Intel Corporation, September 201

[11] Johnson, R.A., Wichern, D.W. (eds.): Applied Multivariate Statistical Analysis. Prentice-Hall Inc., Upper Saddle River (1988)

[12] Jones, B., Nachtsheim, C.J.: A class of three-level designs for definitive screening in the presence of second-order effects. Qual. Technol. 43(1), 1–15 (2011)

[13] Kerbyson, D., Vishnu, A., Barker, K.: Energy templates: exploiting application information to save energy. In: IEEE International Conference on Cluster Computing, pp. 25–233 (2011)

[14] Laurenzano, M.A., Meswani, M., Carrington, L., Snavely, A., Tikir, M.M., Poole, S.: Reducing energy usage with memory and computation-aware dynamic frequency scaling. In: Jeannot, E., Namyst, R., Roman, J. (eds.) Euro-Par 2011. LNCS, vol. 6852, pp. 79–90. Springer, Heidelberg (2011). doi:10.1007/978-3-642-23400-2_9

[15] Lim, M.Y., Freeh, V.W., Lowenthal, D.K.: Adaptive, transparent CPU scaling algorithms leveraging inter-node MPI communication regions. Parallel Comput. 7(10–11), 667–683 (2011)

[16] Lim, M., Freeh, V.W., Lowenthal, D.: Adaptive, transparent frequency and voltage scaling of communication phases in MPI programs. In: Supercomputing, p. 14 (2006)

[17] Montgomery, D.C.: Design and Analysis of Experiments. Wiley, Hoboken (2008)

[18] Murphy, R.C., Wheeler, K.B., Barrett, B.W., Ang, J.A.: Introducing the graph 500. Cray User’s Group (CUG) (2010)

[19] Padoin, E., Castro, M., Pilla, L., Navaux, P., Mehaut, J.F.: Saving energy by exploiting residual imbalances on iterative applications. In: International Conference on HPC (2014)

[20] Peraza, J., Tiwari, A., Laurenzano, M., Carrington, L., Snavely, A.: PMaC’s green queue: a framework for selecting energy optimal DVFS configurations in large scale MPI applications. Concur. Comput.: Pract. Exp. 28(2), 211–231 (2013)

[21] Rountree, B., Lowenthal, D.K., de Supinski, B.R., Schulz, M., Freeh, V.W., Bletsch, T.: Adagio: making DVS practical for complex HPC applications. In: Proceedings of the 23rd International Conference on Supercomputing, pp. 460–469. ACM (2009)

[22] Shun, J., Blelloch, G.E., Fineman, J.T., Gibbons, P.B., Kyrola, A., Simhadri, H.V., Tangwongsan, K.: Brief announcement: the problem based benchmark suite. In: 24th Annual ACM Symposium on Parallelism Algorithms and Architectures, pp. 68–70. ACM, New York (2012)

[23] Simpson, T., Poplinski, J., Koch, P.N., Allen, J.: Metamodels for computer-based engineering design: survey and recomm. Eng. Comput. 17(2), 129–150 (2001)

[24] Feng, W.C., Cameron, K.: The Green500 list: encouraging sustainable supercomputing. Computer 40(12), 50–55 (2007). doi:10.1109/MC.2007.445. ISSN 0018-9162

[25] Tiwari, A., Laurenzano, M., Peraza, J., Carrington, L., Snavely, A.: Green queue: customized large-scale clock frequency scaling. In: International Conference on Cloud and Green Computing, pp. 260–267, November 2012

[26] Venkatesh, A., Vishnu, A., Hamidouche, K., Tallent, N., Panda, D.D., Kerbyson, D., Hoisie, A.: A case for application-oblivious energy-efficient MPI runtime. In: International Conference for High Performance Computing, Networking, Storage and Analysis, NY, USA, pp. 29:1–29:12 (2015)

[27] Wu, C., Hamada, M., Wu, C.: Experiments: Planning, Analysis, and Parameter Design Optimization. Wiley, New York (2000)

Acknowledgements

We thank CAPES and CNPq for partially funding this work. In addition, we thank Arnaud Legrand for his ideas on design of experiments that inspired us to develop the work of this paper, and for his series of lectures on Scientific Methodology and Performance Evaluation (SMPE).

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Millani, L.F., Mello Schnorr, L. (2017). Computation-Aware Dynamic Frequency Scaling: Parsimonious Evaluation of the Time-Energy Trade-Off Using Design of Experiments. In: Desprez, F., et al. Euro-Par 2016: Parallel Processing Workshops. Euro-Par 2016. Lecture Notes in Computer Science(), vol 10104. Springer, Cham. https://doi.org/10.1007/978-3-319-58943-5_47

DOI: https://doi.org/10.1007/978-3-319-58943-5_47

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-58942-8

Online ISBN: 978-3-319-58943-5

eBook Packages: Computer Science (R0)