Abstract

Sparse approximation of signals using often redundant and learned data dependent dictionaries has been successfully used in many applications in signal and image processing the last couple of decades. Finding the optimal sparse approximation is in general an NP complete problem and many suboptimal solutions have been proposed: greedy methods like Matching Pursuit (MP) and relaxation methods like Lasso. Algorithms developed for special dictionary structures can often greatly improve the speed, and sometimes the quality, of sparse approximation.

In this paper, we propose a new variant of MP using a Shift-Invariant Dictionary (SID) where the inherent dictionary structure is maximally exploited. The dictionary representation is simple, yet flexible, and equivalent to a general M channel synthesis FIR filter bank. Adapting the MP algorithm by using the SID structure gives a fast and compact sparse approximation algorithm with computational complexity of order \(\mathcal {O}(N \log N)\). In addition, a method to improve the sparse approximation using orthogonal matching pursuit, or any other block-based sparse approximation algorithm, is described. The SID-MP algorithm is tested by implementing it in a compact and fast C code (Matlab mex-file), and excellent performance of the algorithm is demonstrated.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Obtaining a sparse representation of a signal is an important part of many signal processing tasks. A typical way to do this is to use a dictionary of atoms, and to represent the signal as a linear sum of some of the available atoms. A sparse approximation of a signal \(\mathbf {x}\) of size \(N \times 1\), allowing a (small) error \(\mathbf {r}\), can be written as

where D is the \(N \times K\) dictionary, \(\mathbf {d}_k\) of size \(N \times 1\) is atom number k, w(k) is coefficient k, and \(\mathbf {w}\) is the \(K \times 1\) coefficient vector. For a sparse approximation, only a limited number, s, of the coefficients are non-zero.

Common predefined dictionaries are the Discrete Cosine Transform (DCT) basis, wavelet packages and Gabor bases, both orthogonal and overcomplete variants can be used. Advantages of these predefined dictionaries are: They can be implicitly stored, they are, in general, signal independent and thus can successfully be used for most classes of signals, and fast algorithms are available for common operations. The overlapping transforms, i.e. wavelets and filter banks, perform better than the block-based transforms, like DCT, on many common signals. This is because the structure in the overlapping transforms does not need the signal to be divided into (small) blocks and thus they avoid the blocking artifacts.

Learned dictionaries are an alternative to predefined dictionaries. Since they are learned, i.e. adapted to a certain class of signals given by a relevant set of training signals, they will usually give better sparse approximation results for signals belonging to the class. Many methods have been proposed for dictionary learning; K-SVD [2], ODL [14], MOD [8, 9] and RLS-DLA [23]. All these methods are block-based and the blocks should be rather small to limit the number of free variables in the dictionary; as seen from Eq. 1 the number of elements in D is NK.

Structured learned dictionaries try to combine the advantages of predefined dictionaries and general (all elements are free) learned dictionaries [17, 22]. Imposing a structure on the dictionary allows the atoms to be overlapping and the dictionary could be very large and still have a reasonable number of free variables. In addition, the structure can make dictionary learning computationally more tractable. Several variants of structured dictionaries are possible [1, 3, 9, 22]. An example of a structured dictionary that has been shown to be useful is the Shift-Invariant Dictionary (SID) [12, 18, 20]. In this paper, we propose a flexible representation for the SID structure.

The sparse approximation step is an important part when both using and learning a dictionary. It is often the computationally most expensive part, thus its speed is very important. Popular approaches are the \(l_1\)-norm minimization methods like LARS/Lasso [7], and the greedy algorithms directly using the \(l_0\)-quasinorm. For the latter approach Matching Pursuit (MP) [15] and its many variants, like orthogonal matching pursuit [19], are much used. MP algorithms typically has computationally complexity of \(\mathcal {O}(N^3)\) and this effectively limits the dictionary size. To overcome this restriction FFT-based algorithms tailored for shift-invariant dictionaries have been developed [10].

FFT-based algorithms work very well for regular dictionaries, i.e. Gabor, harmonic, chirp or DCT based atoms, but for short arbitrary waveforms time-domain algorithms should be expected to perform better. As an example the execution times for Matlab functions fftfilt() and filter() can be compared for different signal and filter lengths; the time domain approach is faster for filters shorter than 300 and a signal length of 10000 samples. For many learned shift-invariant dictionaries, the atoms will be arbitrary and of limited (short) length, and sparse approximation should benefit from using a well-designed and well-implemented time domain based algorithm. Therefore, this is what we present here.

The matching pursuit variant presented in this paper, denoted as SID-MP, is completely implemented in time domain but still has the same low computationally complexity of order \(\mathcal {O}(N \log N)\) as the FFT-based algorithms, it uses a simple and flexible representation for the shift-invariant dictionary, and it allows the atoms to be shifted in steps larger than one. For short arbitrary shape atoms, it should be faster than FFT-based algorithms.

Orthogonal Matching Pursuit (OMP) finds better approximations than MP using the same number of non-zeros [4] and fast algorithms are available for small dictionaries. For large shift-invariant dictionaries (true) OMP is slow, but a low complexity approximate OMP variant has been presented by Mailhé et al. [11]. This variant is FFT-based and achieves results close to true OMP with execution times close to MP. We propose another OMP variant, denoted SID-OMP. Its main idea is to divide the long signal into blocks (segments) of moderate size that are processed by OMP or any other block-oriented sparse approximation algorithm. By doing several iterations, or using partial search [24] instead of OMP, better approximation than (true) OMP can be achieved. The SID structure is used in the proposed method, it has computationally complexity of order \(\mathcal {O}(N)\) but the constant factor is large and depends on the segment size.

The organization of this paper is: Sect. 2 presents the representation of the SID structure. The proposed algorithm SID-MP is presented in Sect. 3 and the SID-OMP method in Sect. 4. Finally, in Sect. 5, it is shown that the proposed algorithms are fast and efficient.

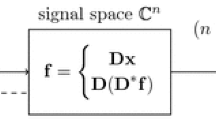

A general synthesis filter bank of M channels (filters). Filter \(\mathbf {f}_m\) has length \(Q_m\) and upsampling factor \(P_m\), these values may be different for each filter. \(\tilde{w}_{m}(n)\) is the upsampled coefficients for filter m. If each filter \(\mathbf {f}_m\) here is given as the reversed (and shifted) atom \(\mathbf {q}_m\) the synthesis equation can be written as in Eq. 5.

2 The Shift-Invariant Dictionary

A Shift-Invariant Dictionary (SID) should be represented independently of the signal size, and it should be easy to extend it to match the (possibly large) signal length N. In this paper, we suggest to use a dictionary structure, denoted as SID structure, equivalent to the general synthesis filter bank in Fig. 1. A matrix representation as in Eq. 1 is equivalent to the M channels filter bank in Fig. 1 when each channel is represented by one submatrix \(D_m\) and the dictionary is a concatenation of these:

One submatrix, \(D_m\), contains one atom (the reversed synthesis filter) and its shifts (below the shift \(P_m=1\) is visualized):

Atom m is denoted as \(\mathbf {q}_m\) with elements \(q_m(i)\) for \(i=1,2,\ldots ,Q_m\). It has length \(Q_m\) and is shifted \(P_m\) positions down for each new column in \(D_m\). Here it is assumed that each atom has unit norm and is real. Note that the support for all shifts of an atom is completely within the dictionary column (length N). There is neither circular extension nor mirroring at the ends. This means that the number of columns in submatrix \(D_m\) is given as:

\(\lceil \cdot \rceil \) denotes the ceiling function. The dictionary D has size \(N \times K\) where \(K = \sum _{m=1}^M K_m\). The overlapping factor, i.e. the ratio K/N (as \(N \rightarrow \infty \)), is \(\sum _{m=1}^M 1\)/\(P_m\). The SID structure is given by the number of atoms M, the atom lengths \(\{Q_m\}_{m=1}^M\), their shifts \(\{P_m\}_{m=1}^M\), and their \(Q=\sum _{m=1}^M Q_m\) values which are collected into one vector \(\mathbf {q^T} = [\mathbf {q}_1^T, \mathbf {q}_2^T, \ldots \mathbf {q}_M^T]\). This structure for a shift-invariant dictionary is actually quite flexible, and many dictionaries fit quite well into this structure. It is a subclass of the flexible structure dictionary introduced in [3].

The sparse approximation equation can be divided into blocks as

where the approximation is split into M terms, each corresponding to a submatrix of the dictionary. It is easily seen that this is an alternative representation of a general synthesis FIR filter bank [5] of M filters as in Fig. 1.

3 Matching Pursuit Using SID

Matching Pursuit (MP), presented and analyzed in [6, 15], is the simplest of the matching pursuit algorithms. An overview of the algorithm is given in Fig. 2. The MP algorithm is quite simple, but it is not very efficient. The loop may be executed many times (\({\ge }s\)), sometimes selecting the same dictionary atom multiple times, and the calculation of the inner products (line 4) is demanding, where approximately NK multiplications and additions are done. As both K and the number of non-zero coefficients to select, s, are (usually) of the order \(\mathcal {O}(N)\) this gives the computational complexity of MP as \(\mathcal {O}(N^3)\). Using FFT and taking advantage of the dictionary structure, and all shift steps equal to one \((P_m = 1)\), shift-invariant MP can be implemented by an algorithm of the order \(\mathcal {O}(N \log N)\) [10]. Below, we present a simple time domain algorithm that exploits the SID structure, and allows different shift steps \(P_m \ge 1\). It follows the steps of MP as seen in Fig. 2, but has the complexity order of \(\mathcal {O}(N \log N)\) or \(\mathcal {O}(MQN \log N)\) if the (constant) SID size is included.

The Matching pursuit algorithm using shift-invariant dictionary. Input s is the target number of non-zero coefficients to select. The inner products are stored in a heap, initialized by the InitHeap-function, top extracted by topHeap-function, and in line 8 the inner products and the heap are updated, but only for atoms where the inner product has changed, i.e. the atoms with support overlapping the support of the selected atom.

The proposed algorithm is done in a straightforward way in time domain, but exploiting the SID structure maximally and thus making the inner loop as fast as possible. An outline of the algorithm is given in Fig. 3 and the similarity to MP in Fig. 2 is obvious. The input is the SID which is given by the number of atoms M, the atom lengths \(\{Q_m\}_{m=1}^M\), their shifts \(\{P_m\}_{m=1}^M\), and their Q values, the signal \(\mathbf {x}\) (length N) and the target number of non-zeros s. In the analysis below the SID is assumed to be constant and independent of signal length N.

As for MP, initialization sets \(\mathbf {w}\) to zeros and the residual \(\mathbf {r}\) to \(\mathbf {x}\), but here the calculation of the inner products, \(\mathbf {c}\), is done before the main loop. Since the atoms has fixed support the complexity is \(\mathcal {O}(N)\). Line 5 in Fig. 2 has the computational complexity of \(\mathcal {O}(N)\) which is not wanted inside a loop. To avoid this a max-heap data structure is used, to build the heap outside the loop is \(\mathcal {O}(N)\). Inside the loop max is found in \(\mathcal {O}(1)\) and the heap is updated in \(\mathcal {O}(\log N)\).

The most demanding task inside the loop is to update the involved inner products and their place in the heap. But, since all atoms have local support the number of inner products to update is limited, depending only of the SID structure and independent of signal length N. The update step (line 8) is thus \(\mathcal {O}(\log N)\) which also becomes the order of the loop. The loop is done at least \(s = \mathcal {O}(N)\) times. This gives the computational cost for the SID-MP algorithm in the order of \(\mathcal {O}(N \log N)\).

An implementation of this algorithm could be both compact and fast and it will be available on the web: http://www.ux.uis.no/~karlsk/dle/.

4 Orthogonal MP Using SID

Orthogonal Matching Pursuit (OMP), somewhere denoted as Order Recursive Matching Pursuit (ORMP), finds better approximations than MP using the same number of non-zeros [4]. A throughout description of OMP/ORMP can be found in [4] or [24] and is not included here. For small (or moderately sized) dictionaries OMP is efficient, and fast implementations using QR-factorization are available: SPAMS from Mairal et al. [13] and OMPbox from Rubinstein et al. [21]. Nevertheless, these effective implementations have time complexity of order \(\mathcal {O}((K+s^2)N)=\mathcal {O}(N^3)\) where N and K refer to the size of the dictionary for the signal block.

To do OMP on a large signal using a shift-invariant dictionary is more complicated than to do MP; since orthogonalization is done when a new atom is selected the effect on the residual, and thus the updated inner products, goes beyond the support of the selected atom. But the effect decreases as the distance from the selected atom increases. Using this fact, an approximate OMP algorithm can be developed in a way similar to the MP algorithm [11]. Another approach is used in the proposed method. It utilizes the local support of the atoms to divide the large OMP problem into many moderately sized OMP problems. The segment size is fixed and independent of N giving the computational complexity as \(\mathcal {O}(N)\), but the constant factor is large \(\mathcal {O}(N_{seg}^3)\) where \(N_{seg}\) is the signal segment length used in the OMP problems.

The proposed method, denoted as SID-OMP, has almost the same input as the SID-MP algorithm in Fig. 3: the shif-invariant dictionary represented as a SID structure, the long signal \(\mathbf {x}\) of length N, and the target number of non-zero coefficients s or an initial coefficient vector \(\mathbf {w}\) having the target number s non-zero elements. The initial coefficient vector \(\mathbf {w}\) is found by the SID-MP algorithm in previous section if s is given as input, or \(\mathbf {w}\) could be found by another preferred initialization method and given directly as input. The algorithm then does some few iterations, and in each iteration improved coefficients are found but the number of non-zero coefficients is unchanged. The coefficient vector \(\mathbf {w}\) is returned. A detailed description of one iteration follows:

-

1.

The signal is divided into L consecutive segments, the segment length is \(N_{seg}\) and the signal may be longer than the total length of the L segments, \(N = N_{b} + L N_{seg} + N_{e}\). The excess portions are \(N_{b}\) samples at the beginning and \(N_{e}\) samples at the end of the signal, \(0 \le N_{b}, N_{e} < N_{seg}\).

-

2.

The coefficients for atoms with support completely within one segment are set to zero, for each segment the number of coefficients set to zero is stored: \(s_i\) for \(i=1,2,\ldots ,L\). The other coefficients are not changed. The original coefficients (and segment residuals) are also stored, and may be restored for some of the segments (in step 5 below).

-

3.

The residual is calculated using the remaining coefficients, i.e. only the non-zero coefficients belonging to atoms with support that span two segments.

-

4.

The residual segments are used as input for the block-oriented sparse approximation algorithm. If the segment size is chosen correctly, depending on the SID (\(P_m\) values), the dictionary \(D_{seg}\) will be the same for all segments and all iterations. For each segment the target number of non-zeros is \(s_i\) as found in step 2.

-

5.

The coefficients are updated. If the sparse representation for a segment is better than what it was the new coefficients are used, if not the coefficients are set to the values they had when the iteration started. This way each iteration will improve (or keep) the sparse approximation.

-

6.

Go back and do next iteration using a new segmentation of the original signal, i.e. new values for \(N_{b}\) and \(N_{e}\). A few (4–8) iterations are sufficient.

One remark should be made to the algorithm as it is described above. The locations of the non-zeros coefficients will only change slowly from one iteration to the next, and it will take many iterations to move one non-zero coefficient a long distance. This problem with the algorithm can be avoided by selecting a good initial distribution, as SID-MP will give, or by modifying the algorithm: For all segments (\(i = 1,2,\ldots ,L\)) the sparse approximation in step 4 could find solutions for \(s_i-1\), \(s_i\) and \(s_i+1\) non-zero coefficients and the respective residual norms, \(r_{i,-1}, r_{i,0}, r_{i,+1}\), can be calculated. A non-zero coefficient should be moved from segment i to segment j if \((r_{j,0}-r_{j,+1}) > (r_{i,-1}-r_{i,0})\). Repeating this will move non-zeros from one segment to another as long as this operation will reduce the total error. The locations of the non-zeros will now move more quickly between signal parts. We should also note that SID-OMP does not need to use OMP as the sparse approximation algorithm, any block-oriented algorithm will do.

5 Experiments

In this section we present three simple experiments to illustrate how the proposed methods work. All experiments are conducted on an ECG signal from the MIT arrhythmia database [16]. The used signal, MIT100 where a short segment is shown in Fig. 5, is a normal heart rhythm, and the reason for using such an ECG signal is that it will obviously benefit from a SID structure. This signal was also used for dictionary learning. As the purpose of these experiments is to test sparse approximation only, not a particular application, the actual signal or dictionaries used are not that important.

Experiment 1 is done to show how well, i.e. how fast, the SID-MP and SID-OMP algorithms run as the signal size increases. A SID with structure \(M=5\), \(Q_m=\{35,25,20,10,10\}\) and \(P_m=\{5,2,2,1,1\}\) as illustrated in Fig. 4, is used to make a sparse approximation of the MIT100 signal. The sparsity factor throughout the experiment is kept at 0.08, i.e. the number of non-zero coefficients is \(0.08\,N\), where N is the signal length. The MIT100 signal is 250000 samples long, and longer test signals are made simply by repeating it. Figure 6 shows the running time for SID-MP as the signal length increases, it scales (as expected) close to linear order and it is fast. For the longest signal, N = 5 million samples and the total number of dictionary atoms is K = 15.6 million, the number of non-zero coefficients is 0.4 million, and to find these takes less than 8 s on an Intel Core i5 3.2 GHz CPU PC. Another advantage of the proposed algorithm is that it is small (the compiled mex-file is 23 kB) and it does not depend on any special pre-installed libraries. The running times for one iteration for SID-OMP are also shown in Fig. 6, this line shows the linear order for computational times.

In Experiment 2 SID-OMP is tested using different segment sizes \(N_{seg}\) and different number of iterations. For one segment size OMP is replaced by the computationally more demanding partial search algorithm [24] in the sparse approximation step. All tests use 250000 samples of the MIT100 signal and the sparsity factor is 0.08. Doing eight iterations the Signal to Noise Ratio (SNR) improves for each iteration as shown in Table 1. Most of the improvements is achieved already after two iterations, and using smaller segment sizes keeps on improving SNR for more iterations. After eight iterations, using \(N_{seg} = 150\) is the better option. Also, using many small segments is faster that using fewer and larger segments. Thus it is better to use smaller segments than larger, but note that \(N_{seg}\) should always be larger than \(\max _m Q_m\).

Experiment 3 tests SID-MP and SID-OMP using five different dictionaries, i.e. SID structures. The first three dictionaries are learned for the MIT100 signal, the first is as in Fig. 4. The fourth dictionary is a SID structure with atoms set as low-pass or band-pass filters, \(Q_m \in \{128, 64, 32, 16\}\) and \(P_m \in \{8, 4, 2, 1\}\), and the fifth dictionary has synthesis atoms from the modified DCT transform, \(Q_m=64\) and \(P_m=1\). The results are shown in Table 2.

6 Conclusion

The presented SID-MP is a variant of the matching pursuit algorithm that can be used for a shift-invariant dictionary structure. This structure is flexible as it encompasses any system that can be expressed as a general synthesis FIR filter bank of M filters, including the shift-invariant dictionary. The algorithm is fast and scales well to large signals. We also presented the SID-OMP method that allows us to use any (fast) block-oriented MP algorithm to further improve the sparse approximation.

References

Aharon, M., Elad, M.: Sparse and redundant modeling of image content using an image-signature-dictionary. SIAM J. Imaging Sci. 1(3), 228–247 (2008)

Aharon, M., Elad, M., Bruckstein, A.: K-SVD: an algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans. Signal Process. 54(11), 4311–4322 (2006). http://dx.doi.org/10.1109/TSP.2006.881199

Barzideh, F., Skretting, K., Engan, K.: The flexible signature dictionary. In: Proceedings of 23rd European Signal Processing Conference, EUSIPCO-2015, Nice, France, September 2015

Cotter, S.F., Adler, J., Rao, B.D., Kreutz-Delgado, K.: Forward sequential algorithms for best basis selection. IEE Proc. Vis. Image Signal Process 146(5), 235–244 (1999)

Cvetković, Z., Vetterli, M.: Oversampled filter banks. IEEE Trans. Signal Process. 46(5), 1245–1255 (1998)

Davis, G.: Adaptive nonlinear approximations. Ph.D. thesis, New York University, September 1994

Efron, B., Hastie, T., Johnstone, I., Tibshirani, R.: Least angle regression. Ann. Stat. 32, 407–499 (2004)

Engan, K., Aase, S.O., Husøy, J.H.: Method of optimal directions for frame design. In: Proceedings of ICASSP 1999, Phoenix, USA, pp. 2443–2446, March 1999

Engan, K., Skretting, K., Husøy, J.H.: A family of iterative LS-based dictionary learning algorithms, ILS-DLA, for sparse signal representation. Digit. Signal Proc. 17, 32–49 (2007)

Krstulovic, S., Gribonval, R.: MPTK: matching pursuit made tractable. In: Proceedings ICASSP 2006, Toulouse, France, vol. 3, pp. 496–499, May 2006

Mailhé, B., Gribonval, R., Bimbot, F., Vandergheynst, P.: A low complexity orthogonal matching pursuit for sparse signal approximation with shift-invariant dictionaries. In: Proceedings ICASSP 2009, Taipei, Taiwan, pp. 3445–3448, April 2009

Mailhé, B., Lesage, S., Gribonval, R., Bimbot, F.: Shift-invariant dictionary learning for sparse representations: extending K-SVD. In: Proceedings of the 16th European Signal Processing Conference (EUSIPCO-2008), Lausanne, Switzerland, August 2008

Mairal, J., Bach, F., Ponce, J.: Sparse modeling for image and vision processing. Found. Trends Comput. Graph. Vis. 8(2–3), 85–283 (2014)

Mairal, J., Bach, F., Ponce, J., Sapiro, G.: Online dictionary learning for sparse coding. In: ICML 2009: Proceedings of the 26th Annual International Conference on Machine Learning, pp. 689–696. ACM, New York, June 2009

Mallat, S.G., Zhang, Z.: Matching pursuit with time-frequency dictionaries. IEEE Trans. Signal Process. 41(12), 3397–3415 (1993)

Massachusetts Institute of Technology: The MIT-BIH Arrhythmia Database CD-ROM, 2 edn. MIT (1992)

Muramatsu, S.: Structured dictionary learning with 2-D non-separable oversampled lapped transform. In: Proceedings ICASSP 2014, Florence, Italy, pp. 2643–2647, May 2014

O’Hanlon, K., Plumbley, M.D.: Structure-aware dictionary learning with harmonic atoms. In: Proceedings of the 19th European Signal Processing Conference (EUSIPCO-2011), Barcelona, Spain, August 2011

Pati, Y.C., Rezaiifar, R., Krishnaprasad, P.S.: Orthogonal matching pursuit: recursive function approximation with applications to wavelet decomposition. In: Proceedings of Asilomar Conference on Signals Systems and Computers, November 1993

Pope, G., Aubel, C., Studer, C.: Learning phase-invariant dictionaries. In: Proceedings ICASSP 2013, Vancouver, Canada, May 2013

Rubinstein, R., Zibulevsky, M., Elad, M.: Efficient implementation of the K-SVD algorithm using batch orthogonal matching pursuit. Technical report, CS Technion, Haifa, Israel, April 2008

Rubinstein, R., Zibulevsky, M., Elad, M.: Double sparsity: learning sparse dictionaries for sparse signal approximation. IEEE Trans. Signal Process. 58(3), 1553–1564 (2010)

Skretting, K., Engan, K.: Recursive least squares dictionary learning algorithm. IEEE Trans. Signal Process. 58, 2121–2130 (2010). doi:10.1109/TSP.2010.2040671

Skretting, K., Husøy, J.H.: Partial search vector selection for sparse signal representation. In: NORSIG-2003, Bergen, Norway (2003). http://www.ux.uis.no/~karlsk/

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Skretting, K., Engan, K. (2017). Sparse Approximation by Matching Pursuit Using Shift-Invariant Dictionary. In: Sharma, P., Bianchi, F. (eds) Image Analysis. SCIA 2017. Lecture Notes in Computer Science(), vol 10269. Springer, Cham. https://doi.org/10.1007/978-3-319-59126-1_30

Download citation

DOI: https://doi.org/10.1007/978-3-319-59126-1_30

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-59125-4

Online ISBN: 978-3-319-59126-1

eBook Packages: Computer ScienceComputer Science (R0)