Abstract

The current practice of assessing infants’ pain is subjective and intermittent. The misinterpretation or lack of attention to infants’ pain experience may lead to misdiagnosis and over- or under-treatment. Studies have found that poor management and treatment of infants’ pain can cause permanent alterations to brain structure and function. To address these shortcomings, the current practice can be augmented with an automated system that monitors various pain indicators continuously and provides a quantitative assessment. In this paper, we present methods to analyze infants’ crying sounds and other pain indicators for the purpose of developing an automated multimodal pain assessment system. The average accuracy of estimating infants’ level of cry was around 88%. Combining crying sounds with facial expression, body motion, and vital signs to classify infants’ emotional states as no pain or severe pain yielded an accuracy of 96.6%. The reported results demonstrate the feasibility of developing an automated system that integrates multiple pain modalities for pain assessment in infants.

1 Introduction

On average, infants receiving care in the Neonatal Intensive Care Unit (NICU) experience fourteen painful procedures per day [7]. Inadequate management of infants’ pain caused by poor assessment during this vulnerable developmental period can have serious long-term effects. Studies have found that the experience of pain in preterm and post-term infants might be associated with permanent neuroanatomical changes and with behavioral, developmental, and learning disabilities [9]. Additionally, inadequate treatment of infants’ pain may increase avoidance behaviors and social hypervigilance [10]. Therefore, assessing pain accurately using valid, standardized, and reliable pain assessment tools is critical.

Current assessment of infants’ pain involves bedside caregivers observing objective measures (e.g., heart rate and blood pressure) along with subjective indicators (e.g., facial expression and crying). This practice has two main shortcomings. First, it relies on caregivers’ subjective interpretation of multiple indicators and fails to meet rigorous psychometric standards; inter-observer variation in the use of pediatric pain scales may result in inconsistent assessment and treatment of pain. Second, the current pain assessment is intermittent, which may cause caregivers to miss pain or delay its detection and treatment. To mitigate these shortcomings and provide a standardized, continuous assessment of infants’ pain, we proposed an automated pain assessment system in [19]. That work reported preliminary results for assessing pain from facial expression, body motion, and vital signs; this paper extends it by adding crying sounds and state of arousal¹ as behavioral measures of pain.

Crying is one of the most widely used pain indicators in infants. Several studies [8, 14, 18] reported that crying sound is one of the most specific indicators of pain and emphasized the importance of including it as a behavioral measure when assessing infants’ pain. As such, most pediatric pain scales include crying sound as a main indicator of pain. Additionally, clinical studies [5, 8] have found that premature infants (i.e., gestational age 32–34 weeks) have a limited ability to sustain the facial actions associated with pain because their facial muscles are not well developed, and that they communicate pain mainly through crying. Hence, crying sounds should be included as a main indicator of pain when developing an automated pain assessment system. State of arousal is another behavioral measure that is often included as a dimension of valid pain scales. Analyzing crying sounds and state of arousal along with other pain indicators allows us to develop an automated multimodal assessment system comparable to the current pediatric scales.

This paper makes two main contributions.

First, it is the first paper to present a completely automated version of the current multimodal pediatric pain scales that is both continuous and standardized. The proposed system can be easily integrated into clinical environments because it monitors infants with non-invasive devices (e.g., RGB cameras). Continuous assessment is important because infants might experience pain while left unattended. The multimodal design allows reliable assessment even when some indicators are unavailable owing to developmental stage, clinical condition, or level of activity.

Second, this paper presents the first automatic analysis of infants’ sounds for the purpose of estimating the level of pain. Specifically, we used signal processing and machine learning methods to analyze infants’ sounds and classify them as no cry, whimper cry, or vigorous cry. We also combined infants’ crying sounds with other pain indicators to classify the infants’ emotional state as no pain or severe pain.

In the next section, we briefly discuss current automated methods to assess infants’ pain, followed by a description of the study design and data collection. In Sect. 4, we present our automated system for assessing infants’ pain. Section 5 includes the experimental results for infants’ pain assessment. Finally, we conclude and discuss possible future directions in Sect. 6.

2 Overview of Current Work

Although much effort has been made to assess pain using computer vision and machine learning methods, the vast majority of the current work in this area focuses on adults’ pain assessment. Works that investigate machine assessment of infants’ pain are discussed next.

Crying sound is a behavioral indicator that has commonly been used to classify infants’ emotional states. One of the first studies to analyze infants’ crying sounds was introduced in [14]. To extract features for classification, the Mel Frequency Cepstral Coefficients (MFCC) method was applied to segmented crying signals to extract sixteen coefficients as features. The accuracy of classifying the crying sounds of sixteen infants as pain cry, fear cry, or anger cry was 90.4%. Similarly, Vempada et al. [18] investigated the use of thirteen MFCC coefficients along with other time-domain features for recognizing infants’ crying sounds. A total of 120 hospitalized premature infants were recorded in different emotional states, namely pain, hunger, or wet diaper. Score-level and feature-level fusion were performed to classify infants’ cries into one of the three classes, with weighted accuracies of around 74% for feature-level fusion and 81% for score-level fusion.

Another behavioral indicator that is commonly used to assess infants’ pain experience is facial expression. Automated methods that analyze infants’ facial expressions and classify them as pain or no-pain expressions can be found in [1, 3]. For physiological pain indicators, studies have found an association between pain and physiological measures such as vital signs [2] and changes in cerebral oxygenation [15, 16].

Failure to record a specific pain indicator is common in clinical environments for several reasons, such as developmental stage (e.g., an infant’s facial muscles are not well developed), physical exertion (e.g., exhaustion), specific disorders (e.g., paralysis), clinical conditions (e.g., occlusion by an oxygen mask), and individual differences. Therefore, combining multiple indicators can provide a more reliable pain assessment.

Pal et al. [12] presented a bimodal method that classifies infants’ emotional states as pain, hunger, anger, sadness, or fear based on analysis of facial expression and crying sounds. Similarly, Zamzmi et al. [19] introduced an automated approach to assess infants’ pain based on analysis of facial expression, body motion, and vital signs. The results of these works showed that combining multiple modalities might provide a reliable assessment of infants’ emotional states. We refer the reader to our survey paper [20] for a comprehensive review and discussion of existing machine-based pain assessment methods.

3 Study Design

3.1 Subjects

Data were recorded from a total of eighteen infants during acute episodic painful procedures. Infants’ average gestational age was 36 weeks; seven infants were Caucasian, three Hispanic, three African American, two Asian, and three of other ethnicities. Any infant born between 28 and 41 weeks of gestation was eligible for enrollment after informed consent was obtained from the parents. Infants with craniofacial abnormalities or neuromuscular disorders were excluded.

3.2 Apparatus

Video and audio recordings were carried out using a GoPro Hero3+ camera to capture the infant’s facial expression and body motion and to record crying sounds. Because digital output of the vital signs (i.e., the vital signs shown in Fig. 1D) was not readily available from the monitor, we placed another GoPro camera in front of a Philips MP-70 cardio-respiratory monitor to record its display, and Optical Character Recognition (OCR) was performed to convert the recorded vital signs into numeric data. All study recordings were carried out in the normal clinical environment, modified only by the addition of the cameras.

3.3 Data Collection and Ground Truth Labeling

Infants undergoing routine acute painful procedures, such as heel lancing and immunization, were recorded over seven epochs. Specifically, the infants were recorded for five minutes prior to the painful procedure (i.e., epoch 1) to obtain the baseline state. They were then recorded at the start of the painful procedure (i.e., epoch 2) and every minute for five minutes after its completion (i.e., epochs 3 to 7). To obtain the ground-truth labels, trained nurses scored the infants and estimated their level of pain at the beginning of each epoch using a pediatric pain scale known as the Neonatal Infant Pain Scale (NIPS).

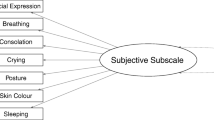

NIPS is a multimodal pain scale that takes into account both subjective and objective measures (i.e., behavioral and physiological indicators) when assessing infants’ pain. The scale involves observing facial expression, crying sound, body motion (i.e., arms and legs), and state of arousal along with vital signs readings to estimate the level of pain. Table 1 presents the score range for each NIPS indicator/measure. After scoring each of these measures individually, caregivers add the scores together to generate the total pain score. The total score is then used to classify the infant’s pain state as no pain (0–2), moderate pain (3–4), or severe pain (>4). We refer the interested reader to [4] for a further description of this pediatric scale and its measures. The performance of our algorithms is measured by comparing their output to the ground truth.
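The NIPS scoring rule reduces to a sum followed by two thresholds. The Python sketch below illustrates it; the indicator names and example scores are hypothetical, and the authoritative indicators and score ranges are those listed in Table 1.

```python
# Minimal sketch of NIPS total-score thresholding (no/moderate/severe pain).
# Indicator names used in the example are hypothetical placeholders.


def nips_total(scores: dict[str, int]) -> int:
    """Sum the individual NIPS indicator scores into a total pain score."""
    return sum(scores.values())


def nips_category(total: int) -> str:
    """Map a total NIPS score to the pain levels given in Sect. 3.3."""
    if total < 0:
        raise ValueError("NIPS scores are non-negative")
    if total <= 2:
        return "no pain"        # 0-2
    if total <= 4:
        return "moderate pain"  # 3-4
    return "severe pain"        # > 4


if __name__ == "__main__":
    example = {"face": 1, "cry": 2, "arms": 1, "legs": 1, "arousal": 0, "vitals": 1}
    total = nips_total(example)
    print(total, nips_category(total))  # prints: 6 severe pain
```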

4 Methodology

We divided the pain analysis methods into two groups: those that analyze behavioral measures and those that analyze physiological measures. An overview of the methodology for the entire assessment system is depicted in Fig. 1.

4.1 Methods that Analyze Behavioral Measures

We analyzed four behavioral measures and used them to assess infants’ pain. These measures are crying sound, facial expression, body motion, and state of arousal. The proposed methods to analyze each of these measures and extract pain-relevant features for classification are presented below.

Fig. 1. Overview of the Methodology for the Assessment System. A. The face in each frame is detected, cropped, and divided into four regions; optical flow is applied in each region and used to estimate the strain. B. The input crying signal is analyzed using LPCC and MFCC. C. The motion image is computed for the cropped body area. D. Statistics are computed for the input HR, RR, and SpO2 data.

Facial Expression. We used the method presented in our previous work [19] to analyze infants’ facial expression. As shown in Fig. 1A, the method consists of three main steps, which are preprocessing, feature extraction, and classification.

In the preprocessing stage, we detected the infant’s face in each frame using a Viola-Jones object detector trained specifically to detect infants’ faces in different positions and with varying degrees of facial obstruction. The detector was able to detect faces in frontal and near-frontal views but failed on faces with significant changes in position or with facial obstruction; these faces were excluded from further analysis, as were frames in which the infant’s face was out of sight. After detecting the face, we applied a facial landmark detection algorithm [21] to extract 68 facial points. These points were then used to align the face, crop it, and divide it into four regions.

To extract pain-relevant features, we used the strain-based expression segmentation algorithm presented in [19]. For classification, the extracted features (i.e., the strain magnitude computed for each of regions I to IV) are used to train different machine-learning classifiers such as Support Vector Machine (SVM) and Random Forest. We performed leave-one-subject-out cross-validation (LOSOXV) to evaluate the trained model and estimate its generalization performance. Within each training fold, 10-fold cross-validation was performed for parameter estimation.
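The exact strain computation of [19] is not restated here. As a minimal sketch, assuming a dense optical flow field (u, v) has already been computed for the aligned face crop, the standard small-strain optical strain tensor can be derived from the flow gradients, with e_xx = ∂u/∂x, e_yy = ∂v/∂y, e_xy = ½(∂u/∂y + ∂v/∂x), and magnitude sqrt(e_xx² + e_yy² + 2e_xy²). The quadrant split used below is a hypothetical stand-in for the landmark-based regions I to IV.

```python
import numpy as np


def strain_magnitude(u: np.ndarray, v: np.ndarray) -> np.ndarray:
    """Per-pixel optical strain magnitude from a dense flow field (u, v)."""
    # np.gradient returns derivatives along axis 0 (rows, y) then axis 1 (cols, x).
    du_dy, du_dx = np.gradient(u)
    dv_dy, dv_dx = np.gradient(v)
    e_xy = 0.5 * (du_dy + dv_dx)  # symmetric shear component
    return np.sqrt(du_dx**2 + dv_dy**2 + 2.0 * e_xy**2)


def region_strain_features(u: np.ndarray, v: np.ndarray) -> np.ndarray:
    """Summed strain magnitude over four regions (hypothetical quadrant split)."""
    mag = strain_magnitude(u, v)
    h, w = mag.shape
    top, bottom = mag[: h // 2], mag[h // 2 :]
    return np.array([
        top[:, : w // 2].sum(), top[:, w // 2 :].sum(),
        bottom[:, : w // 2].sum(), bottom[:, w // 2 :].sum(),
    ])
```

A pure translation (constant u and v) yields zero strain, whereas stretching or shearing of the skin, as during a pain expression, yields non-zero strain; this is why strain rather than raw flow is used as the feature.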

Crying Sound. We employed Yang’s speech recognition method [11] to extract pain-relevant features from infants’ sounds. As shown in Fig. 1B, the method consists of three main steps: preprocessing, feature extraction, and classification.

The preprocessing step divides the audio signals into consecutive overlapping windows. Linear Prediction Cepstral Coefficients (LPCC) and MFCC coefficients were then extracted from each window as features: LPCC using a 32 ms Hamming window with 16 ms overlap, and MFCC using a 30 ms Hamming window with a 10 ms shift. After extraction, the LPCC and MFCC coefficients were compressed using vector quantization. The compressed vector was then used to train a least-squares SVM (LS-SVM) for classification. To evaluate the classifier and estimate its generalization performance, we performed leave-one-subject-out cross-validation (LOSOXV). Within each training fold, another level of cross-validation was performed for parameter estimation.
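The framing and LPCC steps can be sketched in NumPy as follows, assuming a mono signal array and a known sampling rate. The window and hop lengths follow the text (a 32 ms window with 16 ms overlap gives a 16 ms hop); the LPC order and number of cepstral coefficients (12 each) are assumptions, and MFCC extraction and vector quantization are omitted. LPC uses the autocorrelation method with Levinson-Durbin recursion, and LPCC uses the standard LPC-to-cepstrum recursion.

```python
import numpy as np


def frame_signal(x, sr, win_ms, hop_ms):
    """Split a 1-D signal into overlapping, Hamming-windowed frames."""
    win = int(round(sr * win_ms / 1000))
    hop = int(round(sr * hop_ms / 1000))
    if len(x) < win:
        raise ValueError("signal is shorter than one window")
    n_frames = 1 + (len(x) - win) // hop
    # Row i holds sample indices [i*hop, i*hop + win).
    idx = hop * np.arange(n_frames)[:, None] + np.arange(win)[None, :]
    return x[idx] * np.hamming(win)


def lpc(frame, order):
    """Predictor coefficients a_1..a_p (x[n] ~ sum_k a_k x[n-k]) and residual
    energy, via the autocorrelation method and Levinson-Durbin recursion."""
    n = len(frame)
    r = np.array([np.dot(frame[: n - k], frame[k:]) for k in range(order + 1)])
    a = np.zeros(order + 1)  # a[0] is unused
    err = r[0]
    if err <= 0:  # silent frame: nothing to predict
        return a[1:], 0.0
    for i in range(1, order + 1):
        k = (r[i] - np.dot(a[1:i], r[i - 1:0:-1])) / err  # reflection coefficient
        prev = a.copy()
        a[i] = k
        a[1:i] = prev[1:i] - k * prev[i - 1:0:-1]
        err *= 1.0 - k * k
        if err <= 0:  # perfectly predictable signal: stop early
            break
    return a[1:], err


def lpc_to_cepstrum(a, n_ceps):
    """LPCC c_1..c_n from predictor coefficients (gain term c_0 omitted)."""
    p = len(a)
    c = np.zeros(n_ceps + 1)
    for n in range(1, n_ceps + 1):
        acc = a[n - 1] if n <= p else 0.0
        for k in range(max(1, n - p), n):
            acc += (k / n) * c[k] * a[n - k - 1]
        c[n] = acc
    return c[1:]


def lpcc_features(x, sr, order=12, n_ceps=12):
    """LPCC per 32 ms frame with a 16 ms hop (16 ms overlap), as in the text."""
    frames = frame_signal(x, sr, win_ms=32, hop_ms=16)
    return np.array([lpc_to_cepstrum(lpc(f, order)[0], n_ceps) for f in frames])
```

The same `frame_signal` helper with `win_ms=30, hop_ms=10` would produce the framing described for MFCC.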

Body Motion. To extract pain-relevant features from the infant’s body, we computed the motion image between consecutive video frames after cropping the exact body area, as shown in Fig. 1C. The motion image is a binary image in which moving pixels have a value of one and static pixels a value of zero. Frames in which the infant’s body was out of sight or occluded were excluded from further analysis. We then applied a median filter to reduce noise in the computed image and obtain the maximum visible movement.

The amount of body motion is a good indicator of the infant’s emotional state. Generally, a relaxed infant moves less than an infant who is in pain. Hence, we used the amount of motion in each video frame, computed by summing the pixels of the motion image, as the main feature for classification. The interested reader is referred to our previous work [19] for more details about our method to analyze infants’ body motion.
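One common way to obtain such a binary motion image is frame differencing with a threshold. The sketch below assumes grayscale uint8 frames of the cropped body area; the differencing approach and the threshold value of 15 are assumptions, since the text does not state how moving pixels are detected. A 3×3 median filter removes isolated noisy pixels before the moving pixels are counted.

```python
import numpy as np


def motion_image(prev, curr, thresh=15):
    """Binary motion image: 1 where |curr - prev| exceeds a (hypothetical) threshold."""
    diff = np.abs(curr.astype(np.int32) - prev.astype(np.int32))
    return (diff > thresh).astype(np.uint8)


def median3x3(img):
    """3x3 median filter with edge replication, in pure NumPy."""
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    windows = np.stack([padded[dy:dy + h, dx:dx + w]
                        for dy in range(3) for dx in range(3)])
    return np.median(windows, axis=0).astype(img.dtype)


def motion_amount(prev, curr, thresh=15):
    """Amount of motion = number of moving pixels after median filtering."""
    return int(median3x3(motion_image(prev, curr, thresh)).sum())
```

For example, a single flickering pixel is removed entirely by the median filter, while a moving 4×4 patch survives with its four corner pixels trimmed.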

State of Arousal. Bedside caregivers determine the state of arousal for an infant by observing the infant’s behavioral responses (e.g., facial and body movements) to a painful stimulus [17]. For example, an infant in a sleep or calm state shows a relaxed/neutral expression and little or no body activity, while an infant in the alert state shows facial expressions and more frequent body activity. Consequently, we used facial expression and body motion to estimate the infant’s state of arousal.

4.2 Methods that Analyze Physiological Measures

To assess infants’ pain using objective measures, the numeric readings of vital signs (i.e., heart rate [HR], respiratory rate [RR], and oxygen saturation [SpO2]) were extracted from short epochs, and a median filter was applied to remove outliers. For classification, we calculated descriptive statistics (e.g., mean and standard deviation) from the extracted vital signs readings and used them to train different machine-learning classifiers (e.g., Random Forest). To evaluate the classifiers and estimate their parameters, we used the same procedure described for facial expression.
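A standard-library sketch of the vital-sign features follows, assuming each sign is available as a list of numeric readings (e.g., from OCR) for one epoch. The window size k=3 and the two statistics computed are illustrative assumptions; the text lists mean and standard deviation only as examples.

```python
import statistics


def median_filter_1d(values, k=3):
    """Sliding-window median with odd window k; edges use a truncated window."""
    half = k // 2
    return [statistics.median(values[max(0, i - half): i + half + 1])
            for i in range(len(values))]


def vital_sign_features(hr, rr, spo2, k=3):
    """Mean and population std of each median-filtered vital sign."""
    feats = {}
    for name, series in (("hr", hr), ("rr", rr), ("spo2", spo2)):
        clean = median_filter_1d(series, k)
        feats[f"{name}_mean"] = statistics.mean(clean)
        feats[f"{name}_std"] = statistics.pstdev(clean)
    return feats
```

For instance, an OCR misread such as a single HR value of 250 in `[120, 121, 250, 122, 123]` is suppressed by the median filter, giving `[120.5, 121, 122, 123, 122.5]`.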

5 Pain Assessment Results

This section presents the results of estimating the scores for the NIPS pain measures (see Table 1) and of classifying an infant’s emotional state as no pain or severe pain using each pain modality and combinations of multiple modalities. A statistical comparison of pain classification performance is also provided. Note that NIPS has three levels of pain, namely no pain, moderate pain, and severe pain (see Sect. 3.3). However, due to the small number of moderate-pain instances in our limited dataset, we excluded this pain level and classified the infant’s emotional state as no pain or severe pain.

5.1 Score Estimation

Score estimation is the process of generating the NIPS scores presented in Table 1. To generate the score for each pain modality, we employed the methods presented in Sect. 4 to extract pain-relevant features. We then used the extracted features of each modality to classify its score as either 0 or 1, except for crying, which is scored as 0, 1, or 2. Our previous work [19] reports the results of estimating the scores for facial expression, body motion, and vital signs.

The score estimation for crying sound involves classifying infants’ sounds as vigorous cry (score of 2), whimper cry (score of 1), or no cry (score of 0). To generate these scores, we used the extracted LPCC and MFCC coefficients with a k-nearest neighbors (k-NN) classifier and LOSOXV. The accuracy of estimating the infants’ crying sounds as vigorous cry, whimper cry, or no cry in comparison with the ground truth was around 84%. Table 2 shows the confusion matrix.
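The following pure-Python sketch illustrates k-NN classification of cry scores evaluated with LOSOXV. It assumes each sample has already been reduced to a fixed-length feature vector (for example, the vector-quantized LPCC/MFCC representation); k, the Euclidean metric, and pooling accuracy over all samples (rather than averaging per subject) are assumptions.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, x, k=3):
    """Majority label among the k nearest training samples (Euclidean distance).
    Ties go to the label that appears first among the nearest neighbours."""
    dists = sorted((math.dist(xi, x), yi) for xi, yi in zip(train_X, train_y))
    votes = Counter(label for _, label in dists[:k])
    return votes.most_common(1)[0][0]


def loso_accuracy(X, y, subjects, k=3):
    """Leave-one-subject-out: train on all other subjects, test on the held-out one."""
    correct = 0
    for held_out in sorted(set(subjects)):
        train = [i for i, s in enumerate(subjects) if s != held_out]
        test = [i for i, s in enumerate(subjects) if s == held_out]
        train_X = [X[i] for i in train]
        train_y = [y[i] for i in train]
        for i in test:
            correct += knn_predict(train_X, train_y, X[i], k) == y[i]
    return correct / len(y)
```

Holding out every recording of a subject at once prevents the classifier from being tested on the same infant it was trained on, which is what makes LOSOXV an estimate of performance on unseen infants.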

Note that this accuracy was obtained using crying sounds recorded in a realistic clinical environment with ambient noise such as human speech, machine sounds, and other infants’ crying. Excluding episodes with significant noise from the analysis (i.e., using a clean dataset) yielded an accuracy of 90%, as discussed in [11].

5.2 Pain Classification

Pain classification is the process of classifying the infant’s emotional state as severe pain or no pain. To classify infants’ pain, we conducted two sets of experiments. The first experiment involves recognizing the emotional state of infants using a single pain indicator. In the second experiment, we combined different pain indicators for pain classification. The results of both are reported and discussed below.

In unimodal pain classification, the features from each pain modality were used individually to classify infants’ pain. Specifically, features of crying sound, facial expression, body motion, and vital signs were used separately to classify the emotional state of an infant as no pain or severe pain.

The One Measure column of Table 3 summarizes the unimodal pain classification performance. As is evident from the table, facial expression has the highest classification accuracy. This result is consistent with our previous work [19] and supports previous findings [5, 6] that facial expression is the most specific and common indicator of pain; accordingly, most pediatric pain scales use facial expression as the main indicator for assessment. Crying sound and body motion have similar classification performance, notably higher than that of vital signs. A possible explanation for the low accuracy of vital signs is that their readings are less specific to pain, since they are also sensitive to other conditions such as loud noise or underlying disease.

To combine pain modalities for the multimodal assessment, we used two schemes: thresholding and majority voting. In the thresholding scheme, we added the scores of crying sound, facial expression, body motion, state of arousal, and vital signs and then performed thresholding on the total pain score to classify the infant’s pain state (see Sect. 3.3); this scheme automates the exact process that bedside caregivers follow when assessing pain. Classifying infants’ pain using the thresholding scheme achieved an average accuracy of around 94%.

The majority-voting scheme is a decision-level fusion method that combines the outcomes (i.e., class labels) of the different modalities and chooses the majority label as the final outcome. For example, the final assessment would be severe pain for a combination of two measures labeled severe pain and one measure labeled no pain. In case of a tie, we choose the class label with the highest confidence score as the final assessment.
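The voting rule, including the confidence-based tie-break, can be sketched as follows, assuming each modality’s classifier returns a (label, confidence) pair; the label strings are hypothetical.

```python
from collections import Counter


def majority_vote(predictions):
    """Decision-level fusion of per-modality (label, confidence) predictions.

    Returns the most frequent label; on a tie, returns the tied label
    carried by the single most confident prediction.
    """
    counts = Counter(label for label, _ in predictions)
    top = max(counts.values())
    tied = {label for label, c in counts.items() if c == top}
    if len(tied) == 1:
        return tied.pop()
    best = max((p for p in predictions if p[0] in tied), key=lambda p: p[1])
    return best[0]
```

With three modalities voting severe, severe, and no pain, the result is severe pain regardless of confidence; with four modalities split two against two, the label of the most confident single classifier wins.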

The Two Measures and Three and Above columns in Table 3 present the results of assessing pain using the majority-voting scheme for eleven combinations of pain indicators (C: crying sound, F: facial expression, B: body motion, V: vital signs). As can be seen, the classification performance using the combination of behavioral indicators (CFB) was around 96%. Adding vital signs to crying sounds, facial expression, and body motion (CFBV) slightly increases the performance. These results indicate that combining multiple indicators might provide a better and more reliable pain assessment.

5.3 Statistical Comparison

Referring to Table 3, we note that different combinations of pain indicators have similar performance. For example, the difference in performance between CFB and CFBV is around 0.6%, and the difference between F and CF is around 0.3%. The purpose of this section is to determine whether the performance of two combinations differs significantly, using a statistical significance test known as the Mann-Whitney U test. The U test is a non-parametric test that compares the central tendencies of two groups. We used the U test instead of the t-test because our data (i.e., subjects’ accuracies) are not normally distributed.
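For reference, the U statistic can be computed from pooled midranks. The sketch below reports min(U1, U2) and a two-tailed p-value from the normal approximation with tie and continuity corrections. For small groups an exact test is preferable; SciPy’s `scipy.stats.mannwhitneyu` offers exact and asymptotic methods. Libraries differ in whether they report min(U1, U2) or U1, so U values are only comparable under the same convention.

```python
import math
from collections import Counter


def midranks(values):
    """1-based ranks, with tied values sharing the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of ranks i+1 .. j+1
        for m in range(i, j + 1):
            ranks[order[m]] = avg
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Return (min(U1, U2), two-tailed p) using the normal approximation."""
    n1, n2 = len(x), len(y)
    pooled = list(x) + list(y)
    r1 = sum(midranks(pooled)[:n1])
    u1 = r1 - n1 * (n1 + 1) / 2
    u2 = n1 * n2 - u1
    n = n1 + n2
    ties = sum(t**3 - t for t in Counter(pooled).values())
    var = n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1)))  # tie-corrected variance
    if var == 0:  # all values identical: no evidence of a difference
        return min(u1, u2), 1.0
    z = max(abs(u1 - n1 * n2 / 2) - 0.5, 0.0) / math.sqrt(var)  # continuity correction
    return min(u1, u2), math.erfc(z / math.sqrt(2))
```

Ties between subjects’ accuracies are what produce half-integer U values such as those reported below.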

Using the U test, we compared the pain classification performance of vital signs, which is the lowest in Table 3, with the performance of all other combinations. The U test indicated that the performance of classifying infants’ pain was significantly higher for a combination of behavioral and physiological measures (CFBV) than for physiological measures alone (V) (U = 33.5, p < 0.05, two-tailed). We also found that the performance using behavioral indicators (CFB) is significantly higher than using physiological measures (V) (U = 35.5, p < 0.05, two-tailed). These results support a previous finding [13] that physiological changes such as an increase in heart rate are less specific to pain and thus are not sufficient for pain assessment.

We also compared the pain classification of facial expression (F), which has the highest accuracy under the One Measure column in Table 3, with the pain classification of all other combinations under the Two Measures and Three and Above columns. The U test indicated no significant difference between pain classification using facial expression alone and pain classification using combinations of different pain indicators. Although the accuracy using facial expression is not statistically different from the accuracies of multiple indicators, we still believe it is important to consider multiple pain indicators when assessing infants’ pain. A multimodal system can provide a more reliable assessment and can still function when data are missing due to developmental stage, occlusion, noise, or level of activity (e.g., exhaustion).

The final statistical comparison we performed compared pain prediction using the thresholding scheme with pain prediction of CFBV using the voting scheme. No significant difference was found between the pain classification performance of these two schemes.

6 Conclusions and Future Research

This paper expands upon our previous work, which presented an approach for assessing infants’ pain using facial expression, body movement, and vital signs, by including crying sounds and state of arousal. The results of assessing infants’ pain presented in this paper are encouraging and, if further confirmed on a larger dataset, could influence and ultimately improve the current practice of assessing infants’ pain.

In terms of future research, we would like to evaluate our method on a larger dataset and extend our system to chronic pain. Another research direction is to perform feature-level fusion of all modalities’ features into a single feature vector for classification. A final research direction would be to include clinical data, such as gestational age, in the assessment process.

Notes

1. The individual degree of alertness to a stimulus.

References

[1] Brahnam, S., Chuang, C.-F., Shih, F.Y., Slack, M.R.: Machine recognition and representation of neonatal facial displays of acute pain. Artif. Intell. Med. 36(3), 211–222 (2006)

[2] Faye, P.M., De Jonckheere, J., Logier, R., Kuissi, E., Jeanne, M., Rakza, T., Storme, L.: Newborn infant pain assessment using heart rate variability analysis. Clin. J. Pain 26(9), 777–782 (2010)

[3] Fotiadou, E., Zinger, S., a Ten, W.T., Oetomo, S.B. et al.: Video-based facial discomfort analysis for infants. In: IS&T/SPIE Electronic Imaging, pp. 90290F–90290F. International Society for Optics and Photonics (2014)

[4] Gallo, A.-M.: The fifth vital sign: implementation of the neonatal infant pain scale. J. Obstet. Gynecol. Neonatal Nurs. 32(2), 199–206 (2003)

[5] Grunau, R.V.E., Craig, K.D.: Pain expression in neonates: facial action and cry. Pain 28(3), 395–410 (1987)

[6] Grunau, R.V.E., Johnston, C.C., Craig, K.D.: Neonatal facial and cry responses to invasive and non-invasive procedures. Pain 42(3), 295–305 (1990)

[7] Hummel, P., van Dijk, M.: Pain assessment: current status and challenges. Semin. Fetal Neonatal Med. 11, 237–245 (2006)

[8] Johnston, C.C., Stevens, B., Craig, K.D., Grunau, R.V.E.: Developmental changes in pain expression in premature, full-term, two- and four-month-old infants. Pain 52(2), 201–208 (1993)

[9] American Academy of Pediatrics, Committee on Fetus and Newborn, et al.: Prevention and management of pain in the neonate: an update. Pediatrics 118(5), 2231–2241 (2006)

[10] Page, G.G.: Are there long-term consequences of pain in newborn or very young infants? J. Perinat. Educ. 13(3), 10–17 (2004)

[11] Pai, C.-Y.: Automatic pain assessment from infants’ crying sounds (2016)

[12] Pal, P., Iyer, A.N., Yantorno, R.E.: Emotion detection from infant facial expressions and cries. In: 2006 IEEE International Conference on Acoustics Speech and Signal Processing Proceedings, vol. 2, pp. II-II. IEEE (2006)

[13] Pereira, A.L.D.S.T., Guinsburg, R., Almeida, M.F.B.D., Monteiro, A.C., Santos, A.M.N.D., Kopelman, B.I.: Validity of behavioral and physiologic parameters for acute pain assessment of term newborn infants. São Paulo Med. J. 117(2), 72–80 (1999)

[14] Petroni, M., Malowany, A.S., Johnston, C.C., Stevens, B.J.: Identification of pain from infant cry vocalizations using artificial neural networks (ANNs). In: SPIE’s 1995 Symposium on OE/Aerospace Sensing and Dual Use Photonics, pp. 729–738. International Society for Optics and Photonics (1995)

[15] Slater, R., Cantarella, A., Gallella, S., Worley, A., Boyd, S., Meek, J., Fitzgerald, M.: Cortical pain responses in human infants. J. Neurosci. 26(14), 3662–3666 (2006)

[16] Slater, R., Fabrizi, L., Worley, A., Meek, J., Boyd, S., Fitzgerald, M.: Premature infants display increased noxious-evoked neuronal activity in the brain compared to healthy age-matched term-born infants. Neuroimage 52(2), 583–589 (2010)

[17] Thoman, E.B.: Sleeping and waking states in infants: a functional perspective. Neurosci. Biobehav. Rev. 14(1), 93–107 (1990)

[18] Vempada, R.R., Kumar, B.S., Rao, K.S.: Characterization of infant cries using spectral and prosodic features. In: 2012 National Conference on Communications (NCC), pp. 1–5. IEEE (2012)

[19] Zamzmi, G., et al.: An approach for automated multimodal analysis of infants’ pain. In: 2016 23rd International Conference on Pattern Recognition (ICPR). IEEE (2016)

[20] Zamzmi, G., Pai, C.-Y., Goldgof, D., Kasturi, R., Sun, Y., Ashmeade, T.: Machine-based multimodal pain assessment tool for infants: A review. arXiv preprint. arXiv:1607.00331 (2016)

[21] Zhang, Z., Luo, P., Loy, C.C., Tang, X.: Facial landmark detection by deep multi-task learning. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014. LNCS, vol. 8694, pp. 94–108. Springer, Cham (2014). doi:10.1007/978-3-319-10599-4_7

© 2017 Springer International Publishing AG

Cite this paper

Zamzmi, G., Pai, CY., Goldgof, D., Kasturi, R., Sun, Y., Ashmeade, T. (2017). Automated Pain Assessment in Neonates. In: Sharma, P., Bianchi, F. (eds) Image Analysis. SCIA 2017. Lecture Notes in Computer Science, vol 10270. Springer, Cham. https://doi.org/10.1007/978-3-319-59129-2_30

DOI: https://doi.org/10.1007/978-3-319-59129-2_30

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-59128-5

Online ISBN: 978-3-319-59129-2

eBook Packages: Computer Science, Computer Science (R0)