Abstract

Feature selection and attribute reduction have been tackled in Rough Set Theory through fuzzy reducts. Recently, Goldman fuzzy reducts, which are fuzzy subsets of attributes, were introduced. In this paper, we introduce an algorithm for computing all Goldman fuzzy reducts of a decision system; this is the first algorithm reported for this purpose. Experiments over standard and synthetic datasets show that the proposed algorithm is useful for datasets with up to twenty attributes.


1 Introduction

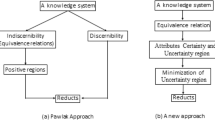

Goldman fuzzy reducts were introduced in [3] as a new kind of reduct, inspired by an idea developed by R. S. Goldman in the framework of Testor Theory [2]. The application of Goldman fuzzy reducts to supervised classification problems was also discussed in [3]. These reducts are fuzzy in the sense that each Goldman fuzzy reduct is a fuzzy subset of the set of attributes: every attribute belonging to a Goldman fuzzy reduct has an associated membership degree in that subset. This membership degree represents the discerning ability of the attribute within the Goldman fuzzy reduct.

The main contribution of the present work is a first algorithm for computing all Goldman fuzzy reducts.

This document is organized as follows. Section 2 provides basic concepts related to Goldman fuzzy reducts. Section 3 introduces the proposed algorithm for computing Goldman fuzzy reducts and reports experiments applying it to real and synthetic datasets. Finally, our conclusions are summarized in Sect. 4.

2 Goldman Fuzzy Reducts

In many data analysis applications, information and knowledge are stored and represented as a decision table which provides a convenient way to describe a finite set of objects within a universe through a finite set of attributes [5, 6].

In Rough Set Theory, a decision table is a matrix representation of a decision system; in this matrix, rows represent objects while columns specify attributes. Formally, a decision system is defined as a 4-tuple \(DS = (U, A^*_{t}=A_{t}\cup \{d\},\) \(\{ V_{a}\;|\; a \in A^*_{t}\},\{ I_{a}\;|\; a \in A^*_{t}\})\), where U is a finite non-empty set of objects, \(A^*_t\) is a finite non-empty set of attributes, \(A_t\) is the set of condition attributes, d denotes the decision attribute, \(V_a\) is a non-empty set of values of \(a\in A^*_t\), and \(I_a\): \(U \rightarrow V_a\) is a function that maps an object of U to one value in \(V_a\).

In practice, it is common that decision tables contain descriptions of a finite sample U of objects from a larger (possibly infinite) universe \(\mathcal U\), where values of descriptive attributes are always known for all objects from \(\mathcal U\), but the decision attribute is a hidden function, except for those objects from the sample U. The main problem of learning theory is to generalize the decision function to the whole universe \(\mathcal U\).

Let us define, for each attribute a in \(A_{t}\), a real-valued dissimilarity function for comparing pairs of values, \(\varphi _a: V_ a\times V_a \rightarrow [0,1]\), in such a way that 0 is interpreted as the minimum difference and 1 as the maximum possible difference.

Applying these dissimilarity functions to all possible pairs of objects belonging to different classes in DS, a [0,1]-pairwise dissimilarity matrix can be built, with one row per pair of objects and one column per attribute. We denote this dissimilarity matrix by DM. We assume that DS is consistent, that is, no pair of indiscernible objects belongs to different classes; this means that DM does not have a row containing only zeros.
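
The construction of DM can be sketched as follows. This is only an illustrative sketch: the function names `build_dm` and `is_consistent`, the list-based representation, and the toy decision system are our own assumptions and are not part of the original formulation.

```python
from itertools import combinations


def build_dm(objects, decisions, dissims):
    """Build the pairwise dissimilarity matrix DM as a list of rows.

    objects   : list of tuples of condition-attribute values (one per object)
    decisions : list of class labels aligned with `objects`
    dissims   : list of functions phi_a(x, y) -> value in [0, 1], one per attribute
    """
    dm = []
    for i, j in combinations(range(len(objects)), 2):
        if decisions[i] == decisions[j]:
            continue  # only pairs of objects from different classes yield a row
        dm.append([phi(x, y) for phi, x, y in zip(dissims, objects[i], objects[j])])
    return dm


def is_consistent(dm):
    """DS is consistent iff DM has no row containing only zeros."""
    return all(any(v != 0 for v in row) for row in dm)


def abs_diff(x, y):
    """Hypothetical dissimilarity for numeric attributes already scaled to [0, 1]."""
    return abs(x - y)


# Hypothetical toy decision system with two attributes and two classes.
objects = [(0.0, 0.5), (1.0, 0.5), (0.0, 1.0)]
decisions = ["c1", "c2", "c2"]
dm = build_dm(objects, decisions, [abs_diff, abs_diff])
print(dm)                 # one row per pair of objects from different classes
print(is_consistent(dm))
```

In this toy case only the pairs formed by the first object with each of the other two produce rows, since the second and third objects share the same class.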

We will refer to the value corresponding to row \(\rho _i\) in the column associated to attribute \(a_{j}\) in DM as \(\mu _{\rho _i}(a_{j})\).

Definition 1

(Goldman fuzzy reduct) Let \(A_t= \{a_1, a_2, ..., a_n\}\) and let \( T=\{a_{r_1}|\mu _{T}(a_{r_1}), ..., a_{r_s}|\mu _{T}(a_{r_s})\}\) be a fuzzy subset of \(A_t\) such that \(\forall p \in \{1,2,...,s\}\) \(\mu _{T}(a_{r_p}) \ne 0\). T is a Goldman fuzzy reduct with respect to DS if:

(i) \(\forall \rho _i \in DM\) (\(\rho _i\) being the i-th row of DM) \(\exists a_{r_p}|\mu _{T}(a_{r_p}) \in T\) such that \(\mu _{T}(a_{r_p}) \le \mu _{\rho _i}(a_{r_p})\).

(ii) \(\forall p \in \{1,2,...,s\}, T \setminus \{a_{r_p}|\mu _{T}(a_{r_p})\}\) does not fulfill condition (i).

(iii) \(\forall T'\) such that \(T \subset T'\) and \(supp(T) = supp(T')\) (it means that \(\forall p \in \{1,2,...,s\}\) \(\mu _{T}(a_{r_p}) \le \mu _{T'}(a_{r_p})\) and for at least one index the inequality is strict) \(T'\) does not fulfill condition (i).

We will denote the set of all Goldman fuzzy reducts of a decision system by \(\varPsi ^*(DS)\). \(\varPsi (DS)\) will denote the set of all fuzzy subsets of \(A_t\) satisfying condition (i) in Definition 1.

According to the above definition, a Goldman fuzzy reduct is a fuzzy subset of attributes which, together with the corresponding membership degrees, is able to discern all pairs of objects belonging to different classes (condition (i)). Condition (ii) means that if a fuzzy singleton \(\{a_{r_p}|\mu _{r_p}\}\) is eliminated from a Goldman fuzzy reduct, the resulting subset is no longer a Goldman fuzzy reduct. Condition (iii) means that if the membership degree of any attribute in T is increased, the resulting subset is no longer a Goldman fuzzy reduct. This definition is supported by the subset-based definition of reduct [7].
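
As a minimal sketch of condition (i), assume that a fuzzy subset of attributes is stored as a Python dict from column index to membership degree, and DM as a list of rows. Both representations, the function name `satisfies_condition_i`, and the matrix below are hypothetical choices made only for illustration.

```python
def satisfies_condition_i(T, dm):
    """Condition (i) of Definition 1: every row of DM has at least one
    attribute a in T whose membership degree T[a] does not exceed row[a]."""
    return all(any(mu <= row[a] for a, mu in T.items()) for row in dm)


# Hypothetical dissimilarity matrix with three attributes (columns 0, 1, 2).
dm = [
    [0.2, 0.0, 0.9],
    [0.0, 0.5, 0.4],
    [0.6, 0.5, 0.0],
]

print(satisfies_condition_i({0: 0.2, 1: 0.5}, dm))  # every row is discerned
print(satisfies_condition_i({0: 0.6}, dm))          # the first row is not discerned
```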

Suppose A is a finite set and \(\mathfrak {p}(A)\) is the power set of A. Let \(\mathbb {P}\) be a unary predicate on \(\mathfrak {p}(A)\). \(\mathbb {P}(S)\) stands for the statement that subset S fulfills property \(\mathbb {P}\). The values of \(\mathbb {P}\) are computed by an evaluation \(\mathfrak {e}\) with reference to certain available data, for example, a decision system. For a subset \(S \in \mathfrak {p}(A)\), \(\mathbb {P}(S)\) is true if S fulfills property \(\mathbb {P}\), otherwise, it is false. In this way, a conceptual definition of reduct is given based on an evaluation \(\mathfrak {e}\) as follows.

Definition 2

(Subset-based definition [7]). Given an evaluation \(\mathfrak {e}\) of \(\mathbb {P}\), a subset R of A is a reduct if R fulfills the following conditions:

(a) existence: \(\mathbb {P}_\mathfrak {e}(A)\).

(b) sufficiency: \(\mathbb {P}_\mathfrak {e}(R)\).

(c) minimization: \(\forall B\subset R \ \ (\lnot {\mathbb {P}_\mathfrak {e}(B))}\).

These three conditions reflect the fundamental characteristics of a reduct. The existence condition (a) ensures that a reduct of A exists. The sufficiency condition (b) expresses that a reduct R of A is sufficient for preserving the property \(\mathbb {P}\) of A. The minimization condition (c) expresses that a reduct is a minimal subset of A fulfilling property \(\mathbb {P}\), in the sense that none of the proper subsets of R fulfills property \(\mathbb {P}\).

For our convenience, we consider \(\mathfrak {P}(A)\) as the set of all fuzzy subsets of A, instead of the classical power set \(\mathfrak {p}(A)\). Besides, we consider the partial order \(\preceq \) defined in [3] instead of the classic inclusion:

Let \(t_1\), \(t_2 \in \mathfrak {P}(A)\), then we say that \(t_1 \preceq t_2\) iff

\((t_1 \cap t_2) \cup ((supp(t_1) \setminus supp(t_2))\cap t_1) \cup ((supp(t_2) \setminus supp(t_1))\cap t_2) = t_2\) (see Note 1).

In [3], it was proved that \(\preceq \) is a partial order over \(\mathfrak {P}(A)\), and also that \(T \in \varPsi (DS)\) is a Goldman fuzzy reduct with respect to DS if T is minimal for the relation \(\preceq \) defined over \(\varPsi (DS)\).

Example 1

Let \(A= \{a_1, a_2, a_3, a_4\}\), and let \(\mathfrak {P}(A)\) be the set of all subsets of A, including fuzzy subsets. Let \(t_1\), \(t_2\) and \(t_3\) \(\in \mathfrak {P}(A)\), with \(t_1= \{a_1|0.5, a_2|0.4, a_3|1\}\), \(t_2= \{a_1|0.5, a_2|0.4, a_3|1, a_4|0.6\}\), \(t_3= \{a_1|0.5, a_2|0.4, a_3|0.8, a_4|0.6\}\). According to the definition of \(\preceq \) we have, for example, that \(t_1 \preceq t_2\) and \(t_2 \preceq t_3\). The first relation holds since \((t_1 \cap t_2) \cup ((supp(t_1) \setminus supp(t_2))\cap t_1) \cup ((supp(t_2) \setminus supp(t_1))\cap t_2) = \{a_1|0.5, a_2|0.4, a_3|1\} \cup (\emptyset \cap \{a_1|0.5, a_2|0.4, a_3|1\}) \cup (\{a_4\} \cap \{a_1|0.5, a_2|0.4, a_3|1, a_4|0.6\}) = \{a_1|0.5, a_2|0.4, a_3|1,\) \( a_4|0.6\} = t_2\). The second holds since \((t_2 \cap t_3) \cup ((supp(t_2) \setminus supp(t_3))\cap t_2) \cup ((supp(t_3) \setminus supp(t_2))\cap t_3) = \{a_1|0.5, a_2|0.4, a_3|0.8, a_4|0.6\} \cup (\emptyset \cap \{a_1|0.5, a_2|0.4, a_3|1, a_4|0.6\}) \cup (\emptyset \cap \{a_1|0.5, a_2|0.4, a_3|0.8, a_4|0.6\}) = \{a_1|0.5, a_2|0.4, a_3|0.8, a_4|0.6\} = t_3\).
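
The computation in Example 1 can be checked mechanically. The following sketch assumes fuzzy subsets stored as dicts from attribute index to a positive membership degree, with the usual min/max intersection and union; intersecting a crisp set with a fuzzy set then reduces to restricting the fuzzy set to the crisp elements. The helper names (`f_intersection`, `f_union`, `restrict`, `precedes`) are ours.

```python
def f_intersection(t1, t2):
    """Fuzzy intersection (min); attributes absent from either set have degree 0."""
    return {a: min(t1[a], t2[a]) for a in t1.keys() & t2.keys()}


def f_union(t1, t2):
    """Fuzzy union (max)."""
    return {a: max(t1.get(a, 0.0), t2.get(a, 0.0)) for a in t1.keys() | t2.keys()}


def restrict(crisp, t):
    """Intersection of a crisp set with a fuzzy set: min(1, mu) = mu."""
    return {a: t[a] for a in crisp}


def precedes(t1, t2):
    """True iff t1 precedes t2 under the partial order defined in the text."""
    s1, s2 = set(t1), set(t2)
    left = f_union(
        f_union(f_intersection(t1, t2), restrict(s1 - s2, t1)),
        restrict(s2 - s1, t2),
    )
    return left == t2


# The three fuzzy subsets of Example 1 (attribute a_k is stored under key k).
t1 = {1: 0.5, 2: 0.4, 3: 1.0}
t2 = {1: 0.5, 2: 0.4, 3: 1.0, 4: 0.6}
t3 = {1: 0.5, 2: 0.4, 3: 0.8, 4: 0.6}

print(precedes(t1, t2), precedes(t2, t3))  # both relations of Example 1
print(precedes(t3, t2))                    # the converse does not hold
```

Under this order, a subset precedes another one when the latter adds attributes or lowers membership degrees, so minimal elements use few attributes with high membership degrees.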

Let A be, as before, the set of condition attributes in DS, and let \(S=\{a_{r_1}|\mu _{r_1}, a_{r_2}|\mu _{r_2}, ..., a_{r_s}|\mu _{r_s}\}\) be a fuzzy subset of A (\(0< \mu _{r_p} \le 1\), \(p \in \{1,2,...,s\}\)). Let \(A^o=\{a_1|\mu ^o_1, a_2|\mu ^o_2, ..., a_n|\mu ^o_n\}\), where \(\mu ^o_j = \min \{\mu _{\rho _i}(a_{j}) \;|\; \rho _i \in DM,\ \mu _{\rho _i}(a_{j}) \ne 0\}\) is the minimum non-zero value in column j of DM, \(1 \le j \le n\).

Let us consider the following predicate \(\mathbb {P}\):

\(\mathbb {P}(S) \equiv \forall \rho _i \in DM \ \exists a_{r_p}|\mu _{r_p} \in S\) such that \(\mu _{r_p} \le \mu _{\rho _i}(a_{r_p})\).

Notice that \(A^o\) fulfills the property \(\mathbb {P}\) by construction, unless there exists a zero row in DM, but this is not possible since we have assumed that DS is consistent. Then, we have \(\mathbb {P}_\mathfrak {e}(A^o)\).
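
To make the construction of \(A^o\) concrete, the sketch below builds it from a hypothetical matrix and checks the predicate \(\mathbb {P}\). The names `build_a_o` and `predicate_p` and the dict/list representations are our assumptions; columns without any non-zero value are simply skipped, which is a choice of this sketch rather than something stated in the text.

```python
def build_a_o(dm):
    """A^o: each attribute paired with the minimum non-zero value of its column."""
    a_o = {}
    for j in range(len(dm[0])):
        nonzero = [row[j] for row in dm if row[j] != 0]
        if nonzero:  # assumption of this sketch: all-zero columns are left out
            a_o[j] = min(nonzero)
    return a_o


def predicate_p(S, dm):
    """P(S): every row has some attribute a in S with S[a] <= row[a]."""
    return all(any(mu <= row[a] for a, mu in S.items()) for row in dm)


# Hypothetical consistent matrix (no all-zero row).
dm = [
    [0.2, 0.0, 0.9],
    [0.0, 0.5, 0.4],
    [0.6, 0.5, 0.0],
]

a_o = build_a_o(dm)
print(a_o)
print(predicate_p(a_o, dm))
```

Every row has a non-zero entry in some column j, and that entry is at least the column minimum \(\mu ^o_j\), which is why the predicate holds for \(A^o\) whenever DM has no zero row.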

On the other hand, let \( T=\{a_{r_1}|\mu _{T}(a_{r_1}), ..., a_{r_s}|\mu _{T}(a_{r_s})\}\) be a Goldman fuzzy reduct, then from condition (i) in Definition 1 it follows that T also has the property \(\mathbb {P}\), i.e. \(\mathbb {P}_\mathfrak {e}(T)\). Finally, taking into account that minimal elements according to \(\preceq \) in \(\varPsi (DS)\) are Goldman fuzzy reducts, it follows that \(\forall B \ne T ;\; B \preceq T \; \implies [\lnot {\mathbb {P}_\mathfrak {e}(B)]}\).

Then we have that if T is a Goldman fuzzy reduct, it satisfies Definition 2.

Example 2

Consider the following matrix:

For this matrix the set of Goldman fuzzy reducts is \(\varPsi ^{*}(BM) = \{\{a_1 | 0.2\}\), \(\{a_2|0.2\}\), \(\{a_3|0.7\}\), \(\{a_1|0.3, a_2|0.5\}\), \(\{a_2|0.4, a_3|0.9\}\), \(\{a_2|0.5, a_3|0.8\}\), \(\{ a_1|0.5\), \(a_2|0.5\), \(a_3|0.9\}\}\)

It is not difficult to prove that Definition 1 is equivalent to the following definition, which is easier to verify.

Definition 3

\( T=\{a_{r_1}|\mu _{r_1}, a_{r_2}|\mu _{r_2}, ..., a_{r_s}|\mu _{r_s}\}\) is a Goldman fuzzy reduct with respect to DS iff T satisfies condition (i) of Definition 1 and \( \forall a_{r_i} \in supp(T) \ \exists \rho _j\) in \(DM : [\mu _T(a_{r_i}) = \mu _{\rho _j}(a_{r_i}) \wedge \forall (p\ne i) \mu _T(a_{r_p}) > \mu _{\rho _j}(a_{r_p})]\).

Definition 3 indicates that each attribute of a Goldman fuzzy reduct, together with its membership degree, is indispensable for covering some row in DM. This necessary condition can be verified for each attribute at the same time as condition (i) of Definition 1, at a low additional cost. Moreover, using Definition 3, conditions (ii) and (iii) of Definition 1 can be verified at a very low computational cost.
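
A direct check of Definition 3 can be sketched as follows, under the same illustrative assumptions as before (fuzzy subsets as dicts from column index to membership degree, DM as a list of rows, a hypothetical matrix). The function name is ours, and the exact equality test on floating-point values is adequate only because the membership degrees are taken verbatim from the matrix.

```python
def is_goldman_fuzzy_reduct(T, dm):
    """Check Definition 3 for the fuzzy subset T against the matrix dm."""
    # Condition (i): every row is discerned by some attribute of T.
    if not all(any(mu <= row[a] for a, mu in T.items()) for row in dm):
        return False
    # Each attribute needs a witness row: its degree equals the row value,
    # and every other attribute of T has a degree strictly above the row value.
    for a, mu in T.items():
        has_witness = any(
            row[a] == mu and all(T[b] > row[b] for b in T if b != a)
            for row in dm
        )
        if not has_witness:
            return False
    return True


# Hypothetical matrix with three attributes.
dm = [
    [0.2, 0.0, 0.9],
    [0.0, 0.5, 0.4],
    [0.6, 0.5, 0.0],
]

print(is_goldman_fuzzy_reduct({0: 0.2, 1: 0.5}, dm))          # both attributes are needed
print(is_goldman_fuzzy_reduct({0: 0.2, 1: 0.5, 2: 0.4}, dm))  # attribute 2 has no witness row
```

In the first case, raising either degree or dropping either attribute leaves a row undiscerned, which agrees with conditions (ii) and (iii) of Definition 1.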

3 GFR Algorithm

The proposed algorithm, called GFR, is inspired by the MSLC algorithm [4] for computing reducts, which is based on the binary discernibility matrix.

GFR is able to compute all Goldman fuzzy reducts of a decision table, but it can also be used to find just one reduct, or a certain number of them.

First, the search space is conveniently ordered so that the algorithm can go through all possible fuzzy subsets of attributes, deciding whether or not each one is a Goldman fuzzy reduct, while the pruning strategy discards some subsets from the analysis.

It is not difficult to prove that if \(\rho _i\) and \(\rho _j\) are rows of DM such that \(\forall p\in \{1,2,...,n\} \ \mu _{\rho _i}(a_p) \le \mu _{\rho _j}(a_p)\), and for at least one attribute the inequality is strict, then \(\rho _j\) is not needed for computing Goldman fuzzy reducts. In this case we say that \(\rho _j\) is a superfluous row. Thus, we can filter DM by eliminating all superfluous rows. The resulting matrix is called the basic matrix and is denoted by BM.

Notice that, since only superfluous rows are eliminated, the Goldman fuzzy reducts computed from BM are exactly the same as those computed from DM. Moreover, a direct consequence of the last fact is that Definition 3 also applies for BM.
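
The filtering step can be sketched as a quadratic scan over the rows. The function name `basic_matrix` and the example matrix are hypothetical. Dropping exact duplicate rows first is an extra assumption of this sketch: duplicates are not superfluous under the strict definition, but keeping one copy does not change which subsets satisfy condition (i).

```python
def basic_matrix(dm):
    """Remove superfluous rows from DM, returning the basic matrix BM."""
    # Assumption of this sketch: keep a single copy of repeated rows.
    rows = list(dict.fromkeys(tuple(r) for r in dm))

    def makes_superfluous(small, big):
        """True iff `small` is componentwise <= `big` and differs from it."""
        return small != big and all(s <= b for s, b in zip(small, big))

    return [
        list(r)
        for r in rows
        if not any(makes_superfluous(other, r) for other in rows)
    ]


# Hypothetical dissimilarity matrix: the second row is componentwise >= the
# first one (strictly in column 1), and the fourth row repeats the first.
dm = [
    [0.2, 0.0, 0.9],
    [0.2, 0.3, 0.9],
    [0.0, 0.5, 0.4],
    [0.2, 0.0, 0.9],
]

bm = basic_matrix(dm)
print(bm)
```

Any fuzzy subset that discerns the first row through some attribute also discerns the second row through the same attribute, which is why the second row can be dropped.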

The GFR algorithm traverses the search space in ascending order according to the Boolean representation of the attribute subsets, and the membership degrees in each column are also considered in ascending order. Let \(m_i\) and \(M_i\) be, respectively, the minimum and the maximum values for the attribute \(a_i\) in BM. Then:

(1) The first fuzzy subset of attributes in the order will be \(\{a_n|m_n\}\) corresponding to the Boolean tuple 00...01 with the minimum possible membership degree for the attribute \(a_n\).

(2) The last fuzzy subset of attributes in the order will be \( \{a_{1}|M_{1}, a_{2}|M_{2}, ...,\) \( a_{n}|M_{n}\}\), corresponding to the Boolean tuple 11...1 with the maximum possible membership degrees for each attribute.

(3) Given a fuzzy subset of attributes \(T=\{a_{r_1}|\mu _{T}(a_{r_1}), ..., a_{r_s}|\mu _{T}(a_{r_s})\}\); \(r_1< ... < r_s\), the next fuzzy subset in the order is calculated as follows:

(a) Find k such that \(k=max\{ j\ | \ \mu _{T}(a_{r_j}) \ne M_{r_j}\}\), k is the greatest index among the attributes in T for which the membership degree is not the maximum.

(b) If \(k < s\) then for all attributes from \(a_{r_{k+1}}\) to \(a_{r_s}\) in the current combination, the corresponding minimum membership degree \(m_{r_j} (j= k+1,...,s)\) is assigned. In any case, for \(a_{r_k}\) the next value (in ascending order) of the membership degrees in the corresponding column of BM is assigned.

(c) If \(\forall j \in \{1,...,s\} \ \mu _T(a_{r_j}) = M_{r_j}\) then the next combination of attributes is generated and to each attribute the corresponding minimum membership degree is assigned.
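
The ordering described in steps (1) to (3) can be sketched as a generator without any pruning. We assume here that the candidate membership degrees of an attribute are the distinct non-zero values of its column in BM, and that the Boolean tuple is read with \(a_1\) as the most significant bit; the function name `traversal` and the matrix are hypothetical. Steps (a) and (b) behave like an odometer in which the last attribute varies fastest, which is the order produced by `itertools.product`.

```python
from itertools import product


def traversal(bm):
    """Yield every fuzzy subset (dict: column index -> degree) in traversal order."""
    n = len(bm[0])
    # Assumption: candidate degrees are the distinct non-zero column values.
    values = [sorted({row[j] for row in bm if row[j] != 0}) for j in range(n)]
    for mask in range(1, 2 ** n):  # Boolean tuples 00...01 up to 11...1
        support = [j for j in range(n) if (mask >> (n - 1 - j)) & 1]
        if any(not values[j] for j in support):
            continue  # an all-zero column offers no admissible degree
        # Odometer over the degrees: the last attribute changes fastest.
        for degrees in product(*(values[j] for j in support)):
            yield dict(zip(support, degrees))


# Hypothetical 3-column matrix with three distinct non-zero values per column.
bm = [
    [0.2, 0.5, 0.9],
    [0.3, 0.2, 0.8],
    [0.5, 0.4, 0.7],
]

subsets = list(traversal(bm))
print(len(subsets))
print(subsets[0], subsets[-1])
```

For this hypothetical matrix the generator yields 9 singletons, 27 pairs and 27 triples, starting at the last attribute with its minimum degree and ending with all attributes at their maximum degrees.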

Example 3

The traversal order of GFR over the search space for the matrix BM in Example 2 comprises the 63 combinations shown in Table 1.

The pruning strategy of the GFR algorithm is based on the following facts:

(1) If T is a Goldman fuzzy reduct and \( T \subseteq T'\) with \(supp(T)=supp(T')\) and \(T' \ne T\), then \(T'\) is not a Goldman fuzzy reduct.

(2) Let \(T=\{a_{r_1}|\mu _{T}(a_{r_1}), ..., a_{r_s}|\mu _{T}(a_{r_s})\}\) be a fuzzy subset of attributes. We say that \(a_{r_j}\) covers a row \({\rho _i}\) of BM iff \(\mu _T(a_{r_j}) \le \mu _{\rho _i}(a_{r_j})\) and \(\forall \ p < j \) \(\mu _T(a_{r_p}) > \mu _{\rho _i}(a_{r_p})\). We say that \(a_{r_j}\) generates redundancy if it does not cover any row of BM, or if the number of rows that cannot be covered without using attributes subsequent to \(a_{r_j}\) is less than the number of attributes after it. The latter means that one of the attributes subsequent to \(a_{r_j}\) does not cover any row.

If T is not a Goldman fuzzy reduct, but it satisfies condition (i) in Definition 1, then all the combinations of attributes that follow in the order and maintain the same membership degree in the attribute that generates redundancy with the smallest index are not Goldman fuzzy reducts.

(3) If T does not fulfill condition (i) in Definition 1 and \( T \subseteq T'\) with \(supp(T)=supp(T')\), then \(T'\) also does not fulfill condition (i) in Definition 1.

(4) If \(T=\{a_{r_p}|\mu _T(a_{r_p})\}\) fulfills condition (i) in Definition 1, then T is a Goldman fuzzy reduct, and consequently \(a_{r_p}\) does not appear in any other Goldman fuzzy reduct with this membership degree \(\mu _T(a_{r_p})\).

(5) If \(T=\{a_{r_p}|\mu _T(a_{r_p})\}\) does not fulfill condition (i) in Definition 1, then no singleton \(\{a_{r_p}|\mu _{r_p}\}\) is a Goldman fuzzy reduct.
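
The notion of covering used in fact (2) can be illustrated with a short sketch: each row is assigned to the first attribute of T, in index order, whose membership degree does not exceed the row value. This covers only the first redundancy condition (an attribute that covers no row) and is not the pruning procedure itself; the function name `rows_covered_by` and the matrix are hypothetical.

```python
def rows_covered_by(T, bm):
    """Map each attribute of T to the indices of the rows it covers.

    A row is covered by the first attribute (in ascending index order) whose
    degree in T is <= the row value; all earlier attributes exceed the row value.
    """
    order = sorted(T)
    covered = {a: [] for a in order}
    for i, row in enumerate(bm):
        for a in order:
            if T[a] <= row[a]:
                covered[a].append(i)
                break  # later attributes do not cover this row
    return covered


# Hypothetical basic matrix with three attributes.
bm = [
    [0.2, 0.0, 0.9],
    [0.0, 0.5, 0.4],
    [0.6, 0.5, 0.0],
]

covered = rows_covered_by({0: 0.2, 1: 0.5, 2: 0.4}, bm)
print(covered)
# Attributes that cover no row generate redundancy in the sense of fact (2).
print([a for a, rows in covered.items() if not rows])
```

Here the third attribute covers no row, so this fuzzy subset satisfies condition (i) without being a Goldman fuzzy reduct.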

The GFR algorithm is as follows:

3.1 Experimental Results

To illustrate the behavior of the proposed algorithm, ten datasets were selected from the UCI Machine Learning Repository [1]. For each dataset (decision system), we computed its dissimilarity matrix and then its basic matrix, and applied the proposed GFR algorithm to this basic matrix. All experiments were carried out on an Intel(R) Core(TM) Duo CPU T5800 @ 2.00 GHz 64-bit system with 4 GB of RAM running Windows 10. Table 2 contains information about the selected datasets, together with the number of Goldman fuzzy reducts and the runtime of our algorithm for each dataset.

As we can see in Table 2, the number of rows in the basic matrix (see the BM rows column) highly influences the time needed by our algorithm for computing all the Goldman fuzzy reducts. For this reason, in a second experiment, we evaluated the scalability of GFR. For this experiment we randomly generated a basic matrix with 20 rows and 5 columns (attributes), where each cell has a value between 0.0 and 1.0. We then increased the number of columns of this matrix by adding one column at a time, up to 20 columns.

Table 3 shows the results of this experiment. For each generated matrix, it includes the number of Goldman fuzzy reducts computed and the time required (in seconds). Figure 1 shows a graph of runtime (in seconds) vs. number of attributes, where the exponential dependence of the runtime on the number of attributes can be clearly seen.

Since for GFR the size of the search space is \( \prod _{i=1}^{n}(d_{i}+1)\), where \(d_i\) is the number of different non-zero values in column i of BM, from Fig. 1 we can see that for more than 20 attributes the time needed by the algorithm grows drastically. In addition, from Table 2 we observe that the runtime does not depend only on the number of attributes or on the number of rows in the basic matrix. In general, the runtime for computing Goldman fuzzy reducts depends on the number of attributes, objects and classes, as well as on the number of different non-zero values in each column of BM.
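
As a small worked illustration of the search-space formula, the sketch below evaluates \(\prod _{i=1}^{n}(d_{i}+1)\) for a hypothetical matrix. The function name `search_space_size` and the matrix are our own; the product also counts the empty subset, so the number of non-empty fuzzy subsets is one less.

```python
from math import prod


def search_space_size(bm):
    """Product over columns of (d_i + 1), with d_i the number of distinct
    non-zero values in column i of BM."""
    n = len(bm[0])
    d = [len({row[j] for row in bm if row[j] != 0}) for j in range(n)]
    return prod(di + 1 for di in d)


# Hypothetical matrix: three columns, each with three distinct non-zero values.
bm = [
    [0.2, 0.5, 0.9],
    [0.3, 0.2, 0.8],
    [0.5, 0.4, 0.7],
]

size = search_space_size(bm)
print(size)      # (3 + 1) ** 3
print(size - 1)  # non-empty fuzzy subsets

# Upper bound for a 20-row, 20-column matrix whose columns hold 20 distinct values.
print(21 ** 20)
```

With three distinct values per column and three columns, the count of non-empty subsets is 63; the last line shows how quickly the bound grows when both the number of columns and the number of distinct values per column reach 20.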

4 Conclusions

In this paper, the GFR algorithm was proposed as a first solution to the problem of computing all Goldman fuzzy reducts. Based on our experiments, we conclude that the proposed algorithm solves this problem in a reasonable time for decision systems with no more than 20 attributes.

Since GFR is the first algorithm for computing Goldman fuzzy reducts, it opens a new line of work: the search for more efficient algorithms for computing all Goldman fuzzy reducts, which we consider mandatory future work.

Notes

1. Since \(supp(t_1)\) and \(supp(t_2)\) are not fuzzy, \(\setminus \) denotes the classic set difference operation in Set Theory; on the other hand, \(\cup \) and \(\cap \) are, respectively, the union and intersection operations as classically defined in Fuzzy Set Theory.

References

1. Bache, K., Lichman, M.: UCI Machine Learning Repository. http://archive.ics.uci.edu/ml. Irvine, CA, University of California, School of Information and Computer Science (2013)

2. Goldman, R.S.: Problems of fuzzy test theory. Avtomat. Telemech. 10, 146–153 (1980). (in Russian)

3. Lazo-Cortés, M.S., Martínez-Trinidad, J.F., Carrasco-Ochoa, J.A.: A glance to the Goldman's testors from the point of view of rough set theory. In: Martínez-Trinidad, J.F., Carrasco-Ochoa, J.A., Ayala-Ramírez, V., Olvera-López, J.A., Jiang, X. (eds.) MCPR 2016. LNCS, vol. 9703, pp. 189–197. Springer, Cham (2016). doi:10.1007/978-3-319-39393-3_19

4. Lazo-Cortés, M.S., Martínez-Trinidad, J.F., Carrasco-Ochoa, J.A., Sanchez-Diaz, G.: A new algorithm for computing reducts based on the binary discernibility matrix. Intell. Data Anal. 20(2), 317–337 (2016)

5. Pawlak, Z.: Rough sets. Int. J. Comput. Inform. Sci. 11(5), 341–356 (1982)

6. Pawlak, Z.: Rough Sets: Theoretical Aspects of Reasoning About Data. Kluwer Academic Publishers, Dordrecht (1991)

7. Yao, Y.Y.: The two sides of the theory of rough sets. Knowl. Based Syst. 80, 67–77 (2015)


© 2017 Springer International Publishing AG

About this paper

Cite this paper

Carrasco-Ochoa, J.A., Lazo-Cortés, M.S., Martínez-Trinidad, J.F. (2017). An Algorithm for Computing Goldman Fuzzy Reducts. In: Carrasco-Ochoa, J., Martínez-Trinidad, J., Olvera-López, J. (eds) Pattern Recognition. MCPR 2017. Lecture Notes in Computer Science(), vol 10267. Springer, Cham. https://doi.org/10.1007/978-3-319-59226-8_1


DOI: https://doi.org/10.1007/978-3-319-59226-8_1


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-59225-1

Online ISBN: 978-3-319-59226-8

eBook Packages: Computer Science, Computer Science (R0)