Abstract

Trace clustering techniques are a set of approaches for partitioning traces or process instances into similar groups. Typically, this partitioning is based on certain patterns or similarity between the traces, or done by discovering a process model for each cluster of traces. In general, however, it is likely that clustering solutions obtained by these approaches will be hard to understand or difficult to validate given an expert’s domain knowledge. Therefore, we propose a novel semi-supervised trace clustering technique based on expert knowledge. Our approach is validated using a case in tablet reading behaviour, but is widely applicable in other contexts. In an experimental evaluation, the technique is shown to provide a beneficial trade-off between performance and understandability.

1 Introduction

Process mining is a research field at the crossroads of data mining and business process management. Its main reason for existence stems from the vast amount of data that is generated in modern information systems, and the desire of organizations to extract meaningful insights from this data. Generally speaking, three subdomains exist within process mining: process discovery, a set of techniques concerned with the elicitation of process models from event data; conformance checking, a set of techniques that aim to quantify the conformance between a certain process model and a certain event log; and process enhancement, a set of approaches that aim to extend existing or discovered process models by using other data attributes such as resource or timing information [18].

Trace clustering, or the partitioning of traces of behaviour in an event log into separate clusters, is mainly related to the process discovery sub-domain of process mining. Process discovery techniques aim to discover a process model from an event log. However, when this event log consists of real-life behaviour, it is likely to contain highly varied and complex behavioural structures. This leads to a lower quality of the process models which can be discovered. Therefore, it is desirable to first split the event log into several different clusters of traces, and then discover a process model for each trace cluster separately. By doing so, the goal is to achieve a higher quality of the process models.

From an application-oriented point of view, trace clustering techniques have proven to be a valuable asset in multiple contexts, with applications ranging from incident management to health care [10, 11]. Nonetheless, trace clustering, like traditional clustering, is hindered by its unsupervised nature: it is often hard to validate a clustering solution, even for domain experts. This problem has been recognized in [7], in which an approach is proposed to increase understandability of trace clustering solutions by extracting short and accurate explanations as to why a certain trace is included in a certain cluster.

Although explaining cluster solutions to domain experts is a valid approach for enhancing the understandability of trace clustering solutions, it remains a post-processing step. A potentially better approach for improving trace clustering solutions is to directly take an expert’s opinion into account while performing the clustering. This is the core contribution of this paper: an approach for incorporating expert knowledge into a trace clustering is proposed. In a real-life case study, based on behaviour of newspaper readers, our approach is shown to lead to clustering solutions that are more in line with the expert’s expectations, without substantially diminishing the quality of the clustering solution.

In light of this objective, the rest of this paper is structured as follows: in Sect. 2, the field of trace clustering is described and potential approaches for the incorporation of expert knowledge are investigated. Furthermore, Sect. 3.1 describes our proposed approach. In Sect. 4, the motivating case study is outlined, illustrating a specific situation in which expert knowledge is used to enhance the justifiability of trace clustering solutions. Subsequently, the contribution of our novel approach is evaluated in Sect. 5. Finally, a conclusion and outlook towards future work is provided in Sect. 6.

2 Potential Approaches for Incorporating Expert Knowledge

In this section, a short overview of trace clustering is provided. Then, we conceptually discuss how expert knowledge can be represented and how it can be incorporated in a trace clustering approach. The three distinct categories delineated here are: expert-driven initialization, constrained clustering, and complete expert clustering. Finally, we describe how our approach fits into the methodology of [12].

2.1 Existing Approaches for Traditional Trace Clustering

Typically, the starting point of a trace clustering exercise is an event log, which is a set of traces. Each trace is a registered series of events (instantiations of activities), possibly along with extra information on the event, such as the resource that executed the event or time information. A trace clustering is then a partitioning of an event log into different clusters such that each trace is assigned to a single cluster.
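As a minimal sketch of these definitions, an event log can be represented as a list of activity sequences and a clustering as a list of index groups. The activity names and the helper names `distinct_process_instances` and `is_partition` are illustrative assumptions, not part of the original work.

```python
from collections import Counter

# A trace is a sequence of activity labels; an event log is a list of traces.
# The activity names below are made up for illustration.
event_log = [
    ("launch", "read_sports", "quit"),
    ("launch", "skip_news", "read_sports", "quit"),
    ("launch", "read_sports", "quit"),
]


def distinct_process_instances(log):
    """Map each distinct activity sequence to its frequency in the log."""
    return Counter(tuple(trace) for trace in log)


def is_partition(log_size, clusters):
    """Check that every trace index 0..log_size-1 sits in exactly one cluster."""
    seen = [index for cluster in clusters for index in cluster]
    return sorted(seen) == list(range(log_size))
```

Here `is_partition(3, [[0, 2], [1]])` holds, whereas a grouping that lists a trace twice or omits one does not qualify as a trace clustering in the sense used above.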

A wide variety of trace clustering techniques exist. Broadly speaking, there are three main categories of trace clustering techniques: those based on direct instance-level similarity, those based on the mapping of traces onto a vector space model, and those based on process model quality. With regards to direct instance-level similarity, i.e. the direct quantification of the similarity between two traces, an adapted Levenshtein distance could be computed as in [3]. An alternative set of approaches are those where the behaviour present in each trace is mapped onto a vector space of features [4]. The third category regards process model quality as an important goal for trace clustering. An approach based on the active incorporation of the process model quality of process models discovered from each cluster has been described in [10].
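To make the first category concrete, the sketch below computes a plain unit-cost Levenshtein distance between two traces, treating each activity as one symbol. This is only an assumption-laden illustration: the adapted distance of [3] uses different edit costs, which are not reproduced here, and the function name is hypothetical.

```python
def trace_edit_distance(trace_a, trace_b):
    """Unit-cost Levenshtein distance between two activity sequences."""
    # previous[j] holds the distance between the processed prefix of trace_a
    # and the first j activities of trace_b.
    previous = list(range(len(trace_b) + 1))
    for i, act_a in enumerate(trace_a, start=1):
        current = [i]
        for j, act_b in enumerate(trace_b, start=1):
            substitution = previous[j - 1] + (act_a != act_b)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1]
```

A pairwise distance matrix built this way could then be fed to any distance-based clustering algorithm.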

2.2 Incorporating Expert Knowledge: Expert-Driven Initialization

A first potential approach is based on expert-driven initialization. It is conceptually related to semi-supervised learning [2], in the sense that the user is expected to manually assign a small subset of traces to a cluster, after which an automatic clustering algorithm extends the clusters to the entire dataset. The approach is especially useful for centroid-based algorithms like k-means, which often rely on a random initialization of seeds in order to commence the clustering. By setting these seeds based on the domain knowledge of an expert instead of randomly, the confidence of an expert in the solution should increase, and with it the justifiability of the solution.

With regards to the three types of trace clustering described in Sect. 2.1, it is clear that including expert-driven knowledge directly in the similarity between traces is not attainable. If the underlying technique used to cluster the traces is seed-based, such as k-means, then the expert-driven pre-defined clusters could be chosen as seeds. The same observation holds for clustering traces which have been mapped onto a vector space model, if this vector space representation is clustered in a seed-based way. If a hierarchical technique is preferred for clustering the vector space representation, the incorporation is less straightforward. Finally, a process model-driven trace clustering technique that is based on initialization does not exist yet.

2.3 Incorporating Expert Knowledge: Constrained Clustering

A second potential approach is the use of constraints. Rather than provide a starting subset of clustered traces, the expert provides a set of constraints to which the clustering solution is expected to conform (either strictly or at a penalty). Typical examples of expert constraints are must-link constraints, which indicate that two elements must be included in the same cluster, and cannot-link constraints, indicating that two elements should not be clustered together [20].

In the specific application of constrained clustering to traces, a general must-link constraint that applies to all algorithms is related to process instances and distinct process instances: trace clustering techniques that construct clusters based on process instances should ensure that all process instances pertaining to the same distinct process instance are included in the same cluster.
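The two constraint types, and the general must-link constraint between process instances of the same distinct process instance, can be sketched as follows. The data layout (a dictionary from trace id to cluster label) and both function names are assumptions made for illustration only.

```python
def violated_constraints(assignment, must_link, cannot_link):
    """Return the constraints that a clustering breaks.

    assignment maps a trace id to a cluster label; must_link and
    cannot_link are lists of (trace id, trace id) pairs.
    """
    broken = []
    for a, b in must_link:
        if assignment[a] != assignment[b]:
            broken.append(("must-link", a, b))
    for a, b in cannot_link:
        if assignment[a] == assignment[b]:
            broken.append(("cannot-link", a, b))
    return broken


def dpi_must_links(log):
    """Link every trace to the first trace with the same activity sequence."""
    first_seen = {}
    pairs = []
    for trace_id, trace in enumerate(log):
        key = tuple(trace)
        if key in first_seen:
            pairs.append((first_seen[key], trace_id))
        else:
            first_seen[key] = trace_id
    return pairs
```

A strict approach would reject any solution for which `violated_constraints` is non-empty, while a penalty-based approach could add a cost per broken constraint to the clustering objective.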

2.4 Incorporating Expert Knowledge: Complete Expert Solution

A final possible input type of expert knowledge can be a complete clustering solution based on the expert’s expectations. If the expert’s expectations can be captured using an automatic clustering technique, the availability of such a complete solution is not far-fetched. In other cases, the opinion of a human expert could be based on features of the traces which are not incorporated into a trace clustering solution. In such a case, a clustering obtained with the use of these features could be a useful starting point for a clustering exercise. Two different approaches can be conceived to deal with this complete expert clustering. On the one hand, one could apply a trace clustering technique on the event log from scratch to obtain a regular trace clustering solution. Then, the solution of the expert and the regular trace clustering solution can be combined to create a consensus clustering. The idea is to quantify how often two elements are clustered together in different solutions, and then construct a final partitioning based on this quantification. Consensus clustering has been used in a multiple-view trace clustering technique [1]. Nonetheless, consensus clustering is mainly useful for combining a higher number of different solutions. If there are only two solutions to combine, creating a consensus may prove difficult. On the other hand, in a case where a single complete expert clustering is available, a different strategy could be to take this complete clustering as a starting point: re-cluster the traces which are grouped together by the expert, but whose grouping hinders the performance of the clustering on other objectives, such as process model quality.
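The quantification step of consensus clustering can be illustrated with a co-association count: for every pair of elements, the share of solutions that place them in the same cluster. The sketch below is a generic illustration under the assumption that each solution is a dictionary from element to cluster label; it is not the technique of [1].

```python
from itertools import combinations


def co_association(solutions):
    """Fraction of solutions in which each pair of elements shares a cluster."""
    elements = sorted(solutions[0])
    counts = {pair: 0 for pair in combinations(elements, 2)}
    for solution in solutions:
        for a, b in counts:
            if solution[a] == solution[b]:
                counts[(a, b)] += 1
    return {pair: count / len(solutions) for pair, count in counts.items()}
```

With only an expert solution and one regular solution, every pair scores 0, 0.5 or 1, so all disagreements end in a tie at 0.5. This is one way to see why a consensus over just two solutions is hard to construct.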

2.5 Organizational Aspects

According to PM\(^{2}\), a process mining methodology, four types of roles are typically involved in a process mining project: business owners, business experts, system experts and process analysts. Ideally, the expert knowledge comes from a business expert who knows the business aspect and executions of the processes [12]. The expectations of the experts are to be captured in the Extraction stage, when process knowledge is transferred from the business expert to the process analyst, who will be performing the process discovery and trace clustering.

The approach presented in the next section of this paper is based on incorporating expert knowledge starting from a complete expert solution. This is done by re-clustering the event log using a process model-driven approach. Initialization- and constraint-based approaches remain open for future research.

3 Incorporating Expert Knowledge in Trace Clustering

3.1 Proposed Approach

In this section, a novel trace clustering algorithm is described, specifically designed to be driven by expert knowledge (see Note 1). Corresponding to Sect. 2.4, a trace clustering technique that starts from a complete expert solution is described. The technique is based on the multi-objective approach described in [6].

In general, the technique consists of three phases:

- Phase 1. An initialization phase, during which the clusters are initialized.

- Phase 2. A trace assignment phase, during which traces are assigned to the cluster which leads to the best results, if that best result is sufficiently good.

- Phase 3. A resolution phase, during which traces that were not assigned in the previous phase are either included in an additional separate cluster, or in the best possible existing cluster.

Phase 1: Initialization. The first phase is an initialization phase, which is described in Algorithm 1. The algorithm is structured as follows: first, a set of clusters is built by extracting the number of different clusters in the pre-clustered event log. Then, each distinct process instance (dpi) is added to its respective cluster. Next, traces are removed from each cluster to increase the process model quality of each cluster. Nonetheless, a certain percentage of dpis per cluster can be fixed: dpis will not be removed when fewer traces are left in the cluster than a FixedPercentage given by the user. For each of the clusters, traces are removed in order from least frequent dpi to most frequent dpi. Dpi frequency, or distinct process instance frequency, is the frequency with which a certain process instance (trace) is present in the event log. For each trace, starting with the least frequent, a process model PM is mined and the trace is removed if the trace metric value and cluster metric value are not above the declared thresholds. The trace metric value is the result of the metric computed on the mined process model using only the trace that is under scrutiny. The cluster metric value is obtained by calculating this result using all traces in the cluster, including the trace to be added. Two options are possible: if both values are above the threshold, the cluster is of sufficient quality, and no more traces are removed from the cluster. If this is not the case, the trace is removed and added to the traces that will be assigned to a cluster in a later phase.
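The pruning loop of Phase 1 can be sketched as below. This is a reading of the prose description, not a transcription of Algorithm 1. The `evaluate` callback, which stands in for mining a process model and computing the cluster and trace metric values, is hypothetical, as are the assumptions that the fixed percentage is counted in traces rather than dpis and that a value equal to the threshold counts as sufficient.

```python
def initialize_clusters(expert_clusters, frequency, evaluate,
                        cluster_threshold, trace_threshold, fixed_percentage):
    """Sketch of Phase 1: prune each expert cluster, least frequent dpi first.

    expert_clusters: dict mapping a cluster label to a list of dpis (tuples).
    frequency: dict mapping each dpi to its number of occurrences in the log.
    evaluate: hypothetical callback (traces, dpi) -> (cluster_value,
              trace_value) that mines a model on `traces` and scores it.
    """
    clusters, to_assign = {}, []
    for label, dpis in expert_clusters.items():
        kept = sorted(dpis, key=lambda d: frequency[d])  # least frequent first
        original_size = sum(frequency[d] for d in kept)
        for dpi in list(kept):
            size_after_removal = sum(frequency[d] for d in kept) - frequency[dpi]
            # Assumption: the fixed share is counted in traces, not in dpis.
            if size_after_removal < fixed_percentage * original_size:
                break
            cluster_value, trace_value = evaluate(kept, dpi)
            if cluster_value >= cluster_threshold and trace_value >= trace_threshold:
                break  # cluster is of sufficient quality: stop removing traces
            kept.remove(dpi)
            to_assign.append(dpi)
        clusters[label] = kept
    return clusters, to_assign
```

The function returns the pruned clusters together with the removed dpis, which form the input for the trace assignment phase.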

Phase 2: Trace Assignment. The second phase is detailed in Algorithm 2, and described here. After the initialization, the set of remaining traces to be clustered will be assigned to the cluster they fit best with. This is done by mining a process model, and calculating the trace metric value and cluster metric value for each cluster. Four situations are possible: (1) the cluster metric value is the highest one, in which case the cluster is denoted as the current best; (2) the cluster metric value is only equal to the current highest value but the trace metric value is higher than the current best, in which case the cluster is also denoted as the current best; (3) the values are above the threshold but lower than the current best found in one of the other clusters, in which case the trace will not be added to the cluster which is currently being tested; or (4) these values are below the provided thresholds, and again the trace will not be added to the cluster which is currently being tested.

After determining the best cluster, the distinct process instance is added to the best possible cluster. If no best possible cluster exists (because the metric values were below the threshold for each of the clusters), the distinct process instance is added to the set of unassignable traces.
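The four situations of Phase 2 can be sketched as a single comparison loop. As before, this follows the prose rather than Algorithm 2 itself: `evaluate` is a hypothetical callback returning the cluster and trace metric values for a candidate cluster, traces are processed in the order given, and values equal to a threshold are assumed to pass.

```python
def assign_traces(clusters, remaining, evaluate, cluster_threshold, trace_threshold):
    """Sketch of Phase 2: add each remaining dpi to its best cluster, if any."""
    clusters = {label: list(members) for label, members in clusters.items()}
    unassignable = []
    for dpi in remaining:
        best = None  # (cluster_value, trace_value, label) of the current best
        for label, members in clusters.items():
            cluster_value, trace_value = evaluate(members + [dpi], dpi)
            if cluster_value < cluster_threshold or trace_value < trace_threshold:
                continue  # situation (4): below the thresholds
            if (best is None
                    or cluster_value > best[0]                              # (1)
                    or (cluster_value == best[0] and trace_value > best[1])):  # (2)
                best = (cluster_value, trace_value, label)
            # otherwise situation (3): acceptable, but not the current best
        if best is None:
            unassignable.append(dpi)
        else:
            clusters[best[2]].append(dpi)
    return clusters, unassignable
```

Because every candidate cluster requires a freshly mined model, the cost of this phase grows with the number of remaining dpis times the number of clusters.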

Phase 3: Unassignable Resolution. In the third phase, any remaining traces which were not assignable to a cluster in Phase 2 will be assigned to a cluster. They are either added to a separate cluster (if SeparateBoolean is true), or they are added to the best possible existing cluster. This assignment is done from the most frequent to the least frequent distinct process instance, following the same procedure as in Phase 2, with the exception that the thresholds are no longer checked.
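A minimal sketch of the resolution phase follows, under the same assumptions as before: `evaluate` is a hypothetical callback returning the cluster and trace metric values, and the label `"unassigned"` for the separate cluster is made up for illustration.

```python
def resolve_unassignable(clusters, unassignable, frequency, evaluate, separate):
    """Sketch of Phase 3: place the dpis that Phase 2 could not assign."""
    clusters = {label: list(members) for label, members in clusters.items()}
    if separate:
        # SeparateBoolean is true: collect the leftovers in their own cluster.
        clusters["unassigned"] = list(unassignable)  # hypothetical label
        return clusters
    # Most frequent dpi first; thresholds are no longer checked.
    for dpi in sorted(unassignable, key=lambda d: frequency[d], reverse=True):
        # Tuples compare on the cluster value first, then on the trace value.
        best = max(clusters, key=lambda label: evaluate(clusters[label] + [dpi], dpi))
        clusters[best].append(dpi)
    return clusters
```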

3.2 Configuration

In this subsection, a short discussion is provided on how the algorithm could be configured. While the choice of the two thresholds, the fixed percentage for the initialization, and the choice whether or not to separate the not-assignable traces are important decisions, these are case-specific decisions. The algorithm allows these to be set by the user: higher thresholds combined with the separation of traces that do not exceed these thresholds will likely lead to small but high-quality clusters and one large surplus cluster, which may be desirable in some cases but not in all.

In terms of the metric chosen as input for the clustering, this depends on the expectation of the underlying process models. A wide array of accuracy and simplicity metrics for discovered process models have been described in the literature (e.g. [9]). In general, a weighted metric such as the robust F-score proposed in [8] might be appropriate, since it provides a balance between fitness and precision.

A similar argument holds for the process discovery technique one could use. A wide array of techniques exist, and our approach can be combined with most of them. Observe that the chosen technique should be able to discover processes with a decent performance, since a high number of processes needs to be discovered in certain steps of our algorithm. In [10], the preference goes to Heuristics Miner [21]. Other possibilities are Inductive Miner [15] and Fodina [19].

4 Motivating Case Study: Reading Behaviour in Tablet Newspapers

4.1 Case Description

We performed experiments with people who read a digital newspaper using a tablet app. We set up two types of experiments: qualitative, in which 30 people were interviewed about their typical newspaper reading behaviour by an experienced independent marketer; and quantitative experiments, in which 209 paying subscribers of the newspaper allowed us to track every device interaction with the app during one month of habitual news reading.

The app’s name cannot be mentioned for confidentiality reasons, but it has existed for several years and has more than 10,000 monthly active users. The newspaper brand is one of the most popular in a Western-European country.

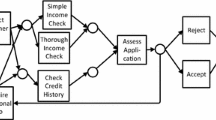

As can be seen in Fig. 1, the app is not just a replica of a regular newspaper in print, but is optimized for tablet and mobile use. Reading through the newspaper with this app can be considered a process and investigated with process mining techniques. The user starts on page one, and can swipe horizontally, going from page to page, choosing on which articles to spend more time, and the process ends when the user decides to quit reading. In this tablet-optimized version of the newspaper, a couple of additional features also allow users to jump between pages, thereby skipping content to e.g. immediately go to the start of a new news category like Sports, Opinions, and so on.

An experienced marketer from an independent marketing bureau conducted interviews with 30 people in total. Each interview lasted two hours. The marketer had experience working with the same app and newspaper brand for other market studies, and was considered to be a domain expert by the journalists and editors of the newspaper. The end result of these interviews was a presentation of a set of typical reader profiles, with a textual explanation of what kind of reading behaviour characterized each reader profile.

This set of reader profiles can be considered to be expert-driven clusters. Note that this expert-driven clustering is not based on actual data of the reading process of these users, but on the self-reported reading behaviour of the interviewed users. Using users’ self-reported behaviour is typically how companies do user segmentation if they want to get insight into the different types of users that use their app, especially if there is no data available about how their product is actually used.

We worked together with Twipe, the company which developed the app, to modify the app so every user interaction could be logged. A selection of paying subscribers of the newspaper was e-mailed with an invitation to fill in a recruitment survey. The recruitment survey assessed eligibility for participation in the experiment. It consisted of socio-demographic questions and questions concerning the users’ typical reading behaviour. Based on the answers to this survey, a sample of candidate participants could be drawn that was representative of the newspaper’s population of subscribers. All of the candidate participants were acquainted with the app and used it regularly (at least weekly, often more frequently). This set of candidate participants received a personal invitation to download the modified version of the app they normally used for reading the newspaper, and to use that version during one month. Eventually, we collected useful data for 209 experiment participants, and ended up with 2900 useful reading sessions.

4.2 Application of Data-Driven Clustering

Recall from the previous section that the domain expert created textual descriptions of cluster profiles. The variables used to describe these profiles are used for data-driven clustering approaches, to come up with a full expert solution. These variables contain information such as the reading moment, length of a session, how focused a reader is, how thoroughly the paper is read, etc. For the clustering, three distinct approaches were taken: (1) A traditional k-means was performed using these variables. (2) Given the textual description of cluster profiles by the expert, representative observations are defined for each cluster. Then, these representative observations are used as centroids in a single nearest neighbour approach. Each trace is included in the cluster of the centroid it is closest to in terms of normalized values on the variables. (3) The third approach consists of starting from these same centroids, and using those centroids as initial seeds in a seeded version of the k-means algorithm.
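Approaches (2) and (3) can be sketched in a few lines of plain Python. The sketch assumes that every session is already a tuple of normalized variable values and that the expert's representative observations are given as points in the same space; squared Euclidean distance and the function names are illustrative choices, not details taken from the case study.

```python
def nearest_centroid(point, centroids):
    """Index of the centroid closest to point (squared Euclidean distance)."""
    distances = [sum((x - c) ** 2 for x, c in zip(point, centroid))
                 for centroid in centroids]
    return distances.index(min(distances))


def one_nn_clustering(points, expert_centroids):
    """Approach (2): assign each point to its nearest expert-defined centroid."""
    return [nearest_centroid(point, expert_centroids) for point in points]


def seeded_k_means(points, expert_centroids, max_iterations=100):
    """Approach (3): k-means that starts from the expert-defined centroids."""
    centroids = [list(centroid) for centroid in expert_centroids]
    labels = None
    for _ in range(max_iterations):
        new_labels = one_nn_clustering(points, centroids)
        if new_labels == labels:
            break  # assignments are stable
        labels = new_labels
        for k in range(len(centroids)):
            members = [p for p, label in zip(points, labels) if label == k]
            if members:  # keep the old centroid if a cluster runs empty
                centroids[k] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels, centroids
```

The only difference between the two is that the seeded variant lets the centroids drift towards the data after the first assignment, whereas the single nearest neighbour approach keeps the expert's representative observations fixed.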

4.3 Transforming Case to Enable Trace Clustering

In order to create insight into the reading process followed by the users, the low-level interaction data as described in Sect. 4.1 needs to be mapped onto intuitive high-level activities. Four groups of activities were defined: (1) activities that lead to the start of a reading session, either by starting the application or reopening it from the background of the tablet; (2) activities concerning a user spending time reading an article; (3) activities concerning a user inspecting an image; and (4) activities concerning a user shutting down her session.

In terms of granularity of the reading activity, two aspects were considered for inclusion: the time spent on the article, and the newspaper category the article belonged to. After descriptive analysis of the data, the following transformation was applied. With regards to reading times, a typical user reading in the newspaper’s language will read about 240 words per minute [5]. A reading activity is defined as a read-page-event if the user spent enough time on the article to read at least half of the text. Similarly, a scan-page-event is defined to have occurred if a reader has taken the time to read a quarter of the text, and a skip-page-event if the reader only took the time to read the title of the article. Additionally, the newspaper also consists of 8 different categories. Extending the read, scan and skip events with these categories leads to a total number of 24 different reading events. Next to text categories, the inspect-image-event was also divided into categories, creating 8 distinct image-events. Overall, this brings the number of activities to 34 (1 launch-event, 24 page-reading events, 8 image-related events and 1 quit-event). Self-loops between activities were disregarded. Finally, observe that the created event log contains a wide variety of behaviour: of 2900 reading sessions, there are 2794 distinct process instances. For an exemplary excerpt, see Table 1.
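The transformation of a page visit into a read, scan or skip event can be sketched as follows. The sketch assumes that the word count of each article is known, that any visit shorter than the scan threshold counts as a skip, and that disregarding self-loops means collapsing consecutive repetitions of the same activity; the function names and label format are hypothetical.

```python
WORDS_PER_MINUTE = 240  # reading speed quoted in the text


def classify_page_event(seconds_on_article, word_count, category):
    """Label a page visit as a read, scan or skip event for its category."""
    seconds_to_read_all = word_count / WORDS_PER_MINUTE * 60
    if seconds_on_article >= 0.5 * seconds_to_read_all:
        kind = "read"  # enough time for at least half of the text
    elif seconds_on_article >= 0.25 * seconds_to_read_all:
        kind = "scan"  # enough time for a quarter of the text
    else:
        kind = "skip"  # assumption: anything shorter counts as a skip
    return f"{kind}_{category}"


def drop_self_loops(trace):
    """Collapse consecutive repetitions of the same activity."""
    collapsed = []
    for activity in trace:
        if not collapsed or collapsed[-1] != activity:
            collapsed.append(activity)
    return collapsed
```

For a 480-word article, reading everything takes 120 seconds at this speed, so a visit of 60 seconds or more is a read event and one of 30 to 60 seconds a scan event. The activity count follows as 1 + 3 × 8 + 8 + 1 = 34.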

5 Experimental Evaluation

In this section, we will apply a number of data-driven clustering approaches, existing trace clustering techniques, and our expert-driven trace clustering technique, on the newspaper reading data. The obtained clustering solutions are then compared in terms of process model quality.

5.1 Setup

Techniques. All techniques are listed in Table 2, with an indication of whether they are data-driven techniques, trace clustering techniques, or expert-driven techniques. Five pure trace clustering techniques are incorporated for comparison: two process-quality based techniques, ActFreq and ActMRA [10]; a direct instance-similarity technique, GED [3]; and two vector-space model-based methods, MRA [4] and 3-gram [17]. Three data-driven clustering techniques are included, one of which requires no expert knowledge (k-means), and two that do (k-seeded and 1nn). Finally, \(ActSemiSup_{exp}\) is the general name for our proposed expert-driven trace clustering technique, where exp is the technique that is used to obtain the expert knowledge: k-seeded or 1nn. All settings are tested with 4, 5 and 6 clusters, in line with the expectations of the domain expert.

Metrics. To evaluate the quality of the clustering solutions, a process model is mined for each cluster, using the Fodina technique [19]. The accuracy of each process model discovered per cluster is then measured using the F1-score as proposed by [8], where p is a precision metric and r is a recall metric:

\[ F1 = 2 \cdot \frac{p \cdot r}{p + r} \]

In this paper, the recall metric we have chosen is behavioural recall \(r_{b}\) [14], and the precision metric we use is \(etc_{p}\) [16]. Finally, a weighted average F-score metric for the entire clustering solution is then calculated as follows, similar to the approach in [10], where k is the number of clusters in C, \(n_{i}\) the number of traces in cluster i, and \(F1_{i}\) the F1-score of the process model discovered for cluster i:

\[ F1_{WA}(C) = \frac{\sum_{i=1}^{k} n_{i} \cdot F1_{i}}{\sum_{i=1}^{k} n_{i}} \]
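As a small worked example, the F1-score and its trace-weighted average over clusters can be computed as follows. The precision and recall values are assumed to be given (they would come from \(etc_{p}\) and behavioural recall in the setup above), and returning 0 when both are 0 is a convention chosen here to avoid a division by zero.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0  # convention chosen here to avoid dividing by zero
    return 2 * precision * recall / (precision + recall)


def weighted_average_f1(cluster_results):
    """Average the per-cluster F1-scores, weighted by cluster size.

    cluster_results: list of (number of traces, precision, recall) tuples.
    """
    total = sum(n for n, _, _ in cluster_results)
    return sum(n * f1_score(p, r) for n, p, r in cluster_results) / total
```

For instance, a cluster of 100 traces with an F1-score of 0.5 and a cluster of 300 traces with an F1-score of 1.0 give a weighted average of (50 + 300) / 400 = 0.875.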

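Furthermore, we can calculate the relative improvement of our semi-supervised technique with expert knowledge (\(ActSemiSup_{exp}\)) relative to the best pure trace clustering technique (TC) as follows, where \(F1_{WA}\) denotes the weighted average F-score of a clustering solution:

\[ RI(ActSemiSup_{exp}, TC) = \frac{F1_{WA}(ActSemiSup_{exp})}{F1_{WA}(TC)} \]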

Three situations might arise: (1) \(RI > 1\): in that case, the expert-driven technique creates a solution which is able to combine higher ease-of-interpretation with better results in terms of process model quality; (2) \(RI = 1\): the expert-driven technique leads to higher ease-of-interpretation from an expert’s point of view without reducing model quality; and (3) \(RI <1\): there is a trade-off present between clustering solutions which are justifiable for an expert and the optimal solution in terms of process model quality.
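The three situations can be made explicit with a small helper. It assumes that the relative improvement is the ratio of the two weighted average F-scores, which is the reading under which a value of 1 means equal quality; the tolerance parameter and the returned labels are illustrative.

```python
def relative_improvement(f1_expert_driven, f1_baseline):
    """Ratio of the expert-driven F-score to the baseline F-score."""
    return f1_expert_driven / f1_baseline


def interpret(ri, tolerance=1e-9):
    """Map a relative improvement onto the three situations in the text."""
    if ri > 1 + tolerance:
        return "better quality and easier to interpret"
    if ri < 1 - tolerance:
        return "trade-off between interpretability and quality"
    return "easier to interpret at equal quality"
```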

One final metric we propose to compare how similar two clustering solutions are, is the Normalized Mutual Information [13]. With it, we can illustrate how similar the solution found by our semi-supervised approach is to the complete expert clustering it used as input. This value is a decent proxy for how easy-to-interpret the solution is given the expert knowledge used to create the input clustering. It is defined as follows: let \(k_{a}\) be the number of clusters in clustering a, \(k_{b}\) the number of clusters in clustering b, n the total number of traces, \(n^{a}_{i}\) the number of elements in cluster i in clustering a, \(n^{b}_{j}\) the number of elements in cluster j in clustering b, and \(n^{ab}_{ij}\) the number of elements present in both cluster i in clustering a and cluster j in clustering b. The NMI is then defined as:

\[ NMI(a, b) = \frac{-2 \sum_{i=1}^{k_{a}} \sum_{j=1}^{k_{b}} n^{ab}_{ij} \log \left( \frac{n^{ab}_{ij} \cdot n}{n^{a}_{i} \cdot n^{b}_{j}} \right)}{\sum_{i=1}^{k_{a}} n^{a}_{i} \log \left( \frac{n^{a}_{i}}{n} \right) + \sum_{j=1}^{k_{b}} n^{b}_{j} \log \left( \frac{n^{b}_{j}}{n} \right)} \]
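The quantities named in this definition translate directly into code. The sketch below assumes the commonly used form of the NMI, twice the mutual information divided by the sum of the two entropies, expressed in the counts \(n^{a}_{i}\), \(n^{b}_{j}\) and \(n^{ab}_{ij}\); returning 1 when both clusterings consist of a single cluster is a convention chosen here.

```python
from collections import Counter
from math import log


def normalized_mutual_information(labels_a, labels_b):
    """NMI between two clusterings given as equally long label lists."""
    n = len(labels_a)
    count_a = Counter(labels_a)                  # n_i^a
    count_b = Counter(labels_b)                  # n_j^b
    count_ab = Counter(zip(labels_a, labels_b))  # n_ij^ab (non-zero cells only)
    numerator = -2 * sum(
        n_ij * log(n_ij * n / (count_a[i] * count_b[j]))
        for (i, j), n_ij in count_ab.items()
    )
    denominator = (sum(n_i * log(n_i / n) for n_i in count_a.values())
                   + sum(n_j * log(n_j / n) for n_j in count_b.values()))
    if denominator == 0:
        return 1.0  # convention: both clusterings have a single cluster
    return numerator / denominator
```

Two clusterings that group the traces identically, regardless of how the clusters are labelled, score 1, while two statistically independent clusterings score 0.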

5.2 Results

The results in terms of F1-score are presented in Fig. 2. A couple of observations can be made from this figure. First, since the F1-score is a metric scaled between 0 and 1, it is clear that the overall results are rather low. Nonetheless, all clustering solutions have a weighted average behavioural recall between 0.88 and 0.92. The reason for the low F1-scores lies in the precision: due to the high variability of behaviour (many distinct process instances) in the event log, all clustering solutions score rather low in terms of \(etc_{p}\).

Secondly, observe that all clustered solutions outperform the non-clustered event log (1 cluster). This is mainly due to the precision of the clusters, which increases if a higher number of clusters is used. This observation is supported by the ordering of the results across different numbers of clusters: all other things being equal, the F1-score at 6 clusters is always the highest, except for the ActMRA and MRA solutions.

Furthermore, it is noticeable that ActMRA (at a cluster number of 4 and 5), and ActFreq (at a cluster number of 6) attain the highest quality of the existing data-driven and trace clustering techniques. Observe as well that the data-driven clustering techniques k-means and k-seeded perform quite well in terms of process model quality, on par with dedicated trace clustering techniques, especially at higher cluster numbers.

The most important remark concerns the quality attained by the semi-supervised algorithm. Both when using the results of the k-seeded algorithm as expert knowledge, as well as when using the results of the 1nn clustering as expert knowledge, the F1-score improves. To illustrate this, Table 3 contains the results of the relative improvement of the semi-supervised algorithm compared to just using the expert knowledge, and compared to the overall best trace clustering algorithm. From Table 3, it is clear that our semi-supervised algorithm attains its goal of enhancing the expert knowledge and increasing the process model quality of the trace clustering (\(RI>1\)). In the setting where the expert knowledge comes from a seeded k-means clustering, there is a small trade-off between performance and understandability for the expert at 4 and 5 clusters (\(RI<1\)), but not at 6 clusters (\(RI>1\)), compared to the overall best trace clustering technique. When the expert knowledge originates from a single nearest neighbour-exercise, our algorithm manages to improve the solution compared to the data clustering (\(RI>1\)), but there is a clear trade-off, especially at cluster numbers of 4 and 5, compared to the best trace clustering solutions (\(RI<1\)).

Finally, Table 4 contains values for the Normalized Mutual Information, capturing the similarity between the input of the semi-supervised trace clustering solutions and their solutions. For comparison, the NMI of the ActMRA-technique with both inputs is provided as well. It is clear that the semi-supervised solutions are much more in line with the expectations of the expert input, as illustrated by their high NMI-values.

6 Conclusion

In a situation where an expert has a preconceived notion of what a clustering should look like, it is unlikely that a trace clustering algorithm will lead to clusters which are in line with his or her expectations. Motivated by a case in tablet reading behaviour, this paper proposes an expert-driven trace clustering technique that balances improvement in terms of trace clustering quality with the challenge of making clusters more interpretable for the expert. In an experimental evaluation, we have shown how our algorithm creates more interpretable solutions which are simultaneously better in terms of trace clustering quality than purely using an expert-driven data clustering, and in some cases even produce higher quality than dedicated trace clustering techniques.

Several different avenues for future work exist: (1) phase 1 of our approach could be adapted for non-complete expert input; (2) an evaluation could then be performed regarding how much expert knowledge is needed to achieve high quality clustering results; (3) our algorithm could be extended with a window-based assignment strategy, to increase performance and make the direct incorporation of other data possible; (4) the case study could be extended using the self-reported clustering of the readers; and (5) our approach could be validated in other use cases.

Notes

- 1. The algorithm has been implemented as a plugin for ProM 6, and is available at http://processmining.be/expertdriventraceclustering/.

References

1. Appice, A., Malerba, D.: A co-training strategy for multiple view clustering in process mining. IEEE Trans. Serv. Comput. PP(99), 1 (2015)

2. Basu, S., Banerjee, A., Mooney, R.: Semi-supervised clustering by seeding. In: Proceedings of 19th International Conference on Machine Learning (ICML-2002), pp. 27–34 (2002)

3. Bose, R.P.J.C., van der Aalst, W.M.P.: Context aware trace clustering: towards improving process mining results. In: SDM, pp. 401–412 (2009)

4. Bose, R.P.J.C., van der Aalst, W.M.P.: Trace clustering based on conserved patterns: towards achieving better process models. In: Rinderle-Ma, S., Sadiq, S., Leymann, F. (eds.) BPM 2009. LNBIP, vol. 43, pp. 170–181. Springer, Heidelberg (2010). doi:10.1007/978-3-642-12186-9_16

5. Buzan, T., Spek, P.: Snellezen. Tirion (2009)

6. De Koninck, P., De Weerdt, J.: Multi-objective trace clustering: finding more balanced solutions. In: Business Process Management Workshops 2016 (2016, accepted)

7. De Koninck, P., De Weerdt, J., vanden Broucke, S.K.L.M.: Explaining clusterings of process instances. Data Mining Knowl. Discov. 31(3), 1–35 (2016)

8. De Weerdt, J., De Backer, M., Vanthienen, J., Baesens, B.: A robust f-measure for evaluating discovered process models. In: 2011 IEEE Symposium on Computational Intelligence and Data Mining (CIDM), pp. 148–155. IEEE (2011)

9. De Weerdt, J., De Backer, M., Vanthienen, J., Baesens, B.: A multi-dimensional quality assessment of state-of-the-art process discovery algorithms using real-life event logs. Inf. Syst. 37(7), 654–676 (2012)

10. De Weerdt, J., Vanden Broucke, S., Vanthienen, J., Baesens, B.: Active trace clustering for improved process discovery. IEEE Trans. Knowl. Data Eng. 25(12), 2708–2720 (2013)

11. Delias, P., Doumpos, M., Grigoroudis, E., Manolitzas, P., Matsatsinis, N.: Supporting healthcare management decisions via robust clustering of event logs. Knowl.-Based Syst. 84, 203–213 (2015)

12. van Eck, M.L., Lu, X., Leemans, S.J.J., van der Aalst, W.M.P.: PM\(^2\): a process mining project methodology. In: Zdravkovic, J., Kirikova, M., Johannesson, P. (eds.) CAiSE 2015. LNCS, vol. 9097, pp. 297–313. Springer, Cham (2015). doi:10.1007/978-3-319-19069-3_19

13. Fred, A., Lourenço, A.: Cluster ensemble methods: from single clusterings to combined solutions. In: Okun, O., Valentini, G. (eds.) Supervised and Unsupervised Ensemble Methods and their Applications, pp. 3–30. Springer, Heidelberg (2008)

Goedertier, S., Martens, D., Vanthienen, J., Baesens, B.: Robust process discovery with artificial negative events. J. Mach. Learn. Res. 10, 1305–1340 (2009)

Leemans, S.J.J., Fahland, D., van der Aalst, W.M.P.: Discovering block-structured process models from event logs - a constructive approach. In: Colom, J.-M., Desel, J. (eds.) PETRI NETS 2013. LNCS, vol. 7927, pp. 311–329. Springer, Heidelberg (2013). doi:10.1007/978-3-642-38697-8_17

Muñoz-Gama, J., Carmona, J.: A fresh look at precision in process conformance. In: Hull, R., Mendling, J., Tai, S. (eds.) BPM 2010. LNCS, vol. 6336, pp. 211–226. Springer, Heidelberg (2010). doi:10.1007/978-3-642-15618-2_16

Song, M., Günther, C.W., van der Aalst, W.M.P.: Trace clustering in process mining. In: Ardagna, D., Mecella, M., Yang, J. (eds.) BPM 2008. LNBIP, vol. 17, pp. 109–120. Springer, Heidelberg (2009). doi:10.1007/978-3-642-00328-8_11

Van der Aalst, W., Adriansyah, A., Van Dongen, B.: Replaying history on process models for conformance checking and performance analysis. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2(2), 182–192 (2012)

Vanden Broucke, S.K.L.M.: Artificial negative events and other techniques. Ph.D. thesis, KU Leuven (2014)

Wagstaff, K., Cardie, C., Rogers, S., Schrödl, S., et al.: Constrained k-means clustering with background knowledge. In: ICML, vol. 1, pp. 577–584 (2001)

Weijters, A., van der Aalst, W.M., De Medeiros, A.A.: Process mining with the heuristics miner-algorithm. Technische Universiteit Eindhoven, Technical report WP 166, pp. 1–34 (2006)

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

De Koninck, P., Nelissen, K., Baesens, B., vanden Broucke, S., Snoeck, M., De Weerdt, J. (2017). An Approach for Incorporating Expert Knowledge in Trace Clustering. In: Dubois, E., Pohl, K. (eds) Advanced Information Systems Engineering. CAiSE 2017. Lecture Notes in Computer Science, vol 10253. Springer, Cham. https://doi.org/10.1007/978-3-319-59536-8_35

DOI: https://doi.org/10.1007/978-3-319-59536-8_35

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-59535-1

Online ISBN: 978-3-319-59536-8

eBook Packages: Computer Science, Computer Science (R0)