Abstract

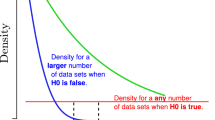

Statistical tests have emerged as a reliable procedure for validating results in many kinds of problems. In particular, owing to their robustness and applicability, non-parametric tests are a common and useful tool in the design and evaluation of machine learning algorithms and in the context of optimization problems. Recent trends in the statistical comparison of algorithm performance indicate that Bayesian tests, which provide a distribution over the parameter of interest, are a promising approach.

This contribution presents rNPBST (R Non-Parametric and Bayesian Statistical Tests), an R package that contains a set of non-parametric and Bayesian tests for different purposes, such as randomness tests, goodness-of-fit tests, and two-sample and multiple-sample analysis. The package also integrates many non-parametric and Bayesian tests in a single repository.
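To make the contrast between the two families of tests concrete, the following is a minimal sketch in Python rather than R, and it does not use or reproduce the rNPBST API. It compares two algorithms on paired scores with a classical sign test, which yields a p-value, and with a deliberately simplified Bayesian counterpart, which yields a posterior distribution over the probability that one algorithm beats the other. The accuracy values, the function names, and the uniform Beta(1, 1) prior are all assumptions made for illustration; the Bayesian tests discussed in the literature are more elaborate than this.

```python
import math
import random


def sign_test_p_value(wins: int, losses: int) -> float:
    """Exact two-sided sign test p-value under H0: P(win) = 0.5 (ties dropped)."""
    n = wins + losses
    k = min(wins, losses)
    # Probability of the smaller count being k or less, doubled for two sides.
    tail = sum(math.comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2.0 * tail)


def posterior_prob_a_better(wins: int, losses: int,
                            draws: int = 20000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(theta > 0.5 | data), where theta is the
    probability that A beats B and the prior is Beta(1, 1) (an assumption)."""
    rng = random.Random(seed)
    hits = sum(rng.betavariate(1 + wins, 1 + losses) > 0.5
               for _ in range(draws))
    return hits / draws


# Hypothetical accuracies of two algorithms on ten data sets (illustrative only).
acc_a = [0.85, 0.91, 0.78, 0.88, 0.93, 0.81, 0.79, 0.90, 0.86, 0.84]
acc_b = [0.82, 0.89, 0.80, 0.85, 0.90, 0.78, 0.77, 0.88, 0.83, 0.80]

wins = sum(a > b for a, b in zip(acc_a, acc_b))
losses = sum(a < b for a, b in zip(acc_a, acc_b))

print("sign test p-value:", sign_test_p_value(wins, losses))
print("posterior P(A better):", posterior_prob_a_better(wins, losses))
```

The first number only says how surprising the data would be if the algorithms were equivalent; the second is a direct probability statement about the parameter of interest, which is the property the abstract attributes to Bayesian tests.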

Notes

- 1.

- 2. Abalone, australian, automobile, balance, breast, bupa, car, cleveland, crx, dermatology, german, glass, hayes-roth, heart, ionosphere, led7digit, letter, lymphography, mushroom, optdigits, satimage, spambase, splice, tic-tac-toe, vehicle, vowel, wine, yeast and zoo.

Acknowledgments

This work was supported by the Spanish National Research Projects TIN2014-57251-P and MTM2015-63609-R, and the Andalusian Research Plan P11-TIC-7765.

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Carrasco, J., García, S., del Mar Rueda, M., Herrera, F. (2017). rNPBST: An R Package Covering Non-parametric and Bayesian Statistical Tests. In: Martínez de Pisón, F., Urraca, R., Quintián, H., Corchado, E. (eds) Hybrid Artificial Intelligent Systems. HAIS 2017. Lecture Notes in Computer Science, vol 10334. Springer, Cham. https://doi.org/10.1007/978-3-319-59650-1_24

DOI: https://doi.org/10.1007/978-3-319-59650-1_24

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-59649-5

Online ISBN: 978-3-319-59650-1

eBook Packages: Computer Science, Computer Science (R0)