Abstract

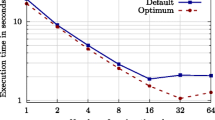

Parallel programs have the potential to execute several times faster than sequential programs. However, to achieve that potential, several aspects of the execution have to be parameterized, such as the number of threads and the task granularity. This work studies the task granularity of regular and irregular parallel programs on symmetrical multicore machines. Task granularity determines how many parallel tasks are created to perform a given computation. If the granularity is too coarse, there might not be enough parallelism to occupy all processors. If it is too fine, a large percentage of the execution time may be spent context switching between tasks rather than performing useful work.

Task granularity can be controlled by limiting the creation of new tasks and executing the workload sequentially in the current task instead. This decision is made by a cut-off algorithm, which defines a criterion for executing a task's workload either sequentially or asynchronously. The choice of cut-off algorithm can affect performance by several orders of magnitude.
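
The decision described above can be illustrated with a minimal sketch. It is not the implementation evaluated in this work: the names `fib_task`, `TaskCounter`, `max_level_cutoff` and `manual_threshold` are hypothetical, one Python thread stands in for each task, and the two criteria shown are simplified readings of a depth-based cut-off and a manual threshold.

```python
import threading


def fib_seq(n):
    """Plain sequential Fibonacci: the work done once the cut-off fires."""
    return n if n < 2 else fib_seq(n - 1) + fib_seq(n - 2)


class TaskCounter:
    """Thread-safe count of how many tasks were actually created."""

    def __init__(self):
        self.created = 0
        self._lock = threading.Lock()

    def increment(self):
        with self._lock:
            self.created += 1


def max_level_cutoff(max_depth):
    """Assumed depth-based criterion: stop forking at a given recursion level."""
    return lambda n, depth: depth >= max_depth


def manual_threshold(min_n):
    """Assumed manual criterion: stop forking once the problem is small."""
    return lambda n, depth: n <= min_n


def fib_task(n, depth, should_cut_off, counter):
    """Compute fib(n), forking a task for one branch unless the cut-off fires."""
    if n < 2:
        return n
    if should_cut_off(n, depth):
        # Cut-off: run the whole subtree sequentially in the current task.
        return fib_seq(n)
    left = []
    # One thread models one asynchronous task (an assumption of this sketch).
    child = threading.Thread(
        target=lambda: left.append(
            fib_task(n - 1, depth + 1, should_cut_off, counter)
        )
    )
    counter.increment()
    child.start()
    right = fib_task(n - 2, depth + 1, should_cut_off, counter)
    child.join()
    return left[0] + right
```

Calling `fib_task(15, 0, max_level_cutoff(3), TaskCounter())` forks only in the top three levels of the recursion and computes everything below sequentially. Lowering the depth limit makes the granularity coarser, and raising it makes the granularity finer.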

This work presents three new cut-off algorithms: MaxTasksInQueue, StackSize and MaxTasksSS. MaxTasksInQueue limits the size of the current thread queue, StackSize limits the number of stacks in recursive calls, and MaxTasksSS limits both the number of tasks and the number of stacks. These new algorithms can improve the performance of parallel programs.
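
One way to read the three descriptions above is as threshold predicates over the runtime state. The sketch below is an assumption-heavy illustration only: the `RuntimeState` fields, the limit parameters, and the choice to combine the two limits of MaxTasksSS with a logical OR are all hypothetical, since the abstract does not specify the exact definitions or thresholds.

```python
from dataclasses import dataclass


@dataclass
class RuntimeState:
    """Hypothetical snapshot of what a runtime might expose to a cut-off."""
    queued_in_current_thread: int  # tasks waiting in the current thread's queue
    recursion_depth: int           # nested recursive calls on the current stack
    total_tasks: int               # tasks currently alive in the whole runtime


def max_tasks_in_queue(state, queue_limit):
    """Cut off when the current thread's queue has reached its limit."""
    return state.queued_in_current_thread >= queue_limit


def stack_size(state, stack_limit):
    """Cut off when the recursion has reached the stack limit."""
    return state.recursion_depth >= stack_limit


def max_tasks_ss(state, task_limit, stack_limit):
    """Cut off when either the task limit or the stack limit is reached.

    Combining the two limits with OR is an assumption of this sketch.
    """
    return state.total_tasks >= task_limit or stack_size(state, stack_limit)
```

For example, with `RuntimeState(queued_in_current_thread=2, recursion_depth=10, total_tasks=5)`, `max_tasks_in_queue(state, 2)` returns `True`, while `stack_size(state, 16)` returns `False`.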

Existing studies have analyzed only two cut-off approaches at a time, each with its own set of benchmarks and machines. In this work we compare a manual threshold approach with five state-of-the-art algorithms (MaxTasks, MaxLevel, Adaptive Tasks Cutoff, Load-Based and Surplus Queued Task Count) and three new approaches (MaxTasksInQueue, StackSize and MaxTasksSS). The evaluation used 24 parallel programs, including divide-and-conquer and loop programs, on two machines with 24 and 32 hardware threads, respectively.

Our analysis provides insight into how cut-off algorithms behave with different types of programs. We also identify the best algorithms for combinations of balanced/unbalanced and loop/recursive programs.

Acknowledgments

This work was partially supported by the Portuguese Research Agency FCT, through CISUC (R&D Unit 326/97) and the CMU|Portugal program (R&D Project Aeminium CMU-PT/SE/0038/2008). The first author was also supported by the Portuguese National Foundation for Science and Technology (FCT) through a Doctoral Grant (SFRH/BD/84448/2012).

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Fonseca, A., Cabral, B. (2017). Evaluation of Runtime Cut-off Approaches for Parallel Programs. In: Dutra, I., Camacho, R., Barbosa, J., Marques, O. (eds) High Performance Computing for Computational Science – VECPAR 2016. VECPAR 2016. Lecture Notes in Computer Science, vol 10150. Springer, Cham. https://doi.org/10.1007/978-3-319-61982-8_13

DOI: https://doi.org/10.1007/978-3-319-61982-8_13

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-61981-1

Online ISBN: 978-3-319-61982-8

eBook Packages: Computer Science, Computer Science (R0)