Abstract

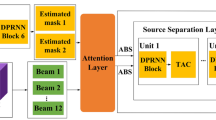

Speech-processing systems such as automatic speech recognition (ASR) usually consist of many processing steps. Because the pipeline is long, individual steps are usually designed to optimize cost functions that are not directly related to the final task, which leads to suboptimal performance. In this chapter, we introduce a beamforming (BF) network that performs spatial filtering optimized for the ASR task. The BF network takes array signals as input and predicts the beamforming parameters in the frequency domain, assuming that the array geometry does not change. The network combines deterministic processing steps with trainable steps realized by neural networks, and it is trained to minimize the cross-entropy cost function of ASR. In our experiments, the BF network is trained with both artificially generated and real microphone array signals. On the AMI meeting transcription task, the trained BF network produces competitive ASR results compared to traditional delay-and-sum beamforming on unseen array signals.
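For orientation, the delay-and-sum baseline named in the abstract can be sketched as a frequency-domain filter-and-sum operation. This is a minimal NumPy illustration, not the chapter's implementation: the function names, the array shapes, and the pure-delay propagation model are all assumptions made for this example. It also assumes that the "beamforming parameters" are one complex weight per microphone and frequency bin, in which case a learned beamformer would supply the `weights` array in place of the fixed delay-and-sum weights.

```python
import numpy as np

def delay_and_sum_weights(delays_s, freqs_hz):
    """Delay-and-sum weights, shape (n_freqs, n_mics).

    Assumes (hypothetically) that microphone m receives the source with a
    pure delay delays_s[m], so the steering vector is exp(-j*2*pi*f*tau_m).
    """
    delays = np.asarray(delays_s, dtype=float)
    freqs = np.asarray(freqs_hz, dtype=float)
    steering = np.exp(-2j * np.pi * freqs[:, None] * delays[None, :])
    return steering / delays.size

def filter_and_sum(weights, stft):
    """Apply per-frequency weights to a multichannel STFT.

    weights: (n_freqs, n_mics) complex
    stft:    (n_mics, n_frames, n_freqs) complex
    Returns y[t, f] = sum_m conj(weights[f, m]) * stft[m, t, f].
    """
    return np.einsum("fm,mtf->tf", np.conj(weights), stft)

# Toy check: a source observed through pure delays is recovered exactly.
rng = np.random.default_rng(0)
freqs = np.linspace(0.0, 8000.0, 5)        # illustrative frequency bins (Hz)
delays = np.array([0.0, 1.0e-4, 2.5e-4])   # illustrative per-mic delays (s)
source = rng.standard_normal((4, 5)) + 1j * rng.standard_normal((4, 5))
phase = np.exp(-2j * np.pi * freqs[None, :] * delays[:, None])  # (n_mics, n_freqs)
array_stft = source[None, :, :] * phase[:, None, :]             # (n_mics, n_frames, n_freqs)

enhanced = filter_and_sum(delay_and_sum_weights(delays, freqs), array_stft)
```

In this toy setting `enhanced` matches `source` because the weights undo each microphone's phase shift before averaging. With real recordings, noise and reverberation break that idealization, which is the gap a task-optimized beamformer aims to close.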

© 2017 Springer International Publishing AG

About this chapter

Cite this chapter

Xiao, X. et al. (2017). Discriminative Beamforming with Phase-Aware Neural Networks for Speech Enhancement and Recognition. In: Watanabe, S., Delcroix, M., Metze, F., Hershey, J. (eds) New Era for Robust Speech Recognition. Springer, Cham. https://doi.org/10.1007/978-3-319-64680-0_4

DOI: https://doi.org/10.1007/978-3-319-64680-0_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-64679-4

Online ISBN: 978-3-319-64680-0

eBook Packages: Computer Science, Computer Science (R0)