Abstract

The recent advances in tissue clearing and optical imaging have enabled us to obtain three-dimensional high-resolution images of various tissues. However, the severe background noise remains a major obstacle. In addition, there is an urgent need for fast background ground correction methods. In this paper, we present a fast background removal method for 3D multi-channel deep tissue fluorescence images, in which the objectives of different channels are well separated. We first conduct a window-based normalization to distinguish foreground signals from background noises in all channels. Then, we identify the pure background regions by conducting subtraction of images in different channels, which allow us to estimate the background noises of the whole images by interpolation. Experiments on real 3D datasets of mouse stomach show our method has superior performance and efficiency comparing with the current state-of-the-art background correction methods.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

The emerging tissue clearing techniques can transform intact tissues into optically transparent and macromolecule permeable assemblies without significant alteration to their native structure. The combination of tissue clearing and new optical imaging modalities, such as light sheet fluorescence microscopy, enables the three-dimensional structural elucidations of various mammal tissues and organs with single-cell resolution demonstrating an incredible potential for biomedical research and clinical application [1]. However, the severe background noise due to light scattering in tissue and autofluorescence associated with whole-mount tissue imaging remains a major challenge for precise and effective extraction of quantitative structural information from these high-resolution 3D images. In addition, there is urgent need for background ground correction methods with fast processing speed for the very large datasets which are typically on the scale of terabytes.

As a fundamental question of biomedical imaging processing, background removal has been well studied, such as spatial filtering [2], fitting function [3], entropy minimization [4], low rank method [5], morphological filtering [6] and Gaussian blurring [7]. Rolling ball algorithm [8] is a prevailing method for background removal which has been implemented as a plug-in in the popular open-source imaging processing program ImageJ. The performance of rolling ball algorithm, as well as other window-based methods, is impaired due to the high variances of objects in tissues. Specifically, the pre-selected windows/ball sizes may be smaller than the sizes of target objects leading to the removal of object signals, which is called “undersized windows” [3, 9]. To overcome the “undersized windows” issue, [9] presents a background removal algorithm based on one-class learning which has demonstrated outstanding performances. However, the processing speed is slower than rolling ball algorithm.

In this paper, we develop a new fast background removal method for 3D multi-channel deep tissue fluorescence images, in which the objectives of different channels are well separated, by taking advantages of the crucial object locations information contained in the multi-channel images. Using the object locations information, we are able to obtain the exact locations of non-object or background regions and therefore calculate the background noises around. Through conducting experiments on real 3D tissue images of mouse gastric gland, we show that our method can achieve similar accuracy comparing with one-class learning method and the processing speed is comparable to the rolling ball algorithm.

2 Method

2.1 Background Model

Additive models and multiplicative models are the two common background models [3, 5]. Since additive noise is stronger than multiplicative noise in fluorescence microscopy images, we use \(I(x, y) = F(x, y) + B(x, y)\) to model the pixel intensities of images, where I(x, y) denotes the intensity of pixel, F(x, y) denotes the foreground signals, and B(x, y) denotes the background noises.

Our algorithm to estimate B(x, y) includes two major steps. The first step is to identify the background pixels by comparing the intensity of each pixel of different channels, which will be described in detail in following sections. Our algorithm can successfully obtain a large set of identified background pixels. The number of these pixels typically is more than the minimum number required by the Nyquist-Shannon sampling theorem to recover the true background noise at every pixel. The second step of our algorithm is to estimate the background noises of the whole image based on these identified background pixels using linear interpolation.

2.2 Multi-channel Image

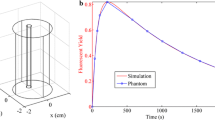

A multi-channel image in fluorescence imaging is composed of images acquired at multi fluorescent wavelengths emitted from different fluorophores. Typically, each fluorophore is used to label one target in a cell or tissue, and therefore, are separated spatially in three-dimensional space. Figure 1 shows a two-channels fluorescence image of a mouse stomach. The green channel is the fluorescence image of F-actin stained using Alexa 647 labled phalloidin and the red channel is nuclei stained by DAPI. Despite the optical diffraction limitation on resolution, it can be clearly seen that the two objects are not physically overlapped in many pixels.

2.3 Assumption

For single channel images, the intensity variation is a very important feature to differentiate background from foreground signals. The foreground signals usually have larger intensities than the surrounding background, which is used to identify the background pixels. For a two-channel image, i.e. channel \(C_1\) and \(C_2\), we can define \(\textit{Dif}_{C_1}(x, y) = I_{C_1}(x, y) - I_{C_2}(x, y)\). Our observation is that if \(\textit{Dif}_{C_1}(x, y)\) is smaller, the probability that \(I_{C_1}(x, y)\) is background and \(I_{C_2}(x, y)\) is foreground is larger. We can formalize this observation as an assumption:

Assumption 1

Suppose we have two-channel images \(I_1\) and \(I_2\), \(I(x, y) = F(x, y) + B(x, y)\), \(\textit{Dif}_1(x, y) = I_1(x, y) - I_2(x, y)\). Then probability \(P(F_1(x, y) = 0\), \(F_2(x, y)> 0 | \textit{Dif}_1(x, y) = a) > P(F_1(x, y) = 0\), \(F_2(x, y) > 0| \textit{Dif}_1(x, y) = b)\) if \(a < b\).

The assumption may not be always true when \(I_1\) and \(I_2\) are raw images because the intensities of two channels are independent. In addition, the intensities of different regions in one image can be quite variable. However, the intensities of foreground signals should be always larger than the background noises around. Therefore, a local normalization can be carried out to make our assumption true. The normalization procedure is shown in Algorithm 1. If the intensity range of one channel is [min, max], then each pixel subtracts min and then is divided by (\(max - min\)). The resulted intensity is in the range of [0,1]. The windows size should be as large as the largest objectives to ensure that each window contains both background and foreground.

In Algorithm 1, I represents one channel image of a tissue and (x, y) represents a pixel in I. We divide I into a set of small windows \(W_i\) to conduct local normalization. For \(\textit{Dif}_1 = I_1-I_2\), there are four cases including foreground minus foreground, background minus foreground, foreground minus background, background minus background. To prove that the assumption is true after local normalization, we formulate these four cases as a classification problem. We use 16 ground truth images labeled by experts to identify the foreground signals and background pixels. Figure 2(a) and (b) show the distributions of the pixels in the four cases. We can find the values from background minus foreground (yellow line) are mostly negative, while the values from foreground minus background are almost positive. Figure 2(c) and (d) are the conditional probabilities, computed using Bayes’ theorem, that \(\textit{Dif}_1(x, y)\) belongs to which case (four cases mentioned above) when given the value of \(\textit{Dif}_1(x, y)\). We can find that the smaller the \(\textit{Dif}_1(x, y)\) is, the probability that \(I_1(x, y)\) is background and \(I_2(x, y)\) is foreground signal is larger, confirming the Assumption 1 is true.

2.4 Algorithm

In the subsection, we introduce our algorithm under Assumption 1. Suppose we have two channels, \(I_1\) and \(I_2\), we calculate \(\textit{Dif}_1 = I_1 - I_2\) at first. If the smaller the \(\textit{Dif}_1(x, y)\) is, the higher the probability that \(I_1(x, y)\) is background is. We then select the sampling pixels or points depending on their values in an ascending order in order to sample the pixels with the high probability of being background. The sampling points may be densely distributed in some regions, and sparsely in other regions, which is not desirable for estimating the background of the whole image. Therefore, we check whether there are any other selected sampling points within a distance threshold before we decide to take one point.

Algorithm 2 is the main algorithm. The variables \(ws_1\), \(ws_2\) and \(ws_3\) in the Input line represent window sizes. The w in the 4th line represents the small windows we divide the \(\textit{Dif}_1\) into. The purpose of using windows is to make the algorithm more robust to noises. The window size shouldn’t be larger than the smallest objectives. w.center in 7th line represents the coordinate of the center of w. \(\textit{Dif}_1(w)\) and \(I_1(w)\) mean the values in the window w of \(\textit{Dif}_1\) and \(I_1\), respectively. th is the threshold to identify the points which must be background. The value of th should guarantee the conditional probability of being background is high enough (i.e. >90%). The 6th line means that if the values in the w are not all smaller than th, the window w will be considered as an “undersized windows”, because background noises tend to form large areas. SP in the 11th line means the sampling points for linear interpolation. W(L(i, 2), dis) in the 13th represents that the window centered at L(i, 2) whose size is \(dis*dis\). The value of dis should reflect the variation of background. Because of the background various slowly, we recommend dis is equal to 0.03% of the number of pixels in one image according to our experiment. The estimated value of the background in this window, 14th line, is the minimum value of the qth quantile of W(P, dis) and L(i, 3), the purpose of which is to make the algorithm more robust because the estimated value of single region is dynamic due to noise.

3 Experiments and Results

The dataset is fluorescence imaging stack (80 sections) with two channels (actin and nuclei) of a mouse gastric gland. We divide the stack into four continuous sub-stacks or parts (i.e. 1 to 20, 21 to 40, ...). The typical images in the four parts are shown in Fig. 3. The average backgrounds are 12, 33, 44, 55 for Part 1, Part 2, Part 3, and Part 4 respectively. Since the background noise increase with the imaging depth, we can use these four datasets to test the robustness of our methods. We compare our algorithm with other two well-known methods, rolling ball [8] and one-class learning [9]. The radius of ball is 100 for rolling ball algorithm. The parameter for one-class clearing, percentile \(p = 20\%\), window size \(ws = 50\). The parameter for our algorithm, \(ws_1 = 128\), \(ws_2 = 256\), \(ws_3 = 11\), \(th = -0.2\), \(dis = 32\), \(q = 10\%\).

We choose root mean error (RMSE) to measure the quality, which is also used in [3, 5, 9]. RMSE = \(\sqrt{\sum _{i,j}(F_{i,j} - G_{i,j})^2/n}\), where F is the result and G is the ground truth labeled by human experts using Photoshop. Experts label the signal pixels in raw images. Then the intensity of the signals of raw images will be kept in F while the intensity of background will be set to 0 in F. Smaller RMSE means better quality. We use four images in each dataset to test the quality. Table 1 lists the results including the average improvement over the raw images. Clearly, our method based on the multi-channel structural information can achieve good performance even if the background noises are strong. Figure 4 shows the 3D reconstruction of the stack processed by the rolling ball, one-class learning and our method.

We use the average processed time per pixel, to measure the processing speed of each algorithm. Table 2 shows the results. We use the rolling ball implemented in ImageJ. One-Class Learning and our method are implemented in MATLAB on the same PC. The result shows that the processing speed of our method is as fast as rolling ball.

4 Conclusion

We present a fast background removal method based on the intrinsic location property of multi-channel deep tissue fluorescence images, in which the objectives of different channels are well separated. Experiments on real 3D datasets show that our method has superior performance and efficiency comparing with the current state-of-the-art background method and the rolling ball algorithm.

References

Susaki, E.A., Hiroki, R.U.: Whole-body and whole-organ clearing and imaging techniques with single-cell resolution: toward organism-level systems biology in mammals. Cell Chem. Biol. 23(1), 137–157 (2016)

Gonzalez, R.C., Woods, R.E.: Digital Image Processing, 2nd edn. Addison-Wesley Longman Publishing Co., Inc., Boston (2001)

Lindblad, J., Bengtsson, E.: A comparison of methods for estimation of intensity non uniformities in 2D and 3D microscope images of fluorescence stained cells. In: Proceedings of the Scandinavian Conference on Image Analysis, pp. 264–271 (2001)

Likar, B., Maintz, J.A., Viergever, M.A., Pernus, F.: Retrospective shading correction based on entropy minimization. J. Microsc. 197(3), 285–295 (2000)

Peng, T., Wang, L., Bayer, C., Conjeti, S., Baust, M., Navab, N.: Shading correction for whole slide image using low rank and sparse decomposition. In: Golland, P., Hata, N., Barillot, C., Hornegger, J., Howe, R. (eds.) MICCAI 2014. LNCS, vol. 8673, pp. 33–40. Springer, Cham (2014). doi:10.1007/978-3-319-10404-1_5

Sternberg, S.R.: Biomedical image processing. Computer 16(1), 22–34 (1983)

Leong, F.W., Brady, M., McGee, J.O.D.: Correction of uneven illumination (vignetting) in digital microscopy images. J. Clin. Pathol. 56(8), 619–621 (2003)

Collins, T.J.: ImageJ for microscopy. Biotechniques 43(1), 25–30 (2007)

Yang, L., Zhang, Y., Guldner, I.H., Zhang, S., Chen, D.Z.: Fast background removal in 3d fluorescence microscopy images using one-class learning. In: Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F. (eds.) MICCAI 2015. LNCS, vol. 9351, pp. 292–299. Springer, Cham (2015). doi:10.1007/978-3-319-24574-4_35

Acknowledgment

This work are partially supported by the National Natural Science Foundation of China (Grant No. 11426026, 61632017, 61173011) and a Project 985 grant of Shanghai Jiao Tong University and the National Science Foundation of China (Grant No. 11374207, 31370750, 31670722 and 81627801) and SJTU Cross-Disciplinary Research Fund in Medicine and Engineering (Grant No. YG2012MS23). D. Chen’s research was supported in part by NSF grants CCF-1217906 and CCF-1617735. The authors are grateful to the generous support by Nikon Instruments Co., Ltd. (Shanghai, China).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Li, C. et al. (2017). Fast Background Removal Method for 3D Multi-channel Deep Tissue Fluorescence Imaging. In: Descoteaux, M., Maier-Hein, L., Franz, A., Jannin, P., Collins, D., Duchesne, S. (eds) Medical Image Computing and Computer-Assisted Intervention − MICCAI 2017. MICCAI 2017. Lecture Notes in Computer Science(), vol 10434. Springer, Cham. https://doi.org/10.1007/978-3-319-66185-8_11

Download citation

DOI: https://doi.org/10.1007/978-3-319-66185-8_11

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-66184-1

Online ISBN: 978-3-319-66185-8

eBook Packages: Computer ScienceComputer Science (R0)