Abstract

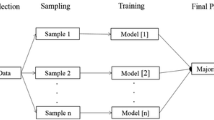

The idea of classification based on simple granules of knowledge (the CSG classifier) is inspired by the granular structures proposed by Polkowski. The simple granular classifier has turned out to be effective in the classification of real data. Among other properties, the classifier is resistant to damaged data and can absorb missing values. This work continues a series of experiments on boosting rough set classifiers. In previous works we demonstrated the effectiveness of the pair and weighted voting classifiers in this context. Here we examine several methods for stabilizing the CSG classifier: Bootstrap Ensemble (Simple Bagging), Boosting based on Arcing, and Ada-Boost with Monte Carlo split. The experiments were performed on selected data from the UCI Repository. The results show that a committee of simple granular classifiers stabilizes the classification process. Simple Bagging turned out to be the most effective method for the CSG classifier.
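To make the Bootstrap Ensemble (Simple Bagging) idea concrete, the following is a minimal sketch, not the procedure used in the paper. The base learner `train_1nn` is a hypothetical stand-in (a nearest-object rule on the number of differing attributes) and is not the CSG classifier; the names `bagging`, `n_models` and `seed` are likewise assumptions made for illustration. Each committee member is trained on a bootstrap replicate of the training set, and the committee classifies by majority vote.

```python
import random
from collections import Counter


def train_1nn(samples):
    """Hypothetical stand-in base learner (NOT the CSG classifier).

    `samples` is a list of (attribute_tuple, label) pairs.
    """
    def predict(x):
        # Return the label of the training object that differs from x
        # on the fewest attributes (ties go to the earliest object).
        _, label = min(
            samples,
            key=lambda s: sum(a != b for a, b in zip(s[0], x)),
        )
        return label
    return predict


def bagging(samples, n_models=11, seed=0):
    """Train a committee on bootstrap replicates; classify by majority vote."""
    rng = random.Random(seed)
    models = []
    for _ in range(n_models):
        # Bootstrap replicate: draw len(samples) objects with replacement.
        replicate = [rng.choice(samples) for _ in samples]
        models.append(train_1nn(replicate))

    def predict(x):
        votes = Counter(model(x) for model in models)
        return votes.most_common(1)[0][0]
    return predict


if __name__ == "__main__":
    train = [
        ((0, 0, 0), "a"), ((0, 0, 1), "a"), ((0, 1, 0), "a"), ((1, 0, 0), "a"),
        ((1, 1, 1), "b"), ((1, 1, 0), "b"), ((1, 0, 1), "b"), ((0, 1, 1), "b"),
    ]
    committee = bagging(train)
    print(committee((0, 0, 0)), committee((1, 1, 1)))
```

An odd `n_models` avoids tied votes in two-class problems. Any base learner with the same train/predict shape could be substituted for `train_1nn`.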

References

Artiemjew, P.: Stability of optimal parameters for classifier based on simple granules of knowledge. Tech. Sci. 14(1), 57–69 (2011). UWM Publisher, Olsztyn

Artiemjew, P.: In search of optimal parameters for classifier based on simple granules of knowledge. In: III International Interdisciplinary Technical Conference of Young Scientists (InterTech 2010), vol. 3, pp. 138–142. Poznan University Press (2010)

Artiemjew, P.: On strategies of knowledge granulation and applications to decision systems. Ph.D. dissertation, Polish-Japanese Institute of Information Technology. L. Polkowski, Supervisor, Warsaw (2009)

Artiemjew, P.: Natural versus granular computing: classifiers from granular structures. In: Chan, C.-C., Grzymala-Busse, J.W., Ziarko, W.P. (eds.) RSCTC 2008. LNCS, vol. 5306, pp. 150–159. Springer, Heidelberg (2008). doi:10.1007/978-3-540-88425-5_16

Breiman, L.: Arcing classifier (with discussion and a rejoinder by the author). Ann. Stat. 26(3), 801–849 (1998). Accessed 18 Jan 2015. Schapire (1990) proved that boosting is possible. (p. 823)

Devroye, L., Gyorfi, L., Lugosi, G.: A Probabilistic Theory of Pattern Recognition. Springer, New York (1996)

Hu, X.: Construction of an ensemble of classifiers based on rough sets theory and database operations. In: Proceedings of the IEEE International Conference on Data Mining (ICDM 2001) (2001)

Hu, X.: Ensembles of classifiers based on rough sets theory and set-oriented database operations. In: 2006 IEEE International Conference on Granular Computing, Atlanta, GA (2006)

Shi, L., Weng, M., Ma, X., Xi, L.: Rough set based decision tree ensemble algorithm for text classification. J. Comput. Inf. Syst. 6(1), 89–95 (2010)

Murthy, C.A., Saha, S., Pal, S.K.: Rough set based ensemble classifier. In: Kuznetsov, S.O., Ślęzak, D., Hepting, D.H., Mirkin, B.G. (eds.) RSFDGrC 2011. LNCS, vol. 6743, p. 27. Springer, Heidelberg (2011). doi:10.1007/978-3-642-21881-1_5

Nowicki, R.K., Nowak, B.A., Woźniak, M.: Application of rough sets in k nearest neighbours algorithm for classification of incomplete samples. In: Kunifuji, S., Papadopoulos, G.A., Skulimowski, A.M.J., Kacprzyk, J. (eds.) Knowledge, Information and Creativity Support Systems. AISC, vol. 416, pp. 243–257. Springer, Cham (2016). doi:10.1007/978-3-319-27478-2_17

Ohno-Machado, L.: Cross-validation and Bootstrap Ensembles, Bagging, Boosting. Harvard-MIT Division of Health Sciences and Technology. HST.951J: Medical Decision Support, Fall 2005. http://ocw.mit.edu/courses/health-sciences-and-technology/hst-951j-medical-decision-support-fall-2005/lecture-notes/hst951_6.pdf

Pawlak, Z.: Rough sets. Int. J. Comput. Inf. Sci. 11, 341–356 (1982)

Polkowski, L., Artiemjew, P.: On granular rough computing: factoring classifiers through granulated decision systems. In: Kryszkiewicz, M., Peters, J.F., Rybinski, H., Skowron, A. (eds.) RSEISP 2007. LNCS, vol. 4585, pp. 280–289. Springer, Heidelberg (2007). doi:10.1007/978-3-540-73451-2_30

Polkowski, L.: A unified approach to granulation of knowledge and granular computing based on rough mereology: a survey. In: Pedrycz, W., Skowron, A., Kreinovich, V. (eds.) Handbook of Granular Computing, pp. 375–401. Wiley, New York (2008)

Saha, S., Murthy, C.A., Pal, S.K.: Rough set based ensemble classifier for web page classification. Fundamenta Informaticae 76(1–2), 171–187 (2007)

Steinhaus, H.: Remarques sur le partage pragmatique. Annales de la Société Polonaise de Mathématique 19, 230–231 (1946)

Schapire, R.E.: The boosting approach to machine learning: an overview. In: Denison, D.D., Hansen, M.H., Holmes, C.C., Mallick, B., Yu, B. (eds.) Nonlinear Estimation and Classification. LNS, vol. 171, pp. 149–171. Springer, New York (2003)

Schapire, R.E.: A Short Introduction to Boosting (1999)

UCI Repository. http://www.ics.uci.edu/mlearn/databases

Zhou, Z.-H.: Boosting 25 years, CCL 2014 Keynote (2014)

Zhou, Z.-H.: Ensemble Methods: Foundations and Algorithms, p. 23. Chapman and Hall/CRC (2012). ISBN: 978-1439830031. The term boosting refers to a family of algorithms that are able to convert weak learners to strong learners

Acknowledgement

The research has been supported by grant 1309-802 from the Ministry of Science and Higher Education of the Republic of Poland.

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Artiemjew, P. (2017). Ensemble of Classifiers Based on Simple Granules of Knowledge. In: Damaševičius, R., Mikašytė, V. (eds) Information and Software Technologies. ICIST 2017. Communications in Computer and Information Science, vol 756. Springer, Cham. https://doi.org/10.1007/978-3-319-67642-5_28

DOI: https://doi.org/10.1007/978-3-319-67642-5_28

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-67641-8

Online ISBN: 978-3-319-67642-5

eBook Packages: Computer Science, Computer Science (R0)