Abstract

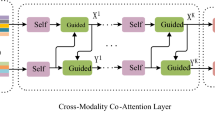

Visual Question Answering (VQA) has emerged as a prominent multidisciplinary research problem in artificial intelligence. A number of recent studies propose attention mechanisms such as visual attention (“where to look”) or question attention (“what words to listen to”), and these have proven effective for VQA. However, they focus on modeling the prediction error and ignore the semantic correlation between image attention and question attention, which inevitably results in suboptimal attentions. In this paper, we argue that, in addition to modeling visual and question attentions, it is equally important to model their semantic correlation, both to learn the two attentions jointly and to facilitate their joint representation learning for VQA. We propose a novel end-to-end model that jointly learns attentions with semantic cross-modal correlation to solve the VQA problem efficiently. Specifically, we propose a multi-modal embedding that maps the visual and question attentions into a joint space to guarantee their semantic consistency. Experimental results on benchmark datasets demonstrate that our model outperforms several state-of-the-art techniques for VQA.
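The abstract does not give the functional form of the attentions or of the joint embedding, so the following is only a minimal sketch of the general idea, under stated assumptions: dot-product attention in each modality, linear projections into a shared space, and a cosine-based correlation penalty. The names `attend`, `joint_embedding_loss`, `W_v`, `W_q`, and all dimensions are hypothetical and are not taken from the paper.

```python
import numpy as np

def softmax(x):
    # Numerically stable softmax over a 1-D score vector.
    e = np.exp(x - np.max(x))
    return e / e.sum()

def attend(features, guide):
    # Dot-product attention (an assumption): weight each row of `features`
    # by its similarity to `guide`, then return the weighted sum and weights.
    weights = softmax(features @ guide)
    return weights @ features, weights

def joint_embedding_loss(v_att, q_att, W_v, W_q):
    # Project both attended vectors into a shared space and penalise low
    # cosine similarity. This is a hypothetical stand-in for a
    # semantic-correlation term, not the paper's actual objective.
    zv, zq = W_v @ v_att, W_q @ q_att
    cos = zv @ zq / (np.linalg.norm(zv) * np.linalg.norm(zq) + 1e-8)
    return 1.0 - cos

rng = np.random.default_rng(0)
d, k = 16, 8                        # feature size, joint-space size (illustrative)
regions = rng.normal(size=(14, d))  # 14 image-region features (random placeholders)
words = rng.normal(size=(6, d))     # 6 question-word features (random placeholders)

# Each modality guides attention over the other via its mean feature.
v_att, v_w = attend(regions, words.mean(axis=0))
q_att, q_w = attend(words, regions.mean(axis=0))

W_v = rng.normal(size=(k, d))       # visual projection into the joint space
W_q = rng.normal(size=(k, d))       # question projection into the joint space
corr_loss = joint_embedding_loss(v_att, q_att, W_v, W_q)
```

In a trainable model, a term like `corr_loss` would presumably be added to the answer-prediction loss so that both attentions are optimised together; how the two terms are weighted is not stated in the abstract.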

Acknowledgment

This work was supported in part by the National Natural Science Foundation of China under Projects 61502080 and 61632007, and by the Fundamental Research Funds for the Central Universities under Projects ZYGX2016J085 and ZYGX2014Z007.

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Cao, L., Gao, L., Song, J., Xu, X., Shen, H.T. (2017). Jointly Learning Attentions with Semantic Cross-Modal Correlation for Visual Question Answering. In: Huang, Z., Xiao, X., Cao, X. (eds) Databases Theory and Applications. ADC 2017. Lecture Notes in Computer Science, vol 10538. Springer, Cham. https://doi.org/10.1007/978-3-319-68155-9_19

DOI: https://doi.org/10.1007/978-3-319-68155-9_19

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-68154-2

Online ISBN: 978-3-319-68155-9

eBook Packages: Computer Science, Computer Science (R0)