Abstract

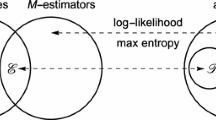

We discuss an information-geometric framework for a regression model in which the regression function is accompanied by the predictor function and the conditional density function. We introduce the e-geodesic and the m-geodesic on the space of all predictor functions; this pair of geodesics leads to a Pythagorean identity for a right triangle spanned by the two geodesics. Further, we discuss statistical modeling that combines predictor functions in a nonlinear fashion by means of a generalized average, and in particular we observe the flexibility of the log-exp average.
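The abstract does not spell out the log-exp average, so the following is only a minimal sketch under an assumption: that the generalized average is the quasi-arithmetic mean phi^{-1}(sum_k w_k phi(f_k)) of predictor values f_k with weights w_k, and that the log-exp average uses the generator phi(f) = exp(tau * f), giving (1/tau) * log(sum_k w_k * exp(tau * f_k)). The paper's exact parametrization may differ. The function name `log_exp_average` and the parameter `tau` are hypothetical names chosen for illustration. The sketch shows the kind of flexibility the abstract refers to: as tau moves from large negative to large positive values, the combination moves from near the minimum, through the weighted arithmetic mean at tau = 0, to near the maximum of the combined predictor values.

```python
import math
from typing import Sequence


def log_exp_average(values: Sequence[float], weights: Sequence[float], tau: float) -> float:
    """Hypothetical weighted log-exp average of predictor values.

    Assumed form: (1/tau) * log(sum_k w_k * exp(tau * f_k)).
    Weights are assumed nonnegative, summing to 1, with at least one positive.
    tau == 0 is treated as its limit, the weighted arithmetic mean.
    """
    if len(values) != len(weights):
        raise ValueError("values and weights must have the same length")
    if tau == 0.0:
        # Limiting case tau -> 0: weighted arithmetic mean.
        return sum(w * f for w, f in zip(weights, values))
    # Log-sum-exp trick: shift by the largest tau * f_k among positive-weight
    # terms so that exp() does not overflow.
    m = max(tau * f for w, f in zip(weights, values) if w > 0)
    s = sum(w * math.exp(tau * f - m) for w, f in zip(weights, values) if w > 0)
    return (m + math.log(s)) / tau


# Combining two predictor values f1(x) = 1.0 and f2(x) = 3.0 at one point x.
for tau in (-50.0, -1.0, 0.0, 1.0, 50.0):
    print(tau, log_exp_average([1.0, 3.0], [0.5, 0.5], tau))
```

Applied pointwise in x, the same function combines whole predictor functions; tau then acts as a single knob between min-like, mean-like, and max-like combinations.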

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Eguchi, S., Omae, K. (2017). Information Geometry of Predictor Functions in a Regression Model. In: Nielsen, F., Barbaresco, F. (eds) Geometric Science of Information. GSI 2017. Lecture Notes in Computer Science, vol 10589. Springer, Cham. https://doi.org/10.1007/978-3-319-68445-1_65

DOI: https://doi.org/10.1007/978-3-319-68445-1_65

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-68444-4

Online ISBN: 978-3-319-68445-1

eBook Packages: Computer Science, Computer Science (R0)