Abstract

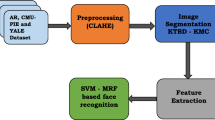

The automatic estimation of gender and facial expression is an important task in many applications. In this context, we believe that an accurate segmentation of the human face can provide useful information about these mid-level features, because of the strong interaction between facial parts and these features. Following this idea, in this paper we present a gender and facial expression estimator based on a semantic segmentation of the human face into six parts. The proposed algorithm works in several steps. First, a database of face images was manually labeled to train a discriminative model. Then, three kinds of features, namely location, shape and color, were extracted from uniformly sampled square patches. Using the trained model, facial images were then segmented into six semantic classes (hair, skin, nose, eyes, mouth, and background) with a Random Decision Forest (RDF) classifier. In the final step, a linear Support Vector Machine (SVM) classifier was trained for each considered mid-level feature (i.e., gender and expression) using the corresponding probability maps. The performance of the proposed algorithm was evaluated on two face databases, FEI and FERET. The experimental results show that the proposed algorithm is competitive with, and in most cases outperforms, the state of the art.

1 Introduction

Human Face Segmentation (HFS) has recently been an active research area in the computer vision and multimedia signal processing communities. Face segmentation plays a crucial role in many face-related applications, such as face tracking, human-computer interaction, face recognition, facial expression analysis, and head pose estimation. Face analysis can also be useful for estimating shot scale [1], and expression recognition can support the affective characterization of shots [2]. All these applications, and many others, can perform better if a properly segmented face is provided as input. However, variations in illumination and viewing angle, complex color backgrounds, different facial expressions and head rotation make face segmentation a challenging task.

Researchers have addressed many complicated segmentation problems with semantic segmentation techniques. Semantic segmentation has been studied extensively in the context of the PASCAL VOC challenge [3]. Huang et al. [4] performed face segmentation and pose estimation jointly. They argued that attributes such as pose, gender and expression can be predicted easily if a well-segmented face is provided as input. They used a database of 100 images with three simple poses: frontal, right profile and left profile. Their algorithm segmented facial images into three semantic classes (skin, hair and background), and the head pose was then estimated from the segmented image. The psychology literature also supports their claim, since facial features are highly informative for the human visual system when recognizing face identity [5, 6]. More recently, some attention has also been given to approaches that use Convolutional Neural Networks (see, for example, [7]).

In previous works, we investigated the HFS problem [8] and the problem of Head Pose Estimation (HPE) [9]. Unlike earlier approaches, which consider only a few classes, we extended the label set to six semantic classes. We also performed preliminary experiments on a small database of high-resolution frontal images, using position, color and shape features to build a discriminative model. The resulting classifier returned a probability value and a class label for each pixel of the test image, and it achieved reasonable pixel-wise accuracy.

In this paper, we propose and test an algorithm that segments a human face image into six semantic classes using an RDF. It then uses the segmented image to address Gender Classification (GC) and Expression Classification (EC) with an SVM. In more detail, the proposed algorithm works in a few steps. First, a database of face images was manually labeled to train a discriminative model. Then, three kinds of features, namely location, shape and color, were extracted from uniformly sampled square patches. Next, using the trained model, facial images were segmented into six semantic classes (hair, skin, nose, eyes, mouth, and background) with the RDF classifier. Finally, a linear SVM classifier was trained for each considered mid-level feature (i.e., gender and expression) using the corresponding probability maps. The reported results are encouraging and confirm that the extracted information is very helpful for predicting the considered mid-level features.

The paper is structured as follows. In Sect. 2 we briefly review previous work on face segmentation and related applications. In Sect. 3 we describe the HFS algorithm used. In Sect. 4 we propose a framework that uses the segmentation results to address the GC and EC problems. Section 5 describes the experimental setup and the databases used. In Sect. 6 we discuss the results obtained. Final remarks are given in Sect. 7.

2 Related Works

Face labeling provides a robust representation by assigning a semantic label to each pixel of the face image. Several authors have proposed algorithms to assign semantic labels to the different facial parts. Previous algorithms in the HFS literature consider either three or four classes. Three-class labeling methods, e.g., [4, 10,11,12,13,14], assign a semantic label to three prominent parts: skin, hair, and background. Four-class labeling algorithms [15,16,17] add a label for clothes to skin, hair, and background.

Compared to the state of the art, in [8] we proposed a unified approach that produces a more complete labeling of facial parts. We also created a database of manually labeled images; the manual labeling was performed with great care using Photoshop. An RDF classifier was trained on location, color and shape features extracted from square patches. The best configuration was found by varying the parameters of the extracted features. The output of the trained model is an image segmented into six class labels: skin, nose, hair, mouth, eyes, and background.

Many methods have been proposed to solve the GC and EC problems. As with HPE, GC and EC methods fall into two categories: appearance-based and geometric-based approaches. In the GC research community, "geometric-based" is simply the name used for what HPE researchers call model-based methods. Both GC and EC methods use Active Shape Models (ASM) [18] to locate facial landmarks. A feature vector is built from these landmarks, and a machine learning tool is used for training and prediction. However, ASM may fail to locate the landmarks under changing lighting conditions or with overly complicated facial expressions. ASM performance also degrades as the head pose changes from frontal to extreme profile.

The state of the art shows that the three problems HPE, GC, and EC have been handled separately. To the best of our knowledge, Huang et al. [4] is the only work that combined HFS and HPE. That work used a database with only three simple poses, so discriminating between consecutive poses was comparatively easy. We believe that if good information is extracted from the human face, all three problems (HPE, GC, and EC) can be addressed in a single framework. We therefore extended our labeled dataset to six semantic classes. We used the challenging Pointing’04 database, where the difference between two consecutive poses is just \(15^{\circ }\). We also extended the framework to perform GC and EC. The GC task was performed using both smiling and neutral images, which shows that changes in facial expression have little effect on the performance of the proposed algorithm. The experimental results show that the proposed algorithm not only gives good results for GC, but also outperforms the state of the art for EC on the standard face databases FEI [19] and FERET [20].

3 The Face Segmentation Algorithm

The presentation of the adopted face segmentation algorithm is divided into two parts. Section 3.1 describes the features extracted from training images and how the label is assigned to each pixel. Section 3.2 describes how the classification is performed, and which classification strategy was used.

3.1 Feature Extraction

Most segmentation algorithms work at the pixel or super-pixel level. We used a pixel-based approach, with square patches as processing primitives. Patches were extracted from training and test images with a fixed step size. Each patch is classified, and the predicted class label is transferred to the center pixel of the patch.
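The patch sampling described above can be sketched as follows. This is a minimal illustration that assumes a NumPy image array. The helper name `extract_patches` and the default step of 8 pixels are hypothetical, since the text states only that a fixed step is used. Skipping patches that would cross the image border is also an assumption.

```python
import numpy as np


def extract_patches(image, patch_size=16, step=8):
    """Yield (center_x, center_y, patch) for square patches on a regular grid.

    Hypothetical helper: patch_size and step are illustrative defaults.
    Patches that would extend past the image border are skipped.
    """
    height, width = image.shape[:2]
    half = patch_size // 2
    for top in range(0, height - patch_size + 1, step):
        for left in range(0, width - patch_size + 1, step):
            patch = image[top:top + patch_size, left:left + patch_size]
            # The label predicted for this patch is later assigned to its center pixel.
            yield left + half, top + half, patch
```

The same function can be called with `patch_size=16` for the color features and `patch_size=64` for the HOG features. Note that border handling then differs between the two sizes.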

To capture the spatial relationships between different classes, the relative location of the pixel was used as a feature. The coordinates of the central pixel of each patch were extracted. The relative location of the central pixel at position (x, y) was represented as:

\[ f_{loc} = \left( \frac{x}{W}, \frac{y}{H} \right) \in R^{2}, \]

where W is the width and H is the height of the image.
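The following small sketch assumes that the relative location is the central pixel's coordinates normalized by the image size, \(f_{loc} = (x/W, y/H)\). That is our reading of the text, and the function name is hypothetical.

```python
def relative_location(x, y, width, height):
    """Relative location f_loc = (x / W, y / H) of a patch's central pixel.

    Assumed form of the feature: pixel coordinates normalized by the image
    width W and height H, giving a 2-dimensional vector in [0, 1] x [0, 1].
    """
    if width <= 0 or height <= 0:
        raise ValueError("image width and height must be positive")
    return (x / width, y / height)
```

Normalizing by W and H makes the feature comparable across images of different resolution. For example, `relative_location(50, 100, 200, 400)` and `relative_location(100, 200, 400, 800)` give the same value.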

Color features were extracted from patches in the HSV color space. A single color feature vector was created by concatenating the Hue, Saturation and Value histograms. We noted that the patch size and the number of bins have a large impact on the performance of the segmentation algorithm. In previous experiments [8] we investigated the best settings for these two parameters. We concluded that a \(16 \times 16\) patch with 32 bins per channel is a good combination for our work. With these values, the feature vector generated for each patch is \(f_{HSV}^{32} \in R^{96}\).
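A minimal sketch of the 96-dimensional color descriptor, assuming an RGB patch with values in [0, 1]. It uses `colorsys.rgb_to_hsv` from the Python standard library, which converts one pixel at a time and returns H, S and V in [0, 1]. Normalizing each histogram to unit sum is our assumption; the text does not say whether raw counts or normalized histograms were used.

```python
import colorsys

import numpy as np


def hsv_histogram(patch_rgb, bins=32):
    """Concatenated H, S and V histograms of an RGB patch with values in [0, 1].

    With bins=32 the result has 3 * 32 = 96 entries, matching f_HSV in R^96.
    Each channel histogram is normalized to sum to 1 (an assumption).
    """
    pixels = np.asarray(patch_rgb, dtype=float).reshape(-1, 3)
    # Convert every pixel to HSV; colorsys returns h, s, v in [0, 1].
    hsv = np.array([colorsys.rgb_to_hsv(*map(float, p)) for p in pixels])
    channels = []
    for c in range(3):
        counts, _ = np.histogram(hsv[:, c], bins=bins, range=(0.0, 1.0))
        channels.append(counts / counts.sum())
    return np.concatenate(channels)
```

For a \(16 \times 16\) patch this loops over 256 pixels, which is fine for a sketch. A vectorized conversion would be preferable for full images.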

To extract shape information, we used Histogram of Oriented Gradients (HOG) [21] features, transforming each patch into the HOG feature space. As for the HSV color features, we investigated the appropriate patch size for HOG. The best results were obtained with a \(64 \times 64\) patch. With this patch size, the feature vector created for each patch is \(f_{HOG}^{64 \times 64} \in R^{1764}\).
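The reported 1764 dimensions are consistent with the standard Dalal-Triggs HOG layout: 8 × 8-pixel cells, 2 × 2-cell blocks with a one-cell stride, and 9 orientation bins. The paper does not list these parameters, so they are assumptions here. The following arithmetic sketch shows where the number comes from. The same settings correspond to `orientations=9`, `pixels_per_cell=(8, 8)`, `cells_per_block=(2, 2)` in scikit-image's `skimage.feature.hog`.

```python
def hog_length(patch_size=64, cell=8, block_cells=2, block_stride_cells=1, orientations=9):
    """Length of a Dalal-Triggs style HOG descriptor for a square patch.

    All parameter defaults are assumed standard settings, not values from the paper.
    """
    cells_per_side = patch_size // cell                                         # 64 / 8 = 8
    blocks_per_side = (cells_per_side - block_cells) // block_stride_cells + 1  # 8 - 2 + 1 = 7
    values_per_block = block_cells * block_cells * orientations                 # 2 * 2 * 9 = 36
    return blocks_per_side * blocks_per_side * values_per_block                 # 7 * 7 * 36 = 1764
```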

The three features (spatial prior, HSV color and HOG) were then concatenated to form a single feature vector \(f^I \in R^{1862}\) (2 + 96 + 1764 = 1862), where \(f^I\) is the feature vector for patch I(x, y).
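The concatenation can be sketched with NumPy as follows. The two-dimensional location feature is an assumption: it is the size that makes 2 + 96 + 1764 add up to the reported 1862. The helper name is hypothetical.

```python
import numpy as np

# Per-patch feature sizes; LOC_DIM = 2 assumes f_loc = (x / W, y / H).
LOC_DIM, HSV_DIM, HOG_DIM = 2, 96, 1764


def patch_feature(f_loc, f_hsv, f_hog):
    """Concatenate location, HSV color and HOG shape features into f^I."""
    parts = [np.asarray(f, dtype=float).ravel() for f in (f_loc, f_hsv, f_hog)]
    expected = (LOC_DIM, HSV_DIM, HOG_DIM)
    sizes = tuple(p.size for p in parts)
    # Reject features computed with different settings instead of mixing them silently.
    if sizes != expected:
        raise ValueError(f"expected feature sizes {expected}, got {sizes}")
    return np.concatenate(parts)
```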

3.2 Classification

An RDF classifier was then trained using the ALGLIB [22] implementation. The trained RDF classifier predicts a probability for each class at each pixel. Each pixel is assigned the class label with maximum probability:

\[ \hat{c} = \arg\max_{c \in C} \; P(c \mid \mathbf L , \mathbf C , \mathbf S ), \]

where \(C = \{skin, background, eye, nose, mouth, hair \}\) and \(\mathbf L \), \(\mathbf C \), and \(\mathbf S \) are random variables for the features \(f_{loc}\) (relative location), \(f_{HSV}\) (HSV color), and \(f_{HOG}\) (shape information), respectively.
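The maximum-probability decision rule takes one line once per-pixel class probabilities are available. The sketch below assumes they are stored in an array of shape (H, W, 6), for example the per-class outputs of the trained forest. It also assumes the channels follow the order in which C is listed above. It does not reproduce the ALGLIB API.

```python
import numpy as np

# Class order assumed to match the set C as listed in the text.
CLASSES = ("skin", "background", "eye", "nose", "mouth", "hair")


def label_map(prob):
    """Assign each pixel the class with maximum probability.

    prob has shape (H, W, 6); prob[y, x, k] approximates P(c_k | L, C, S) at pixel (x, y).
    Returns an (H, W) array of class indices into CLASSES.
    """
    prob = np.asarray(prob)
    if prob.ndim != 3 or prob.shape[2] != len(CLASSES):
        raise ValueError(f"expected an array of shape (H, W, {len(CLASSES)})")
    return np.argmax(prob, axis=2)
```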

4 The Proposed Gender and Expression Classification Algorithms

Rather than keeping only the most likely class, we used probabilistic classification to obtain a probability map for each class from the HFS algorithm. Given the set C of classes, we created a probability map for each semantic class \(c \in C\), denoted \(P_{skin}\), \(P_{hair}\), \(P_{eyes}\), \(P_{nose}\), \(P_{mouth}\), and \(P_{back}\). The probability maps were computed by converting the probability of each pixel into a gray-scale image. In each map, higher intensity means a higher probability of the corresponding class at that position. Figure 1 shows two images from the Pointing’04 database and their probability maps for five classes. We investigated thoroughly which probability maps are most helpful for GC and EC, and built a feature vector from those maps. A separate binary linear SVM classifier was then trained on this feature vector for each of GC and EC. The flow of the proposed algorithm is described in Table 1.

Fig. 1. Frontal images (Pointing’04 database): column 1: original images; column 2: ground truth images; column 3: images segmented by the proposed algorithm; column 4: probability map for skin; column 5: probability map for hair; column 6: probability map for mouth; column 7: probability map for eyes; column 8: probability map for nose.
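The conversion from per-pixel probabilities to gray-scale probability maps can be sketched as follows. The input is assumed to be an (H, W, K) probability array with one channel per class. The linear scaling of [0, 1] to the 8-bit range 0..255 is our assumption; the text says only that probabilities were converted to gray-scale images.

```python
import numpy as np


def probability_maps(prob, classes):
    """Convert per-pixel class probabilities into 8-bit gray-scale maps.

    prob: array of shape (H, W, K) with values in [0, 1], one channel per class.
    Returns a dict mapping each class name to an (H, W) uint8 image in which
    brighter pixels mean a higher probability for that class.
    """
    prob = np.clip(np.asarray(prob, dtype=float), 0.0, 1.0)
    if prob.ndim != 3 or prob.shape[2] != len(classes):
        raise ValueError("prob must have shape (H, W, len(classes))")
    gray = np.rint(prob * 255).astype(np.uint8)  # linear scaling to 0..255 (assumption)
    return {name: gray[:, :, k] for k, name in enumerate(classes)}
```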

4.1 Gender Classification

Some facial features play a key role in gender discrimination: eyes, nose, mouth, hair, and forehead. Locating these features is easy for humans but challenging for machines. Our segmentation algorithm provides reasonable information about these differences: the proposed HFS algorithm segments a facial image into six parts (background, nose, mouth, eyes, skin, and hair). After a large number of experiments, we found that four parts play a key role in differentiating male and female faces: skin, nose, eyes and hair. Below we outline how these parts help in gender classification when a fully segmented image is available.

The gender classification literature reports that the male forehead is larger than the female one. Two factors enlarge the skin region in male faces. First, the male hairline is usually further back than the female hairline, and in some cases it is missing entirely. Second, the male neck is comparatively thicker and covers a larger area. Since the forehead is labeled as skin by our HFS algorithm, skin pixels cover a larger area in male faces, and the skin probability maps of male subjects are correspondingly brighter. Figure 2 compares the probability maps of two subjects from the FERET database (first row female, second row male).

Expression differentiation. Column 1: original database images; column 2: images segmented by the proposed segmentation algorithm; column 3: probability maps for mouth; column 4: original database images; column 5: images segmented by the proposed segmentation algorithm; column 6: probability maps for mouth.

From our segmentation results, we noted that male eyes are segmented more accurately than female eyes. Female eyelashes are usually longer and curl outwards, and our HFS algorithm often misclassifies them as hair. As a result, eye pixels are predicted with lower probability. Male eyelashes are shorter and hardly visible, so better segmentation results are produced (see Fig. 3, column 3). We also noted that the female nose is smaller, with a smaller bridge and ridge [23], whereas the male nose is comparatively larger and wider. The usual explanation is that male body mass is comparatively larger, which requires larger lungs and a wider passage for air supply. Male nostrils are therefore usually bigger, resulting in a comparatively big nose (e.g., Fig. 3, column 4).

Hairstyle is another effective feature for gender discrimination, as it provides highly discriminative clues. In earlier work, hair was not used as a feature because of its complicated geometry, which varies from person to person. Our segmentation algorithm achieves 95.14% pixel-wise accuracy for hair and localizes the hair region well. Eyebrows sometimes also help in discrimination; in our HFS algorithm they share the hair class label. In general, female eyebrows are thinner and longer, whereas male eyebrows are shorter and wider.

The mouth can also make a face look more masculine or feminine. Female lips are usually more distinct than male lips, and in some male faces the upper lip is not visible at all. However, our segmentation algorithm does not capture these differences, and we did not observe any increase in classification rate when using the mouth. We trained an RDF classifier to create a feature vector for gender discrimination.
We manually labeled 15 images of each gender from each database to train the classifier. The probabilistic classification approach was used to obtain probability maps for each of the four facial features: skin, nose, hair, and eyes. These probability maps were concatenated to form a single feature vector for each training image. A binary linear SVM classifier was then trained to discriminate between the two classes.
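The construction of the per-image GC feature vector can be sketched as follows. The four maps are flattened and concatenated in a fixed order, and one such vector per image would then be stacked into the training matrix for the linear SVM. We assume that all face images are aligned and resized to a common shape, so every vector has the same length. The text does not describe this step, and the names are hypothetical.

```python
import numpy as np

# Probability maps reported in the text as most useful for gender classification.
GC_MAPS = ("skin", "nose", "hair", "eyes")


def gender_feature(maps):
    """Flatten and concatenate the skin, nose, hair and eyes probability maps.

    maps: dict from class name to a 2-D probability map. All maps are assumed
    to share one shape (aligned, resized faces), so feature lengths match.
    """
    shapes = {np.shape(maps[name]) for name in GC_MAPS}
    if len(shapes) != 1:
        raise ValueError("all probability maps must have the same shape")
    return np.concatenate([np.asarray(maps[name], dtype=float).ravel() for name in GC_MAPS])
```

The mouth and background maps are deliberately left out, in line with the observation above that the mouth did not improve the classification rate.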

4.2 Expression Classification

We believe that the movement of some facial parts plays a decisive role in identifying certain facial expressions. For smiling and neutral faces, the movement of the mouth alone is enough to decide whether a face is smiling or neutral. When a face smiles, the mouth moves more and its pixels cover a larger area. For a neutral expression, the mouth pixels stay within a small area with limited movement. However, this does not hold for more complex facial expressions, where multiple movements of facial parts must be analyzed to tell expressions apart. After some experiments, we decided to use the mouth probability map for expression discrimination.
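To make the intuition behind this choice concrete, the toy sketch below measures how much of the image a mouth probability map covers. It is only an illustration. The 0.5 threshold and the helper name are hypothetical, and the method described here feeds the whole mouth probability map to the SVM rather than a single area value.

```python
import numpy as np


def mouth_area_fraction(mouth_map, threshold=0.5):
    """Fraction of pixels whose mouth probability exceeds a threshold.

    Illustrates that a smiling mouth tends to cover a larger area than a
    neutral one. The threshold is a hypothetical value.
    """
    mouth_map = np.asarray(mouth_map, dtype=float)
    return float((mouth_map > threshold).mean())
```

On two synthetic 10 × 10 maps, a 2 × 4 "neutral" mouth region gives 0.08 and a 3 × 7 "smiling" region gives 0.21.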

We applied our expression classification algorithm to two simple facial expressions: smiling and neutral. Experiments were carried out on two standard face databases, FEI and FERET. We manually labeled 15 smiling and 15 neutral images from each database and used them to train an RDF classifier. Each test image is then passed to the trained RDF classifier, and the HFS algorithm produces its mouth probability map. The mouth probability map is used as the feature vector to train a binary SVM classifier.
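The text does not say which SVM solver was used. As a self-contained stand-in, the sketch below trains a binary linear SVM with the Pegasos stochastic sub-gradient method on features such as flattened mouth probability maps. The regularization value, number of epochs and label convention (-1 = neutral, +1 = smiling) are illustrative assumptions. In practice a library solver such as scikit-learn's `LinearSVC` would be a natural choice.

```python
import numpy as np


def _with_bias(X):
    """Append a constant 1 feature so the bias is learned as an extra weight."""
    X = np.asarray(X, dtype=float)
    return np.hstack([X, np.ones((X.shape[0], 1))])


def train_linear_svm(X, y, lam=0.01, epochs=50, seed=0):
    """Linear SVM trained with the Pegasos stochastic sub-gradient method.

    X: (n, d) features, e.g. flattened mouth probability maps (one row per image).
    y: labels in {-1, +1}, e.g. -1 = neutral, +1 = smiling.
    Minimizes lam/2 * ||w||^2 + mean hinge loss (the bias is regularized too).
    """
    Xb = _with_bias(X)
    y = np.asarray(y, dtype=float)
    rng = np.random.default_rng(seed)
    w = np.zeros(Xb.shape[1])
    t = 0
    for _ in range(epochs):
        for i in rng.permutation(len(y)):
            t += 1
            eta = 1.0 / (lam * t)                # Pegasos step size
            violated = y[i] * (Xb[i] @ w) < 1.0  # is the hinge loss active for this sample?
            w *= 1.0 - eta * lam                 # shrink: gradient of the L2 term
            if violated:
                w += eta * y[i] * Xb[i]          # sub-gradient of the hinge loss
    return w


def predict(w, X):
    """Return +1 / -1 predictions of the linear classifier w."""
    return np.where(_with_bias(X) @ w >= 0.0, 1, -1)
```

Appending a constant feature keeps the update rule uniform. The cost is that the bias is also regularized, which differs slightly from the textbook SVM formulation.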

5 Experimental Setup

5.1 Gender and Expression Classification

To evaluate the performance of the gender and expression classification algorithms, we used two databases, FEI and FERET. The FEI database contains 200 subjects (100 male and 100 female). Each person has two frontal images, one with a smiling and one with a neutral expression. We used both images for each person, for a total of 400 images. The FEI dataset is divided into two sessions, FEI A and FEI B: all neutral-expression images are in FEI A and all smiling-expression images are in FEI B. We used both FEI A and FEI B for the gender and expression tests. Similarly, 400 images from the FERET database were used for the gender and expression tests. To measure classification performance, a 10-fold cross-validation protocol was adopted for both GC and EC. The results were averaged over the folds and are reported in the paper.
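The evaluation protocol can be sketched as 10-fold cross-validation that averages the Classification Rate (CR), the fraction of correctly classified test images, over the folds. Plain random folds are an assumption; the text does not say whether folds were stratified or kept the two images of a subject together. The `fit` and `predict` arguments are placeholders for any classifier.

```python
import numpy as np


def classification_rate(y_true, y_pred):
    """CR: fraction of test images whose predicted label matches the ground truth."""
    return float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))


def k_fold_indices(n, k=10, seed=0):
    """Shuffle indices 0..n-1 and split them into k disjoint, nearly equal folds."""
    rng = np.random.default_rng(seed)
    return np.array_split(rng.permutation(n), k)


def cross_validate(X, y, fit, predict, k=10, seed=0):
    """Average CR over k folds; fit(X, y) -> model and predict(model, X) -> labels."""
    X, y = np.asarray(X), np.asarray(y)
    folds = k_fold_indices(len(y), k, seed)
    rates = []
    for i, test_idx in enumerate(folds):
        # Train on the other k - 1 folds and test on fold i.
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        model = fit(X[train_idx], y[train_idx])
        rates.append(classification_rate(y[test_idx], predict(model, X[test_idx])))
    return float(np.mean(rates))
```

With 400 images per database, each fold holds 40 test images.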

6 Discussion of Results

6.1 Gender Recognition

Although FEI and FERET are large databases, we performed our experiments only on frontal images. The system is evaluated by its Classification Rate (CR). This is a practical, deployment-oriented criterion: the fraction of test images that the framework classifies correctly. Figure 4 shows our results and compares them with the state of the art; the reported results and comparisons in the figure all refer to the same set of images. A large body of literature exists, especially on the FERET database, with different image settings. Rai et al. [24] used the same set of images, but with a 2-fold validation protocol. As Fig. 4 shows, the classification results achieved with the proposed algorithm are better than those previously reported, except in one case (FEI database), where [25] performs better. The proposed gender recognition algorithm is robust to illumination and expression variations; in particular, both neutral and smiling images were used in our experiments. However, further investigation is needed to determine how much the CR drops under pose changes or with more complicated facial expressions.

6.2 Expression Recognition

The same FEI and FERET databases were used for training and testing, with ten-fold cross-validation and CR as the evaluation criterion. We obtained an average CR of 96.25% on FEI and 83.25% on FERET. To our knowledge, these are the best results to date on the same set of images from these databases. Figure 5 compares our results with previously reported results.

7 Conclusions

In this paper we have proposed a framework that addresses the challenging problems of gender and expression classification. A face segmentation algorithm segments a facial image into six semantic classes. A probabilistic classification strategy then generates a probability map for each semantic class. A series of experiments was carried out to identify which probability maps are most useful for gender and expression classification. The selected probability maps are used to build a feature vector and train a linear SVM classifier for each case. The proposed methods have been compared with the state of the art on the standard face databases FEI and FERET, with favorable results.

Several promising directions can be pursued in future work, and we are considering two of them. First, we believe that our segmentation results provide sufficient information about various hidden variables of the face and offer a route towards solving difficult recognition problems. Our plans include adding age estimation and race classification to the architecture. Second, the current segmentation model can be improved to obtain near-perfect segmentation results.

References

[1] Benini, S., Svanera, M., Adami, N., Leonardi, R., Kovács, A.B.: Shot scale distribution in art films. Multimedia Tools Appl. 75(23), 16499–16527 (2016)

[2] Canini, L., Benini, S., Leonardi, R.: Affective analysis on patterns of shot types in movies. In: 2011 7th International Symposium on Image and Signal Processing and Analysis (ISPA), pp. 253–258. IEEE (2011)

[3] Everingham, M., Van Gool, L., Williams, C.K., Winn, J., Zisserman, A.: The Pascal visual object classes (VOC) challenge. Int. J. Comput. Vis. 88(2), 303–338 (2010)

[4] Huang, G.B., Narayana, M., Learned-Miller, E.: Towards unconstrained face recognition. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, CVPRW 2008, pp. 1–8. IEEE (2008)

[5] Davies, G., Ellis, H., Shepherd, J.: Perceiving and Remembering Faces. Academic Press, Cambridge (1981)

[6] Sinha, P., Balas, B., Ostrovsky, Y., Russell, R.: Face recognition by humans: nineteen results all computer vision researchers should know about. Proc. IEEE 94(11), 1948–1962 (2006)

[7] Levi, G., Hassner, T.: Age and gender classification using convolutional neural networks. In: Computer Vision and Pattern Recognition (CVPR) (2015)

[8] Khan, K., Mauro, M., Leonardi, R.: Multi-class semantic segmentation of faces. In: 2015 International Conference on Image Processing, ICIP 2015, vol. 1, pp. 63–66. IEEE (2015)

[9] Khan, K., Mauro, M., Migliorati, P., Leonardi, R.: Head pose estimation through multi-class face segmentation. In: Proceedings of ICME-2017 (2017, to appear)

[10] Yacoob, Y., Davis, L.S.: Detection and analysis of hair. IEEE Trans. Pattern Anal. Mach. Intell. 28(7), 1164–1169 (2006)

[11] Lee, C., Schramm, M.T., Boutin, M., Allebach, J.P.: An algorithm for automatic skin smoothing in digital portraits. In: Proceedings of the 16th IEEE International Conference on Image Processing, pp. 3113–3116. IEEE Press (2009)

[12] Lafferty, J., McCallum, A., Pereira, F.C.: Conditional random fields: probabilistic models for segmenting and labeling sequence data (2001)

[13] Kae, A., Sohn, K., Lee, H., Learned-Miller, E.: Augmenting CRFs with Boltzmann machine shape priors for image labeling. In: 2013 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 2019–2026. IEEE (2013)

[14] Eslami, S.A., Heess, N., Williams, C.K., Winn, J.: The shape Boltzmann machine: a strong model of object shape. Int. J. Comput. Vis. 107(2), 155–176 (2014)

[15] Li, Y., Wang, S., Ding, X.: Person-independent head pose estimation based on random forest regression. In: 2010 17th IEEE International Conference on Image Processing (ICIP), pp. 1521–1524. IEEE (2010)

[16] Scheffler, C., Odobez, J.-M.: Joint adaptive colour modelling and skin, hair and clothing segmentation using coherent probabilistic index maps. In: British Machine Vision Conference (BMVC) (2011)

[17] Ferrara, M., Franco, A., Maio, D.: A multi-classifier approach to face image segmentation for travel documents. Expert Syst. Appl. 39(9), 8452–8466 (2012)

[18] Cootes, T.F., Taylor, C.J., Cooper, D.H., Graham, J.: Active shape models: their training and application. Comput. Vis. Image Underst. 61(1), 38–59 (1995)

[19] Centro Universitário da FEI: FEI face database. http://www.fei.edu.br/~cet/facedatabase.html

[20] Phillips, P.J., Wechsler, H., Huang, J., Rauss, P.J.: The FERET database and evaluation procedure for face-recognition algorithms. Image Vis. Comput. 16(5), 295–306 (1998)

[21] Dalal, N., Triggs, B.: Histograms of oriented gradients for human detection. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR 2005, vol. 1, pp. 886–893. IEEE (2005)

[22] Bochkanov, S.: ALGLIB. http://www.alglib.net

[23] Enlow, D.H., Moyers, R.E.: Handbook of Facial Growth. WB Saunders Company, Philadelphia (1982)

[24] Rai, P., Khanna, P.: An illumination, expression, and noise invariant gender classifier using two-directional 2DPCA on real Gabor space. J. Vis. Lang. Comput. 26, 15–28 (2015)

[25] Thomaz, C., Giraldi, G., Costa, J., Gillies, D.: A Priori-Driven PCA. In: Park, J.-I., Kim, J. (eds.) ACCV 2012. LNCS, vol. 7729, pp. 236–247. Springer, Heidelberg (2013). doi:10.1007/978-3-642-37484-5_20

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Khan, K., Mauro, M., Migliorati, P., Leonardi, R. (2017). Gender and Expression Analysis Based on Semantic Face Segmentation. In: Battiato, S., Gallo, G., Schettini, R., Stanco, F. (eds) Image Analysis and Processing - ICIAP 2017. ICIAP 2017. Lecture Notes in Computer Science, vol 10485. Springer, Cham. https://doi.org/10.1007/978-3-319-68548-9_4

DOI: https://doi.org/10.1007/978-3-319-68548-9_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-68547-2

Online ISBN: 978-3-319-68548-9

eBook Packages: Computer Science, Computer Science (R0)