Abstract

Online resource provisioning of applications in the cloud is challenging due to the variable nature of workloads and the interference caused by sharing resources. Current on-demand scaling is based on manually configured thresholds that cannot capture the dynamics of applications and virtual infrastructure. This results in slow responses or inaccurate provisioning that lead to unfulfilled service level objectives (SLOs). More automated approaches, in turn, use fixed model structures and feedback loops to control key performance indicators (KPIs). However, workload surges and the non-linear behavior of software (e.g. overload control) make the control mechanisms vulnerable to rapid variations, eventually leading to oscillatory or unstable elasticity. In this paper we introduce RobOps, a robust control system for automated resource provisioning in the cloud. RobOps incorporates online system identification (SID) to dynamically model the application and detect variations in the underlying hardware/software. Our framework combines feedforward/feedback control with prompt response to achieve reference performance. The feedforward control compensates for delays in the scaling mechanism and provides robustness to workload surges. We validate RobOps performance using an enterprise communication service. Compared to baseline approaches, RobOps achieves 2X fewer SLO violations in case of traffic surges, and reduces the impact of interference by at least \(20\%\).



1 Introduction

Incorporating elasticity to resource management in cloud allows for maximizing the benefits of service providers. Although attractive, provisioning resources on-demand is challenging for applications running in a shared environment and serving varying workloads – particularly for over-the-top applications with stringent service level objectives (SLOs), e.g. video and real-time messaging where over-provisioning is the common practice for resource management [22].

Elasticity in commercial clouds is currently enabled by threshold-based policies that keep track of key performance indicators (KPIs) to instantiate or terminate resources [1, 2]. Configuring these thresholds is time consuming, as it involves user expertise and iterative testing. Moreover, thresholds are insufficient to model system dynamics and dependencies. This leads to performance degradation, as variations in traffic rate and elasticity delays across service chains cannot be captured properly [20].

To account for such delays and to better control service dynamics, several proposals combine theoretical models and control theory [19]. In general, these models have static structures (e.g. fixed order), with adjustable parameters calibrated using fitting techniques over the available data [6, 13]. However, static model structures can fail to represent the cloud stack and the complex characteristics of production software [9]. That is, hardware upgrades and interference of collocated applications can modify model structures significantly. From a software perspective, applications and services can also vary their behavior according to certain system states and workload events, e.g. overload control and traffic prioritization. This variability makes static models inaccurate, causing overshooting in the response of feedback controllers and even instability [21].

We argue that control-based solutions should dynamically update the application model using arbitrary structures that better fit the current behavior of the cloud environment. Given the constantly changing and shared nature of cloud resources, model updates must be done automatically and performed at runtime. To this end, we extend System IDentification (SID) [16] techniques with modifications that allow model variations to be detected online.

In this paper we introduce RobOps, a system that combines online SID with dual feedforward (FF) and feedback (FB) control to operate services in the cloud with robustness to workload surges and infrastructure or software changes. SID enables data-driven modeling of dynamic systems by leveraging various statistical methods and accuracy criteria. The control mechanism receives model updates at runtime, and adapts the control actions accordingly. The reasons for using an FF compensator are: (1) reducing propagation delays in the FB controller and the impact of scaling latency; (2) changing the elasticity dynamics, i.e. prompt scale-out and conservative scale-in. The FF compensator allows for a fast reaction to traffic changes while the FB controller adapts resource provisioning to track reference KPIs (usually derived from SLOs). This allows RobOps to serve workloads by shifting the control effort between FF and FB controllers according to the workload demand and application state. More specifically, our contributions are the following:

- Online and data-driven system identification that concurrently computes various multiple-input multiple-output (MIMO) models to control the elasticity of applications in the cloud. Models are calculated automatically and ranked using likelihood criteria, which relieves application owners of modeling, profiling and threshold-setting tasks.

- A feedback controller able to adapt its control matrices according to updated models received on the fly. The feedback loop provides stability and ensures that KPIs stay close to the desired references.

- A feedforward compensator that drastically reduces the oscillations caused by varying and bursty workload rates, which can cause applications to trigger overload control or similar mechanisms. This compensator performs a switching control that uses short-term predictions to accelerate scale-out in the presence of increasing workloads, and to scale in conservatively otherwise.

The paper is organized as follows. An overview of RobOps is given in Sect. 2, while Sects. 3 and 4 describe in detail the online SID modeling and the controllers, respectively. In Sect. 5 we evaluate RobOps against thresholds and other control-based solutions using commercial applications. Section 6 elaborates on related work, while Sect. 7 describes our future work. Finally, we conclude in Sect. 8.

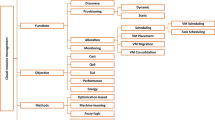

(a) Overview of RobOps framework for a Linux container (LXC) based service. (b) Online system identification (SID) computes a set of MIMO linear models taking the values of d, u and y as inputs, and returns the model with the minimum Akaike information criterion (AIC) score. (c) This model, jointly with the variation in d and the error \(e = ref - y\), conditions the control input \(u_c\) and the final number of instances u in each elastic component of the service.

2 RobOps Design

Figure 1a depicts the main components of RobOps. The application or service can run on one or many virtual machines (VM) or Linux containers (LXC) depending on the underlying cloud infrastructure. VMs, as well as LXC, are elastic and can be scaled out. We denote as u the number of instances of each elastic component. The service key performance indicators (KPIs) are the control outputs, denoted as y. KPIs are affected by the execution of workloads, which are denoted as d for consistency with control theory notation. All u, y and d are monitored and sampled at discrete intervals or control periods. Throughout the paper we focus on services that scale horizontally. However, RobOps can also control services that scale vertically using a different set of control inputs u.

The SID module consumes u, y and d to select and configure a model of the service; the selection is based on a best-fit ranking among several models. This model is used to configure the controller gains, while d and the error \(e = ref - y\) are inputs of the controller module. The reference (\( ref \)) or setpoint is a set of target values for the control outputs y. We select these values according to the service SLOs. The controller generates a control signal (\(u_c\)) that modifies the number u of instances to keep the outputs close to their reference values.

Figure 1b gives a closer view of the online SID module. We evaluate several MIMO linear models such as ARX, ARMAX and other finite impulse response (FIR) models [16]. We compute the Akaike Information Criterion (AIC) [4] for each model; the model with the lowest AIC score is the most likely to accurately represent the service. We describe the online SID in detail in Sect. 3.

Figure 1c illustrates the controller module with a dual FF/FB control. The FB loop aims at reducing the error between references and control outputs, increasing the number of instances when the error is negative and reducing it otherwise. Note that the FB control does not depend directly on the workload. Workload variations affect the control outputs with a certain time lag, which can cause oscillations or overshooting. This can lead to a slow convergence to the desired reference values. To deal with these workload changes we include a FF compensator that varies the provisioned resources to compensate for workload variations. We give a last twist to the FF compensator by switching the control to use either a forecast of the workload or its current value. The forecast is used to act proactively to increasing workloads. Both FB and FF use proportional control and the overall control action is the sum of the FB and FF outputs. We provide details of the controller in Sect. 4.

The robustness of the controller is achieved with both adaptive modeling and disturbance rejection. That is, the online SID module adaptively identifies unseen system dynamics and models the time-varying characteristics of the cloud stack. The FF controller, in turn, compensates for the effect of bursty workloads on the control outputs, rejecting the disturbances to the control system.

RobOps is implemented in Python. We have created a monitoring API to aggregate application and system KPIs such as throughput, latency and CPU usage. Similarly, we have developed a control API that provides plugin functionality to VM/LXC-based orchestration mechanisms, allowing us to connect to different platforms. Currently, we support Rancher and OpenStack.

3 Online System Identification

We extend the system identification (SID) framework to enable dynamic and automated modeling. The SID module performs four tasks: (1) analysis of candidate models, (2) model identification, (3) parameter computation and (4) likelihood evaluation and selection. Unlike other solutions in the field, our framework does not assume a single concrete model to represent the service, but a pool of model structures. We perform model identification using monitored online data from inputs and outputs (u, y, d) to compute the coefficients of the models in the pool. During this fitting, models are ranked by their AIC score to find the most accurate one and forward it to the controller.

3.1 Selecting the Candidate Models

Considering multiple models helps in capturing the dynamics of heterogeneous clouds, and reduces the limitations of individual models in adapting to hardware changes or interference. We base the RobOps online SID component on the general family of linear models [16], extending it to represent a MIMO system:

where A, B, C, J and L are matrices of rational polynomials in the operator q, and \(\varvec{\epsilon }(k)\) is noise at the \(k^{ th }\) control period. As defined in [16], different combinations of these polynomials lead to different model structures. We focus our experiments on the ARX, MAX and ARMAX structures. Each of these models can have a different polynomial order. These structures consider exogenous inputs that capture the effect of the number of instances on the service KPIs. Finally, we select the control period based on the time required to create a new instance. This time depends on the application and virtualization technique (e.g. virtual machines or containers); in this paper we consider values between 20 and 60 s.

3.2 Model Identification

We now describe how we construct the models. Using control theory notation, we define \(\varvec{u} = [u_1,u_2,...,u_{n_u}]^T\) to denote the number of instances of each service component, while the control outputs \(\varvec{y} = [y_1,y_2,...,y_{n_y}]^T\) represent the KPIs collected from these instances. We denote the incoming workload as d, the disturbance of the service. Service KPIs and workload are measured as discrete time series, i.e. \(\varvec{y}(k)\) is the value of \(\varvec{y}\) measured at control period k.

In general, cloud services exhibit a near-linear behavior and we can linearize the models in the neighborhood of operating points. If services behave non-linearly, we can apply linear difference equations to the deviations from an operating point to locally approximate the dynamics of the service. An operating point is defined as a steady state of the service, such that u, y and d are stable at this point. At each time k, the variables u(k), y(k) and d(k) are measured as deviations from such an operating point. Then, the correlation between these deviations can be approximated linearly, as long as the deviations are small compared to the operating point values.

For generality, we describe the model construction process for ARMAX. We create a set of \(n \times n'\) ARMAX models, where n and \(n'\) denote the highest order of the AR and MA components, respectively. One of these models will be selected and used as input to our controller. The eXogenous inputs capture the effect of u and d on the control outputs for the FB loop, and the relationship between u and d needed for the FF compensators. Formally, each model is expressed as

Here, \(A_i(k)\), \(i = 1,2,...,n\), is an \(n_y\times n_y \) matrix that captures the correlation among the output time series, where \(n_y\) is the number of control outputs. B(k) is an \(n_y\times n_u\) matrix that captures the correlation between service inputs and control outputs, where \(n_u\) is the number of service inputs. \(C_i(k)\) is an \(n_y\times n_y \) matrix capturing the moving average of the control output. Finally, D(k) is an \(n_y\times 1\) matrix that captures the correlation between workload and output.
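
Because Eq. (2) is not reproduced in this text, the following is only a sketch of how a one-step prediction could be formed from the matrices just described. It assumes the usual ARMAX form y(k+1) = Σ A_i y(k+1-i) + B u(k) + Σ C_i ε(k+1-i) + D d(k), with all signals taken as deviations from an operating point as in the linearization above. The helper `predict_next`, its argument layout and the example numbers are hypothetical.

```python
import numpy as np


def predict_next(A, B, C, D, y_hist, u, d, eps_hist, y_op, u_op, d_op):
    """One-step prediction y(k+1) of an assumed MIMO ARMAX model (hypothetical helper).

    Signals enter the model as deviations from the operating point
    (y_op, u_op, d_op); the prediction is returned in absolute units.
      A        : list of n matrices (n_y x n_y); A[0] multiplies y(k)
      B        : (n_y x n_u) matrix, effect of instances on KPIs
      C        : list of n' matrices (n_y x n_y); C[0] multiplies eps(k)
      D        : (n_y x 1) matrix, effect of workload on KPIs
      y_hist   : past outputs [y(k), y(k-1), ...], at least n entries
      eps_hist : past residuals [eps(k), eps(k-1), ...], at least n' entries
    """
    dy = [np.asarray(y, float) - y_op for y in y_hist]
    du = np.asarray(u, float) - u_op
    dd = np.atleast_1d(np.asarray(d, float) - d_op)
    delta = B @ du + D @ dd                 # exogenous terms (instances, workload)
    for A_i, dy_i in zip(A, dy):            # autoregressive terms
        delta = delta + A_i @ dy_i
    for C_i, e_i in zip(C, eps_hist):       # moving-average terms
        delta = delta + C_i @ e_i
    return y_op + delta


# Illustrative numbers only: 2 KPIs, 2 elastic components, n = 1, n' = 0
A = [0.5 * np.eye(2)]
B = -0.1 * np.eye(2)                         # more instances -> lower latency
D = np.array([[0.01], [0.02]])               # more chats -> higher latency
y_op, u_op, d_op = np.array([1.0, 1.0]), np.array([2.0, 2.0]), 100.0
y_next = predict_next(A, B, [], D, [np.array([1.2, 0.8])],
                      np.array([3.0, 2.0]), 110.0, [], y_op, u_op, d_op)
```

Setting `C` to an empty list reduces the sketch to an ARX predictor, which mirrors how the candidate structures differ only in which terms are present.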

3.3 Model Parameters Computation

After constructing the models we now identify their parameters. Computing static models after a profiling phase has several limitations. First, services running in the cloud usually exhibit a nonlinear behavior when the entire operating range is considered. Second, external interference may alter the model at any time. For these reasons, RobOps computes and updates the model parameters online. Moreover, by updating the model online we avoid an exhaustive profiling phase, leveraging low-order models when little data is available and shifting to higher-order models (if more accurate) as the observed data increases.

We use Kalman filters to identify the parameters of the models as proposed in [24]. We rewrite model (2) in state-space form by defining the state as \(\varvec{x}(k) = [\varvec{y}^T(k),...,\varvec{y}^T(k-n+1)]^T\), and the state-space model becomes:

Here F(k) is the transition matrix which contains the parameters from \(A_1\) to \(A_n\). H(k) represents the map between \(\varvec{x}(k)\) and \(\varvec{y}(k)\) and it is known for each order of the model, and w(k) is zero-mean Gaussian noise.

At each control period, we measure the control outputs \(\varvec{y}(k)\), and observe the number of instances per component \(\varvec{u}(k)\) and the workload d(k). The state of the system is recursively updated by a Kalman filter. The parameters of the models are identified by maximizing the likelihood function.
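
The paragraph above leaves the filter equations implicit. As an illustration only, the sketch below uses a common textbook variant that is not necessarily the formulation of [24]: the unknown coefficients θ of one output equation are treated as the Kalman state, following a random walk, and each new sample y(k) = φ(k)ᵀθ + v(k) acts as a measurement. For a MIMO model, one such filter could be run per output. The class name and the noise settings `q`, `r` and `p0` are assumptions.

```python
import numpy as np


class KalmanParamEstimator:
    """Recursive estimate of theta in y(k) = phi(k)^T theta + v(k), one output.

    Assumed model: theta(k) = theta(k-1) + w(k), w ~ N(0, q I), v ~ N(0, r).
    The random walk lets the estimate track slowly drifting parameters,
    e.g. after interference or a hardware change.
    """

    def __init__(self, n_params, q=1e-4, r=1e-2, p0=1e3):
        self.theta = np.zeros(n_params)        # parameter estimate
        self.P = p0 * np.eye(n_params)         # estimate covariance
        self.Q = q * np.eye(n_params)          # random-walk covariance
        self.r = r                             # measurement noise variance

    def update(self, phi, y):
        """Fold in one sample and return the innovation (prediction error)."""
        phi = np.asarray(phi, float)
        P_pred = self.P + self.Q               # time update
        s = phi @ P_pred @ phi + self.r        # innovation variance
        gain = P_pred @ phi / s                # Kalman gain
        innovation = y - phi @ self.theta
        self.theta = self.theta + gain * innovation
        self.P = P_pred - np.outer(gain, phi @ P_pred)
        return innovation


# Identify y(k+1) = a*y(k) + b*u(k) + c*d(k) from synthetic, noise-free data
rng = np.random.default_rng(0)
true_theta = np.array([0.6, -0.3, 0.05])      # hypothetical a, b, c
est = KalmanParamEstimator(n_params=3)
y = 0.0
for k in range(300):
    u, d = rng.uniform(1, 5), rng.uniform(0, 50)
    phi = np.array([y, u, d])
    y_next = phi @ true_theta
    est.update(phi, y_next)
    y = y_next
```

A larger `q` makes the estimate react faster to model changes at the cost of noisier coefficients, which is the same trade-off the online SID faces when services change at runtime.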

3.4 Model Evaluation and Selection

Once we compute the parameters of the models, we need to choose the one that best represents the service. Two of the most used criteria for model selection are the Akaike Information Criterion (AIC) and the Bayesian Information Criterion (BIC). Both criteria provide a score for each model that allows comparing their likelihoods: \(AIC = 2k - 2\cdot L,\) and \(BIC = k\cdot ln(s) - 2\cdot L,\) where L is the maximized log-likelihood, s the number of samples used for the fitting, and k (here the number of parameters to be estimated, not the control period) is the number of model parameters. Both criteria penalize high-order models, reducing the risk of overfitting. We use AIC because it usually provides better fits for smaller data windows, while BIC aims at a general model and gets better results as the pool of data increases [4]. Hence, we select the model with the lowest AIC score and use it to design the controller. Finally, we define a minimal switching time to avoid switching models too often.
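
The scores and the minimal switching time can be sketched as below, assuming zero-mean Gaussian one-step prediction residuals (so the maximized log-likelihood has a closed form) and expressing the switching time in control periods. To avoid a clash with the period index k, the parameter count is called `n_params`; `armax_param_count` assumes every entry of A_i, B, C_i and D is a free coefficient. All names are hypothetical.

```python
import math

import numpy as np


def gaussian_loglik(residuals):
    """Maximized log-likelihood of zero-mean Gaussian residuals.

    residuals: array of shape (s,) or (s, n_y), one row per control period.
    Uses the ML covariance estimate Sigma = R^T R / s.
    """
    res = np.asarray(residuals, float)
    if res.ndim == 1:
        res = res[:, None]
    s, n_y = res.shape
    sigma = res.T @ res / s
    _, logdet = np.linalg.slogdet(sigma)
    return -0.5 * s * (n_y * math.log(2 * math.pi) + logdet + n_y)


def aic(loglik, n_params):
    return 2 * n_params - 2 * loglik


def bic(loglik, n_params, n_samples):
    return n_params * math.log(n_samples) - 2 * loglik


def armax_param_count(n_y, n_u, n, n_ma):
    """Free coefficients if A_i, B, C_i and D are all dense (assumption)."""
    return n_y * n_y * (n + n_ma) + n_y * n_u + n_y


class ModelSelector:
    """Pick the lowest-AIC model, switching at most once every min_periods periods."""

    def __init__(self, min_periods):
        self.min_periods = min_periods
        self.current = None
        self.periods_since_switch = 0

    def select(self, scores):
        """scores: dict mapping model name -> AIC score for this period."""
        best = min(scores, key=scores.get)
        self.periods_since_switch += 1
        if self.current is None or (
            best != self.current and self.periods_since_switch >= self.min_periods
        ):
            self.current = best
            self.periods_since_switch = 0
        return self.current
```

Holding the current model until the switching time has elapsed trades a few periods of a slightly worse fit for fewer abrupt changes in the controller gains.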

Computational complexity. The complexity of the SID module depends on the computation of model parameters and on model selection. Model parameters are recursively computed with Kalman filters. The complexity of this step, \(N_{ KL }\), depends on the size of the data measured in a control period and is bounded by \(c_1\cdot ((n_u+n_y)\times (n + n'))^3 + N_l\), where \(N_l\) is the complexity of maximizing the likelihood function, and \(c_1\) is a constant. On the other hand, the complexity of evaluating a model is bounded by \(w\cdot c_2\cdot n_y^2\), where w is the size of the data used to compute the AIC score, and \(c_2\) is a constant. The total complexity is bounded by \(|\mathscr {M}|(w\cdot c_2\cdot n_y^2 + \text {max} N_{ KL })\), where \(\mathscr {M}\) is the set of candidate models.

4 Design of a Feedforward Plus Feedback Controller

This section describes the controller. For a certain workload, the controller adjusts the number of instances \(\varvec{u}\) of each component to maintain the value of the service KPIs \(\varvec{y}\) around the references \(\varvec{r}\). To achieve this goal, we propose a MIMO adaptive switching control scheme including a FF compensator and a FB controller. Both the FF compensator and FB controller use the model computed by the online SID component described in Sect. 3.

We require a MIMO controller because our service has multiple KPIs to control, and multiple actuators to enforce this control, i.e. the number of instances of each component. The FB controller monitors the control error \(e=\varvec{r}-\varvec{y}\) and adjusts \(\varvec{u}\) accordingly. However, the effect of abrupt workload variations is reflected in the KPIs inaccurately or with delay, resulting in a slow response of the FB loop. For this reason, the FF compensator complements the FB controller by monitoring the workload and acting on the service when it varies abruptly. The control input (or control signal) is then the number of instances of each type that have to be scaled out (or in) in the current control period. We denote this control signal as \(\varvec{u_c}\). As shown in Fig. 1c, \(\varvec{u_c}(k)= \varvec{u}_{FF}(k)+\varvec{u}_{FB}(k).\)

4.1 Design of a Feedforward Controller

The FF compensator is employed to monitor the workload and mitigate the effect of workload surges. To design the controller, we need to quantify online the effect of the workload on the target service. To this end, we define the following error function representing the interaction between the FF compensator and the workload on the service: \(E_1(k) = B(k)\varvec{u}_{FF}(k)+D(k)d(k),\) where matrices B(k) and D(k) come from the model structure presented in Eq. (2). The term D(k)d(k) reflects the effect of the workload on the service KPIs. On the other hand, the term \(B(k)\varvec{u}_{FF}(k)\) represents the impact that the FF compensator exerts on the service KPIs. Hence, \(E_1(k)\) represents the amount of KPI variation caused by the workload after being compensated by the FF controller \(\varvec{u}_{FF}(k)\).

The computation of \(\varvec{u}_{FF}(k)\) also depends on the dimensions of u and y. If they are equal and matrix B(k) has full rank, then \(\varvec{u}_{FF}(k) = -B^{-1}(k)D(k)d(k)\). If the dimension of u is smaller than the dimension of y (i.e. there are more KPIs to control than components), then \(E_1\) is over-determined. In this case, we minimize the square of the main error function \(E_1(k)\). If the dimension of u is greater than the dimension of y (i.e. more components than KPIs), \(E_1\) is under-determined. In this case, we set \(E_1(k) = 0\) and minimize the square of the auxiliary error function \(E_2(k) = \varvec{\hat{u}}_{FF}(k)-\varvec{u}_{FF}(k)\). \(E_2(k)\) represents the difference between the actual virtual instances \(\varvec{u}_{FF}(k)\) and the estimated number of virtual instances \(\varvec{\hat{u}}_{FF}(k)\) computed from the model in Eq. (2). Therefore, the control action \(\varvec{u}_{FF}(k)\) of the FF controller is the result of minimizing the errors \(E_1(k)\) and \(E_2(k)\). Algorithm 1 summarizes the different cases to consider when computing \(\varvec{u}_{FF}(k)\).
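
Algorithm 1 is not reproduced in this text. The sketch below is one way to implement the three cases just described with NumPy, assuming d is expressed as a deviation from the operating point (Sect. 3.2) and that B(k) and D(k) come from the currently selected model. The function name `feedforward_action` is hypothetical, and the output is left real-valued rather than rounded to whole instances.

```python
import numpy as np


def feedforward_action(B, D, d, u_hat=None):
    """Feedforward instance adjustment u_FF for workload deviation d (sketch).

    B: (n_y x n_u) input matrix, D: (n_y x 1) workload matrix.
    u_hat: model-estimated instances, used only when n_u > n_y.
    """
    B = np.asarray(B, float)
    D = np.asarray(D, float)
    n_y, n_u = B.shape
    target = -(D @ np.atleast_1d(float(d)))    # want B u_FF = -D d, i.e. E_1 = 0

    if n_u == n_y and np.linalg.matrix_rank(B) == n_y:
        # Square, full rank: u_FF = -B^{-1} D d
        return np.linalg.solve(B, target)
    if n_u <= n_y:
        # Over-determined (more KPIs than components): minimize ||E_1||^2
        u_ff, *_ = np.linalg.lstsq(B, target, rcond=None)
        return u_ff
    # Under-determined (more components than KPIs): keep E_1 = 0 and
    # minimize ||E_2||^2 = ||u_hat - u_FF||^2 (exact if B has full row rank)
    u_hat = np.zeros(n_u) if u_hat is None else np.asarray(u_hat, float)
    return u_hat + np.linalg.pinv(B) @ (target - B @ u_hat)


# Illustrative 2 KPIs x 2 components case (numbers are hypothetical)
B = np.array([[-0.1, 0.0], [0.0, -0.2]])
D = np.array([[0.01], [0.02]])
u_ff = feedforward_action(B, D, 100.0)          # 100 more chats than at the operating point
```

The under-determined branch uses the pseudo-inverse because, for a full-row-rank B, the point closest to û that satisfies B u = -D d is û plus the minimum-norm correction B⁺(-D d - B û).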

Switching controller. We want our controller to scale out the service fast but scale in more cautiously. For this reason, we propose two different controllers \(g_{\sigma }(u,d)\) for the FF compensator, selected by a switching signal \(\sigma \) that depends on the workload variation d(k). To compute \(\sigma \), we model the time series d as an auto-regressive process at each control period k, and use it to predict the future workload \(d(k+1)\). When the forecast yields \(d(k+1)>d(k)\), we assign \(\sigma = 0\), and \(\sigma = 1\) otherwise. The candidate FF controllers are then \(\varvec{u}_{FF}(k) = g_0(u(k),d(k+1))\) and \(\varvec{u}_{FF}(k) = g_1(u(k),d(k))\), where \(g_0\), \(g_1\) result from computing \(\varvec{u}_{FF}(k)\) as in Algorithm 1 with values \(d(k+1)\) or d(k), respectively. The compensator \(g_0(u(k),d(k+1))\) is used with increasing workloads and proactively compensates the workload ahead of time. On the other hand, we use \(g_1(u(k),d(k))\) for non-increasing workloads, compensating the current workload variation. Finally, to guarantee the stability of the whole control system, the switching controller is restricted to the case of slow switching: a minimal dwell time \(t_d\) of 5 to 10 times the control period is used to avoid switching too frequently.
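
The switching rule can be sketched as follows. The AR order `p`, the least-squares fit over the whole history, the dwell time counted in control periods, and the names `ar_forecast` and `SwitchingFF` are assumptions for illustration. The returned workload value would then be fed to the feedforward computation: the forecast for \(g_0\), the current value for \(g_1\).

```python
import numpy as np


def ar_forecast(history, p=2):
    """One-step forecast of an AR(p) model with intercept, fitted by least squares."""
    x = np.asarray(history, float)
    if len(x) <= 2 * p:                        # too little data: persistence forecast
        return float(x[-1])
    # Regression x(t) = c + a_1 x(t-1) + ... + a_p x(t-p)
    X = np.array([np.r_[1.0, x[t - p:t][::-1]] for t in range(p, len(x))])
    coef, *_ = np.linalg.lstsq(X, x[p:], rcond=None)
    return float(np.r_[1.0, x[-p:][::-1]] @ coef)


class SwitchingFF:
    """Chooses the workload value fed to the FF compensator.

    sigma = 0 -> use the forecast d(k+1) (g_0, increasing workload)
    sigma = 1 -> use the current value d(k) (g_1, non-increasing workload)
    A new sigma is adopted only after dwell_periods control periods.
    """

    def __init__(self, dwell_periods=5, p=2):
        self.dwell = dwell_periods
        self.p = p
        self.sigma = 1
        self.age = dwell_periods               # allow the first switch at once

    def choose_workload(self, d_history):
        d_now = float(d_history[-1])
        d_next = ar_forecast(d_history, self.p)
        wanted = 0 if d_next > d_now else 1
        self.age += 1
        if wanted != self.sigma and self.age >= self.dwell:
            self.sigma, self.age = wanted, 0
        return d_next if self.sigma == 0 else d_now
```

With a rising history the forecast is used immediately; after the trend reverses, the compensator keeps acting on the forecast until the dwell time has elapsed, which is what prevents rapid toggling between \(g_0\) and \(g_1\).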

4.2 Design of the Feedback Controller

The FF control by itself is unstable, as it calculates the control actions based only on the parameters estimated by open-loop models. As system dynamics are not modeled and noise may accumulate, this can result in large errors. Moreover, the FF compensator does not keep track of service KPIs, jeopardizing SLO fulfillment. For this reason, we complement it with an FB loop, which provides stability to the service and ensures that KPIs track the desired references.

The FB loop is based on the model returned by the online SID. This model can be an ARX, MAX or ARMAX model of any order. RobOps computes the parameters of a proportional controller adapted to any of these structures. For generality, we show the design of a proportional controller for an ARMAX closed-loop system; the design can be generalized to structures of any order following well-established methods. Substituting the FF control into the model of Eq. (2), we obtain \(\varvec{y}(k+1)=A(k)\varvec{y}(k)+B(k)\varvec{u}_{FB}(k) + E_1(k)\), where the square of the error function \(E_1(k)\) is minimized by the FF compensator. Note that \(\epsilon \) is zero-mean system noise and the controller is robust to it. Since the FF controller compensates for most of the effect of the workload variation d(k), the FB control action will be moderate. The goal of the FB controller is to adjust \(\varvec{u}_{FB}(k)\) to maintain \(\varvec{y}(k)\) at the reference \(\varvec{r}\). Then, the proportional FB control action is given by \(\varvec{u}_{FB}(k) = K_p(k)e(k) = K_p(k)(\varvec{r}-{\varvec{y}})(k),\) where \(K_p(k)\) is the proportional FB control gain. We compute \(K_p(k)\) using a common pole placement strategy, which places the closed-loop poles so as to obtain a fast transient response of the system. Since the dynamics of the system are monitored at each time interval, the FB controller can also adapt to service variations.
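
For the special case where B(k) is square and invertible, one simple pole-placement choice follows from substituting \(\varvec{u}_{FB}(k) = K_p(k)(\varvec{r}-\varvec{y}(k))\) into the equation above with \(E_1\) assumed compensated: the closed-loop matrix becomes \(A - BK_p\), and setting it to a diagonal matrix of desired poles gives \(K_p = B^{-1}(A - \text{diag}(poles))\). This is an illustrative sketch under that assumption, not necessarily the exact procedure used in RobOps; poles closer to zero give a faster transient. The function names are hypothetical.

```python
import numpy as np


def proportional_gain(A, B, poles):
    """K_p such that A - B K_p = diag(poles); requires square, invertible B (assumption)."""
    A = np.asarray(A, float)
    B = np.asarray(B, float)
    A_cl = np.diag(np.asarray(poles, float))   # desired closed-loop matrix
    return np.linalg.solve(B, A - A_cl)


def feedback_action(K_p, r, y):
    """u_FB(k) = K_p(k) (r - y(k))."""
    return np.asarray(K_p) @ (np.asarray(r, float) - np.asarray(y, float))


# Illustrative 2x2 model (numbers are hypothetical)
A = np.array([[0.9, 0.1], [0.05, 0.8]])
B = np.array([[-0.2, 0.05], [0.03, -0.1]])
K_p = proportional_gain(A, B, poles=[0.3, 0.4])
closed_loop_poles = np.sort(np.linalg.eigvals(A - B @ K_p).real)
```

The sketch only checks that the closed-loop poles land where requested; for non-square B, a general MIMO pole-placement routine would be needed instead.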

5 Evaluation

In this section we evaluate RobOps performance and compare it to different baseline solutions in several scenarios using a commercial communication service. This service runs several containers and supports different types of traffic. We focus on instant messaging traffic and on two specific containerized functions: the conversation manager (CM) and the user log manager (ULM). These functions are elastic and need to be scaled as the number of chats and active users vary. The workload for these functions is measured in number of chats.

Metrics. We assess performance by measuring the time during which SLOs are violated and by how much (a.k.a. severity). The KPI to control is component latency, i.e. the time needed to process a message. According to the developers, latency must be below 3 s; otherwise, the SLO is violated. Severity is measured by comparing the magnitude of the latency to the SLO, i.e. as a multiple of the SLO.
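
Assuming one latency sample per control period, the two metrics can be computed as in the sketch below. The function name and its dictionary keys are hypothetical; severity is reported as the worst latency divided by the SLO, matching how latency peaks are quoted in the results that follow.

```python
import numpy as np


def slo_metrics(latency_s, slo_s=3.0):
    """Violation time and peak severity for one latency trace.

    latency_s: one latency sample per control period, in seconds.
    Returns the fraction of periods above the SLO and the worst
    latency expressed as a multiple of the SLO.
    """
    lat = np.asarray(latency_s, float)
    violated = lat > slo_s
    return {
        "violation_fraction": float(violated.mean()),
        "peak_severity": float(lat.max() / slo_s),
    }


m = slo_metrics([1.0, 2.0, 4.0, 6.0])   # 2 of 4 periods above 3 s, peak = 2x SLO
```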

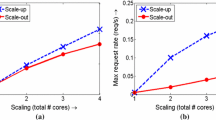

(a) RobOps is more robust to rapid workload variations than the other approaches, largely reducing or eliminating the impact on performance. (b) This robustness is mostly due to the FF compensator, whose relevance in the aggregated control action increases with the workload variation rate. (c) RobOps rarely exceeds the SLOs, even for workloads with a high variation rate.

Baseline comparison. RobOps (RO) is compared to threshold-based policies (TH) and to a feedback controller (FB) with static gains (the same FB controller used in RobOps). RobOps and FB control use a reference value of \( r = 750\) ms (i.e. \( SLO /4\)) for both CM and ULM latencies. For TH we set the upper and lower thresholds to \( r \) and \(0.5\cdot r \). When the latency rises above the upper threshold or falls below the lower one, the number of containers is increased or decreased by one, respectively. The reference is fixed to \( SLO /4\) to leave the controllers room to scale out containers upon latency increases.
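
A minimal sketch of the TH baseline for one component, using the thresholds stated above; the function name and the `n_min` floor are assumptions. Because each decision changes the count by a single container, recovering from a large surge takes several control periods.

```python
def threshold_policy(latency_ms, n_containers, r_ms=750.0, n_min=1):
    """One control period of the TH baseline for a single component.

    Scale out by one above the upper threshold r, scale in by one below
    the lower threshold 0.5 * r, otherwise keep the current count.
    n_min is a hypothetical floor so the component is never removed.
    """
    if latency_ms > r_ms:
        return n_containers + 1
    if latency_ms < 0.5 * r_ms:
        return max(n_min, n_containers - 1)
    return n_containers
```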

5.1 Evaluating the Dynamic Response

We evaluate the dynamic response of the controllers by injecting workloads with different increasing rates, including steps to simulate traffic surges.

Traffic surges. We study first the case of traffic surges. To do so, we use steps transitioning from 20 to 200, 300, 400 and 500 chats, and monitor the service for 45 control periods, enough for all the controllers to reach a configuration that can handle the injected traffic. Figure 2a shows the results for the 400 chats surge. As the latency reflects the variation on the load with delay, neither FB nor threshold controllers can scale the service in time. RobOps response is faster as the feedforward compensator (FF) scales the service out when the change in the workload is observed, not exceeding the SLO. The FB controller response is faster than thresholds, but still exceeds the SLO. Moreover, the initial provisioning is still tight, leading to more oscillations in the provisioning, exceeding the SLOs during 3 control periods. Due to these oscillations, the accumulated action of the FB controller ends up creating more containers than RobOps, but still violating the SLOs. Due to the lack of a model, thresholds increase the number of containers gradually until the latency falls below the reference. This results in violating the SLOs during more than 10 control periods. Finally, the highest latency measured for RobOps is 0.90 times the SLO, while for the FB and thresholds controllers the latency is 2.04 and 8.4 times the SLO, respectively.

Figure 2b shows the latency cumulative distribution function (CDF) for each surge. All three controllers converge to similar configurations. However, RobOps has some initial overprovisioning due to the FF compensator, which allows for faster and less oscillatory convergence, and fewer SLO violations than the FB controller. Thresholds always have a slower response, more SLO violations and worse latency peaks (up to 45 times the SLO). The FB controller averages \(10\%\) SLO violations and peaks at up to 3.5 times the SLO. However, the FB controller benefits from the characteristics of the service. When the service configuration is not sufficient to handle the load, the latency increases rapidly, leading to a large increment of the error (\(\varvec{r}-\varvec{y}\)) between samples and, therefore, a larger control action that takes less time to bring the latency back below the SLO. If the service responded more slowly, the FB controller would violate SLOs during more control periods. RobOps shows similar results for all surges, exceeding the SLO only with the largest surge, with a peak latency of roughly 2.7 times the SLO. Compared to FB, RobOps reacts \(10\%\) faster and reduces the performance impact of SLO violations by at least 2X. These results are mostly due to the action of the FF compensator, which in some cases provisions a few containers in excess, allowing RobOps to handle surges more gracefully than the other controllers.

Traffic varying with different rates. We now inject 4 different sinusoidal workloads varying between 0 and 560 chats, with periods of 15, 20, 30 and 50 control periods. Figure 3a shows the 15-control-period case. With such rapidly varying workloads, RobOps proactively creates a high number of containers due to the action of the FF compensator, exceeding SLOs only \(4\%\) of the time. Although its controller is the same FB controller used in RobOps, the FB baseline exhibits worse results, violating SLOs up to \(14\%\) of the time. This difference is also due to the FF compensator. When the latency is below the reference, the FB controller reduces the number of containers in the service even if the workload is increasing. In these cases, the FF compensator counteracts the negative action of the FB controller. Without the FF, the FB controller reduces the number of containers, leading to higher latencies and more SLO violations. Figure 3b shows how the FF action is more relevant for short-period workloads, having even more weight in the total control action than the FB component for the 15-control-period workload.

Figure 3c shows the latency CDFs for workloads with periods of 15, 30 and 50 control periods. RobOps shows the best results, exceeding SLOs less than \(4\%\) of the time, while the FB and threshold controllers reach \(14\%\) and \(23\%\), respectively. Similarly, the worst RobOps SLO violation was 2.4 times the SLO, versus 4 and 5.3 times for the FB and threshold controllers. The latency CDFs also show that the results obtained by thresholds and FB get worse as the frequency increases, while RobOps obtains similar results across all experiments, regardless of the variation rate.

5.2 Robustness to Software Changes

We finally evaluate the robustness of the framework by measuring how RobOps adapts to changes in the service. To do so, we inject a sinusoidal workload and then change the number of threads used by the CM and ULM containers. This emulates hardware changes or interferences that reduce the amount of available resources. In particular, we start running the containers with 2 threads, reduce to 1 thread after some time, and finally increase to 4 threads.

Varying container resources leads to changes in the models, and therefore to changes in the gains of the controller. Figure 4a shows the evolution of the 4 coefficients of the inverse of the gain matrix \(K^{-1}\). Matrix \(K^{-1}\) reflects the relation between the number of ULMs and CMs and their effect on their latency. We show the coefficient values for each configuration once the model has converged.

(a) RobOps adapts to changes in the service. Changes in the available resources are captured by the online SID, adapting the model. This is reflected in the inverse matrix of the gain (\(K^{-1}\)), whose coefficients change for each configuration. (b) RobOps suffers some performance degradation while it adapts the model. The model of the feedback controller is static, so it fails to provision the service correctly.

Figure 4a also shows how the structure and order of the model changed for each configuration. While the service could be represented with an ARX(1) model in the first configuration, it moved to an ARMAX(1,1) and a MAX(3) model in the 1-thread and 4-thread configurations, respectively. Coefficients \(k^{-1}_{11}\) and \(k^{-1}_{22}\) decrease when the number of threads increases, as the capability of a container to reduce the service latency increases; they are lower for 4 threads than for 2 threads. Coefficients \(k^{-1}_{12}\) and \(k^{-1}_{21}\) behave in the opposite way, increasing with the number of threads: by increasing its processing capacity, a container indirectly increases the latency of the other component, since more workload reaches it.
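For reference, the three structures can be written as difference equations for the MIMO service, with latency vector \(y(t)\), container vector \(u(t)\) and white noise \(e(t)\). This is a sketch that assumes the parenthesized numbers give the orders of the autoregressive and moving-average parts, a one-step input delay and a single exogenous term; the exact order convention is not stated in this section. Setting the noise to zero and holding inputs and outputs constant yields the steady-state gain \(G\), which shows how a change of structure propagates into the gain coefficients.

```latex
\begin{aligned}
\text{ARX}(1):\quad & y(t) + A_1\,y(t-1) = B_1\,u(t-1) + e(t)\\
\text{ARMAX}(1,1):\quad & y(t) + A_1\,y(t-1) = B_1\,u(t-1) + e(t) + C_1\,e(t-1)\\
\text{MAX}(3):\quad & y(t) = B_1\,u(t-1) + e(t) + C_1\,e(t-1) + C_2\,e(t-2) + C_3\,e(t-3)\\[4pt]
\text{steady state:}\quad & G = (I + A_1)^{-1} B_1 \ \ (\text{ARX}, \text{ARMAX}), \qquad G = B_1 \ \ (\text{MAX})
\end{aligned}
```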

Variations in K affect the number of containers scaled by the FB controller and the FF compensator and, therefore, the controller's capability to rapidly provision resources. To evaluate the benefits of adapting the model to these changes, we repeat the experiment using an FB controller with static gains. Both RobOps and the FB controller start with the service model for 2 threads. Figure 4b compares their performance for each configuration. RobOps shows a performance degradation of approximately \(10\%\) after every configuration change, mostly due to the learning period. In contrast, the performance of the FB controller degrades severely, from roughly \(4\%\) SLO violations when it uses the correct model to \(33.5\%\) and \(21.5\%\) once the service moves to 1 and 4 threads, respectively. The performance in the last scenario is better because, with the 2-thread model, the FB controller instantiates containers in excess. The situation is the opposite for the 1-thread configuration, leading to larger SLO violations. Thanks to the online SID module, RobOps reduces the impact of interference by up to \(23.5\%\) compared with the simple FB controller.
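Online SID is commonly implemented with recursive least squares (RLS) and a forgetting factor, which discounts old samples so that the estimates can follow a change in the plant. The sketch below illustrates that generic technique and is not necessarily the estimator used by RobOps: it identifies a scalar ARX(1) model y(t) = a·y(t-1) + b·u(t-1) whose input gain b drops from 2.0 to 0.5 halfway through, loosely mimicking a container that loses processing capacity. The plant, its parameters, the input signal and the forgetting factor of 0.95 are all assumptions.

```python
import math


def rls_update(theta, P, phi, y, lam=0.95):
    """One recursive least-squares step with exponential forgetting factor lam."""
    n = len(theta)
    P_phi = [sum(P[i][j] * phi[j] for j in range(n)) for i in range(n)]
    denom = lam + sum(phi[i] * P_phi[i] for i in range(n))
    gain = [v / denom for v in P_phi]
    error = y - sum(phi[i] * theta[i] for i in range(n))  # one-step prediction error
    theta = [theta[i] + gain[i] * error for i in range(n)]
    # P <- (P - gain * phi^T P) / lam; phi^T P equals P_phi^T because P is symmetric.
    P = [[(P[i][j] - gain[i] * P_phi[j]) / lam for j in range(n)] for i in range(n)]
    return theta, P


def track_gain_change(steps=400, change_at=200, a=0.6, b_before=2.0, b_after=0.5):
    """Identify y(t) = a*y(t-1) + b*u(t-1) online while b changes at t = change_at."""
    theta = [0.0, 0.0]                    # estimates of (a, b)
    P = [[1000.0, 0.0], [0.0, 1000.0]]    # large initial covariance: weak prior
    y_prev = u_prev = 0.0
    history = []
    for t in range(steps):
        b = b_before if t < change_at else b_after
        y = a * y_prev + b * u_prev       # noise-free "true" plant
        theta, P = rls_update(theta, P, [y_prev, u_prev], y)
        history.append(tuple(theta))
        u = math.sin(0.3 * t) + 0.5 * math.sin(1.1 * t)  # persistently exciting input
        y_prev, u_prev = y, u
    return history


history = track_gain_change()
```

Just before the change, `history[199]` is close to (0.6, 2.0), and by the end `history[-1]` is close to (0.6, 0.5). A model frozen at the first estimate would keep acting on the outdated gain, analogous to the static FB baseline; a smaller forgetting factor tracks changes faster at the cost of higher noise sensitivity.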

6 Related Work

Here we classify existing methods according to both their modeling approach and their control strategy.

The most popular approach in industry [1, 2] is to use thresholds to represent high/low utilization values of resources and/or application KPIs [8]. This approach requires significant upfront effort and expertise to properly calibrate thresholds that react fast enough to workload variations at a reasonable cost (i.e. avoiding idle resources). More elaborate proposals use fuzzy logic [11] to provide a richer set of rules that better describe application resources. Still, these solutions do not capture service dynamics and dependencies, as mentioned in Sect. 1.
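A reactive threshold rule of this kind can be sketched in a few lines. The utilization thresholds, the one-replica step and the replica bounds below are hypothetical; they are precisely the parameters that must be calibrated by hand, and none of them reflect the service dynamics or the dependencies between components.

```python
def threshold_scale(utilization, replicas, high=0.8, low=0.3, min_replicas=1, max_replicas=20):
    """Reactive rule: add one replica above `high`, remove one below `low`."""
    if not 0.0 <= low < high:
        raise ValueError("thresholds must satisfy 0 <= low < high")
    if utilization > high:
        return min(replicas + 1, max_replicas)
    if utilization < low:
        return max(replicas - 1, min_replicas)
    return replicas  # inside the dead band: no action


next_replicas = threshold_scale(0.9, replicas=4)  # overloaded, so one replica is added
```

Because the rule only reacts after utilization has crossed a threshold and always moves by a fixed step, a fast surge takes several control periods to absorb.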

Other authors propose empirical approaches to model applications using benchmarks [14]. These approaches deal with complexity and nonlinearities across infrastructure layers by modeling the full stack. Empirical models also require substantial knowledge about the service configuration to benchmark the system properly. Also, benchmarks must be re-executed after hardware upgrades.

Queueing theory has been used as the foundation of several proposals [3, 7]. The parameters of these models can be calibrated automatically using Kalman filters [6]. However, queueing models describe steady-state rather than transient behavior, which makes them less suitable for dynamic environments such as the cloud.

Another possibility is to use time-series analysis to predict incoming workloads [19] and make scaling decisions in advance. Some examples of these forecasting techniques are exponential smoothing [11], Fourier analysis [10], wavelets [18] or Bayesian classifiers [5]. These predictions need to be combined with empirical or theoretical models to ultimately scale the system resources.
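As an illustration of the forecasting step, and of why it must be paired with a model, the sketch below applies simple exponential smoothing to a workload series and then converts the forecast into a container count through a hypothetical linear capacity model (a fixed number of chats per container). The smoothing factor, the capacity figure and the function names are assumptions.

```python
import math


def exp_smoothing_forecast(series, alpha=0.5):
    """Simple exponential smoothing; returns the one-step-ahead forecast."""
    if not series:
        raise ValueError("empty series")
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    level = series[0]
    for x in series[1:]:
        level = alpha * x + (1 - alpha) * level
    return level


def containers_needed(forecast, capacity_per_container):
    """Map a workload forecast to containers with a hypothetical linear capacity model."""
    return max(1, math.ceil(forecast / capacity_per_container))


forecast = exp_smoothing_forecast([100, 200, 300], alpha=0.5)
needed = containers_needed(forecast, capacity_per_container=50)
```

For this series the forecast is 225 chats, which at 50 chats per container yields 5 containers. Simple smoothing lags a rising trend (the forecast stays below the last observation of 300), and the capacity model is where an empirical or theoretical model of the service has to enter.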

Control-theory-based solutions are numerous in the literature, for both single input single output (SISO) and multiple input multiple output (MIMO) services. For SISO systems, there are solutions using standard PID controllers [12] or fixed-gain controllers combined with dynamic thresholding [15]. For MIMO services, feedback control is usually based on state-space models combined with adaptive [17, 19] or switching control [3]. A limitation of feedback controllers is that delayed responses can lead to oscillations or overshooting. Combining feedback and feedforward control can alleviate this limitation. In [23], the authors combine both controllers using empirically obtained (static) models. Using static models, however, can limit the benefits of the control technique, as shown in Sect. 5.

7 Discussion and Future Work

The evaluation in Sect. 5 shows the applicability and efficiency of RobOps. Still, some aspects require further research and analysis to generalize our results. We have shown that RobOps can identify linear models among different options. When non-linearities are significant, we can generalize our method by using linear difference equations on the deviations around the operating points. However, we still need to extend our approach to other model structures, such as Box-Jenkins, and to non-linear ones, such as NARMAX.
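Written out for the ARX(1) case, the deviation-based generalization amounts to linearizing around an operating point \((\bar{u}, \bar{y})\). The notation below is assumed for illustration; \(A_1\) and \(B_1\) would be identified separately for each operating point, so each model is valid only for small deviations around it.

```latex
\begin{aligned}
\delta u(t) &= u(t) - \bar{u}, \qquad \delta y(t) = y(t) - \bar{y},\\
\delta y(t) + A_1\,\delta y(t-1) &= B_1\,\delta u(t-1) + e(t).
\end{aligned}
```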

Regarding the control strategy, we used rather simple proportional controllers, as they offer acceptable performance for the service under evaluation. However, we plan to evaluate more scenarios requiring more sophisticated controllers integrated with our SID module, and to assess their impact on modeling aspects (such as accuracy and performance) as well as on stability and robustness.

We also need to extend our baseline evaluation to other solutions and to validate RobOps with more services and applications. One characteristic of the service we used in Sect. 5 is how rapidly its latency increases upon under-provisioning. We will evaluate whether RobOps can accurately provision services with different dynamics and generalize its properties. Finally, we will quantify the cost reduction derived from causing fewer SLO violations despite using more resources.

8 Conclusion

In this paper we have introduced RobOps, an automated framework to dynamically control resource provisioning in the cloud. RobOps implements online SID to dynamically generate multiple service models, eliminating the need for benchmarks and profiling. Because the process is automated, it does not require the user expertise that today's threshold-based solutions demand. SID selects online the most accurate model from a collection of MIMO models with different structures, enabling RobOps to handle interfering services collocated in the shared infrastructure.

The framework combines FB and FF control, provisioning resources faster than other solutions and reducing SLO violations. Combining FF and FB control with SID results in a framework that adapts to changes in the service and handles rapid workload variations, reaching stable configurations in an agile way.

We evaluated RobOps performance with an enterprise communication service and compared it to baseline solutions such as FB control and thresholds. RobOps provisions resources faster, reducing SLO violations in the presence of traffic surges by more than \(10\%\) and \(19\%\) compared to FB and thresholds, respectively, and reducing the performance impact by more than \(50\%\). Similarly, RobOps adapts the service model upon hardware changes, reducing SLO violations by \(23.5\%\) compared to FB control.

Notes

1. AR stands for AutoRegressive, MA for Moving Average, and X implies the presence of eXogenous inputs.

References

1. Amazon Web Services. https://aws.amazon.com/. Accessed June 2017

2. Google Cloud Platform. https://cloud.google.com/. Accessed June 2017

3. Ali-Eldin, A., Kihl, M., Tordsson, J., Elmroth, E.: Efficient provisioning of bursty scientific workloads on the cloud using adaptive elasticity control. In: Proceedings of the 3rd Workshop on Scientific Cloud Computing Date, pp. 31–40. ACM (2012)

4. Burnham, K., Anderson, D.: Multimodel inference: understanding AIC and BIC in model selection. Sociol. Methods Res. 33(2), 261–304 (2004)

5. Di, S., Kondo, D., Cirne, W.: Google hostload prediction based on Bayesian model with optimized feature combination. J. Parallel Distrib. Comput. 74(1), 1820–1832 (2014)

6. Gandhi, A., Dube, P., Karve, A., Kochut, A., Zhang, L.: Adaptive, model-driven autoscaling for cloud applications. In: USENIX 11th International Conference on Autonomic Computing, pp. 57–64 (2014)

7. Han, R., Ghanem, M., Guo, L., Guo, Y., Osmond, M.: Enabling cost-aware and adaptive elasticity of multi-tier cloud applications. Future Gener. Comput. Syst. 32, 82–98 (2014)

8. Hasan, M., Magana, E., Clemm, A., Tucker, L., Gudreddi, S.: Integrated and autonomic cloud resource scaling. In: Network Operations and Management Symposium, pp. 1327–1334. IEEE, April 2012

9. Hellerstein, J., Diao, Y., Parekh, S., Tilbury, D.: Feedback Control of Computing Systems. Wiley, Hoboken (2004)

10. Jacobson, D., Yuan, D., Joshi, N.: Scryer: Netflix's Predictive Auto Scaling Engine. The Netflix Tech Blog. Accessed April 2016

11. Jamshidi, P., Ahmad, A., Pahl, C.: Autonomic resource provisioning for cloud-based software. In: Proceedings of the 9th International Symposium on Software Engineering for Adaptive and Self-Managing Systems, pp. 95–104 (2014)

12. Janert, P.: Feedback Control for Computer Systems. O'Reilly Media Inc., Sebastopol (2013)

13. Kalyvianaki, E., Charalambous, T., Hand, S.: Self-adaptive and self-configured CPU resource provisioning for virtualized servers using Kalman filters. In: Proceedings of the 6th International Conference on Autonomic Computing. ACM (2009)

14. Lange, S., Nguyen-Ngoc, A., Gebert, S., Zinner, T., Jarschel, M., Kopsel, A., Sune, M., Raumer, D., Gallenmuller, S., Carle, G., Tran-Gia, P.: Performance benchmarking of a software-based LTE SGW. In: Proceedings of the 2015 11th International Conference on Network and Service Management, pp. 378–383. IEEE Computer Society (2015)

15. Lim, H., Babu, S., Chase, J.: Automated control for elastic storage. In: Proceedings of the 7th International Conference on Autonomic Computing. ACM (2010)

16. Ljung, L.: System Identification: Theory for the User. Prentice-Hall, Englewood Cliffs (1987)

17. Nathuji, R., Kansal, A., Ghaffarkhah, A.: Q-clouds: managing performance interference effects for QoS-aware clouds. In: Proceedings of the 5th European Conference on Computer Systems, pp. 237–250. ACM (2010)

18. Nguyen, H., Shen, Z., Gu, X., Subbiah, S., Wilkes, J.: AGILE: elastic distributed resource scaling for infrastructure-as-a-service. In: Proceedings of the 10th International Conference on Autonomic Computing, pp. 69–82. USENIX (2013)

19. Padala, P., Hou, K., Shin, K., Zhu, X., Uysal, M., Wang, Z., Singhal, S., Merchant, A.: Automated control of multiple virtualized resources. In: Proceedings of the 4th ACM European Conference on Computer Systems, pp. 13–26. ACM (2009)

20. Ranjan, R., Benatallah, B., Dustdar, S., Papazoglou, M.P.: Cloud resource orchestration programming: overview, issues, and directions. IEEE Internet Comput. 19(5), 46–56 (2015)

21. Reiss, C., Tumanov, A., Ganger, G., Katz, R., Kozuch, M.: Heterogeneity and dynamicity of clouds at scale: Google trace analysis. In: ACM Symposium on Cloud Computing, pp. 7:1–7:13. ACM (2012)

22. Truong, H., Dustdar, S.: Programming elasticity in the cloud. Computer 48(3), 87–90 (2015)

23. Trushkowsky, B., Bodík, P., Fox, A., Franklin, M., Jordan, M., Patterson, D.: The SCADS director: scaling a distributed storage system under stringent performance requirements. In: FAST, pp. 163–176 (2011)

24. Wan, E., Van Der Merwe, R.: The unscented Kalman filter for nonlinear estimation. In: Adaptive Systems for Signal Processing, Communications, and Control Symposium, pp. 153–158. IEEE (2000)


Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Chen, C., Arjona Aroca, J., Lugones, D. (2017). RobOps: Robust Control for Cloud-Based Services. In: Maximilien, M., Vallecillo, A., Wang, J., Oriol, M. (eds) Service-Oriented Computing. ICSOC 2017. Lecture Notes in Computer Science(), vol 10601. Springer, Cham. https://doi.org/10.1007/978-3-319-69035-3_50


DOI: https://doi.org/10.1007/978-3-319-69035-3_50

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-69034-6

Online ISBN: 978-3-319-69035-3

eBook Packages: Computer Science, Computer Science (R0)