Abstract

In this paper, we consider the control problem in a reinforcement learning setting with large state and action spaces. The control problem most commonly addressed in the contemporary literature is to find an optimal policy which optimizes the long run \(\gamma \)-discounted transition costs, where \(\gamma \in [0,1)\). They also assume access to a generative model/simulator of the underlying MDP with the hidden premise that realization of the system dynamics of the MDP for arbitrary policies in the form of sample paths can be obtained with ease from the model. In this paper, we consider a cost function which is the expectation of a approximate value function w.r.t. the steady state distribution of the Markov chain induced by the policy, without having access to the generative model. We assume that a single sample path generated using a priori chosen behaviour policy is made available. In this information restricted setting, we solve the generalized control problem using the incremental cross entropy method. The proposed algorithm is shown to converge to the solution which is globally optimal relative to the behaviour policy.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

1 Introduction

In this paper, we consider a reinforcement learning setting with the underlying Markov decision process (MDP) defined by the 4-tuple \((\mathbb {S}, \mathbb {A}, \mathrm {R}, \mathrm {P})\), where the finite sets \(\mathbb {S}\) and \(\mathbb {A}\) are referred to as the state space and action space respectively. Also, \(\mathrm {R}:\mathbb {S}\times \mathbb {A}\times \mathbb {S}\rightarrow \mathbb {R}\) is the reward function which defines the state transition costs and \(\mathrm {P}: \mathbb {S}\times \mathbb {A}\times \mathbb {A}\rightarrow [0,1]\) is the transition probability function. A stationary randomized policy (SRP) \(\pi \) is a probability mass function over the actions conditioned on the state space, i.e., for \(s \in \mathbb {S}\), we have \(\pi (\cdot \vert s) \in [0,1]^{\vert \mathbb {A}\vert }\) and \(\sum _{a \in \mathbb {A}}\pi (a \vert s) = 1\). A policy determines the action to be taken at each discrete time step of an arbitrary realization of the MDP. In this paper, we employ a parametrized class of SRPs \(\{\pi _{w} \vert w \in \mathbb {W} \subset \mathbb {R}^{k_2}\}\). We assume that \(\mathbb {W}\) is compact.

By complying to a policy \(\pi _w\), the behaviour of the MDP reduces to a Markov chain defined by the transition probabilities \(\mathrm {P}_{w}(s, s^{\prime }) = \sum _{a \in \mathbb {A}}\pi _{w}(a \vert s)\mathrm {P}(s, a, s^{\prime })\). The performance of a policy is usually quantified by a value function which is defined as the long-run \(\gamma \)-discounted transition costs (\(\gamma \in [0,1)\)) incurred by the MDP while following the policy. The value function is formally defined as follows: \(V^{w}(s) = \mathbb {E}_{w}\left[ \sum _{t \in \mathbb {N}}\gamma ^{t}\mathrm {R}(\mathbf {s}_t, \mathbf {a}_t, \mathbf {s}_{t+1}) \vert \mathbf {s}_0 = s\right] , s \in \mathbb {S}\), where \(\mathbb {E}_{w}[\cdot ]\) is the expectation w.r.t. the probability distribution of the Markov chain induced by the policy \(\pi _w\). And the primary goal in an RL setting is to find the optimal policy which solves \(\mathop {\arg \mathrm{max}}\nolimits _{w \in \mathbb {W}}V^{w}\) without any knowledge of the model parameters \(\mathrm {P}\) and \(\mathrm {R}\). However, observations in the form of sample paths which are realizations of the MDP under any arbitrary policy are made available.

Classical approaches have complexities which scale polynomial in the cardinality of the state space and hence are intractable. This is commonly referred to as the curse of dimensionality. Hence, one has to resort to approximation techniques in order to achieve tractability. In this paper, for value function estimation, we consider the linear function approximation. Here, for a given policy \(\pi _w\), we approximate its value function \(V^{w}\) by projecting it on to the subspace \(\{\varPhi x \vert x \in \mathbb {R}^{k_1}\}\), where \(\varPhi = (\phi _1, \phi _2, \dots , \phi _{k_1})^{\top }\), \(k_1 \ll \vert \mathbb {S}\vert \) and \(\phi _i \in \mathbb {R}^{\vert \mathbb {S}\vert }\), \(1 \le i \le k_1\) called the prediction features are chosen a priori. In order for the projection to be well-defined, we require the following assumption:

(A1): For each \(w \in \mathbb {W}\) , the Markov chain induced by the policy \(\pi _w\) is ergodic.

Under this assumption, one can define the weighted norm \(\Vert \cdot \Vert _{\nu }\) as follows: For \(V \in \mathbb {R}^{\vert \mathbb {S}\vert }\), \(\Vert V \Vert _{\nu } = (\sum _{s \in \mathbb {S}}V^{2}(s)\nu (s))^{\frac{1}{2}}\), where \(\nu \) is the limiting distribution (steady state distribution) of the given sample path. If the sample path is generated using a policy \(\pi _{w_b}\), \(w_b \in \mathbb {W}\) (referred to as the behaviour policy), then the limiting distribution is the stationary distribution \(\nu _{w_b}\) of the Markov chain induced by the behaviour policy \(\pi _{w_b}\). Therefore, the linear function approximation of the value function \(V^{w}\) is defined as follows:

where we assume that an infinitely long sample path \(\{\mathbf {s}_0, \mathbf {a}_0, \mathbf {r}_0, \mathbf {s}_1\), \(\mathbf {a}_1, \mathbf {r}_1, \mathbf {s}_2, \dots \}\) generated by the behaviour policy \(\pi _{w_b}\) (\(w_b \in \mathbb {W} \subseteq \mathbb {R}^{k_{2}}\) ) is available.

(A2): The behaviour policy \(\pi _{w_b}\) , where \(w_b \in \mathbb {W}\) , satisfies the following condition: \(\pi _{w_b}(a \vert s) > 0\), \(\forall s \in \mathbb {S}, \forall a \in \mathbb {A}\).

2 Proposed Algorithm

Our proposed approach has two components:

-

1.

A stochastic approximation (SA) version of the cross entropy (CE) method to solve the control problem (2). The SA version of the CE method is a zero-order, incremental, adaptive and stable global optimization method.

-

2.

A variation of the off-policy LSTD (\(\lambda \)) to compute the objective function values (i.e., \(\mathbb {E}_{\nu _w}\left[ h_{w \vert w}\right] \)).

We describe the above two components in detail here.

2.1 Stochastic Approximation Version of the Cross Entropy Method

Cross entropy method [4, 9] solves global optimization problems where the objective function does not possess good structural properties, i.e., those of the kind: Find \(x^{*} = \, \mathop {\arg \mathrm{max}}\nolimits _{x \in \mathbb {X} \subset \mathbb {R}^{d}} J(x)\), where \(J:\mathbb {X} \rightarrow \mathbb {R}\) is a bounded Borel measurable function (\(J_l< J(x) < J_u\), \(\forall x\)). CE is a zero-order method which implies that the algorithm does not require the gradient or higher-order derivatives of the objective function in order to seek the optimal solution. CE method has found successful application in diverse domains which include continuous multi-extremal optimization [7], reinforcement learning [5, 6] and several NP-hard problems [7, 8].

CE method generates a sequence of model parameters \(\{\theta _t\}_{t \in \mathbb {N}}\), \(\theta _t \in \varTheta \) (assumed to be compact) and a sequence of thresholds \(\{\gamma _t \in \mathbb {R}\}_{t \in \mathbb {N}}\), \(J_l \le \gamma _t \le J_u\) and the algorithm attempts to direct the sequence \(\{\theta _t\}\) towards the degenerate distribution concentrated at the global optimum \(x^{*}\) and \(\gamma _t\) towards \(J(x^{*})\). The threshold \(\gamma _{t+1}\) is usually taken as the \((1-\rho )\)-quantile of J w.r.t the PDF \(f_{\theta _t}\). (For \(\theta \in \varTheta \), we denote by \(\gamma _{\rho }(J, \theta )\) the \((1-\rho )\)-quantile of J w.r.t the PDF \(f_{\theta }\)). Henceforth, \(\gamma _{t+1} = \gamma _{\rho }(J, \theta _t)\). And the model parameter \(\theta _{t+1}\) is generated by projecting (w.r.t. the Kullback-Leibler (KL) divergence) on to the family of distributions \(\mathcal {F} \triangleq \{f_{\theta } \vert \theta \in \varTheta \}\), the zero-variance distribution concentrated in the region \(\{J(x) \ge \gamma _{t+1}\}\) with respect to the PDF \(f_{\theta _t}\). Thus,

with \(S:\mathbb {R}\rightarrow \mathbb {R}_{+}\) is a positive, monotonically increasing function.

In this paper, we consider the Gaussian distribution as the family of distributions \(\mathcal {F}\). In this case, the PDF is being parametrized as \(\theta =(\mu , \varSigma )^{\top }\), where \(\mu \) and \(\varSigma \) are the mean and the covariance of the Gaussian distribution respectively. Also, one can solve the optimization problem (3) analytically to obtain the following update rule:

Thus the CE algorithm can be expressed as \(\theta _{t+1} = (\varUpsilon _1(\theta _t, \gamma _{t+1}), \varUpsilon _2(\theta _t, \gamma _{t+1}))^{\top }\). However, this is the ideal scenario which is intractable due to the inability to compute the quantities \(\mathbb {E}_{\theta _t}[\cdot ]\) and \(\gamma _{\rho }(\cdot , \cdot )\). There are multiple ways one can track the ideal CE method. In this paper, we consider the efficient tracking of the ideal CE method using the stochastic approximation (SA) framework proposed in [1, 2]. The SA version of the CE method consists of three stochastic recursions which are defined as follows:

Here, \(\beta _t > 0\) and \(\mathsf {X}_{t+1} \sim \widehat{f}_{\theta _t}\), where the mixture PDF \(\widehat{f}_{\theta _t}\) is defined as \(\widehat{f}_{\theta _t} \triangleq (1-\zeta )f_{\theta _t} + \zeta f_{\theta _0}\), \(\zeta \in (0,1)\), \(f_{\theta _0}\) is the initial PDF. The mixture approach facilitates extensive exploration of the solution space and prevents the model iterates from getting stranded in suboptimal solutions.

2.2 Computing the Objective Function \(\mathbb {E}_{\nu _w}\left[ h_{w \vert w}\right] \)

In this paper, we employ the off-policy LSTD (\(\lambda \)) to approximate \(h_{w \vert w}\) for a given policy parameter \(w \in \mathbb {W}\). The procedure to estimate the objective function \(\mathbb {E}_{\nu _w}\left[ h_{w \vert w}\right] \) is formally defined in Algorithm 1. The Predict procedure in Algorithm 1 is almost the same as the off-policy LSTD algorithm. The recursion (step 9) attempts to estimate the objective function \(\mathbb {E}_{\nu _w}\left[ h_{w \vert w}\right] \) as follows:

For a given \(w \in \mathbb {W}\), \(\ell _{k}^{w}\) attempts to find an approximate value of the objective function J(w). The following lemma formally characterizes the limiting behaviour of the iterates \(\ell _{k}^{w}\).

Lemma 1

For a given \(w \in \mathbb {W}, \ell _{k}^{w} \rightarrow \ell ^{w}_{*} = \mathbb {E}_{\nu _{w_b}}\left[ x_{w \vert w_b}^{\top }\phi (\mathbf {s})\right] \) as \(k \rightarrow \infty \) w.p. 1, where

Here \(D^{\nu _{w_b}}\) is the diagonal matrix with \(D^{\nu _{w_b}}_{ii}=\nu _{w_b}(i)\), \(1 \le i \le \vert \mathbb {S}\vert \), where \(\nu _{w_b}\) is the stationary distribution of the Markov chain \(\mathrm {P}_{w_b}\) induced by the behavior policy \(\pi _{w_b}\) and \(\mathrm {R}^{w} \in \mathbb {R}^{\vert \mathbb {S} \vert }\) with \(\mathrm {R}^{w}(s) \triangleq \varSigma _{s^{\prime } \in \mathbb {S}, a \in \mathbb {A}}\pi _{w}(a \vert s)\mathrm {P}(s, a, s^{\prime })\mathrm {R}(s, a, s^{\prime })\).

Remark 1

By the above lemma, for a given \(w \in \mathbb {W}\), the quantity \(\ell _{k}^{w}\) tracks \(J_b(w) \triangleq \mathbb {E}_{\nu _{w_b}}\left[ x^{\top }_{w \vert w_b}\phi (\mathbf {s})\right] \). This is however different from the true objective function value \(J(w) = \mathbb {E}_{\nu _{w}}\left[ h_{w \vert w}\right] \), when \(w \ne w_b\). This additional approximation error incurred is the extra cost one has to pay for the discrepancy in the policy which generated the sample path.

2.3 Proposed Algorithm

Our algorithm to solve the control problem (2) is illustrated in Algorithm 2. The following theorem shows that the model sequence \(\{\theta _{t}\}\) and the averaged sequence \(\{\overline{\theta }_{t}\}\) generated by Algorithm 2 converge to the degenerate distribution concentrated on the global maximum of the objective function \(J_b\).

Theorem 1

Let \(S(x) = exp(rx), r \in \mathbb {R}\). Let \(\rho , \zeta \in (0,1)\) and \(\theta _0 = (\mu _0, q\mathbb {I}_{k_2 \times k_2})^{\top }\), where \(q \in \mathbb {R}_{+}\). Also, let \(c_t \rightarrow 0\) as \(t \rightarrow \infty \).. Let the learning rates \(\overline{\beta }_{t}\) and \(\beta _{t}\) satisfy \(\sum _t \beta _t = \sum _t \overline{\beta }_t = \infty \), \(\sum _t \beta ^{2}_t + \overline{\beta }^{2}_t < \infty \). Let \(\{\theta _{t} = (\mu _{t}, \varSigma _{t})\}_{t \in \mathbb {N}}\) and \(\{\overline{\theta }_{t} = (\overline{\mu }_{t}, \overline{\varSigma }_{t})\}_{t \in \mathbb {N}}\) be the sequences generated by Algorithm 2 and also assume \(\theta _{t} \in \varTheta \), \(\forall t \in \mathbb {N}\). Let \(\overline{\beta }_{t} = o (\beta _{t})\). Let \(w_b \in \mathbb {W}\) be the chosen behaviour policy vector. Also, let the assumptions (A1–A2) hold. Then, there exists \(q^{*} \in \mathbb {R}_{+}\) and \(r^{*} \in \mathbb {R}_{+}\) s.t. \(\forall q > q^{*}\) and \(\forall r > r^{*}\),

where \(w^{b*} \in \mathop {\arg \mathrm{max}}\nolimits _{w \in \mathbb {W}} J_b(w)\) with \(J_b(w) \triangleq \mathbb {E}_{\nu _{w_b}}\left[ x^{\top }_{w \vert w_b}\phi (\mathbf {s})\right] \).

3 Experimental Illustrations

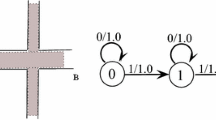

The performance of our algorithm is evaluated on the chain walk MDP setting. This particular setting which is being proposed in [3] demonstrates the scenario where policy iteration is non-convergent when approximate value functions are employed instead of true ones. Here \(\vert \mathbb {S} \vert = 300\), \(\mathbb {A} = \{L, R\}\), \(k_1=5\), \(k_2=10\) and the discount factor \(\gamma = 0.99\). The reward function \(R(\cdot , \cdot , 100) = R(\cdot , \cdot , 200) = 1.0\) and zero for all other transitions. The transition probability kernel is given by \(P(s, L, s+1) = 0.1\), \(P(s, L, s-1) = 0.9\), \(P(s, R, s+1) = 0.9\) and \(P(s, R, s-1) = 0.1\). We choose the behaviour policy vector \(w_{b} = (0,0,\dots ,0)^{\top }\). We employ the Gibbs “soft-max” class of policies: \(\pi _{w}(a | s) = \frac{e^{(w^{\top }\psi (s, a)/\tau )}}{\sum _{b \in \mathbb {A}}e^{(w^{\top }\psi (s,b)/\tau )}}\), where \(\{\psi (s, a) \in \mathbb {R}^{k_{2}} \vert s \in \mathbb {S}, a \in \mathbb {A}\}\) is a given policy feature set and \(\tau \in \mathbb {R}_{+}\) is fixed a priori. We employ radial basis functions (RBF) as both policy and prediction features, i.e.,

The plot of the respective optimal value functions contrived by LSPI, Greedy-GQ and Algorithm 2 for the chain walk MDP setting. The optimal solutions of various algorithms are being developed by averaging over 10 independent trials. For Algorithm 2, we averaged the various optimal solutions obtained for different sample trajectories generated using the same behaviour policy, but with different initial states which are chosen randomly. Our approach (Algorithm 2) literally surpassed other algorithms in terms of its quality. The random choice of the initial state ineffectively favoured sufficient exploration of the state space which directly assisted in generating high quality solutions.

where \(m_i = 5+10(i-1), v_i = 5\), \(1 \le i \le 5\) (Fig. 1).

The results are shown in Fig. 2.

4 Conclusion

We propose an adaptation of the cross entropy method to solve the control problem in reinforcement learning under an information restricted setting, where only a single sample path generated using a priori chosen behaviour policy is available. The proposed algorithm is shown to converge to the solution which is globally optimal relative to the behaviour policy.

References

Joseph, A.G., Bhatnagar, S.: A randomized algorithm for continuous optimization. In: Winter Simulation Conference, WSC 2016, Washington, DC, USA, 11–14 December 2016, pp. 907–918 (2016)

Joseph, A.G., Bhatnagar, S.: Revisiting the cross entropy method with applications in stochastic global optimization and reinforcement learning. In: Frontiers in Artificial Intelligence and Applications, (ECAI 2016), vol. 285, pp. 1026–1034 (2016)

Koller, D., Parr, R.: Policy iteration for factored MDPS. In: Proceedings of the Sixteenth Conference on Uncertainty in Artificial Intelligence, pp. 326–334. Morgan Kaufmann Publishers Inc. (2000)

Kroese, D.P., Porotsky, S., Rubinstein, R.Y.: The cross-entropy method for continuous multi-extremal optimization. Methodol. Comput. Appl. Probab. 8(3), 383–407 (2006)

Mannor, S., Rubinstein, R.Y., Gat, Y.: The cross entropy method for fast policy search. In: ICML, pp. 512–519 (2003)

Menache, I., Mannor, S., Shimkin, N.: Basis function adaptation in temporal difference reinforcement learning. Ann. Oper. Res. 134(1), 215–238 (2005)

Rubinstein, R.: The cross-entropy method for combinatorial and continuous optimization. Methodol. Comput. Appl. Probab. 1(2), 127–190 (1999)

Rubinstein, R.Y.: Cross-entropy and rare events for maximal cut and partition problems. ACM Trans. Model. Comput. Simul. (TOMACS) 12(1), 27–53 (2002)

Rubinstein, R.Y., Kroese, D.P.: The Cross-Entropy Method: A Unified Approach to Combinatorial Optimization, Monte-Carlo Simulation and Machine Learning. Springer, New York (2013)

Acknowledgement

This work was supported in part by the Robert Bosch Centre for Cyber-Physical Systems, Indian Institute of Science, Bangalore.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Joseph, A.G., Bhatnagar, S. (2017). An Incremental Fast Policy Search Using a Single Sample Path. In: Shankar, B., Ghosh, K., Mandal, D., Ray, S., Zhang, D., Pal, S. (eds) Pattern Recognition and Machine Intelligence. PReMI 2017. Lecture Notes in Computer Science(), vol 10597. Springer, Cham. https://doi.org/10.1007/978-3-319-69900-4_1

Download citation

DOI: https://doi.org/10.1007/978-3-319-69900-4_1

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-69899-1

Online ISBN: 978-3-319-69900-4

eBook Packages: Computer ScienceComputer Science (R0)