Abstract

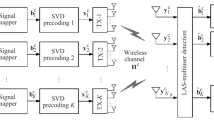

Signal detection scheme is the key technology to the implementation of multiple-input multiple-output (MIMO) wireless communication system, while the spatial-multiplexing coded MIMO systems cause a severe design challenge for signal detection algorithms. Although many researches focus on searching the solution space for optimal solution based on more efficient searching algorithm, the signal detection of MIMO system does not regarded as a classification problem. In this paper, the detection problem is considered as a feature classification, and a novel signal detection scheme of MIMO system based on extreme learning machine auto encoder (ELM-AE) is proposed. The proposed algorithm can efficiently extract the features of input data by ELM-AE and classify these representations to corresponding groups rapidly by using extreme learning machine (ELM). This paper has constructed a theoretical model of the proposed signal detector for MIMO system and carried out simulations to evaluating its performance. Simulation results indicate that the proposed detector outperforms many traditional schemes and state-of-the-art algorithms.

Access this chapter

Tax calculation will be finalised at checkout

Purchases are for personal use only

Similar content being viewed by others

References

Telatar, E.: Capacity of multi-antenna Gaussian channels. Trans. Emerg. Telecommun. Technol. 10(6), 585–595 (1999)

Zhu, X., Murch, R.D.: Performance analysis of maximum likelihood detection in a MIMO antenna system. IEEE Trans. Commun. 50(2), 187–191 (2002)

Liu, D.N., Fitz, M.P.: Low complexity affine MMSE detector for iterative detection-decoding MIMO OFDM systems. IEEE Trans. Commun. 56(1), 150–158 (2008)

Xu, J., Tao, X., Zhang, P.: Analytical SER performance bound of M-QAM MIMO system with ZF-SIC receiver. In: IEEE International Conference on Communications, pp. 5103–5107 (2008)

Sarkar, S.: An advanced detection technique in MIMO-PSK wireless communication systems using MMSE-SIC detection over a Rayleigh fading channel. CSI Trans. ICT 3(10), 1–7 (2016)

Li, F., Zhou, M., Li, H.: A novel neural network optimized by quantum genetic algorithm for signal detection in MIMO-OFDM systems. In: IEEE Symposium on Computational Intelligence in Control & Automation, CICA, pp. 170–177. IEEE (2011)

Yang, Y., Hu, F., Jiang, Z.: Signal detection of MIMO system based on quantum ant colony algorithm. In: IEEE International Conference on Signal Processing, Communications and Computing, ICSPCC, pp. 1–5. IEEE (2016)

Huang, G.B., Li, M.B., Chen, L., Siew, C.K.: Incremental extreme learning machine with fully complex hidden nodes. Neurocomputing 71(4–6), 576–583 (2008)

Huang, G.B.: An insight into extreme learning machines: random neurons, random features and kernels. Cogn. Comput. 6(3), 376–390 (2014)

Kasun, L.L.C., Zhou, H., Huang, G.B., Chi, M.V.: Representational learning with ELMs for Big Data. IEEE Intell. Syst. 28(6), 31–34 (2013)

Liu, L., Lofgren, J., Nilsson, P.: Low-complexity likelihood information generation for spatial-multiplexing MIMO signal detection. IEEE Trans. Veh. Technol. 61(2), 607–617 (2012)

Rusek, F., Persson, D., Lau, B.K., Larsson, E.G., Marzetta, T.L., Edfors, O., Tufvesson, F.: Scaling up MIMO: opportunities and challenges with very large arrays. IEEE Signal Process. Mag. 30(1), 40–60 (2012)

Huang, G.B., Chen, L., Siew, C.K.: Universal approximation using incremental constructive feedforward networks with random hidden nodes. IEEE Trans. Neural Networks 17(4), 879–892 (2006)

Huang, G.B., Zhou, H., Ding, X., Zhang, R.: Extreme learning machine for regression and multiclass classification. IEEE Trans. Syst. Man Cybern. Part B 42(2), 513–529 (2012)

Tang, J., Deng, C., Huang, G.B.: Extreme learning machine for multilayer perceptron. IEEE Trans. Neural Netw. Learn. Syst. 27(4), 809 (2016)

Hoerl, A.E., Kennard, R.W.: Ridge regression: biased estimation for nonorthogonal problems. Technometrics 12(1), 55–67 (1970)

Fei, W., Ye, X., Sun, Z., Huang, Y., Zhang, X., Shang, S.: Research on speech emotion recognition based on deep auto-encoder. In: IEEE International Conference on Cyber Technology in Automation, Control, and Intelligent Systems, CYBER (2016)

Masci, J., Meier, U., Cireşan, D., Schmidhuber, J.: Stacked convolutional auto-encoders for hierarchical feature extraction. In: Honkela, T., Duch, W., Girolami, M., Kaski, S. (eds.) ICANN 2011. LNCS, vol. 6791, pp. 52–59. Springer, Heidelberg (2011). doi:10.1007/978-3-642-21735-7_7

Acknowledgments

The authors would like to thank the anonymous reviewers for their constructive and insightful comments for further improving the quality of this work. The research work was partially supported by the National Natural Science Foundation of China under Grant (61263005, 61563009), New Century Talents Project of the Ministry of Education under Grant No. NCET-12-0657.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Long, F., Yan, X. (2017). ELM-Based Signal Detection Scheme of MIMO System Using Auto Encoder. In: Liu, D., Xie, S., Li, Y., Zhao, D., El-Alfy, ES. (eds) Neural Information Processing. ICONIP 2017. Lecture Notes in Computer Science(), vol 10639. Springer, Cham. https://doi.org/10.1007/978-3-319-70136-3_53

Download citation

DOI: https://doi.org/10.1007/978-3-319-70136-3_53

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-70135-6

Online ISBN: 978-3-319-70136-3

eBook Packages: Computer ScienceComputer Science (R0)