Abstract

With the increasing demand for video over wireless networks, weak backhaul links can hardly provide the desired service. Caching, a technique that reduces peak traffic rates and improves the quality of experience (QoE) of cellular users, has become popular. In this paper, we study the video caching and delivery problem with the aim of maximizing the QoE of cellular users and the profits of both the mobile network operator (MNO) and the video provider (VP). We consider a commercial cellular caching system consisting of a VP, an MNO and multiple cellular users. Generally, the VP may lose users if they do not get their desired QoE. Meanwhile, by introducing caching, the MNO can greatly reduce the access delay of the cellular users and thus improve their QoE. We formulate the profit maximization problem of the VP, the MNO and the cellular users as a tri-party joint optimization problem, and then solve it through a primal-dual decomposition method. Numerical results are provided to verify the effectiveness of the proposed method.

J. Zou—This work has been partially supported by the NSFC grants No. 61622112 and No. 61472234, and Shanghai Natural Science Foundation grant No. 14ZR1415100.


1 Introduction

With the rapid development of mobile video applications, mobile network operators (MNOs) have come under great pressure, and the growth in demand shows no sign of slowing. According to the Cisco Visual Networking Index mobile forecast for 2016–2021 [1], mobile data traffic is expected to grow at a compound annual growth rate (CAGR) of 47 percent to 49 exabytes per month by 2021 (one exabyte is one billion gigabytes), and the leading contributor is video traffic generated by mobile users. To cope with this traffic increase, the current cellular infrastructure has to change. One effective solution is to introduce caching at the base station (BS), i.e., to cache video content in advance at a BS that is geographically closer to the users [2, 3]. A caching-capable BS enables dense spatial reuse of the wireless resources with high-speed localized communication, which is usually assumed to be faster than the backhaul links connected to the VP. In addition, adaptive bit rate streaming has become a popular video delivery technique that improves the QoE of videos delivered over wireless networks. Every video file can therefore be delivered in different rate versions to accommodate different media terminals and network channel conditions. Finding an appropriate caching and delivery strategy is currently one of the most important challenges for commercial cellular caching networks.

Generally, whenever a mobile user makes a playback request for a video, it first attempts to find an available version at the adjacent BS. If the local BS has cached the corresponding version, the mobile user downloads the video from the BS and plays it back; otherwise it has to download the video file from the VP through weak and costly backhaul links. Three issues should be considered. First, the mobile users suffer a long access latency when the cellular network is congested. Second, the BS, which belongs to the MNO, has to use the expensive, weak backhaul to retrieve a video file from the remote VP whenever users request a video that is not cached at the BS. Third, the VP, which owns all the video files, tries to earn profits by serving as many users as possible. In this work, we consider three interest groups: the users, the MNO and the VP. The VP may lose users if they cannot achieve the desired QoE, so the attrition cost caused by user losses can be used as the measure of VP revenue. Meanwhile, congestion of the wireless or backhaul links inevitably increases the delay with which users access the video stream, so the access delay can be used to measure the QoE of the users. Further, by storing popular videos at its BSs, the MNO profits from reducing repetitive video transmissions over the backhaul link.

Serving video files, the dominant type of traffic, is a critical challenge for commercial cellular network systems. Many researchers have attempted to improve cache utilization with various caching strategies, such as uncoded and coded caching [4, 5], transcoding [6] and so on. The authors of [7] present a strategy for adaptive caching of variable video files in information-centric networks (ICNs), which aims at optimal video caching to reduce the access delay for the maximal requested bit rate of each user. However, it considers only the profit of the user. In [8,9,10], the authors considered a commercial caching network and solved the problem of maximizing the profit of both the VP and the MNO with a Stackelberg game, but they did not consider the users’ profit or the case in which each video can be encoded into multiple rate versions. The authors in [11] considered the joint derivation of video caching and routing policies for users with different quality requirements, but did not consider the VP’s profit. Some studies have considered video caching and delivery simultaneously [12, 13], but the problem of video caching and delivery over different bit rates remains open.

In this paper, we propose a caching strategy to handle multirate video content caching and delivery in a commercial cellular network. Our objective is to maximize the overall profit of the cellular network, which includes the profits of the mobile users, the MNO, and the VP. We formulate the caching problem as a tri-party joint cost minimization problem, and use the primal-dual decomposition method [14] to decouple it into two optimization problems, which are solved by the subgradient method and a greedy algorithm, respectively. The main contributions of this article are summarized as follows:

1. We propose a multi-objective optimization problem and formulate it as a tri-party joint cost minimization (profit maximization) problem. Unlike previous works, which mostly focused on either the MNO and the users, the VP and the MNO, or the users only, we consider all three groups with conflicting interests together, and model their profit maximization problem as a weighted tri-party joint optimization problem.

2. We use Lagrange relaxation and the primal-dual decomposition method to decompose the original optimization problem into two subproblems. The first, called the delivery subproblem, decides the portion of the requests satisfied by the BSs. As it is a convex problem, we solve it with standard convex optimization methods. The second, called the caching subproblem, decides whether a video should be cached at a BS. It can be viewed as a knapsack problem, which we solve with a greedy algorithm.

3. Numerical results illustrate that the proposed caching strategy can significantly improve not only the users’ profits but also the profits of the VP and the MNO in a commercial cellular network.

The remainder of the paper is organized as follows. Section 2 presents the system model and the related specifications. In Sect. 3, we formulate the tri-party joint minimization problem and demonstrate the distributed solution. Our experiment setup and performance evaluation results are detailed in Sect. 4, and our conclusions are summarized in Sect. 5.

2 System Model

In this section, we detail the assumptions and definitions upon which our system model is built.

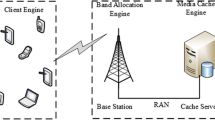

The system architecture is depicted in Fig. 1. We consider an MNO consisting of a set \(I = \{1, 2, \ldots, i, \ldots, |I|\}\) of caching-enabled BSs connected to a VP through backhaul links that are too weak to carry all user requests. Under each BS, there are a number of user requests for video streaming service. When a user requests some video file j, it first asks its local BS (i.e., the BS in the neighborhood of the user). If the video file is available at the local BS, the BS handles the request directly. Otherwise, the request is redirected to the VP. We study the system over a certain time period during which each BS \(i \in I\) has an average wireless capacity of \({F_i} \ge 0\) bps, a backhaul link of capacity \({G_i} \ge 0\) bps, and a storage capacity of \({S_i} \ge 0\) bytes. We assume that the BSs in the system cannot communicate with each other.

The VP holds all video files in the set \(J = \{1, 2, \ldots, j, \ldots, |J|\}\), and each video can be delivered in different rate versions. We assume that for each video \(j \in J\) there is a set \(Q = \{1, 2, \ldots, q, \ldots, |Q|\}\) of rate versions that can be offered. Each rate version \(q \in Q\) corresponds to a certain playback rate \({R_{jq}}\) and has a size of \({o_{jq}}\) bytes, which increases with the rate (i.e., \({o_{jq}} \ge {o_{jr}}\) if \(q > r\)). Moreover, the file size is assumed to be an affine function of the playback rate: \({o_{jq}} = a{R_{jq}} + b\) [15]. The important notations are summarized in Table 1.

User Request Model. The rate version of video j requested by a user is characterized by \({R_{jq}}\). In this paper, we assume that the rate versions requested by the users follow a uniform distribution. Because the wireless capacity is constrained, some requests cannot be satisfied. Let \({y_{ijq}} \in [0,1]\) denote the portion of the requests for version q of video j that are served by the corresponding BS i. Requests for a video that is not cached at the BS are forwarded to the VP through the backhaul link, and we denote the portion of such requests served by the VP as \({z_{ijq}}\in [0,1]\).

Content Caching Model. Whether version q of video j is cached at BS i is denoted by a binary variable \({x_{ijq}} \in \{0,1\} \), where \({x_{ijq}}=1\) means that version q of video j is placed at BS i, and \({x_{ijq}}=0\) otherwise. To minimize the average access latency, the content caching policy must be carefully designed based on the user requests.
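As an illustration of the caching model, the following minimal Python sketch computes version sizes and checks a storage budget for one BS. It assumes that the file size is affine in the playback rate with \(a = 1\) and \(b = 0.5\) (the values used in the numerical section) and that the storage constraint takes the form \(\sum_j \sum_q x_{ijq} o_{jq} \le S_i\). The function names and the example placement are hypothetical.

```python
def file_size(rate, a=1.0, b=0.5):
    """Assumed affine size model: o_jq = a * R_jq + b."""
    return a * rate + b


def fits_in_storage(x, sizes, storage):
    """Check the assumed storage constraint sum_j sum_q x[j][q] * o[j][q] <= S_i."""
    used = sum(
        x[j][q] * sizes[j][q]
        for j in range(len(sizes))
        for q in range(len(sizes[j]))
    )
    return used <= storage


# Two videos, each with a low and a high rate version (playback rates 9.5 and 19.5).
rates = [[9.5, 19.5], [9.5, 19.5]]
sizes = [[file_size(r) for r in row] for row in rates]

# Hypothetical placement at one BS: both versions of video 0, low version of video 1.
x = [[1, 1], [1, 0]]

ok_with_40_units = fits_in_storage(x, sizes, storage=40.0)
ok_with_39_units = fits_in_storage(x, sizes, storage=39.0)
```

The placement above occupies 40 units of storage, so it is feasible for a budget of 40 and infeasible for a budget of 39.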

3 Problem Formulation

In this section, we first define the profit of the users, the MNO and the VP respectively, and then formulate the tri-party joint optimization problem. Finally, we present our distributed solution.

3.1 Profit Modeling

Users Profits. We use the user access delay to measure the user profit. The average delay \({\overline{d} _{ijq}} \) experienced by the users at BS i when downloading version q of video file j depends on the path and on the congestion of the respective links. For a BS to serve a user, the requested video must either be cached there already or be retrieved via the backhaul link. The latter option adds delay, which may be quite significant. Ideally, the objective function should reflect the congestion level of each link, so that the caching strategy avoids congesting it. A common choice that meets this requirement is the average delay in an M/M/1 queue, \(D(f) = \frac{1}{C - f},\ f < C\), where C denotes the link capacity and f denotes the load of the corresponding link. Therefore, the average delay for the users accessing version q of video j at BS i can be defined as

where \(A_i(y) = \sum_j \sum_q y_{ijq} \lambda_{ij} R_{jq}\) is the load of the wireless link of BS i, and for the backhaul link we have \(B_i(z) = \sum_j \sum_q z_{ijq} \lambda_{ij} R_{jq}\). Inequality (3) indicates the storage capacity constraint of BS i. Inequalities (4) and (5) indicate the capacity constraints of the wireless links and the backhaul links, respectively.
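The link loads and the M/M/1 delay function can be sketched for a single BS as follows. This is a minimal illustration, not the paper's delay expression: \(\lambda_{ij}\) is assumed here to be the request rate for video j at BS i, and all numeric values for the demand and the delivery portions are hypothetical.

```python
def mm1_delay(load, capacity):
    """Average M/M/1 delay D(f) = 1 / (C - f), defined only for f < C."""
    if load >= capacity:
        raise ValueError("load must be strictly below capacity")
    return 1.0 / (capacity - load)


def link_load(portion, demand, rate):
    """Sum over videos j and versions q of portion[j][q] * demand[j] * rate[j][q]."""
    return sum(
        portion[j][q] * demand[j] * rate[j][q]
        for j in range(len(demand))
        for q in range(len(rate[j]))
    )


# Hypothetical single-BS example: 2 videos, 2 rate versions each.
demand = [1.0, 0.5]                  # lambda_ij (assumed: request rate per video)
rate = [[9.5, 19.5], [9.5, 19.5]]    # R_jq: playback rates
y = [[0.6, 0.4], [0.2, 0.0]]         # portion served by the BS (wireless link)
z = [[0.1, 0.1], [0.3, 0.2]]         # portion served by the VP (backhaul link)

wireless_load = link_load(y, demand, rate)   # A_i(y)
backhaul_load = link_load(z, demand, rate)   # B_i(z)

wireless_delay = mm1_delay(wireless_load, capacity=100.0)  # F_i = 100
backhaul_delay = mm1_delay(backhaul_load, capacity=50.0)   # G_i = 50
```

Because the delay grows without bound as the load approaches the capacity, an objective built from `mm1_delay` penalizes delivery decisions that push a link close to saturation.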

MNO Profit. The MNO’s revenue comes from the backhaul cost saved by local caching. Each time a user downloads a requested video from local storage, the MNO saves one video transmission over the backhaul link. Therefore, we define the MNO profit as

VP Profit. Users who experience high delays or do not get the service they want when streaming a video from one VP may switch to another, which causes losses for the former VP. When this occurs, we say that the VP incurs a user attrition cost [16]. We use the user attrition cost to measure the VP’s profit, and formulate it as a penalty on the requests that are unserved.

where t denotes the unit cost of user attrition. Our objective here is to serve as many users as possible through both local BSs and the remote VP.

Tri-party Joint Minimization Problem (TJM Problem). According to the foregoing analysis, we have three objectives: maximizing the MNO profit, minimizing the user access delay, and minimizing the user attrition cost. We convert this multiobjective optimization problem into a single-objective optimization problem through the weighted method [17]. That is, we introduce a weight parameter \(\alpha \in [0,1]\) and combine the three objective functions into a single objective [18] as follows:

3.2 Distributed Algorithm

To solve the above problem in a distributed manner, we relax the constraints (2), (4), (5) and formulate the Lagrange function as

where \({u_{ijq}},{v_{ijq}}\) and \({w_{ijq}}\) are Lagrange multipliers. In addition, the corresponding Lagrange dual function is

The Lagrange dual problem of (10) is then defined as \(\max \; g(u,v,w)\), which can be solved iteratively using a primal-dual Lagrange method. Notice that due to the discrete constraint set, we have to employ a subgradient method for updating the dual variables.

where \([\cdot]^{+}\) denotes the projection onto the set of nonnegative real numbers, and \({\tau ^{(n)}}\) is a positive step size.
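A generic projected subgradient step of this kind can be sketched as follows. This is an assumption-laden illustration rather than the paper's update rule: it assumes each relaxed constraint is written as \(h(\cdot) \le 0\), so that the constraint value at the current primal solution is a subgradient of the dual function with respect to the corresponding multiplier. The function name and the numbers are hypothetical.

```python
def projected_subgradient_step(multipliers, subgradient, step_size):
    """Return [mu + tau * g]^+ element-wise: dual ascent followed by projection."""
    return [max(0.0, m + step_size * g) for m, g in zip(multipliers, subgradient)]


# Hypothetical multipliers for two relaxed constraints of the form h(x) <= 0.
multipliers = [0.5, 0.1]
# Constraint values at the current primal solution:
# the first constraint is violated (h > 0), the second is slack (h < 0).
violation = [2.0, -3.0]

updated = projected_subgradient_step(multipliers, violation, step_size=0.1)
```

The multiplier of the violated constraint increases, which raises the price of the overused resource, while the multiplier of the slack constraint is clipped at zero by the projection.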

The primal problem can be further decomposed into two subproblems, named P1 and P2, as follows:

3.3 Implementation Problem

A decentralized solution procedure of the proposed primal-dual algorithm is summarized in Algorithm 1. For each BS i, subproblem P1 involves only the delivery decision variables \({y_{ijq}}\) and \({z_{ijq}}\); we call it the delivery subproblem. Since the objective function of P1 is convex and strictly feasible solutions exist, it can be solved efficiently using standard convex optimization techniques [18]. By using the subgradient method, we have

Subproblem P2 involves only the caching decision variables \({x_{ijq}}\); we call it the caching subproblem. It can be separated into \(\left| I \right| \) one-dimensional knapsack problems, one for each \(i \in I\). Each of these knapsack problems can be solved with a greedy algorithm in a distributed manner.
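A greedy treatment of one per-BS knapsack can be sketched as below. This is a minimal illustration under stated assumptions: each candidate (video, version) pair has a nonnegative value, which in the decomposition would be derived from the current Lagrange multipliers, and a size \(o_{jq}\); items are taken in decreasing order of value per unit of storage. The item names and values are hypothetical, and ranking by value density is a standard heuristic for the 0/1 knapsack rather than a rule stated in the text.

```python
def greedy_cache(items, capacity):
    """Pick items by descending value per unit size until storage is exhausted.

    items: list of (name, value, size) tuples with size > 0.
    Returns the names of the chosen items in the order they were picked.
    """
    chosen, used = [], 0.0
    ranked = sorted(items, key=lambda it: it[1] / it[2], reverse=True)
    for name, value, size in ranked:
        # Skip items that bring no benefit or no longer fit in the remaining space.
        if value > 0 and used + size <= capacity:
            chosen.append(name)
            used += size
    return chosen


# Hypothetical candidates for one BS: (name, value, size in units of data).
items = [
    ("v1_low", 8.0, 10.0),
    ("v1_high", 9.0, 20.0),
    ("v2_low", 3.0, 10.0),
    ("v2_high", 2.0, 20.0),
]
cached = greedy_cache(items, capacity=30.0)
```

Since each BS only needs its own candidate list and storage budget, the same routine can run independently at every BS.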

3.4 Convergence Analysis

Algorithm 1 converges asymptotically to the optimal solution \(x_{ijq}^*\), \({y_{ijq}^*}\), \({z_{ijq}^*}\). A formal proof of the convergence follows directly from the properties of the decomposition principle [11]. In general, either constant or diminishing step sizes can be used for a subgradient algorithm [19]. A constant step size is more convenient for distributed implementation, but the corresponding subgradient algorithm only converges to within a small neighborhood of the optimum [14]. With a diminishing step size, convergence to the optimum can be guaranteed.
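The difference between the two step-size rules can be seen on a toy problem. The sketch below minimizes \(f(x) = |x|\), not the TJM problem, with a subgradient method; the starting point, the constant step 0.3 and the diminishing rule \(0.3/(n+1)\) are arbitrary illustrative choices.

```python
def sign(x):
    """A subgradient of |x|: +1 for x > 0, -1 for x < 0, and 0 at x = 0."""
    return (x > 0) - (x < 0)


def subgradient_descent(x0, step_rule, iterations):
    """Minimize f(x) = |x| with updates x <- x - tau_n * sign(x)."""
    x = x0
    for n in range(iterations):
        x -= step_rule(n) * sign(x)
    return x


# Constant step: the iterate keeps jumping across the minimizer x = 0.
x_constant = subgradient_descent(1.0, lambda n: 0.3, 2000)

# Diminishing, non-summable step: the oscillation shrinks with the step size.
x_diminishing = subgradient_descent(1.0, lambda n: 0.3 / (n + 1), 2000)
```

With the constant step the iterate ends up oscillating within a band around the minimizer whose width is set by the step size, whereas with the diminishing step the final iterate is much closer to zero.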

4 Numerical Analysis and Result

In this section, we present numerical results to evaluate the performance of the proposed tri-party joint optimization policy. For the numerical analysis, we consider a commercial cellular caching network consisting of a VP and an MNO with \(\left| I \right| = 100\) BSs, and 1000 mobile users placed uniformly at random, in statistically independent positions, in the cell. We use a Zipf distribution [20] to model the popularity of the video files.

In all simulations, we assume a total of \(\left| J \right| = 100\) video files, each of which can be delivered in \(\left| Q \right| = 2\) versions. The size \({o_{jq}}\) of a version of a video is 10 and 20 units of data at the low and the high quality level, respectively, and the playback rate \({R_{jq}}\) is therefore 9.5 and 19.5, respectively, as we set \(a = 1\) and \(b = 0.5\) [15]. Within a certain period, each user requests a video file according to a Zipf distribution with exponent \(\gamma = 0.8 \) and chooses the version uniformly at random. Unless otherwise specified, the capacity of the wireless links is \({F_i} = 100\) and that of the backhaul links is \({G_i} = 50\). We also set \(t=1000\) and \({c^{bh}} = 200\); the latter can be an arbitrary constant in the simulation and can be set by the VP in reality.
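The popularity model in this setup can be sketched as follows, using the standard Zipf form in which the file of rank k is requested with probability proportional to \(1/k^{\gamma}\). The function name is hypothetical; the parameters \(\left| J \right| = 100\) and \(\gamma = 0.8\) are those stated above.

```python
def zipf_popularity(num_files, exponent):
    """Request probability of each file by rank: p_k proportional to 1 / k**exponent."""
    weights = [1.0 / (rank ** exponent) for rank in range(1, num_files + 1)]
    total = sum(weights)
    return [w / total for w in weights]


popularity = zipf_popularity(num_files=100, exponent=0.8)

# Share of all requests that target the ten most popular files.
top10_share = sum(popularity[:10])

# Each of the two rate versions is requested with equal probability.
version_probability = 1.0 / 2
```

A larger exponent concentrates more of the requests on the top-ranked files, which is why a cache of fixed size becomes more effective as \(\gamma\) grows.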

Fig. 2(a) shows the percentage of users that can be served by our commercial cellular caching system. Note that all ratios of served users are obtained under constrained wireless and backhaul capacity. In Fig. 2(a) we observe that the ratio of users that can be served increases gradually with the storage capacity. Each local BS can cache more popular video files as the storage capacity increases, so more user requests can be satisfied locally and the weak backhaul capacity is saved. Under the same conditions, a user who cannot find the desired video at the local BS is then served by the VP through the saved backhaul capacity. To a certain extent, this increases the proportion of users that can be served by the proposed caching policy.

In Fig. 2(b) we observe that the total tri-party cost decreases with the storage capacity. This is because more user requests can be served by the local BS as the storage capacity increases: more popular videos can be cached at the BS in advance, which greatly reduces duplicated video transmissions. In addition, the backhaul resources saved by this reduction can be used to serve more of the user requests that cannot be served by the local BS.

In the upper panel of Fig. 3 we can see that the cost of the users (i.e., the user access delay) decreases with the storage capacity. This is because more users can be served directly by the adjacent BS, which is geographically closer to the users and faster than the backhaul links connected to the VP. Because more users can download the requested video from local storage, the MNO also saves many video transmissions over the backhaul link. Therefore, in the middle panel of Fig. 3 the profit of the MNO (i.e., the reduction of the backhaul cost) increases with the storage capacity. In the lower panel of Fig. 3 the user attrition decreases with the storage capacity. This is because more user requests can be served by the local cache of the BS while the number of requests served by the VP decreases noticeably, and with the considerable backhaul resources saved, the VP can serve more of the users that cannot get the desired service from the local BS. Therefore, as the storage capacity increases, the profits of the users, the MNO and the VP all increase substantially.

The impact of the Zipf parameter \(\gamma \) on the tri-party serving cost is illustrated in Fig. 4. The average serving cost of the proposed scheme in a caching system is clearly lower than in a system without caching. As the Zipf parameter \(\gamma \) increases (from 0.1 to 1.7), the corresponding optimal average tri-party serving cost decreases dramatically. This is because, as \(\gamma \) increases, a few popular videos account for a large percentage of the video traffic, and under our strategy the most popular videos are cached at the local BSs despite the constrained storage capacity.

5 Conclusion

In this paper, we addressed the tri-party joint profit maximization problem, which involves the mobile users, the VP and the MNO, by introducing a weight coefficient to combine our three objective functions into one tri-party joint minimization problem. We then solved the problem through the primal-dual decomposition method. We found that the primal profit maximization problem can be decomposed into a caching problem and a delivery problem, which can be solved by a greedy algorithm and the subgradient method, respectively. Finally, simulation results verified the proposed scheme.

References

1. Cisco Visual Networking Index: Global Mobile Data Traffic Forecast Update, 2016–2021 White Paper. http://www.cisco.com/c/en/us/solutions/collateral/service-provider/visual-networking-index-vni/mobile-white-paper-c11-520862.html. Accessed 09 July 2017

2. Molisch, A.F., Caire, G., Ott, D., et al.: Caching eliminates the wireless bottleneck in video-aware wireless networks. An Overview Paper Submitted to Hindawi’s J. Adv. Electr. Eng. 2014(9), 74–80 (2014)

3. Wang, X., Chen, M., Taleb, T., et al.: Cache in the air: exploiting content caching and delivery techniques for 5G systems. IEEE Commun. Mag. 52(2), 131–139 (2014)

4. Shanmugam, K., Golrezaei, N., Dimakis, A.G., et al.: FemtoCaching: wireless content delivery through distributed caching helpers. IEEE Trans. Inf. Theory 59(12), 8402–8413 (2013)

5. Maddah-Ali, M.A., Niesen, U.: Fundamental limits of caching. IEEE Trans. Inf. Theory 60(5), 2856–2867 (2014)

6. Jin, Y., Wen, Y., Westphal, C.: Optimal transcoding and caching for adaptive streaming in media cloud: an analytical approach. IEEE Trans. Circuits Syst. Video Technol. 25(12), 1914–1925 (2015)

7. Li, W., Oteafy, S.M.A., Hassanein, H.S.: Dynamic adaptive streaming over popularity-driven caching in information-centric networks. In: 2015 IEEE International Conference on Communications, pp. 5747–5752 (2015)

8. Li, J., Chen, W., Xiao, M., et al.: Efficient video pricing and caching in heterogeneous networks. IEEE Trans. Veh. Technol. 65(10), 8744–8751 (2016)

9. Li, J., Sun, J., Qian, Y., et al.: A commercial video-caching system for small-cell cellular networks using game theory. IEEE Access 4, 7519–7531 (2016)

10. Li, J., Chen, H., Chen, Y., et al.: Pricing and resource allocation via game theory for a small-cell video caching system. IEEE J. Select. Areas Commun. 34(8), 2115–2129 (2016)

11. Poularakis, K., Iosifidis, G., Argyriou, A., et al.: Video delivery over heterogeneous cellular networks: optimizing cost and performance. In: 2014 IEEE International Conference on Computer Communications, pp. 1078–1086 (2014)

12. He, J., Zhang, H., Zhao, B., et al.: A Collaborative Framework for In-network Video Caching in Mobile Networks. Eprint Arxiv (2014)

13. Jiang, W., Feng, G., Qin, S.: Optimal cooperative content caching and delivery policy for heterogeneous cellular networks. IEEE Trans. Mob. Comput. 16(5), 1382–1393 (2017)

14. Palomar, D., Chiang, M.: A tutorial on decomposition methods and distributed network resource allocation. IEEE J. Sel. Areas Commun. 24(8), 1439–1451 (2006)

15. Zhang, W., Chen, Z., Chen, Z., et al.: QoE-driven cache management for HTTP adaptive bit rate streaming over wireless networks. IEEE Trans. Multimedia 15(6), 1431–1445 (2013)

16. Gharaibeh, A., Khreishah, A., Ji, B., et al.: A provably efficient online collaborative caching algorithm for multicell-coordinated systems. IEEE Trans. Mob. Comput. 15(8), 1863–1876 (2015)

17. Miettinen, K.: Nonlinear Multiobjective Optimization. Kluwer Academic Publishers, Norwell (1999)

18. Zou, J., Xiong, H., Li, C., et al.: Lifetime and distortion optimization with joint source/channel rate adaptation and network coding-based error control in wireless video sensor networks. IEEE Trans. Veh. Technol. 60(3), 1182–1194 (2011)

19. Bertsekas, D.: 6.253 convex analysis and optimization. Athena Sci. 129(2), 420–432 (2004). Spring 2004

20. Breslau, L., Cao, P., Fan, L., et al.: Web caching and Zipf-like distributions: evidence and implications. In: Proceedings of IEEE INFOCOM, vol. 1, pp. 126–134 (1999)


Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Zhai, C., Zou, J., Zhang, Y. (2017). Video Content Caching and Delivery for Tri-Party Joint Profit Optimization. In: Zhao, Y., Kong, X., Taubman, D. (eds) Image and Graphics. ICIG 2017. Lecture Notes in Computer Science(), vol 10667. Springer, Cham. https://doi.org/10.1007/978-3-319-71589-6_22


DOI: https://doi.org/10.1007/978-3-319-71589-6_22


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-71588-9

Online ISBN: 978-3-319-71589-6

eBook Packages: Computer Science, Computer Science (R0)