Abstract

Obtaining an elemental image (EI) array with 3DS MAX in a virtual integral imaging system requires a large-scale camera array, which is difficult to apply in practice. To solve this problem, we establish a sparse acquisition integral imaging system. To improve the accuracy of the disparity calculation, we propose a method that uses color segmentation and integral projection to calculate the average disparity of each color object between two adjacent images. First, the virtual scene and the microlens array model are built in 3DS MAX. Using the mapping relationship between EIs and sub images (SIs), we obtain the SIs first and then compute the EIs from them. The average disparity of the different color objects between adjacent images is obtained by color image segmentation and integral projection; a rectangular window of fixed size is then translated according to the average disparities to crop the rendered output images into SIs. Finally, the SIs are stitched and mapped to obtain the EIs, which are loaded onto the display device to show the 3-dimensional (3D) scene. The experimental results show that only 12 * 12 cameras are needed instead of 59 * 41 cameras to obtain the EIs, and the 3D display effect is clearly visible. The error rate of the disparity calculation is 0.433% in both the horizontal and vertical directions, which is lower than the error rates of 2.597% and 4.762% obtained with other methods. The sparse acquisition integral imaging system is more accurate and more convenient, and can be used for EI content acquisition for large-screen 3D display.

1 Introduction

Integral imaging [1,2,3] is one of the advanced 3D display technologies currently being developed internationally. It is an autostereoscopic, multi-perspective imaging technique that captures and reproduces a light field using a 2D array of microlenses. In recent years, with the development of industrial production and the popularity of 3D movies in cinemas, 3D display technology has become a hot research topic. However, viewers need to wear 3D glasses while watching 3D movies, so the viewing experience is not ideal. 3D movies are based on the binocular disparity principle [4]: the left-eye and right-eye images are separated and the brain fuses them into a stereoscopic impression, so watching for a long time causes visual fatigue. Integral imaging does not require viewers to wear any device. It reproduces the 3D scene faithfully, without visual fatigue, and provides continuous disparity and true-color images, so it has good application prospects.

The integral imaging system consists of two parts: the pickup process and the display process. In the pickup process, each microlens acquires an image of the subject as seen from the viewpoint of that lens’s location. In the display process, each microlens allows each observing eye to see only the area of the associated micro-image containing the portion of the subject that would have been visible through that space from that eye’s location. There are two main kinds of integral imaging systems: optical integral imaging systems and computer virtual integral imaging systems. The computer virtual pickup method [5] is the most popular EI content acquisition method. However, direct shooting of EIs requires a camera array containing a large number of cameras, and the rendering process usually takes a long time and is prone to error. Exploiting the mapping relationship between the EI array and the SI array [6, 7], we propose a method that obtains the SI array first and then computes the EI array from it. This method reduces the number of cameras required and achieves sparse acquisition integral imaging. Specifically, we set up a camera array as a virtual microlens array in 3DS MAX [8] to pick up the scene and render the output images. We then use color segmentation to separate objects of different colors. The horizontal and vertical disparities of each same-color object between adjacent images are obtained by the integral projection method, and the averages of the horizontal and vertical disparities over the objects that satisfy the selection condition are taken as the final disparities. Next, we translate a rectangular window of fixed size according to the disparity values to crop the rendered output images into the SI array, and the EI array is obtained after mapping. Finally, the displayed 3D images are captured by our device on the optical platform, which verifies the sparse acquisition integral imaging system.

2 Theory

2.1 Camera Imaging Principle

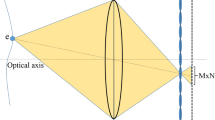

3D Studio Max, often referred to as 3DS MAX, is 3D modeling and rendering software that helps us pick up scenes for design visualization. 3DS MAX provides free cameras and target cameras, which can be used to simulate a real camera picking up target objects. Figure 1 shows the pickup and display processes of a traditional integral imaging system.

The imaging principle [9] is shown in Fig. 2, where u is the distance from the 3D object to the camera lens, v is the distance between the film and the camera lens, L is the edge length of the 3D object, l is the length of the image on the camera film, and f is the focal length of the camera. The Gauss imaging formulas are as follows:
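With the symbols defined above, the standard Gaussian thin-lens relations can be written as below. This is a reconstruction from the symbol definitions, not a verbatim copy of the typeset formulas: the number (1) is inferred from the later use of formula (1) in Sect. 3.2, and the magnification relation (2) is assumed to be the usual similar-triangles form.

```latex
\begin{align}
\frac{1}{u} + \frac{1}{v} &= \frac{1}{f} \tag{1} \\
\frac{L}{l} &= \frac{u}{v} \tag{2}
\end{align}
```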

2.2 Color Segmentation in HSV Space

After obtaining the color images rendered by 3DS MAX, we convert the RGB data into HSV data, set the threshold range for each color, and segment the image into its differently colored parts [10,11,12]. The color thresholds are listed in Table 1. After the images of the different colors have been segmented in HSV space, they are converted back into RGB space.
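The segmentation step can be sketched as follows. This is a minimal illustration using only the Python standard library, not the implementation used in the experiments: the hue ranges and the saturation and value bounds are hypothetical placeholders standing in for the thresholds of Table 1, and the function name `segment_color` is introduced here for illustration.

```python
import colorsys

# Hypothetical hue ranges in degrees; the real thresholds are those of Table 1.
HUE_RANGES = {
    "green": (90.0, 150.0),
    "blue": (210.0, 270.0),
}
MIN_SATURATION = 0.3  # assumed bound that rejects gray background pixels
MIN_VALUE = 0.2       # assumed bound that rejects very dark pixels


def segment_color(image, color):
    """Keep only the pixels of `image` whose HSV values fall in the range for `color`.

    `image` is a list of rows, each row a list of (r, g, b) tuples in 0..255.
    Rejected pixels are set to black; kept pixels retain their RGB values,
    so the result is already back in RGB space.
    """
    lo, hi = HUE_RANGES[color]
    result = []
    for row in image:
        out_row = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
            hue_deg = h * 360.0
            keep = lo <= hue_deg <= hi and s >= MIN_SATURATION and v >= MIN_VALUE
            out_row.append((r, g, b) if keep else (0, 0, 0))
        result.append(out_row)
    return result


image = [
    [(0, 200, 0), (0, 0, 220)],
    [(128, 128, 128), (10, 180, 20)],
]
green_only = segment_color(image, "green")
```

In this toy image the two greenish pixels survive, while the blue and gray pixels are replaced by black.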

2.3 Window Interception Based on Integral Projection

As shown in Fig. 3, L is the distance between a pixel and its nearest microlens, F is the distance between the microlens and the recording medium, and the distance between two adjacent microlenses is constant. By similar triangles, the ratio of L to F is constant. Therefore, once the disparity between any two adjacent images is known, all the disparities are known. Occlusion affects the accuracy of the disparity calculation, so we average only the disparity values that satisfy the selection condition and take that average as the final disparity. First, we establish a coordinate system in which the upper left corner of each rendered image is the origin, the x axis points to the right, and the y axis points vertically downward, as shown in Fig. 4.

Let dh denote the average horizontal disparity and dv the average vertical disparity. After obtaining the disparities, we crop the rendered images of size M1 (pixels) * N1 (pixels) with a rectangular window of size M (pixels) * N (pixels) to obtain the SI array [13, 14]. We first determine the position (x(1,1), y(1,1)) of the upper left corner of the rectangular window in the picture taken by the camera in the first row and first column, chosen so that the content to be cropped lies exactly in the center of the window. Then, according to the average disparities obtained through integral projection [15], we translate the rectangular window to crop the images into the SI array. With (x(m,n), y(m,n)) denoting the coordinate of the upper left corner of the rectangular window in the picture taken by the camera in the mth row and nth column, the formulas are as follows:
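The window translation can be sketched in code as below. This is an assumption-laden illustration, not the paper's formulas (3) and (4): it assumes the window shifts by dh per column and by dv per row in the positive x and y directions (matching the left-to-right, top-to-bottom translation described in Sect. 3.1), rounds fractional corners to the nearest pixel, and pads with a fill value outside the image. The helper names are hypothetical; the numeric values are those reported in Sect. 3.1.

```python
def window_origin(x11, y11, dh, dv, m, n):
    """Upper-left corner of the crop window for the camera in row m, column n (1-based).

    Assumes a shift of dh per column and dv per row in the positive x and y
    directions; flip the signs if the cameras are ordered the other way.
    """
    return x11 + (n - 1) * dh, y11 + (m - 1) * dv


def crop_with_fill(image, x0, y0, width, height, fill=0):
    """Crop a width x height window whose upper-left corner is (x0, y0).

    `image` is a list of rows. Fractional corners are rounded to the nearest
    pixel (an assumption), and samples outside the image are set to `fill`.
    """
    x0, y0 = round(x0), round(y0)
    rows, cols = len(image), len(image[0])
    return [
        [
            image[y][x] if 0 <= y < rows and 0 <= x < cols else fill
            for x in range(x0, x0 + width)
        ]
        for y in range(y0, y0 + height)
    ]


# Window corner for the camera in row 2, column 3, using the values of Sect. 3.1.
origin = window_origin(55, 63, 6.38888, 6.38888, m=2, n=3)

# Toy 4 x 4 image whose pixel value encodes its position as row * 10 + column.
toy = [[r * 10 + c for c in range(4)] for r in range(4)]
patch = crop_with_fill(toy, 2, 3, width=3, height=2)
```

The toy crop extends past the right and bottom edges of the image, so the missing samples are filled with 0, which corresponds to the black background fill.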

2.4 Mapping Relations Between EIs and SIs

The mapping relationship [16] is illustrated in Figs. 5 and 6. Figure 5 shows the mapping relationship between EIs and SIs. To achieve sparse acquisition, we use the inverse mapping to obtain the EIs from the SIs; the process is shown in Fig. 6. The left side is the SI array, which is composed of m * n SIs, each of size i (pixels) * j (pixels). The pixels at the same position in every SI are extracted and combined to form one mapping unit picture, namely an EI. Finally, all the EIs are combined in order to form the EI array.

According to the mapping relationship, the corresponding locations of a pixel in the image before and after mapping [17] are given by formula (5), where Former and Latter denote the images before and after mapping, x and y denote the pixel position in the image before mapping, \( x = 0, 1, 2, \ldots, m * i - 1,\;\; y = 0, 1, 2, \ldots, n * j - 1 \), % denotes the remainder operation, and \( \lfloor \cdot \rfloor \) denotes the round-down (floor) function. The EI array is composed of i * j EIs, each of size m (pixels) * n (pixels).
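The pixel rearrangement described above can be sketched as follows. The index expressions are derived from the verbal description (the pixel at offset (x % i, y % j) inside SI number (⌊x/i⌋, ⌊y/j⌋) moves to offset (⌊x/i⌋, ⌊y/j⌋) inside EI number (x % i, y % j)); they are an assumed reading of formula (5), and the function name and the use of x as the first index are choices made for this illustration.

```python
def si_array_to_ei_array(former, m, n, i, j):
    """Rearrange an SI array into an EI array.

    `former[x][y]` is indexed with x in 0..m*i-1 and y in 0..n*j-1 and holds
    m * n SIs of size i * j. The result holds i * j EIs of size m * n.
    """
    latter = [[None] * (n * j) for _ in range(m * i)]
    for x in range(m * i):
        for y in range(n * j):
            new_x = (x % i) * m + x // i  # EI index times EI size, plus SI index
            new_y = (y % j) * n + y // j
            latter[new_x][new_y] = former[x][y]
    return latter


# Toy case: 2 * 2 SIs of 2 * 2 pixels; each pixel is labeled with its (x, y).
m, n, i, j = 2, 2, 2, 2
former = [[(x, y) for y in range(n * j)] for x in range(m * i)]
latter = si_array_to_ei_array(former, m, n, i, j)
```

In the toy case the first EI (the upper left 2 * 2 block of `latter`) collects the upper left pixel of each of the four SIs.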

2.5 Display Principle

The values of m and n depend on the resolution and size of the display screen and on the pitch of the microlens array. If the screen resolution is A (pixels) * B (pixels), the size of the display screen is C (mm) * D (mm), and the pitch of the microlens array is d (mm), then m and n are calculated as:
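As a numerical illustration, the sketch below assumes that m and n are the numbers of screen pixels covered by one microlens along each axis, that is, pixel density times lens pitch, rounded to the nearest integer. This form is inferred from the quantities named above and is not a verbatim copy of formulas (6) and (7); the pairing of m with A and C is likewise an assumption. The input values are those reported in Sect. 3.1.

```python
def pixels_per_lens(resolution_px, size_mm, pitch_mm):
    """Number of screen pixels covered by one microlens along one axis."""
    return resolution_px / size_mm * pitch_mm


# Screen: 1280 * 1080 pixels, 110.7 mm * 92.7 mm; lens pitch 1.03 mm (Sect. 3.1).
m_exact = pixels_per_lens(1280, 110.7, 1.03)
n_exact = pixels_per_lens(1080, 92.7, 1.03)
m, n = round(m_exact), round(n_exact)
```

Under these assumptions both values round to 12, which agrees with the 12 * 12 camera array used in the experiment.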

2.6 Parallax Error Rate

The disparity error rate E is defined as the relative deviation of the average disparity W0 from the exact disparity value W, that is, the ratio of the absolute difference between W0 and W to W. The formula is as follows:
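A small numerical check of this definition is sketched below, reading E as |W0 - W| / W expressed in percent. The exact disparity W = 38.5 pixels used here is not stated in the text; it is an assumed reference value, chosen because it reproduces the reported error rates of 0.433% and 2.597% from Sect. 3.1.

```python
def disparity_error_rate(w0, w):
    """Relative deviation of the measured disparity w0 from the exact disparity w, in percent."""
    return abs(w0 - w) / w * 100.0


EXACT = 38.5  # assumed reference disparity in pixels, inferred from the reported rates

rate_average = disparity_error_rate(38.3333, EXACT)  # average over the selected objects
rate_general = disparity_error_rate(37.5, EXACT)     # hypothetical whole-scene disparity
```

With W = 38.5, a whole-scene disparity that is off by one pixel (for example 37.5, itself a hypothetical value) gives the 2.597% figure.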

3 Experimental Results and Discussion

3.1 EIs Generated by Virtual Microlens Array

The display screen is the 5.5-inch (in) screen of an OPPO R9 phone, with a resolution of 1280 (pixels) * 1080 (pixels) and a size of 110.7 mm * 92.7 mm. The size of the microlens array is 55 mm * 55 mm, and its horizontal and vertical pitches are both 1.03 mm. According to formulas (6) and (7), m = n = 12, so each EI should have a resolution of 12 (pixels) * 12 (pixels). We therefore place 12 rows and 12 columns of free cameras to pick up the virtual scene. The free camera lens focal length is set to f = 50 mm, the camera pitch to p = 10 in, and the viewing angle to θ = 39.598°. The teapot and ball models in 3DS MAX are used as the target objects. The radius of the teapot is 12 in, the radii of the yellow and green balls are 3 in, the radius of the blue ball is 3.5 in, and the radius of the orange ball is 5 in. The free camera array is placed at a distance of 210 in from the objects. Different views of the 3D scene are shown in Fig. 7.
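The stated viewing angle is consistent with the focal length under the usual pinhole relation θ = 2·arctan(w / 2f). The sketch below assumes a horizontal aperture (film) width of w = 36 mm, which is not given in the text and is used here only as a plausible default.

```python
import math


def field_of_view_deg(focal_length_mm, aperture_width_mm=36.0):
    """Horizontal field of view of a pinhole camera, in degrees.

    The 36 mm aperture width is an assumption, not a value from the text.
    """
    return math.degrees(2.0 * math.atan(aperture_width_mm / (2.0 * focal_length_mm)))


theta = field_of_view_deg(50.0)
```

For f = 50 mm this evaluates to approximately 39.598°, in agreement with the camera setting above.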

The rendered images are set to a size of 600 (pixels) * 600 (pixels), converted into HSV space for color segmentation, and then converted back to RGB space for display. The results are shown in Fig. 8.

Figure 8(a) is the color image of size 600 (pixels) * 600 (pixels) rendered by the 66th camera (6th row, 6th column) in 3DS MAX. Figure 8(b), (c), (d), (e) and (f) are the pink, green, blue, orange and yellow portions after color segmentation. We select the images of the 66th and 67th (6th row, 7th column) cameras, segment them by color, and then compute the horizontal and vertical integral projections to obtain the horizontal disparity. Some of the integral projections of adjacent images for different colors are shown in Fig. 9: Fig. 9(a) is the integral projection of the green ball and Fig. 9(b) is the integral projection of all objects taken as a whole.
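The disparity measurement by integral projection can be illustrated with the sketch below. It sums a segmented binary mask along columns and rows, locates the object in each projection, and subtracts the positions found in two adjacent images. Using the centroid of the projection as the object position is an assumption made for this illustration; the text does not state which feature of the projection is used, and the function names are hypothetical.

```python
def integral_projections(mask):
    """Column sums and row sums of a 2D mask given as a list of rows."""
    col_sums = [sum(col) for col in zip(*mask)]
    row_sums = [sum(row) for row in mask]
    return col_sums, row_sums


def projection_center(projection):
    """Centroid of a 1D projection, used here as the object position (an assumption)."""
    total = sum(projection)
    if total == 0:
        raise ValueError("object not present in this image")
    return sum(idx * value for idx, value in enumerate(projection)) / total


def disparity(mask_a, mask_b):
    """Horizontal and vertical displacement of one object between two masks."""
    cols_a, rows_a = integral_projections(mask_a)
    cols_b, rows_b = integral_projections(mask_b)
    dh = projection_center(cols_b) - projection_center(cols_a)
    dv = projection_center(rows_b) - projection_center(rows_a)
    return dh, dv


def square_mask(size, top, left, side):
    """Binary mask of the given size containing one filled square."""
    return [
        [1 if top <= r < top + side and left <= c < left + side else 0 for c in range(size)]
        for r in range(size)
    ]


# The same 2 x 2 object seen 3 pixels further right in the adjacent image.
dh, dv = disparity(square_mask(8, 2, 1, 2), square_mask(8, 2, 4, 2))
```

Because the mask contains a single color object, the projections are not disturbed by the other objects in the scene, which is the motivation for segmenting by color first.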

Similarly, we select the images of the 66th and 78th (7th row, 6th column) cameras, segment them by color, and compute the integral projections to obtain the vertical disparity. The results are shown in Fig. 10.

To obtain a reference standard for the disparity, we replace the target objects with a white ball of radius 0.05 in, keeping all other conditions unchanged, shoot the white ball with the camera array, and render the pictures at the same size of 600 (pixels) * 600 (pixels) for integral projection. We use the 66th and 67th camera images to obtain the horizontal disparity, and the 66th and 78th camera images to obtain the vertical disparity (Fig. 11).

From the integral projections of the different color objects, we obtain the location coordinates of each color object in one picture and in the adjacent picture. Subtracting the two coordinates of the same object gives the disparity of that color object. The results are shown in Table 2.

In Table 2, Yellow 1 is the yellow ball on the left side and Yellow 2 is the yellow ball on the right side; Pink, Blue, Green and Orange are the objects of the corresponding colors, and General represents all objects taken as a whole. Since the horizontal pitch of the cameras is the same as the vertical pitch, the horizontal disparity of an object should equal its vertical disparity. Because the horizontal and vertical disparity values of Yellow 1, Yellow 2 and Pink are not equal, we discard these data. Averaging the disparities of the Blue, Green and Orange objects gives an average disparity of 38.3333 pixels. According to formula (8), the error rate of the disparity calculation is 0.433% in both the horizontal and vertical directions. The disparity error rate for all objects taken as a whole is 2.597%, and that of the method in the literature [18] is 4.762%. The method used in this paper therefore significantly reduces the disparity error rate.
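The selection rule described above (keep only the objects whose horizontal and vertical disparities agree, then average) can be sketched as follows. The per-object numbers are placeholders chosen only so that the kept objects average to 38.3333 pixels; they are not the values of Table 2, and the function name and tolerance parameter are hypothetical.

```python
def average_consistent_disparity(measurements, tolerance=0.0):
    """Average the disparities of objects whose horizontal and vertical values agree.

    `measurements` maps an object name to a (horizontal, vertical) disparity pair.
    Returns the sorted names of the kept objects and the two averages.
    """
    kept = {
        name: (dh, dv)
        for name, (dh, dv) in measurements.items()
        if abs(dh - dv) <= tolerance
    }
    if not kept:
        raise ValueError("no object satisfies the consistency condition")
    avg_h = sum(dh for dh, _ in kept.values()) / len(kept)
    avg_v = sum(dv for _, dv in kept.values()) / len(kept)
    return sorted(kept), avg_h, avg_v


# Placeholder values, NOT the measurements of Table 2.
placeholder = {
    "Yellow 1": (37, 39),
    "Yellow 2": (40, 38),
    "Pink": (36, 38),
    "Blue": (38, 38),
    "Green": (38, 38),
    "Orange": (39, 39),
}
names, avg_h, avg_v = average_consistent_disparity(placeholder)
```

Objects affected by occlusion tend to violate the equality of the two disparities, so this filter removes them before averaging.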

When the size of the rendered image is 100 (pixels) * 100 (pixels), the disparity is 1/6 of that for the rendered image of size 600 (pixels) * 600 (pixels), so both the average horizontal and vertical disparities are 6.38888 pixels. The upper left corner of the rectangular window in the picture rendered by the camera in the first row and first column is set to (55, 63). The rectangular window of size 59 (pixels) * 41 (pixels) is translated from left to right and from top to bottom in steps of 6.38888 pixels to crop the different images. Where the cropped region is smaller than 59 (pixels) * 41 (pixels), we fill the missing area with black pixels. The cropped pictures, spliced in camera order, are shown in Fig. 12. According to the mapping relationship between EIs and SIs, the EI array is obtained as shown in Fig. 13.

3.2 Display by Plat Lens Array

In the display process, the focal length of the microlens array is 5 mm, and the distance from the microlens array to the display screen is 3 mm, which is exactly the thickness of the microlens array. According to the Gauss imaging formula (1), the distance between the central depth plane of the image and the microlens array is 7.5 mm; the image is a virtual image located inside the display screen.
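The 7.5 mm figure can be checked numerically, assuming formula (1) is the thin-lens relation 1/u + 1/v = 1/f with u the lens-to-screen distance. The function name is introduced here for illustration.

```python
def image_distance(f, u):
    """Solve 1/u + 1/v = 1/f for v; a negative result indicates a virtual image."""
    return 1.0 / (1.0 / f - 1.0 / u)


v = image_distance(f=5.0, u=3.0)  # focal length and lens-to-screen distance in mm
```

The result is v = -7.5 mm: the magnitude matches the stated depth of the central depth plane, and the negative sign reflects that the screen lies inside the focal length, so the image is virtual.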

To verify the 3D display of the sparse acquisition integral imaging system, we build a device on the optical platform. First, the adjustable lifting platform is fixed on the optical platform to adjust the height. Then, the manual translation stage is fixed on the adjustable lifting platform to adjust the left-right position, and the slide rail is fixed on the manual translation stage to adjust the forward-backward position. Next, the microlens array is placed on the OPPO R9 phone screen, with the edges of the microlens array kept parallel to those of the phone screen, and the assembly is placed on the optical platform. A second phone, an iPhone 6 (4.7 inches, resolution 1334 (pixels) * 750 (pixels)), is placed on the slide rail. We turn on the iPhone 6 camera and slowly adjust the device so that the iPhone 6 moves from left to right and from front to back to capture images from different viewpoints. Different perspective views of the device are shown in Fig. 14.

The images taken by the device from different viewpoints are shown in Fig. 15.

Every image in Fig. 15 has a counterpart in Fig. 12. For example, Fig. 15(a) is similar to the SI in the fourth row and fifth column of Fig. 12.

4 Conclusion

In this paper, a sparse acquisition integral imaging system based on color segmentation and integral projection is proposed. We use color segmentation in HSV space to separate the objects of different colors, use the integral projection method to calculate their disparities, and average the disparities of the selected objects, which reduces the error rate of the horizontal and vertical disparity to 0.433%, lower than the error rates of 2.597% and 4.762% obtained with the other methods. The integral projection method, commonly used in face recognition and license plate recognition, is applied here to integral imaging to locate the target objects. No other parameters of the acquisition process, such as the object distance, need to be known; the disparities are obtained from the information in the pictures alone. The SI array is obtained by translating a rectangular window of size 59 (pixels) * 41 (pixels) according to the disparities, and the EI array is obtained through the mapping relationship between EIs and SIs. Loading the EI array onto the phone and placing the microlens array on the phone screen gives a good 3D display effect. Only 12 * 12 cameras are needed instead of 59 * 41 cameras to obtain the EI array, which achieves sparse acquisition. This method makes it easier and more accurate to reproduce the 3D image.

References

1. Wang, Q.H., Deng, H.: 3D pickup and display method of integral imaging. Chin. J. Liq. Cryst. Displays 29(2), 153–158 (2014)

2. Yuan, X.C., Xu, Y.P., Yang, Y., et al.: Design parameters of elemental images formed by camera array for crosstalk reduction in integral imaging. Opt. Precis. Eng. 19(9), 2050–2056 (2011)

3. Jiao, X.X., Zhao, X., Yang, Y., et al.: Pick-up system for three-dimensional integral imaging with camera array. Opt. Precis. Eng. 20(8), 1653–1660 (2012)

4. Cao, X.: Technological bottleneck of virtual reality. Sci. Technol. Rev. 34(15), 94–103 (2015)

5. Kuang, Y., Jiang, J.: Study of virtual scene interaction based on VRML and 3DS Max. Appl. Mech. Mater. 713–715, 2345–2347 (2015)

6. Park, J.H., Jung, S., Choi, H., et al.: Depth extraction by use of a rectangular lens array and one-dimensional elemental image modification. Appl. Opt. 43(25), 4882–4895 (2004)

7. Park, J.H., Kim, J., Lee, B.: Three-dimensional optical correlator using a sub-image array. Opt. Express 13(13), 5116–5126 (2005)

8. Perry, T.S.: Autodesk 3D studio max 2017. Animation 30(6), 56 (2016)

9. Jiao, T.T., Wang, Q.H., Li, D.H., et al.: Computer-generated integral imaging based on 3DS MAX. Chin. J. Liq. Cryst. Displays 23(5), 622–624 (2008)

10. Ali, N.M., Rashid, M., Alang, N.K., Mustafah, Y.M.: Performance comparison between RGB and HSV color segmentations for road signs detection. Appl. Mech. Mater. 393, 550–555 (2013)

11. Pujol, F.A., Pujol, M., Jimeno-Morenilla, A., et al.: Face detection based on skin color segmentation using fuzzy entropy. Entropy 19(1), 26 (2017)

12. Zhu, Y.Z., Meng, Q.H., Pu, J.X.: Traffic light auto-recognition based on HSV color space and shape feature. Video Eng. 39(5), 150–154 (2015)

13. Guo, M., Si, Y.J., Wang, S.G., et al.: Elemental image generation combing discrete viewpoint pickup with adaptive window interception. J. Jilin Univ. (Eng. Technol. edn.) 46(5), 1681–1687 (2016)

14. Lyu, Y.Z., Wang, S.G., Zhang, D.T.: Elemental image array generation and sparse viewpoint pickup in integral imaging. J. Jilin Univ. (Eng. Technol. edn.) 43(S1), 1–5 (2013)

15. Yang, F., Zhang, H., Pan, G.F.: Eye location based on adaptive image segmentation and curve blending. Opt. Precis. Eng. 21(12), 3255–3262 (2013)

16. Wang, Y., Piao, Y.: Computational reconstruction for integral imaging with sampled elemental images. Acta Optica Sinica 34(5), 70–75 (2014)

17. Di, B.H.: The Improvement of Digital Contents Creation Technology in Integral Imaging. Jilin University (2014)

18. Lyu, Y.Z.: Research on Generation, Coding and Display of Elemental Image Array in Integral Imaging System. Jilin University (2014)

Acknowledgement

This work was supported by the National Natural Science Foundation of China (61631009).

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Jia, B., Wang, S., Wu, W., Li, T., Zhang, L. (2017). Sparse Acquisition Integral Imaging System. In: Zhao, Y., Kong, X., Taubman, D. (eds) Image and Graphics. ICIG 2017. Lecture Notes in Computer Science, vol 10668. Springer, Cham. https://doi.org/10.1007/978-3-319-71598-8_37

DOI: https://doi.org/10.1007/978-3-319-71598-8_37

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-71597-1

Online ISBN: 978-3-319-71598-8

eBook Packages: Computer Science, Computer Science (R0)