Abstract

The widespread use of electronic imaging equipment and the Internet has made photo sharing a popular and influential activity. However, privacy leaks caused by photo sharing have raised serious concerns, and effective privacy protection methods are required. In this paper, a new privacy-preserving algorithm for photo sharing, targeting human faces in group photos in particular, is proposed based on sparse representation and data hiding. Our algorithm masks the region that contains privacy information with a cartoon image and applies sparse representation to obtain a more concise representation of this region. The sparse coefficients and part of the residual errors obtained from the sparse representation are then encoded and embedded into the photo by data hiding with a secret key, which avoids extra storage overhead. The result is a privacy-protected photo that only people with the correct key can restore to its original version. Experimental results demonstrate that the proposed algorithm does not increase storage or bandwidth requirements, while ensuring good quality of the reconstructed image.


1 Introduction

With the widespread use of mobile phones and the popularity of social network applications, sharing photos online, especially group photos taken in specific scenes, has become increasingly popular. However, because most photo sharing applications offer no option to protect individual privacy, this popular activity has had a negative impact on privacy. Recently, a large number of privacy disclosure cases have raised public concern about privacy protection methods.

To address the privacy leakage problem, a large body of work has been devoted to the privacy protection of videos and photos. For video, some surveillance systems protect privacy information by implementing access rights management [1] or by scrambling specific regions [2]. For photos, almost all photos used in photo sharing applications are in JPEG format, so many effective methods operate on JPEG photos, including JPEG scrambling [3], JPEG transmorphing [4], and the P3 (privacy-preserving photo sharing) algorithm [5]. Scrambling can also be applied to photos by randomly modifying the signs of the quantized discrete cosine transform (DCT) coefficients. This protects the privacy information in sensitive regions, but it is hard to achieve a good visual effect at the same time. To improve the visual effect, Lin et al. [4] replaced the sensitive region with a smiley face and inserted the original privacy information into the application markers of the JPEG file; however, this approach increases the storage and bandwidth requirements. Another popular method is P3, which divides the photo into a public part and a secret part by thresholding the quantized DCT coefficients. Only the public part is uploaded to the photo sharing service providers (PSPs), so the secret part, which contains most of the privacy information, is protected. However, P3 works only at the whole-image level, and it requires an extra cloud storage provider to store the encrypted secret part.

In this paper, we propose a novel photo privacy-preserving method based on sparse representation and data hiding, which protects photo privacy effectively and conveniently. If the correct key is provided, the photo can be recovered to its original version without keeping a copy of the original. Sparse representation is used to find a more concise expression for each image signal in the target region, which reduces the amount of data that the subsequent data hiding step must embed. Data hiding is employed to avoid extra storage overhead. As a result, the privacy information is protected, and the privacy-protected photo can be recovered without sacrificing storage, bandwidth, or the quality of the reconstructed photo. Based on this algorithm, we further propose an efficient photo sharing architecture that consists of two parts: (1) a client side that performs photo privacy protection and photo reconstruction, and (2) a server that stores privacy-protected photos.

The rest of this paper is organized as follows. Section 2 presents the proposed photo privacy-preserving algorithm in detail. Section 3 discusses the experimental results and their analysis. Finally, Sect. 4 concludes the paper.

2 Photo Privacy-Preserving Algorithm

This section describes the proposed photo privacy-preserving algorithm in two parts: photo privacy protection and photo reconstruction. JPEG is the most popular photo format in online social networks, so the proposed algorithm is designed mainly for JPEG photos.

2.1 Photo Privacy Protection

The flowchart of the photo privacy protection process is shown in Fig. 1. The main procedures include face detection and cartoon mask addition, sparse representation and residual errors calculation, residual errors addition, along with arithmetic coding and data hiding.

Face Detection and Cartoon Mask Addition. To perform photo privacy protection, the photo owner first runs face detection to obtain candidate regions that may need protection. The owner may also select arbitrary regions that contain no human face. The boundaries of the selected regions are then adjusted to align with the blocks used in JPEG compression. For simplicity, the procedure is described for the case in which only one region is selected, as shown in Fig. 1(b). Multiple regions are protected in the same way.
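Aligning a selected region with the JPEG block grid can be sketched as follows. This is a minimal sketch. It assumes the region is expanded outward to the nearest \(8\times 8\) block boundaries and clipped to the image; the paper does not say whether boundaries are expanded or shrunk. `align_to_blocks` is a hypothetical helper.

```python
def align_to_blocks(x, y, w, h, img_w, img_h, b=8):
    """Expand the region (x, y, w, h) outward to the b x b JPEG block grid.

    Assumption: outward expansion, clipped to the image bounds.
    Returns the aligned (x, y, w, h).
    """
    x0 = (x // b) * b                       # round the top-left corner down
    y0 = (y // b) * b
    x1 = min(-(-(x + w) // b) * b, img_w)   # round the bottom-right corner up
    y1 = min(-(-(y + h) // b) * b, img_h)
    return x0, y0, x1 - x0, y1 - y0


print(align_to_blocks(13, 5, 20, 10, 640, 480))  # (8, 0, 32, 16)
```

Expanding rather than shrinking keeps every pixel of the detected face inside the protected region.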

Based on the coordinates of the target region, the masked image \(I_{mask}\), shown in Fig. 1(c), is generated from the cartoon mask and its edge information. Using various cartoon masks not only protects privacy, but also makes photo sharing more entertaining and gives the privacy-protected photo a good visual appearance. The original image \(I_{ori}\) (Fig. 1(a)) is assumed to be in JPEG format, so its DCT coefficients can be easily extracted. Therefore, the most straightforward way to generate \(I_{mask}\) in JPEG format is to change the DCT coefficients of the target region in \(I_{ori}\). This avoids recompressing the whole image and consequently reduces distortion. Note that here, and throughout the rest of the paper, DCT coefficients refer to quantized DCT coefficients.

To obtain the new DCT coefficients of the target region, the first step is to generate the masked region in the pixel domain by pixel replacement. The cartoon mask and the target region differ in size, so the cartoon mask and its edge information are resized to match the target region. Pixels outside the edge keep the values of the original image, whereas pixels inside the edge are replaced by the corresponding pixels of the cartoon mask. The second step is JPEG compression. Let Q denote the quality factor of \(I_{ori}\). The new DCT coefficients of the target region are obtained by JPEG-compressing the small rectangular region produced by the first step with quality factor Q. These new coefficients are then divided into two parts: (1) coefficients of blocks that are completely independent of the cartoon mask and (2) coefficients of blocks associated with the cartoon mask. As shown in Fig. 2(a), the coefficients of the blocks with green borders belong to the first part, and those of the other blocks belong to the second part. By default, these blocks are \(8\times 8\).

Finally, the DCT coefficients of the target region in \(I_{ori}\) are replaced by the new coefficients. To minimize distortion, only the blocks that belong to the second part have their original DCT coefficients replaced. This yields \(I_{mask}\) in JPEG format. The new target region \(R_{obj}\), shown in Fig. 1(f), contains all the privacy information and is processed in the following steps.
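The pixel replacement and selective coefficient substitution described above can be sketched with NumPy. The sketch makes three assumptions:

- The cartoon mask and a boolean `inside` array (True inside the mask edge) have already been resized to the block-aligned target region.
- Quantized DCT coefficients are stored as block grids of shape (rows, cols, 8, 8).
- JPEG decoding, resizing, and compression are out of scope.

All function names are hypothetical.

```python
import numpy as np

def apply_cartoon_mask(region, cartoon, inside):
    """Pixel replacement: cartoon pixels inside the mask edge, original pixels elsewhere."""
    return np.where(inside, cartoon, region)

def mask_associated_blocks(inside, b=8):
    """(rows, cols) boolean grid: True where a b x b block contains any cartoon pixel.

    False blocks form the first part (independent of the mask); True blocks form
    the second part, whose coefficients are replaced.
    """
    H, W = inside.shape
    return inside.reshape(H // b, b, W // b, b).any(axis=(1, 3))

def substitute_coefficients(C_ori, C_new, associated):
    """Replace only the mask-associated blocks; C_* are shaped (rows, cols, 8, 8)."""
    out = C_ori.copy()
    out[associated] = C_new[associated]
    return out
```

Replacing only the associated blocks leaves every other block's quantized coefficients bit-identical to \(I_{ori}\), which is the distortion argument made in the text.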

In addition, the cartoon mask (in PNG format), its edge information, and the dictionary used in the next step are stored on the client side. This is reasonable because they are very small. Storing the edge information avoids running edge detection on the cartoon mask image in every privacy protection process.

Sparse Representation and Residual Errors Calculation. The new target region \(R_{obj}\) contains a large amount of information. Therefore, the K-means singular value decomposition (K-SVD) algorithm [6, 7] is employed to design an over-complete dictionary \(\mathbf {D} \) that yields sparse representations. The U and V components carry very little information, so their DCT coefficients are encoded and embedded directly in the arithmetic coding and data hiding step; this process is omitted from Fig. 1. In other words, only the Y component of \(R_{obj}\) is processed in this step.

Given the Y component of \(R_{obj}\), sparse representation is performed at the patch level with the over-complete dictionary \(\mathbf {D} \) trained by the K-SVD algorithm. Unless stated otherwise, the patch size is \(8\times 8\), the same as the block size used in JPEG compression. The pixel values of each block are first vectorized as \(\mathbf {y} _i\in \mathbb {R}^{n\times 1}\) \((i=1,2,\cdots ,S)\), where S is the total number of blocks in \(R_{obj}\) and \(n=64\) is the length of each vector. The dictionary \(\mathbf {D} \), whose K columns are prototype signal atoms, is then used to calculate the sparse representation coefficients \(\mathbf {x} _i\) of each \(\mathbf {y} _i\). More specifically, \(\mathbf {y} _i\) is represented as a sparse linear combination of the atoms of \(\mathbf D \) by solving

$$\begin{aligned} \min _{\mathbf {x} _i}\Vert \mathbf {y} _i-\mathbf {D} \mathbf {x} _i\Vert _2^2 \quad \text{ s.t. } \quad \Vert \mathbf {x} _i\Vert _0\le L, \end{aligned}$$ (1)

where \(\Vert \cdot \Vert _0\) is the \(l^0\) norm, i.e., the number of nonzero entries of a vector, and L controls the sparsity of \(\mathbf {x} _i\). K-SVD training is an offline procedure, and the trained dictionary is fixed in our algorithm.
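The paper does not specify which pursuit algorithm solves the sparse coding problem. Orthogonal matching pursuit (OMP), the sparse coding method commonly paired with K-SVD, is assumed in the sketch below. It codes one vectorized block with at most L atoms, then applies the rounding step \(\mathbf{x}_i' = \text{round}(\mathbf{x}_i)\) and the reconstruction \(\mathbf{D}\mathbf{x}_i'\). A random unit-norm matrix stands in for the trained dictionary.

```python
import numpy as np

def omp(D, y, L):
    """Orthogonal matching pursuit: approximately solve
    min ||y - D x||_2  s.t.  ||x||_0 <= L   (D is assumed to have unit-norm columns)."""
    x = np.zeros(D.shape[1])
    support = []
    residual = y.astype(float)
    for _ in range(L):
        j = int(np.argmax(np.abs(D.T @ residual)))  # atom most correlated with the residual
        if j in support:
            break                                   # no new atom can reduce the residual
        support.append(j)
        # Least-squares fit of y on all selected atoms (the "orthogonal" step).
        coef, *_ = np.linalg.lstsq(D[:, support], y, rcond=None)
        residual = y - D[:, support] @ coef
        x[support] = coef
    return x


rng = np.random.default_rng(0)
D = rng.standard_normal((64, 128))          # stand-in for a trained 64 x K dictionary, K = 128
D /= np.linalg.norm(D, axis=0)
y = rng.integers(0, 256, 64).astype(float)  # one vectorized 8x8 block
x = omp(D, y, L=4)
x_int = np.round(x)                         # x_i' = round(x_i)
y_rec = D @ x_int                           # reconstructed block y_i'
```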

Given the sparse coefficients and the dictionary \(\mathbf {D} \), a reconstructed signal \(\mathbf {y} _i'\) approximating the original signal \(\mathbf {y} _i\) can be computed. To simplify the encoding of \(\mathbf {x} _i\), we round the coefficients to integers, \(\mathbf {x} _i'= \text{ round } (\mathbf {x} _i)\). The reconstructed signal \(\mathbf {y} _i'\) is then calculated by

$$\begin{aligned} \mathbf {y} _i'=\mathbf {D} \mathbf {x} _i', \end{aligned}$$ (2)

where \(i=1,2,\cdots ,S\), \(\mathbf {x} _i \in \mathbb {R} ^ {K \times 1}\), and \(\mathbf {x} _i' \in \mathbb {Z} ^ {K \times 1}\).

The reconstructed signal \(\mathbf {y} _i'\) is generally not exactly equal to the original signal \(\mathbf {y} _i\). Subtracting one from the other gives the residual errors in the spatial domain.

However, part of the residual errors will be added directly to the DCT coefficients of a specific area in \(I_{mask}\) in the next step. The residual errors should therefore be calculated between the DCT coefficients of \(\mathbf {y} _i\) and \(\mathbf {y} _i'\), rather than in the spatial domain. To do so, we extract the DCT coefficients of \(\mathbf {y} _i\) directly from \(I_{ori}\), denoted as \(C_{\text{ y }_i}\). The DCT coefficients of \(\mathbf {y} _i'\), denoted as \(C_{\text{ y }_i'}\), are obtained in three steps: pixel value translation (from [0, 255] to [−128, 127]), the DCT, and quantization with the same quantization table as \(I_{ori}\). The residual errors of the DCT coefficients are then

$$\begin{aligned} \mathbf {e} _i=C_{\text{ y }_i}-C_{\text{ y }_i'}, \end{aligned}$$ (3)

where \(\mathbf {e} _i \in \mathbb {Z} ^ {n \times 1}\).
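The DCT-domain residual \(\mathbf{e}_i = C_{\text{y}_i} - C_{\text{y}_i'}\) can be sketched as below. The sign convention (original minus reconstructed) is the one that makes adding the residual back to the reconstructed coefficients restore the original. The sketch makes these assumptions:

- The orthonormal 2-D DCT-II on an \(8\times 8\) block is used; it matches the JPEG forward DCT.
- `C_y` is the block's quantized coefficients as read from \(I_{ori}\), in natural (not zigzag) order.
- \(\mathbf{y}_i'\) is rounded and clipped to valid 8-bit pixels before transformation.
- The function names are hypothetical.

```python
import numpy as np

def jpeg_dct_matrix(n=8):
    """Orthonormal DCT-II basis; C @ B @ C.T equals the JPEG forward DCT of block B."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    C = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    C[0, :] = np.sqrt(1.0 / n)
    return C

def quantized_dct(block, qtable):
    """Level shift [0,255] -> [-128,127], 2-D DCT, then quantize with qtable.
    np.round rounds halves to even; a real encoder's rounding rule may differ."""
    C = jpeg_dct_matrix(block.shape[0])
    coeffs = C @ (block.astype(float) - 128.0) @ C.T
    return np.round(coeffs / qtable).astype(int)

def dct_residual(C_y, D, x_int, qtable):
    """e_i = C_y - C_y', where y' = D x' is the reconstruction from rounded coefficients."""
    y_rec = np.clip(np.round(D @ x_int), 0, 255).reshape(8, 8)  # assumed pixel clipping
    return (C_y - quantized_dct(y_rec, qtable)).reshape(-1)
```

Because both sides are quantized with the same table, \(\mathbf{e}_i\) is an integer vector, consistent with \(\mathbf {e} _i \in \mathbb {Z} ^ {n \times 1}\).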

Figure 3 shows the process of sparse representation and residual errors calculation. After all these procedures, the sparse coefficients matrix \(\mathbf {X} \) and the residual error matrix \(\mathbf {E} \) corresponding to \(R_{obj}\) are obtained. The size of matrix \(\mathbf {E} \) is the same as the size of the selected rectangular region, as is shown in Fig. 1(b), and the values at the positions outside of \(R_{obj}\) are set to 0.

Residual Errors Addition. In this step, the residual error matrix \(\mathbf {E} \) from the previous step is divided into two parts according to the division shown in Fig. 2(b). Blocks with green borders are defined as edge blocks, and the other blocks as internal blocks. Accordingly, \(\mathbf {E} \) is split into \(\mathbf {E} _1\) and \(\mathbf {E} _2\), the residual errors in the edge blocks and internal blocks, respectively.

To reduce the amount of information that needs to be embedded, \(\mathbf {E} _2\) is added directly to the DCT coefficients of the corresponding internal blocks in \(I_{mask}\):

$$\begin{aligned} C_{show}=C_{mask}+\mathbf {E} _2, \end{aligned}$$ (4)

where \(C_{mask}\) denotes the DCT coefficients of the internal blocks in \(I_{mask}\), and \(C_{show}\) denotes the new DCT coefficients of these blocks. \(\mathbf {E} _2\) can therefore be extracted in the photo reconstruction process by a simple subtraction. \(\mathbf {E} _2\) is very small and mostly zero, so this step barely affects the visual quality of the photo and leaves the JPEG file size almost unchanged. The result is \(I_{error}\), shown in Fig. 1(d).

\(\mathbf {E} _1\) is processed in the next step.
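The split of \(\mathbf{E}\) and the addition of \(\mathbf{E}_2\) to the internal blocks can be sketched as follows. Figure 2(b) is not reproduced here, so the block classification is an assumption. A block is treated as internal when all of its pixels are cartoon pixels: its coefficients must be reproducible from the cartoon mask alone during reconstruction. A block is treated as an edge block when only some of its pixels are cartoon pixels. Block grids are shaped (rows, cols, 8, 8), and the function names are hypothetical.

```python
import numpy as np

def split_edge_internal(inside, b=8):
    """Assumed reading of Fig. 2(b): 'internal' blocks are entirely cartoon pixels,
    'edge' blocks are partly cartoon pixels; all other blocks are untouched."""
    H, W = inside.shape
    blocks = inside.reshape(H // b, b, W // b, b)
    internal = blocks.all(axis=(1, 3))
    edge = blocks.any(axis=(1, 3)) & ~internal
    return edge, internal

def add_internal_residuals(C_mask, E, edge, internal):
    """C_show = C_mask + E_2 on internal blocks; E_1 is returned for embedding."""
    C_show = C_mask.copy()
    C_show[internal] += E[internal]   # E_2 rides on the internal blocks of I_mask
    E1 = E[edge]                      # stacked edge-block residuals, (num_edge, 8, 8)
    return C_show, E1
```

At reconstruction, subtracting \(C_{mask}\) from the internal blocks of \(C_{show}\) returns \(\mathbf{E}_2\) exactly, because the cartoon mask's coefficients can be recomputed on the client side.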

Arithmetic Coding and Data Hiding. Unlike previous photo privacy protection methods that increase storage or bandwidth requirements, our algorithm treats the privacy information as secret data and embeds it directly into the photo. In this step, the following data are encoded and embedded into the photo:

- the sparse coefficients matrix \(\mathbf {X} \),
- the residual error matrix \(\mathbf {E} _1\),
- the DCT coefficients of the U and V components of \(R_{obj}\), and
- some auxiliary information.

First, \(\mathbf {X} \) and \(\mathbf {E} _1\) are encoded by arithmetic coding. The two resulting short bit streams are concatenated to form one long bit stream. The decoder must be able to tell which part of the long bit stream corresponds to \(\mathbf {X} \). For this purpose, the length of the first short bit stream (from the sparse coefficients matrix), denoted as \(l_X\), is encoded with 16 bits and placed at the beginning of the long bit stream.
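The layout of the long bit stream, a 16-bit \(l_X\) header followed by the two coded streams, can be sketched as below. Arithmetic coding itself is not shown. The inputs are assumed to be already-coded lists of bits, and most-significant-bit-first order for the header is also an assumption. The helper names are hypothetical.

```python
def frame_bitstream(bits_X, bits_E1):
    """Concatenate [16-bit l_X header][bits of X][bits of E_1]."""
    l_X = len(bits_X)
    if l_X >= 1 << 16:
        raise ValueError("l_X does not fit in 16 bits")
    header = [(l_X >> (15 - i)) & 1 for i in range(16)]  # MSB first (assumed)
    return header + list(bits_X) + list(bits_E1)

def split_bitstream(stream):
    """Inverse of frame_bitstream: recover (bits of X, bits of E_1)."""
    l_X = 0
    for b in stream[:16]:
        l_X = (l_X << 1) | b
    return stream[16:16 + l_X], stream[16 + l_X:]
```

The 16-bit field caps the coded \(\mathbf{X}\) stream at 65,535 bits, which bounds how large a target region this framing can describe.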

The long bit stream is then embedded into the DCT coefficients of the Y component of \(I_{error}\) using F5 steganography [8]. If the original image must be recovered losslessly in the photo reconstruction process, a reversible data hiding method can be used instead. In addition, the DCT coefficients of the U and V components of \(R_{obj}\) are encoded and embedded directly into the corresponding components of \(I_{error}\). F5 steganography uses a secret key, so only a person with the correct key can extract the secret data hidden in the photo.
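To illustrate how the key controls extraction, the sketch below implements a simplified F5-style embedder following the scheme described by Westfeld [8]. It works as follows:

- Coefficients are visited in a key-seeded pseudo-random order.
- k bits are embedded into each group of \(2^k-1\) nonzero coefficients by matrix encoding.
- A change always decrements a coefficient's absolute value.
- A coefficient that shrinks to zero is dropped, the group is refilled, and the same bits are embedded again.

This is not the reference F5 implementation: the permutation generator, header, and automatic choice of k differ. Treating k as the per-component parameters \(k_Y\), \(k_U\), \(k_V\) is an assumption. The caller is assumed to pass only AC coefficients outside the reserved blocks.

```python
import random

def _carried_bit(c):
    # F4/F5 convention: a positive coefficient carries its LSB,
    # a negative coefficient carries its inverted LSB.
    return c & 1 if c > 0 else 1 - (c & 1)

def _group_hash(coeffs, group):
    # Matrix-encoding hash: XOR of the 1-based positions whose carried bit is 1.
    h = 0
    for pos, idx in enumerate(group, start=1):
        if _carried_bit(coeffs[idx]):
            h ^= pos
    return h

def _keyed_order(size, key):
    # Key-seeded permutation of coefficient positions (hypothetical PRNG choice).
    order = list(range(size))
    random.Random(key).shuffle(order)
    return order

def f5_embed(ac_coeffs, bits, key, k=2):
    """Embed bits with (1, 2^k - 1, k) matrix encoding; returns new coefficients."""
    coeffs = list(ac_coeffs)
    n = (1 << k) - 1
    nonzero = (i for i in _keyed_order(len(coeffs), key) if coeffs[i] != 0)
    bits = list(bits) + [0] * (-len(bits) % k)            # pad to a multiple of k
    for start in range(0, len(bits), k):
        x = 0
        for b in bits[start:start + k]:
            x = (x << 1) | b
        group = []
        while True:
            while len(group) < n:                          # fill the group
                idx = next(nonzero, None)
                if idx is None:
                    raise ValueError("cover capacity exhausted")
                group.append(idx)
            s = x ^ _group_hash(coeffs, group)
            if s == 0:
                break                                      # group already encodes x
            idx = group[s - 1]
            coeffs[idx] += -1 if coeffs[idx] > 0 else 1    # decrement |c|, flipping its bit
            if coeffs[idx] != 0:
                break
            group.pop(s - 1)                               # shrinkage: drop the zero, retry
    return coeffs

def f5_extract(coeffs, nbits, key, k=2):
    """Recover nbits by hashing consecutive groups of nonzero coefficients."""
    n = (1 << k) - 1
    nonzero = [i for i in _keyed_order(len(coeffs), key) if coeffs[i] != 0]
    out = []
    for g in range(0, len(nonzero) - n + 1, n):
        h = _group_hash(coeffs, nonzero[g:g + n])
        out.extend((h >> (k - 1 - j)) & 1 for j in range(k))
        if len(out) >= nbits:
            break
    return out[:nbits]
```

Without the right key, the extractor visits the coefficients in a different order and groups them differently, so it reads unrelated bits.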

Note that two parts of the Y-component DCT coefficients are not used for embedding. The first is the target region, whose DCT coefficients must stay unchanged so that the error matrix \(\mathbf {E} _2\) can be extracted exactly during reconstruction. The second is the DCT coefficients of the blocks in the first column, which are reserved for embedding the auxiliary information.

Finally, the auxiliary information consists mainly of three parts:

- (1) the position of the target region, i.e., the coordinates of its top-left pixel (x, y) and its width and height (w, h);
- (2) the lengths of the three bit streams embedded into the Y, U and V components, denoted as \(len_Y\), \(len_U\) and \(len_V\);
- (3) the F5 steganography parameters for the three components, denoted as \(k_Y\), \(k_U\) and \(k_V\).

If multiple cartoon mask images are available, the index of the mask used should also be included in the auxiliary information. The auxiliary information must be readable before the secret data are extracted, so these bits are embedded into the DCT coefficients of the blocks in the first column using Jsteg steganography. Figure 4 shows the structure of the auxiliary information bit stream.
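The packing of the auxiliary information and its Jsteg embedding can be sketched as follows. Figure 4 defines the actual field layout, which is not reproduced here, so the field widths in `AUX_FIELDS` are hypothetical. The Jsteg routine follows the classic scheme of sequentially replacing the least significant bit of each DCT coefficient whose value is neither 0 nor 1. The caller is assumed to pass the coefficients of the first-column blocks. All names are illustrative.

```python
# Hypothetical field widths in bits; Fig. 4 of the paper defines the real layout.
AUX_FIELDS = [("x", 16), ("y", 16), ("w", 16), ("h", 16),
              ("len_Y", 24), ("len_U", 24), ("len_V", 24),
              ("k_Y", 4), ("k_U", 4), ("k_V", 4), ("mask_id", 8)]

def pack_aux(info):
    """Serialize the auxiliary fields MSB-first into a list of bits."""
    bits = []
    for name, width in AUX_FIELDS:
        v = info[name]
        if not 0 <= v < (1 << width):
            raise ValueError(f"{name}={v} does not fit in {width} bits")
        bits += [(v >> (width - 1 - i)) & 1 for i in range(width)]
    return bits

def unpack_aux(bits):
    """Inverse of pack_aux."""
    info, pos = {}, 0
    for name, width in AUX_FIELDS:
        v = 0
        for b in bits[pos:pos + width]:
            v = (v << 1) | b
        info[name], pos = v, pos + width
    return info

def jsteg_embed(coeffs, bits):
    """Sequential LSB replacement, skipping coefficients equal to 0 or 1."""
    out, it = list(coeffs), iter(bits)
    for i, c in enumerate(out):
        if c in (0, 1):
            continue
        b = next(it, None)
        if b is None:
            return out                      # all bits embedded
        out[i] = (c & ~1) | b               # never produces 0 or 1 from a usable value
    if next(it, None) is not None:
        raise ValueError("not enough usable coefficients")
    return out

def jsteg_extract(coeffs, nbits):
    """Read LSBs of coefficients that are neither 0 nor 1, in order."""
    return [c & 1 for c in coeffs if c not in (0, 1)][:nbits]
```

Jsteg needs no key and uses a fixed order, so the auxiliary information can be read first. The keyed F5 data is then located using the lengths and parameters it contains.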

The above procedures produce the final image \(I_{show}\), which is uploaded to the server. Anyone with access to the server can obtain \(I_{show}\), but only a person with the correct key can recover the original version of the photo. This makes the algorithm suitable for photo privacy protection.

2.2 Photo Reconstruction

After downloading the privacy-protected photo \(I_{show}\) from the server to the client side, a user who has the correct key can obtain the original version by reversing the protection procedures described above. The steps are as follows:

(1) Extract the auxiliary information from the blocks in the first column. This yields the position of the target region, the lengths of the three bit streams, and the F5 steganography parameters.

(2) Extract the secret bit streams from the DCT coefficients of the Y, U and V components, using the auxiliary information obtained in step (1). Arithmetic decoding then recovers the sparse coefficients matrix \(\mathbf {X} \), the residual error matrix \(\mathbf {E} _1\), and the DCT coefficients of the U and V components of the target region. Next, the DCT coefficients of the reconstructed edge blocks, denoted as \(C_1\), and of the reconstructed internal blocks, denoted as \(C_2\), are calculated from the dictionary \(\mathbf {D} \) and the sparse coefficients matrix \(\mathbf {X} \). Note that the dictionary, the cartoon mask image, and its edge information are shared by all users on the client side.

(3) Resize the cartoon mask to match the target region, and JPEG-compress it with the same quality factor as \(I_{show}\) to obtain the DCT coefficients of its Y component. This gives the DCT coefficients of the internal blocks of the cartoon mask, \(C_{mask}\).

(4) Extract the error matrix \(\mathbf {E} _2\) by a simple subtraction

$$\begin{aligned} \mathbf {E} _2=C_{show}-C_{mask}, \end{aligned}$$ (5)

where \(C_{show}\) and \(C_{mask}\) denote the Y-component DCT coefficients of the internal blocks of \(I_{show}\) and of the cartoon mask image, respectively.

(5) Recover the original Y-component DCT coefficients of the target region by

$$\begin{aligned} C= {\left\{ \begin{array}{ll} C_1+\mathbf {E} _1 &{}\text{ for } \text{ edge } \text{ blocks }\\ C_2+\mathbf {E} _2 &{}\text{ for } \text{ internal } \text{ blocks } , \end{array}\right. } \end{aligned}$$ (6)

where \(C_1\) and \(C_2\) are the DCT coefficients obtained in step (2). The original coefficients of the edge blocks are recovered by adding \(\mathbf {E} _1\) to \(C_1\), and those of the internal blocks by adding \(\mathbf {E} _2\) to \(C_2\). Together, these give the Y-component DCT coefficients of \(R_{obj}\).

(6) Replace the DCT coefficients of the target region in \(I_{show}\) with the recovered DCT coefficients of the Y, U and V components. This restores the original version of the image, including its privacy information.
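Steps (4) and (5) amount to a few array operations. The sketch below makes these assumptions:

- The reconstructed coefficients (\(C_1\) on edge blocks, \(C_2\) on internal blocks) are held in one block grid `C_rec` shaped (rows, cols, 8, 8).
- Boolean (rows, cols) grids mark the edge and internal blocks.
- Blocks outside \(R_{obj}\) are left as zeros, because step (6) replaces only the target region.
- `recover_target_y` is a hypothetical name.

```python
import numpy as np

def recover_target_y(C_rec, E1, C_show, C_mask, edge, internal):
    """Recover Y-component coefficients of R_obj on block grids (rows, cols, 8, 8).

    C_rec: coefficients of blocks rebuilt from D and X (C_1 / C_2).
    E1:    edge-block residuals placed on the same grid.
    """
    E2 = C_show - C_mask                           # step (4): E_2 = C_show - C_mask
    C = np.zeros_like(C_rec)
    C[edge] = C_rec[edge] + E1[edge]               # step (5), edge blocks: C_1 + E_1
    C[internal] = C_rec[internal] + E2[internal]   # step (5), internal blocks: C_2 + E_2
    return C
```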

3 Experimental Results and Analysis

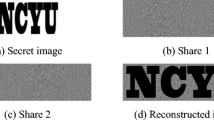

In this section, several experiments are conducted to demonstrate the efficacy of the proposed photo privacy-preserving algorithm, including: (1) length comparison between the bit streams from original DCT coefficients and the ones from the proposed method, and (2) performance comparison between JPEG transmorphing method and the proposed method.

3.1 Length Comparison Between Bit Streams

The first experiment tests whether the proposed method reduces the number of bits required to represent the image signal. It does so by comparing the lengths of the bit streams from the original DCT coefficients with those from our sparse representation based method. The experiment uses 1000 natural-scene images from The Images of Groups Dataset [9]. These images were collected from Flickr and vary in scene type, size, and number of people. All of them are group photos involving multiple people, so the face regions are relatively small. For each image, the face detection method from Yu [12] is used to determine the target region. To reduce the complexity of the experiment, only the first detected face in each image is selected for protection.

The dictionary used in the experiment is trained by the K-SVD algorithm. The training samples are \(8\times 8\) blocks taken from the face regions of 725 images from the first part of the color FERET database [10, 11]. The training parameters are set as follows:

- (1) the number of nonzero entries of the sparse coefficients \(\mathbf x _i\) is 4, i.e., L = 4;
- (2) the final dictionary has size \(64\times K\), with K = 128;
- (3) the maximum number of iterations is 50.

Once trained, the dictionary is fixed in our algorithm and shared by all users.
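Each K-SVD iteration alternates between sparse coding of all training blocks (here with L = 4) and a dictionary update. The sketch below shows only the dictionary-update sweep described by Aharon et al. [6]. In this sweep, each atom and its nonzero coefficients are replaced by the best rank-1 approximation of the residual, restricted to the blocks that use that atom. The sparse coding stage, initialization, and stopping rule are omitted, and `ksvd_dictionary_update` is a hypothetical name. For the settings above, Y would be \(64\times N\), D would be \(64\times 128\), and X would be \(128\times N\).

```python
import numpy as np

def ksvd_dictionary_update(Y, D, X):
    """One K-SVD dictionary-update sweep; returns updated copies of (D, X).

    Each atom update is a best rank-1 fit on the atom's support,
    so ||Y - D X||_F never increases during the sweep.
    """
    D, X = D.copy(), X.copy()
    for k in range(D.shape[1]):
        users = np.nonzero(X[k])[0]         # training blocks that use atom k
        if users.size == 0:
            continue                         # unused atom: left unchanged in this sketch
        # Residual of those blocks with atom k's own contribution added back.
        E_k = Y[:, users] - D @ X[:, users] + np.outer(D[:, k], X[k, users])
        U, s, Vt = np.linalg.svd(E_k, full_matrices=False)
        D[:, k] = U[:, 0]                    # new unit-norm atom
        X[k, users] = s[0] * Vt[0]           # coefficients on the same support
    return D, X
```

Restricting the update to the atom's current users keeps every column of X at most L-sparse, so the sparsity constraint is preserved between sparse coding passes.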

Table 1 lists the average bit-stream lengths of the 1000 images for the two methods. Only the bit streams of the Y components are considered in this experiment. The table clearly shows that the proposed method produces shorter bit streams. To quantify the reduction, we compute the percentage decrease in bitrate, i.e., the proportion of bits saved by the proposed sparse representation based method. The bitrate decreases by 28.42% [(8122−5814)/8122], so 28.42% fewer bits need to be embedded into the image.

Figure 5 shows a scatter plot of the bit-stream lengths of the two methods for each image. The images are sorted in ascending order of target-region size, which explains the upward trend in length. For almost all images, the black dots (original DCT coefficients) lie above the corresponding red dots (proposed method). As the target region grows, the gap between the two methods widens, and the advantage of the sparse representation based algorithm becomes more pronounced.

3.2 Performance Comparison with JPEG Transmorphing

JPEG transmorphing [4] is an algorithm that can both protect photo privacy and recover the original image from a modified version. It first replaces the face region with a smiley face to hide the sensitive information. It then inserts this sensitive information into the application markers of the JPEG file, so that the photo can be recovered when needed. In this section, we compare the proposed method with JPEG transmorphing in two main aspects: the size of the overhead on the JPEG files and the quality of the reconstructed images. The same 1000 images from The Images of Groups Dataset [9] are used in this second experiment.

Table 2 lists the experimental results, all averaged over the 1000 images. The PSNR values are computed between each original image and its reconstruction. The values in the last column are obtained by comparing the file sizes of the original and privacy-protected images. The table shows that the bitrate overhead is much smaller with the proposed method than with JPEG transmorphing. In other words, on these 1000 images, the proposed method keeps almost the same reconstruction quality as JPEG transmorphing without adding a large amount of storage overhead to the image files. The large overhead of JPEG transmorphing is mainly caused by inserting a sub-image that contains the privacy information into the application markers of the JPEG file. As the target region grows, this overhead grows with it. In the proposed algorithm, a larger target region instead produces a longer bit stream to embed, which lowers the quality of the reconstructed image. In this case, F5 steganography can be replaced with a reversible data hiding method, which allows users to reconstruct the image losslessly.

Finally, some examples of photo privacy protection based on our proposed method are shown in Fig. 6, including the case that different cartoon masks are used to protect multiple regions in one photo.

4 Conclusions

This paper presents a photo privacy-preserving algorithm based on sparse representation and data hiding. The algorithm protects photo privacy and allows people who have the correct key to reconstruct the photo with high quality. The proposed method uses sparse representation to reduce the amount of information that needs to be embedded into the image, and data hiding to avoid extra storage overhead on the image files. Experimental results show that, compared with existing alternatives, our method does not increase storage or bandwidth requirements and maintains high quality in the reconstructed photo. Future work will aim to improve the applicability of the algorithm to photos with very large privacy regions.

References

[1] Senior, A., Pankanti, S., Hampapur, A., Brown, L., Tian, Y.L., Ekin, A., Connell, J., Shu, C., Lu, M.: Enabling video privacy through computer vision. IEEE Secur. Priv. 3(3), 50–57 (2005)

[2] Dufaux, F., Ebrahimi, T.: Scrambling for privacy protection in video surveillance systems. IEEE Trans. Circuits Syst. Video Technol. 18(8), 1168–1174 (2008)

[3] Lin, Y., Korshunov, P., Ebrahimi, T.: Secure JPEG scrambling enabling privacy in photo sharing. In: 11th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition, vol. 4, pp. 1–6 (2015)

[4] Lin, Y., Ebrahimi, T.: Image transmorphing with JPEG. In: IEEE International Conference on Image Processing, pp. 3956–3960 (2015)

[5] Ra, M.R., Govindan, R., Ortega, A.: P3: toward privacy-preserving photo sharing. In: 10th USENIX Conference on Networked Systems Design and Implementation, pp. 515–528 (2013)

[6] Aharon, M., Elad, M., Bruckstein, A.: K-SVD: an algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans. Signal Process. 54(11), 4311–4322 (2006)

[7] Rubinstein, R., Peleg, T., Elad, M.: Analysis K-SVD: a dictionary-learning algorithm for the analysis sparse model. IEEE Trans. Signal Process. 61(3), 661–677 (2013)

[8] Westfeld, A.: F5—A Steganographic Algorithm: High Capacity Despite Better Steganalysis. In: Moskowitz, I.S. (ed.) IH 2001. LNCS, vol. 2137, pp. 289–302. Springer, Heidelberg (2001). https://doi.org/10.1007/3-540-45496-9_21

[9] Gallagher, A., Chen, T.: Understanding images of groups of people. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 256–263 (2009)

[10] Phillips, P.J., Wechsler, H., Huang, J., Rauss, P.: The FERET database and evaluation procedure for face recognition algorithms. Image Vis. Comput. 16(5), 295–306 (1998)

[11] Phillips, P.J., Moon, H., Rizvi, S.A., Rauss, P.J.: The FERET evaluation methodology for face recognition algorithms. IEEE Trans. Pattern Anal. Mach. Intell. 22, 1090–1104 (2000)

[12] Face Detection. https://github.com/ShiqiYu/libfacedetection

Acknowledgments

This work was supported in part by the National Key Research and Development of China (2016YFB0800404), National NSF of China (61672090, 61332012), Fundamental Research Funds for the Central Universities (2015JBZ002).


Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Li, W., Ni, R., Zhao, Y. (2017). JPEG Photo Privacy-Preserving Algorithm Based on Sparse Representation and Data Hiding. In: Zhao, Y., Kong, X., Taubman, D. (eds) Image and Graphics. ICIG 2017. Lecture Notes in Computer Science(), vol 10668. Springer, Cham. https://doi.org/10.1007/978-3-319-71598-8_51


DOI: https://doi.org/10.1007/978-3-319-71598-8_51


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-71597-1

Online ISBN: 978-3-319-71598-8

eBook Packages: Computer Science, Computer Science (R0)