Abstract

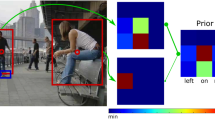

In this paper, we propose a semantic concept-based video browsing system that mainly exploits the spatial information of both object and action concepts. In each video frame, we soft-assign every locally regional object proposal to the cells of a grid. For action concepts, we also collect a dataset of about 100 actions. In many cases, an action can be predicted from a single still image rather than requiring a whole video shot. Therefore, we treat actions as object concepts and detect them with a deep neural network based on the YOLO detector. Moreover, instead of densely extracting concepts from a video shot, we focus on high-saliency objects and remove noisy concepts. To further improve interaction, we develop a color-based sketch board to quickly discard irrelevant shots and an instant search panel to improve the recall of the system. Finally, metadata such as a video's title and summary is integrated into our system to boost its precision and recall.
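The soft assignment of object proposals to grid cells can be illustrated with a minimal Python sketch. It assumes that each proposal carries a concept label, a confidence and a bounding box normalized to [0, 1], and that a proposal's confidence is spread over the cells in proportion to the share of its box area each cell covers. The abstract does not state the paper's exact weighting, so this scheme is an assumption, and the names `soft_assign`, `spatial_score` and `rank_frames` are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical detection format: (concept label, confidence, (x1, y1, x2, y2)),
# with box coordinates normalized to [0, 1] relative to the frame.
Detection = Tuple[str, float, Tuple[float, float, float, float]]
Grid = Dict[str, List[List[float]]]


def soft_assign(detections: List[Detection], rows: int = 3, cols: int = 3) -> Grid:
    """Spread each detection's confidence over grid cells in proportion to the
    fraction of its box area inside each cell (assumed weighting scheme).
    Rows index the vertical axis (top to bottom), columns the horizontal axis."""
    grid: Grid = defaultdict(lambda: [[0.0] * cols for _ in range(rows)])
    for label, conf, (x1, y1, x2, y2) in detections:
        box_area = (x2 - x1) * (y2 - y1)
        if box_area <= 0:
            continue  # skip degenerate boxes
        for r in range(rows):
            cy1, cy2 = r / rows, (r + 1) / rows
            for c in range(cols):
                cx1, cx2 = c / cols, (c + 1) / cols
                # Width and height of the box/cell intersection (0 if disjoint)
                w = max(0.0, min(x2, cx2) - max(x1, cx1))
                h = max(0.0, min(y2, cy2) - max(y1, cy1))
                grid[label][r][c] += conf * (w * h) / box_area
    return dict(grid)


def spatial_score(grid: Grid, label: str, cell: Tuple[int, int]) -> float:
    """Score one frame for the query 'concept `label` appears in `cell`'."""
    if label not in grid:
        return 0.0
    r, c = cell
    return grid[label][r][c]


def rank_frames(frames: Dict[str, Grid], label: str, cell: Tuple[int, int]) -> List[str]:
    """Return frame ids ordered by descending spatial score for the query."""
    return sorted(frames, key=lambda fid: spatial_score(frames[fid], label, cell),
                  reverse=True)


if __name__ == "__main__":
    # A dog filling the top-left quarter of a 2x2 grid
    dog_frame = soft_assign([("dog", 0.9, (0.0, 0.0, 0.5, 0.5))], rows=2, cols=2)
    # A centered person overlapping all four cells equally
    person_frame = soft_assign([("person", 1.0, (0.25, 0.25, 0.75, 0.75))], rows=2, cols=2)
    print(rank_frames({"a": dog_frame, "b": person_frame}, "dog", (0, 0)))
```

Under this assumption, a spatial query such as "dog in the top-left cell" reduces to ranking frames by the weight stored for that concept and cell. A large object contributes to every cell it overlaps rather than only to the cell containing its center, which is the motivation for soft rather than hard assignment.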

Copyright information

© 2018 Springer International Publishing AG

About this paper

Cite this paper

Truong, TD. et al. (2018). Video Search Based on Semantic Extraction and Locally Regional Object Proposal. In: Schoeffmann, K., et al. MultiMedia Modeling. MMM 2018. Lecture Notes in Computer Science, vol 10705. Springer, Cham. https://doi.org/10.1007/978-3-319-73600-6_49

DOI: https://doi.org/10.1007/978-3-319-73600-6_49

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-73599-3

Online ISBN: 978-3-319-73600-6

eBook Packages: Computer Science, Computer Science (R0)