Abstract

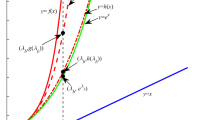

Manifold learning and feature selection have been widely studied in face recognition over the past two decades. This paper focuses on exploiting the manifold structure of datasets for feature extraction and selection. We propose a novel method called Joint Sparse Locality Preserving Projections (JSLPP). To preserve the manifold structure of the data, we first build a manifold-based regression model on a nearest-neighbor graph; an \( L_{2,1} \)-norm regularization term is then imposed on the model to perform feature selection. Finally, an efficient iterative algorithm is designed to solve the sparse regression model, and its convergence and computational complexity are analyzed. Experimental results on two face datasets indicate that JSLPP outperforms six classical and state-of-the-art dimensionality reduction algorithms.
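The abstract does not give the JSLPP objective, so the following is only a rough sketch of the ingredients it names, not the authors' model or algorithm. It assumes a two-stage stand-in: a symmetric k-nearest-neighbor graph, regression targets taken from the eigenvectors of the unnormalized graph Laplacian, and the common iteratively reweighted least-squares scheme for the problem \( \min_W \|XW - Y\|_F^2 + \lambda \|W\|_{2,1} \). All function names, the target construction, and the parameters `k`, `dim`, `lam`, `n_iter`, and `eps` are hypothetical choices made for illustration.

```python
import numpy as np


def l21_norm(W):
    """L_{2,1} norm: the sum of the Euclidean norms of the rows of W."""
    return float(np.sum(np.linalg.norm(W, axis=1)))


def knn_graph(X, k):
    """Symmetric 0/1 k-nearest-neighbor adjacency matrix for the rows of X."""
    n = X.shape[0]
    sq = np.sum(X ** 2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * (X @ X.T)  # squared distances
    np.fill_diagonal(d2, np.inf)                      # a point is not its own neighbor
    idx = np.argsort(d2, axis=1)[:, :k]               # k closest points per row
    A = np.zeros((n, n))
    A[np.repeat(np.arange(n), k), idx.ravel()] = 1.0
    return np.maximum(A, A.T)                         # symmetrize


def laplacian_targets(A, dim):
    """Assumed target construction: eigenvectors of L = D - A for the
    smallest eigenvalues, skipping the first (constant on a connected graph)."""
    L = np.diag(A.sum(axis=1)) - A
    _, vecs = np.linalg.eigh(L)                       # eigenvalues in ascending order
    return vecs[:, 1:dim + 1]


def sparse_regression(X, Y, lam, n_iter=50, eps=1e-8):
    """Iteratively reweighted solver for min ||XW - Y||_F^2 + lam * ||W||_{2,1}.

    Each pass solves (X^T X + lam * D) W = X^T Y and then resets the diagonal
    matrix D to D_ii = 1 / (2 * ||w_i||_2), with eps guarding against zero rows.
    """
    d = X.shape[1]
    D = np.eye(d)
    for _ in range(n_iter):
        W = np.linalg.solve(X.T @ X + lam * D, X.T @ Y)
        row_norms = np.linalg.norm(W, axis=1)
        D = np.diag(1.0 / (2.0 * np.maximum(row_norms, eps)))
    return W


def rank_features(W):
    """Order features by the norm of their row in W, largest first."""
    return np.argsort(-np.linalg.norm(W, axis=1))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.standard_normal((60, 10))                 # 60 samples, 10 features
    A = knn_graph(X, k=5)
    Y = laplacian_targets(A, dim=3)
    W = sparse_regression(X, Y, lam=0.5)
    print("L2,1 norm of W:", l21_norm(W))
    print("feature ranking:", rank_features(W))
```

Because the \( L_{2,1} \) penalty acts on whole rows of the projection matrix, rows belonging to uninformative features are driven toward zero together across all projection directions, which is why row norms can serve as feature-selection scores in this sketch.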



Acknowledgement

This work was supported in part by the Natural Science Foundation of China (Grant 61573248, Grant 61773328, Grant 61375012 and Grant 61703283), China Postdoctoral Science Foundation (Project 2016M590812 and Project 2017T100645), the Guangdong Natural Science Foundation (Project 2017A030313367 and Project 2017A030310067), and Shenzhen Municipal Science and Technology Innovation Council (No. JCYJ20170302153434048).


Copyright information

© 2018 Springer International Publishing AG

About this paper

Cite this paper

Liu, H., Lai, Z., Chen, Y. (2018). Joint Sparse Locality Preserving Projections. In: Qiu, M. (eds) Smart Computing and Communication. SmartCom 2017. Lecture Notes in Computer Science, vol 10699. Springer, Cham. https://doi.org/10.1007/978-3-319-73830-7_13


DOI: https://doi.org/10.1007/978-3-319-73830-7_13


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-73829-1

Online ISBN: 978-3-319-73830-7

eBook Packages: Computer Science, Computer Science (R0)