Abstract

Diffuse Lung Diseases (DLDs) are a challenge for physicians due their wide variety. Computer-Aided Diagnosis (CAD) are systems able to help physicians in their diagnoses combining information provided by experts with Machine Learning (ML) methods. Among ML techniques, Deep Learning has recently established itself as one of the preferred methods with state-of-the-art performance in several fields. In this paper, we analyze the discriminatory power of Deep Feedforward Neural Networks (DFNN) when applied to DLDs. We classify six radiographic patterns related with DLDs: pulmonary consolidation, emphysematous areas, septal thickening, honeycomb, ground-glass opacities, and normal lung tissues. We analyze DFNN and other ML methods to compare their performance. The obtained results show the high performance obtained by DFNN method, with an overall accuracy of 99.60%, about 10% higher than the other studied ML methods.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

- Computer-Aided Diagnosis

- Deep Feedforward Neural Network

- Deep Learning

- Diffuse Lung Diseases

- Machine Learning

1 Introduction

Diffuse Lung Diseases (DLDs) form a group of more than 150 pathological conditions, characterized by inflammation of the lung, which leads to breathing dysfunctions [21]. Distinguishing among these diseases is a challenge for physicians due their wide variety. Computer-Aided Diagnosis (CAD) systems can help physicians in their diagnoses, combining information provided by experts with Machine Learning (ML) methods. Computers present several advantages over humans: they do not suffer from boredom, fatigue, and distractions; are consistent in their analyses; and they can combine the knowledge of several experts in a single analysis [18].

There are several studies using ML methods in diagnoses of several diseases, such as lung cancer [5] and breast cancer [26]. Among ML techniques, Deep Learning (DL) is a recent development that is providing state-of-the-art performance in several fields, such as speech recognition [19] and image recognition [12]. Litjens et al. [14] list over 300 contributions of DL in medical image analysis, most of which appeared in recent years.

In this paper, we analyze the discriminatory power of Deep Feedforward Neural Networks (DFNN) when applied to DLDs. DL methods present better stability, generalization ability, and scalability, when compared with simple neural networks [6]. We contrast this approach with other ML methods: k-Nearest Neighbors (kNN) [23], Support Vector Machines (SVM) [24], Logistic Regression (LR) [11], Gaussian Mixture Models (GMM) [15], and Artificial Neural Networks (ANN) [20], in order to compare their performance.

We evaluate the selected methods for the classification of the following six radiographic patterns related with DLDs: pulmonary consolidation (PC), emphysematous areas (EA), septal thickening (ST), honeycomb (HC), ground-glass opacities (GG), and normal lung tissues (NL). For this purpose, we use the 28 texture and fractal features described in the work of Pereyra et al. [16]. In this work we only deal with the features, since the images from which they were obtained are not available due to practical issues. For this reason, we employed DFNN rather than the state-of-the-art Convolutional Neural Networks.

We applied dimensionality reduction methods prior to classification to remove irrelevant or redundant data, namely, Principal Component Analysis (PCA), Linear Discriminant Analysis (LDA), and Stepwise Selection – Forward (SSF), Backward (SSB), and Forward-Backward (SSFB).

The main contributions of this work are: (I) A comprehensive quantitative comparison of classification methods using dimensionality reduction for DLDs; and (II) The use of DFNN to improve the classification accuracy over state-of-the-art methods. This work may contribute to the analysis and selection of those features that can efficiently characterize the DLDs studied.

This article is organized as follows: Sect. 2 presents an overview of DFNN; Sect. 3 presents the material used and related work; Sect. 4 presents the methods used; Sect. 5 presents the main results; and, finally, Sect. 6 concludes this work.

2 Deep Feedforward Neural Networks

The goal of a DFNN is to approximate a desired function \(f^*\), mapping an input x to a category y. A feedforward network defines a mapping \(y = f(x;\theta )\) and learns the value of the parameters \(\theta \) that result in the best function approximation.

The architecture of a DFNN, as in most neural networks, is usually described in groups of units called layers. The layers are arranged in a chain structure, with each layer being a function of the preceding layer. In a feedforward model, the information flows through the function being evaluated from x (the input layer), through the intermediate computations used to define a function f (the hidden layers), and finally to the output y (the output layer). The behavior of the hidden layers is not directly specified, leaving the learning algorithm to decide on how to use the layers to produce the desired output. The training data also do not specify what each layer should do. Instead, the learning algorithm must decide how to use the layers to best implement an approximation of \(f^*\) [9]. To avoid slow convergence, it is possible to use an adaptive learning method, such as AdaDelta [25].

Each unit that composes a layer is called a neuron. In a fully connected architecture, each neuron is connected to all the neurons in the next layer, transmitting a weighted combination of the input signal, as \(\alpha = \sum _{i = 1}^n w_{i} x_i + b\), where \(x_i\) and \(w_i\) denote the firing neuron’s input values and their weights, respectively, and n is the number of input values. The bias b represents the neuron’s activation threshold and is included in each layer of the network, except the output layer. The final output is fully determined by the weights linking neurons and biases [6].

Weighted values are aggregated and then the output signal is checked by a nonlinear activation function, activating the unit or not, and the value is transmitted by the connected neuron. There are several possibilities for the activation function. In this work, we used the hyperbolic tangent function: \(f(\alpha ) = (e^\alpha - e^{-\alpha })/(e^\alpha + e^{-\alpha })\), which is a rescaled and shifted logistic function. Its symmetry around 0 allows the training algorithm to converge faster [6], avoiding the saturation behavior of the top hidden layer observed with sigmoid networks [8].

Regularization methods can be used to reduce generalization error and hence prevent overfitting. Among the possibilities, we used Ridge and Lasso penalties: Ridge causes many weights to be small, and Lasso turns many weights into zero, which can add stability to the model [11].

3 Materials and Related Work

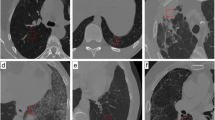

The material used is derived from the work of Pereyra et al. [16]: 247 high-resolution CT images from 108 different exams from the University Hospital at the Ribeirão Preto Medical School of the University of São Paulo, Brazil, previously selected and evaluated by thoracic radiologists. First, the DICOM images were normalized to 8 bits per pixel. Histogram equalization and centering at −400 and −800 Hounsfield Units (HU) were performed to highlight high- and low-attenuation patterns, respectively. Segmentation was made by using mathematical morphology (closing), binary thresholding (at −800 HU), and object selection. Then, a total of 3252 Regions of Interest (ROIs) (each of size \(64 \times 64\) pixels) were grouped into categories, according to the radiologist report, that characterize each radiographic pattern: 529 ROIs for HC, 594 for GG, 589 for ST, 450 for PC, 501 for EA, and 589 for NL. After these procedures, the labels were reviewed and validated with radiologists for accuracy. From each ROI was extracted a set of 28 features: the first-order statistical (mean, median, standard deviation, skewness, and kurtosis); 14 texture features defined by Haralick et al. [10] (energy, difference moment, correlation, variance, inverse difference moment, sum of squares: average, sum of squares: variance, sum of squares: entropy, entropy, difference variance, difference entropy, information measures of correlation 1, information measures of correlation 2, maximal correlation coefficient) for the four possible neighborhood (\({0}^{\circ }\), \({45}^{\circ }\), \({90}^{\circ }\), and \({135}^{\circ }\)), using one pixel as the reference distance; Laws’ measures of texture energy [13] using the appropriate convolution masks [Laws’ wave measures, Laws’ wave measures (rotation-invariant), Laws’ ripple measures, Laws’ ripple measures (rotation-invariant), Laws’ level measures]; statistical measures (standard deviation, mean, and median) of the power spectral density (PSD), obtained from the Discrete Fourier Transform (DFT); and fractal dimension (FD), using PSD of each ROI including the application of the tapered cosine window. Details about these features and analysis of texture in biomedical images are available in Rangayyan [18].

Using this data set of features, Pereyra et al. [16] achieved a correct classification rate of up to 82.62% using the kNN algorithm. Almeida et al. [2] performed a statistical analysis of the same features using the GMM with six components for each feature. GMM provided, for at least one class and 78.5% of the features, correct classification of at least 60%. Almeida et al. [1] extended their previous work by applying GMM to the five most significant features, generating fuzzy membership functions and obtaining 63% in average of correct classification.

In this work, we reproduce the main results of the aforementioned work and perform a comprehensive evaluation of different ML methods, including DFNN.

4 Methods

The dimensionality reduction methods used in the present study do not need setting of parameters. However, classification requires tuning the parameters empirically until the best results are found. We not only classify the full set of features but also the results from the dimensionality reduction methods, using the ML methods mentioned before. We validated the results by 10-fold-cross-validation.

To generate the results we used a machine with i5 processor and 4 GB of RAM memory, with no GPU. Our programming platform is the R language (version 3.3.2) [17] due to its high accuracy in computing statistical functions [3].

The same configuration used before by Pereyra et al. [16] for kNN was adopted, \(k = 5\). However, for GMM, previously used by Almeida et al [2], we chose a different approach: an extension proposed by Fraley and Raftery [7], which uses the labels to choose the best parameterization of the covariance matrix of the data as well as the number of components in each class, turning GMM into a supervised algorithm.

For the other ML methods studied, we adopted the following parameters: (I) SVM: a kernel of inhomogeneous polynomials of degree 2, with the cost of constraint violation being 1; (II) LR: elastic net penalty [11], with elastic net mixing parameter set to 0.7; (III) ANN: softmax activation function, and training function BFGS quasi-Newton method, with a decay rate set to \(10^{-5}\). We used one hidden layer with different numbers of neurons for each method: full feature set (8 neurons), PCA (8), LDA (11), SSF (15), SSB (12), and SSFB (9).

With the DFNN we used 300 epochs; both Ridge and Lasso penalties used constraint values set to \(10^{-5}\), three hidden layers with 230 neurons, and the AdaDelta learning method with the hyperparameters \(\rho = 0.99\) and \(\epsilon = 10^{-8}\).

5 Results and Discussion

5.1 Results of Dimensionality Reduction Methods

The following subsets were achieved using dimensionality reduction methods: 28 features for the full set of features, 18 for SSB, 13 for PCA, 12 for SSFB, 11 for SSF, and 5 for LDA.

LDA produces the smallest vector, of dimension 5. For PCA, we could obtain an even greater reduction: only seven principal components retained 95% of the information (as measured by the variance); however, using 13 principal components (with 99% information retained) we achieved the best result. Nevertheless, since both LDA and PCA perform data transformations, it would still be necessary to extract all the features from the images. It is outside the scope of this work to analyze whether feature extraction is essential or not.

SSF obtained a vector with 11 features. In the case of SSF, SSB, and SSFB, it is not necessary to perform a transformation, i.e., they discard some features based on criteria, in our case, BIC [4] criterion.

Note that all the dimensionality reduction methods used resulted in substantial reduction: at least 10 features discarded by stepwise selection, and at least 13 dimensions represent 99% of the information. These results suggest the presence of insignificant information in the data set, which can happen due factors such as redundant features, noise caused by either the reconstruction of the image or feature acquisition, and irrelevant features.

5.2 Results of Classification

Table 1 shows the classification methods that provided the top few results along with the dimensionality reduction methods that were used to achieve them. We used macro-averaged measures, namely F1 score, specificity, sensitivity, and accuracy, to compare the results. These metrics are obtained from the confusion matrix. Macro-averaged measures are calculated using an unweighted average for each class separately, whereas micro-averaged measures are averages over instances, in a one-vs-all scheme. Therefore, while macro-averaged measures treats all the classes equally, micro-averaged measures favors larger classes [22]. Since our data set is almost uniformly distributed, values of the macro-averaged and micro-averaged measures are almost the same. Hence, we decided to show only the macro-averaged measures, and avoid redundant information.

We can describe the metrics used as follows: Specificity (Sp) is how effectively a classifier identifies negative labels (the ability of a test to correctly exclude those individuals who do not have a given disease); Sensitivity (Se) is how effectively a classifier identifies positive labels (the ability of a test to correctly identify those individuals who have a given disease); Precision (Pr) is when the amount of random variation in a test is small; and Accuracy (Ac) is when a test measures what it is supposed to measure. F1 score is the harmonic mean of precision and sensitivity, as follows: \( \text {F1} = 2 (\text {Pr} \times \text {Se})/(\text {Pr}+ \text {Se}) \).

SVM presents the second best result among the evaluated methods, with an overall accuracy of 86.64%. DFNN is the winner, outperforming the others ML methods in all the measures analyzed. Note that DFNN presents an almost perfect score for all of the measures, especially at specificity, with 99.92%. It is worth mentioning that the overall accuracy using kNN (83.82%) and GMM (84.86%) surpass the accuracy of classification presented by Pereyra et al. [16] (82.62%) and Almeida et al. [1] (63.00%).

Table 2 shows the confusion matrix of DFNN. It can be seen that all the classes have a correct rate over 98%, with two of them, PC and HC, having a perfect score. The worst classification is presented by ST, with 98.88%. Pereyra et al. [16] describe this disease as having a consistently poor classification, but DFNN improves its classification by more than 20% over previous results.

The time and the respective confidence interval required for training each ML method are: 0.009 s \(\pm 0.3\) ms for kNN with LDA; 8.83 s \(\pm 0.3\) ms for ANN with SSB; 0.59 s \(\pm 8.8\) ms for SVM with SSB; 4.64 s \(\pm 11.3\) ms for LR using all features; 1.42 s \(\pm 80.3\) ms for GMM with SSF; and 1375.45 s [\(\pm 94.3\) ms for DFNN using all features. It is possible to note that DFNN is the slowest method for training among the studied methods. However, the time required in predictions is negligible compared to the training time and is quite similar for all methods.

6 Conclusion

The present work has performed an analysis of radiographic patterns related to DLDs, using data derived from the work of Pereyra et al. [16]. Dimensionality reduction methods were employed to reduce the dimensionality of the data. The best result, obtained using LDA, showed a reduction from 28 to 5 features, but LDA transforms the data into a new space, making it hard to know how each feature contributes to the data.

SVM, kNN, GMM, ANN, LR, and DFNN were used to classify the data. DFNN outperformed other methods in all comparison metrics used, with an overall accuracy of 99.60%, about 10% better than the second best ML method. The methods shown assist in the development of CAD systems for DLDs.

References

Almeida, E., Rangayyan, R., Azevedo-Marques, P.: Fuzzy membership functions for analysis of high-resolution CT images of diffuse pulmonary diseases. In: 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society EMBC, pp. 719–722 (2015)

Almeida, E., Rangayyan, R., Azevedo-Marques, P.: Gaussian mixture modeling for statistical analysis of features of high-resolution CT images of diffuse pulmonary diseases. In: 2015 IEEE International Symposium on Medical Measurements and Applications (MeMeA), pp. 1–5 (2015)

Almiron, M., Almeida, E., Miranda, M.: The reliability of statistical functions in four software packages freely used in numerical computation. Braz. J. Probab. Stat. 23(2), 107–119 (2009)

Bishop, C.M.: Pattern Recognition and Machine Learning. Information Science and Statistics. Springer, New York (2006)

Cai, Z., Xu, D., Zhang, Q., Zhang, J., Ngai, S., Shao, J.: Classification of lung cancer using ensemble-based feature selection and machine learning methods. Mol. BioSyst. 11, 791–800 (2015)

Candel, A., Parmar, V., LeDell, E., Arora, A.: Deep Learning with H2O, 5th edn. H2O.ai Inc, Mountain View (2017)

Fraley, C., Raftery, A.: Model-based clustering, discriminant analysis, and density estimation. J. Am. Stat. Assoc. 97, 611–631 (2000)

Glorot, X., Bengio, Y.: Understanding the difficulty of training deep feedforward neural networks. In: Proceedings of the International Conference on Artificial Intelligence and Statistics (AISTATS 2010). Society for Artificial Intelligence and Statistics (2010)

Goodfellow, I., Bengio, Y., Courville, A.: Deep Learning. MIT Press, Cambridge (2016)

Haralick, R.M., Shanmugam, K., Dinstein, I.: Textural features for image classification. IEEE Trans. Syst. Man Cybern. 6, 610–621 (1973)

Hastie, T., Tibshirani, R., Friedman, J.: The Elements of Statistical Learning: Data Mining, Inference, and Prediction, 2nd edn. Springer, New York (2009). https://doi.org/10.1007/978-0-387-84858-7

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 770–778 (2016)

Laws, K.: Rapid texture identification. In: Proceedings of SPIE Conference on Image Processing for Missile Guidance, vol. 238, pp. 376–380 (1980)

Litjens, G., Kooi, T., Bejnordi, B., Setio, A., Ciompi, F., Ghafoorian, M., Laak, J., Ginneken, B., Sánchez, C.: A survey on deep learning in medical image analysis. CoRR abs/1702.05747 (2017)

McLachlan, G., Peel, D.: Finite Mixture Models. Wiley, Hoboken (2004)

Pereyra, L., Rangayyan, R., Ponciano-Silva, M., Azevedo-Marques, P.: Fractal analysis for computer-aided diagnosis of diffuse pulmonary diseases in HRCT images. In: 2014 IEEE International Symposium on Medical Measurements and Applications (MeMeA), pp. 1–6 (2014)

R Core Team: R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing (2016). https://www.R-project.org/

Rangayyan, R.: Biomedical Image Analysis. CRC Press, Boca Raton (2004)

Ravanelli, M., Brakel, P., Omologo, M., Bengio, Y.: A network of deep neural networks for distant speech recognition. CoRR abs/1703.08002 (2017)

Ripley, B., Hjort, N.: Pattern Recognition and Neural Networks. Cambridge University Press, Cambridge (1995)

Rubin, A., Santana, A., Costa, A., Baldi, B., Pereira, C., et al.: Diretrizes de doenças pulmonares intersticiais da Sociedade Brasileira de Pneumologia e Tisiologia. Braz. J. Pulmonol. 38(suppl. 2), S1–S133 (2012)

Sokolova, M., Lapalme, G.: A systematic analysis of performance measures for classification tasks. Inf. Process. Manag. 45(4), 427–437 (2009)

Tan, P.N., Steinbach, M., Kumar, V.: Introduction to Data Mining. Addison-Wesley Longman Publishing Co., Inc., Boston (2005)

Zaki, M., Meira Jr., W.: Data Mining and Analysis: Fundamental Concepts and Algorithms. Cambridge University Press, Cambridge (2014)

Zeiler, M.D.: ADADELTA: An adaptive learning rate method. CoRR abs/1212.5701 (2012)

Zheng, B., Yoon, S., Lam, S.S.: Breast cancer diagnosis based on feature extraction using a hybrid of k-means and support vector machine algorithms. Expert Syst. Appl. 41(4), 1476–1482 (2014)

Acknowledgement

This work was partially funded by Fapeal, CNPq, and SEFAZ-AL. The work of Héctor Allende-Cid was supported by the project FONDECYT Initiation into Research 11150248.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Cardoso, I. et al. (2018). Evaluation of Deep Feedforward Neural Networks for Classification of Diffuse Lung Diseases. In: Mendoza, M., Velastín, S. (eds) Progress in Pattern Recognition, Image Analysis, Computer Vision, and Applications. CIARP 2017. Lecture Notes in Computer Science(), vol 10657. Springer, Cham. https://doi.org/10.1007/978-3-319-75193-1_19

Download citation

DOI: https://doi.org/10.1007/978-3-319-75193-1_19

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-75192-4

Online ISBN: 978-3-319-75193-1

eBook Packages: Computer ScienceComputer Science (R0)