Abstract

This publication describes a systematic approach to human behaviour adaptation, based on interaction patterns as a fundamental way to design and describe human machine interaction, and on image schemas as the basic elements of the resulting interaction. The natural learning path since childhood involves gaining knowledge through experience; it is during this process that image schemas are built. The approach described in this paper was developed in close interplay with the concepts of cooperative guidance and control (CGC), where a cooperative automation and a human control a machine together, and of augmented reality (AR), where a natural representation of the world, e.g. in the form of a video stream, is enriched with dynamic symbology. The concept was instantiated as the interaction patterns “longitudinal and lateral collision avoidance”, implemented in a fixed-base simulator, and tested with professional operators to determine whether driving performance and safety in a vehicle with restricted vision could be improved. Furthermore, it was tested whether interaction patterns could be used to adapt the current driver behaviour towards better performance while reducing the task load. Using interaction patterns that escalated according to the driver’s actions and the current environmental state led to a reduction of temporal demand, effort and frustration. Furthermore, fewer collisions were counted and the overall lateral displacement of the vehicle was reduced. The results were a good mix of encouragement and lessons learned, both for the methodical approach of pattern based human machine interaction, and for the application of AR-based cooperative guidance and control.


Keywords

- Augmented reality

- Interaction patterns

- Human behaviour adaptation

- Intelligent transportation systems

- Cooperative guidance and control

1 Introduction

Military vehicles like heavy trucks, tanks or excavators face the challenge of being sufficiently guarded against enemy fire while remaining safely manoeuvrable. The latter includes driving capability but also vision in order to see where the vehicle is driving. The safety aspect is important not only for the driver but also for other road users. Current military vehicles therefore face a trade-off between optimal vision and optimal armour, which results in more obstruction of the driver’s sight and the necessity of an assisting co-driver. One option to overcome such limitations is to create a virtually transparent vehicle by using a camera-monitor system that provides seamless vision to the driver.

Another important aspect of shielded vehicles in combat scenarios is situational awareness. Situational awareness (SA) is a decisive aspect of survivability for combat vehicles, and AR technologies have the potential to take SA to the next level. The introduction of optical, IR and acoustic sensors for monitoring the vehicle’s surroundings, and in particular the integration of Battlefield Management Systems (BMS), has significantly improved situational awareness. However, in time-critical situations the vehicle crew (e.g. commander, gunner and driver) have difficulties fully exploiting the information provided by these systems, since the crew has to focus on the scene where the action is taking place. Augmentation offers the potential to overcome this deficiency, for example by using AR technologies that display information, typically from the BMS, directly in the operator’s sight in the form of graphical symbols depending on the driver’s current actions. Thus the operator can pay full attention to what is going on in the vehicle’s vicinity while staying updated on the tactical situation within the sights’ field of view.

The idea presented in this paper is to use interaction patterns to adapt assistance to the current situation formed by the environment and the driver’s behaviour and actions. The behaviour of interaction patterns depends on the driver’s actions and the state of the environment. Their focus is to adapt the driver’s actions towards a safer system state through multimodal interaction.

First, the general concept of interaction patterns will be described. Second, the application of interaction patterns in shielded vehicles will be presented, together with the outcomes of a study. The paper finishes with a discussion of the findings and concludes with an outlook towards other domains.

2 Pattern Languages

The idea of using Pattern Languages has been discussed and applied for several years now. It was first introduced by Alexander in the area of architecture, where he explains how a set of recurring designs can allow anyone to create their own house, tailored to their needs, by using previously known and tested solutions [1]. These solutions, so-called Patterns, have the characteristic that they can be linked to one another, allowing the user to produce complex and unique creations, similarly to how a spoken language permits creating an unlimited number of sentences by arranging words.

Pattern languages have found uses outside of architecture. They are powerful tools that can help multidisciplinary teams communicate better without needing a complete understanding of how a process works, while also helping to speed up the development of new tools. One of these areas is software development, where the pattern language approach can be used for object-oriented programming. Here, the objects and classes are described in a more general way that can later be adapted to solve a specific problem [2]. Gamma further elaborates on the description and elements of a pattern, expanding on the definition by Alexander. According to Gamma, a pattern has four essential elements: a name, a problem description, a solution and the consequences of utilizing the pattern. This basic structure was also the starting point for the patterns described in this paper.

In the area of Interaction Design, however, the application of Pattern Languages is more varied and specific to each use case. As the examples provided by Borchers [3] show, each use case has a particular Pattern Language that only applies within the context of that use case. Borchers also expands on the elements that define a pattern by adding context, illustrations, examples and diagrams to the definition of Gamma.

2.1 Interaction Pattern Language

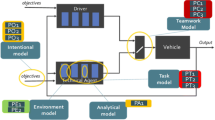

It is clear that the definition and application of a Pattern Language is strongly linked to the context surrounding its application. The basic paradigm of the research described in this paper is that of Cooperative Guidance and Control (CGC), where both the driver and a cooperative automation have influence on the guidance and control of a vehicle, as described e.g. in [4,5,6,7]. Fig. 1 shows an excerpt of the pattern language developed for CGC. In the diagram, each of the items represents a pattern, with the lines being the links or relations to other patterns. Other possible connections exist, but they have been omitted to maintain clarity. An important aspect of a pattern language is that patterns hold a degree of independence; this means that whether a pattern is applied or not should not affect the implementation of others. This also allows patterns to be included in other languages.

Each of the patterns in Fig. 1 is described following the same structure. The elements used for this purpose are the following:

Problem.

Provides a simplified description of a common problem that the pattern solves.

Solution.

Presents a detailed but non-restrictive procedure for addressing the problem. It includes the target domains, image schemas and source domains relevant for solving the problem. In addition, stating the target domains opens the possibility for creativity on how to implement the pattern outside of the proposed image schemas and source domains.

Consequences.

Here the possible outcomes of applying the pattern are described. If applicable, affected target domains are also mentioned, e.g. a decrease in the sense of urgency.

Implementation Example.

These can be programming code examples, technical drawings of a design, or diagrams. Their purpose is to offer an extra explanation and quick guide of how the pattern can be implemented.

For the field of Cooperative Guidance and Control it is especially important to point out that the human factors involved in the creation and application of an Interaction Pattern should be included in its definition. This has been done by adding the involved Target Domain or Internal Target States, Image Schemas and Source Domain [8, 9] to the Solution and Consequences elements of the pattern definition.

The Target Domains or Internal Target States are the perception areas that are changed as a result of the user’s interaction with their surroundings. These perception areas, e.g. urgency, importance, authority, etc., can be purposely targeted by an interaction design to generate a desired change. Therefore, an interaction pattern should consider the affected Target Domains either as part of its solution or as a result of applying it.

The natural learning path since childhood involves gaining knowledge through experience; it is during this process that image schemas are built. These image schemas are representations of how our body interacts with the environment and of our understanding of it. For example, when used in the design of an interface, image schemas (conventionally written in capital letters) such as BIG-SMALL [8, 10], FAST-SLOW [8] and UP-DOWN [11, 12] add a greater level of intuitiveness. A BIG symbol can be understood as near or more important; a FAST blinking light can mean greater urgency than a SLOW one. We do not explicitly learn the meaning of each image schema, but we nevertheless understand them since we have previously encountered them without even realizing it.

As previously mentioned, a Target Domain can be intentionally affected in order to provoke a perception change. Source Domains provide the medium through which new information is captured. They work on the different modalities and have the characteristic of carrying a specific physical value. As such, source domains are closely linked to the implementation of an interaction pattern, e.g. vibration, stiffness, colour, symbols, beeps, etc.
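The pattern elements described in this section can be captured in a small data structure. The following is only a minimal sketch and not the authors' implementation: the class name `InteractionPattern`, its field names and the `is_complete` check are assumptions introduced for illustration, and the example values are paraphrased from the collision avoidance pattern described later in the paper.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class InteractionPattern:
    """Hypothetical container for one pattern of a pattern language."""
    name: str
    problem: str
    solution: str
    consequences: str
    implementation_example: str = ""
    # Human factors elements attached to Solution and Consequences.
    target_domains: List[str] = field(default_factory=list)   # e.g. urgency, importance
    image_schemas: List[str] = field(default_factory=list)    # e.g. BIG-SMALL, FAST-SLOW
    source_domains: List[str] = field(default_factory=list)   # e.g. colour, vibration, beeps
    # Links to other patterns, stored by name so patterns stay independent.
    linked_patterns: List[str] = field(default_factory=list)

    def is_complete(self) -> bool:
        """True if the four essential elements (name, problem, solution, consequences) are filled."""
        return all([self.name, self.problem, self.solution, self.consequences])


example = InteractionPattern(
    name="Collision Avoidance",
    problem="Prevent collisions with objects in the vehicle's proximity.",
    solution="Escalate stepwise while the driver keeps approaching the obstacle.",
    consequences="Fewer collisions; possible overreliance on a non-perfect system.",
    target_domains=["Variation", "Importance"],
    image_schemas=["PATH", "BRIGHT-DARK"],
    source_domains=["colour"],
)
```

Storing links as pattern names rather than object references is one way to reflect the independence of patterns: a pattern can be reused in another language without carrying its neighbours along.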

3 Interaction Pattern Example for Shielded Vehicle Application

In this section the application of an example interaction pattern for shielded vehicles will be described. First the respective research questions will be stated followed by the interaction pattern implementation. Afterwards, the study design will be presented. The section concludes with the respective evaluation of the study results.

3.1 Research Questions

The two main research questions of the study were:

Can driving performance and safety in a shielded vehicle with restricted vision in the proximity of the vehicle be improved using interaction patterns?

Can interaction patterns be used to adapt the current driver behaviour towards better performance while reducing the task load?

3.2 Implementation

Name.

Collision Avoidance.

Problem.

The main problem addressed by the interaction pattern is to prevent collisions with objects in the vehicle’s proximity. From the perspective of the ego-system there are at least two tension poles between which a balance needs to be achieved: approaching the obstacle(s) and keeping a distance from (or deviating around) the obstacle. Awareness might be low and perception might be reduced. The problem can be visualized, see Fig. 2.

Fig. 2. Abstract problem description for the interaction pattern “collision avoidance”. Upper red arrow represents tensing action due to approaching the obstacle. Lower green arrow represents relaxing action due to receding from the obstacle. The balancing action is to avoid the obstacle and reach a safe distance. (Color figure online)

Solution.

A stepwise escalation can lead to a higher awareness that the danger in the current situation is increasing when continuing with the current action or non-action. Escalation also offers the opportunity to react to user actions, and hence to respond positively to behaviour adaptation towards the non-danger tension pole. Depending on the relative speed and the escalation phase, a respective obstacle avoidance manoeuvre should be chosen, e.g. an implemented automated lane change pattern or an implemented emergency brake pattern. To make both available, a mode transition pattern is necessary to decouple the driver.

The escalation should be triggered depending on the Time to Collision (TTC) in situations with rather high relative velocities, and depending on distance in situations with rather low relative velocities. The result of the pattern implementation is an improved awareness of the target domains Variation and Importance: Variation of the current system state, and Importance of changing the current behaviour.

Consequences.

The interaction pattern aims at a reduction in collisions with near objects and accordingly will reduce lateral displacement from the centre of the lane. Due to a reduced task load, the Situation Awareness of drivers will be enhanced. The solution focuses on adaptation management; therefore certain internal target states are addressed. The domains in focus are Variation and Importance, since an action needs to be taken to avoid a dangerous situation that is going to happen in the current course of (non)action. Challenging consequences are overtrust in or overreliance on a non-perfect system. Also connected to non-perfect systems are wrong escalations due to falsely detected objects, which may negatively affect acceptance. Finally, an overreaction by the user could be observed when escalation phases are too small.

Implementation Example.

The target domain of Variation can be addressed using the image schema PATH. Structural elements of PATH are a start, an end and a direction [13, 14]. The PATH schema also includes a series of locations [15] that can be interpreted as escalation steps. Symbolic qualities addressing Importance can be implemented with colour codes following the pattern from traffic lights, where red means stop or danger and green means go or safe [16]. These colour codes can be emphasized using the BRIGHT-DARK image schema [12].

The start and end locations or phase limits need to be determined with relevant variables. In a situation with rather high relative velocities, e.g. following a moving car, distance alone is not sufficient information to determine the need for behaviour adaptation. In situations with high relative velocities the need for reaction arises at a greater distance than in situations with low relative velocities. Therefore a combination of relative velocity and distance, expressed as time to collision (TTC), seems to be a valid concept to determine escalation boundaries in situations with rather high relative velocities. In more static situations, e.g. parking or slowly driving in a narrow road, the TTC becomes unfeasible due to extremely low relative velocities and the need for very close approaches, so that distance seems to be a useful concept in such situations. Another variable, which takes the driver’s current behaviour into account, is the current steering angle. Therefore, depending on the situation, the same pattern can be used with different escalation variables and boundaries.
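The choice between TTC and distance as the escalation variable can be sketched as follows. This is an illustration under stated assumptions, not the implementation used in the study: the function names and the threshold `LOW_CLOSING_SPEED_MPS` are hypothetical, since the paper does not state where "rather low" relative velocity ends.

```python
import math

# Hypothetical boundary (m/s) below which the situation is treated as
# "rather low relative velocity"; the paper gives no numeric value.
LOW_CLOSING_SPEED_MPS = 1.0


def time_to_collision(distance_m: float, closing_speed_mps: float) -> float:
    """TTC in seconds: distance divided by closing speed.

    Returns infinity when the gap is not closing, because no collision
    is predicted on the current course.
    """
    if closing_speed_mps <= 0.0:
        return math.inf
    return distance_m / closing_speed_mps


def escalation_variable(distance_m: float, closing_speed_mps: float):
    """Return (name, value) of the variable that should drive the escalation."""
    if closing_speed_mps < LOW_CLOSING_SPEED_MPS:
        # Near-static situation (e.g. parking): TTC grows very large,
        # so the plain distance is used instead.
        return ("distance_m", distance_m)
    return ("ttc_s", time_to_collision(distance_m, closing_speed_mps))
```

For instance, a gap of 30 m closing at 10 m/s would be judged by its TTC of 3 s, while a gap of 4 m closing at 0.5 m/s would be judged by the distance itself.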

The respective implementations for a collision avoidance pattern in a situation with rather low relative velocities are visualized in Fig. 3 for forward collisions and Fig. 4 for side collisions or road departure.

The PATH image schema is implemented with three distinctive steps or locations: low urgency, medium urgency and high urgency. The point at which a certain urgency level is reached depends on the distance between the ego vehicle’s bounding box and the obstacle’s bounding box as well as on the current steering angle. Depending on the ego vehicle’s action, a Variation of Importance is defined by the escalation phase.

In Fig. 3 we see the implementation of the collision avoidance pattern in a forward collision avoidance assistance when approaching a parked truck.

When the distance between the ego vehicle and the obstacle is 5 m, the forward hood turns from green to orange, unless the driver starts to steer to the right. When the forward distance is lower than 2 m and the ego vehicle and the obstacle are in the same lane, the forward hood turns from orange to red, unless the driver starts to steer to the right. If the driver engages reverse and the distance increases, the interaction pattern deescalates accordingly.
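The escalation rules for the forward hood can be written as a small state update. The sketch below is one possible reading of the description, not the study's code: the names `Phase`, `target_phase` and `next_phase` are hypothetical, the 5 m and 2 m limits come from the text, and two points are assumptions: steering to the right is treated as suppressing an escalation (the current phase is kept), and the orange phase is assumed not to require the obstacle to be in the same lane.

```python
from enum import IntEnum


class Phase(IntEnum):
    """Escalation steps along the PATH schema, ordered by urgency."""
    LOW = 0
    MEDIUM = 1
    HIGH = 2


HOOD_COLOUR = {Phase.LOW: "green", Phase.MEDIUM: "orange", Phase.HIGH: "red"}

ORANGE_DISTANCE_M = 5.0  # from the text: hood turns orange at 5 m
RED_DISTANCE_M = 2.0     # from the text: hood turns red below 2 m


def target_phase(distance_m: float, same_lane: bool) -> Phase:
    """Phase suggested by geometry alone, ignoring the driver's action."""
    if distance_m < RED_DISTANCE_M and same_lane:
        return Phase.HIGH
    if distance_m <= ORANGE_DISTANCE_M:
        return Phase.MEDIUM
    return Phase.LOW


def next_phase(current: Phase, distance_m: float,
               same_lane: bool, steering_right: bool) -> Phase:
    """Update the phase; escalation is suppressed while steering right."""
    target = target_phase(distance_m, same_lane)
    if target > current and steering_right:
        return current  # assumption: evasive steering blocks the escalation
    return target       # escalate, hold, or deescalate as distance grows
```

Because deescalation simply follows the geometric target, reversing away from the obstacle moves the hood from red back to orange and then to green without any extra rule.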

A similar collision avoidance pattern can be implemented to be used as a side collision avoidance assistance either for parked vehicles at the side or to prevent road departure (see Fig. 4).

Again, the escalation is defined not only by the current relation of the vehicle to a side obstacle or the roadside, but also by the driver’s action. If the driver already steers to the left while departing to the right, the pattern will deescalate according to the steering model of the vehicle and the respective time to line crossing (TLC).

3.3 Study Design

The participants drove, in random order, in a cabin condition, a monitor condition and a monitorsystem with augmentation condition (see Fig. 5).

The experiment used a within-subjects design with three repeated measurements. 18 military drivers took part. The mean age of the participants was 32 (SD = 6.3). Every run included 3 min of training to get used to the setup. After every condition the participants filled out the NASA-TLX questionnaire. At the end of the experiment the systems were evaluated in a semi-structured interview.

The NASA Task Load Index (NASA-TLX) [17] is an assessment tool that rates the perceived task load of a task. The task load is divided into six subscales. Each subscale is rated for each task on a 100-point range in 5-point steps.

- Mental Demand: How mentally demanding was the task?

- Physical Demand: How physically demanding was the task?

- Temporal Demand: How hurried or rushed was the pace of the task?

- Performance: How successful were you in accomplishing what you were asked to do?

- Effort: How hard did you have to work to accomplish your level of performance?

- Frustration: How insecure, discouraged, irritated, stressed, and annoyed were you?
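The six subscale ratings can be checked and combined as sketched below. The paper does not state whether an overall score was computed or whether the pairwise weighting of the original NASA-TLX was applied, so the unweighted mean shown here (often called Raw TLX) is an assumption, as are the function names, the key names and the 0 to 100 interpretation of the rating range.

```python
SUBSCALES = (
    "mental_demand",
    "physical_demand",
    "temporal_demand",
    "performance",
    "effort",
    "frustration",
)


def validate_rating(value: int) -> int:
    """Accept only ratings on the assumed 0..100 range in 5-point steps."""
    if not 0 <= value <= 100 or value % 5 != 0:
        raise ValueError(f"rating {value} is not on the 0..100 scale in steps of 5")
    return value


def raw_tlx(ratings: dict) -> float:
    """Unweighted mean of the six subscale ratings (an assumed scoring rule)."""
    missing = [name for name in SUBSCALES if name not in ratings]
    if missing:
        raise KeyError(f"missing subscales: {missing}")
    return sum(validate_rating(ratings[name]) for name in SUBSCALES) / len(SUBSCALES)


# Illustrative ratings only; these are not data from the study.
sample = {
    "mental_demand": 50,
    "physical_demand": 20,
    "temporal_demand": 40,
    "performance": 30,
    "effort": 60,
    "frustration": 10,
}
overall = raw_tlx(sample)
```

Analysing the subscales separately, as done in the evaluation of this study, needs only the validation step and not the overall score.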

Additionally, quantitative data on the displacement from the centre of the lane and the number of collisions with infrastructure, cars and mines were logged during the test runs. Different scenarios were used to assess the usefulness of the different test conditions in terms of performance and situation awareness (driving data), and task load (NASA-TLX). The scenarios were a city scenario with other traffic participants, like pedestrians and other vehicles, and an off-road part. In order to prevent sequence effects, two different maps with different scenario sequences were built and the test conditions were permuted.

Comparable interaction patterns were used in the off-road part, where a very narrow path needed to be followed through a mine field.
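One way to permute three test conditions across participants is sketched below. The paper only states that the order was random and that the conditions were permuted, so the round-robin assignment over all six orderings is an assumption chosen for illustration; the condition labels and function names are hypothetical as well.

```python
from collections import Counter
from itertools import permutations

CONDITIONS = ("cabin", "monitor", "monitor_with_augmentation")


def condition_orders(n_participants: int) -> list:
    """Cycle through all orderings of the conditions, one per participant."""
    orders = list(permutations(CONDITIONS))  # 3! = 6 possible orders
    return [orders[i % len(orders)] for i in range(n_participants)]


def position_counts(plan: list) -> Counter:
    """Count how often each condition occupies each position in the plan."""
    return Counter(
        (position, condition)
        for order in plan
        for position, condition in enumerate(order)
    )


plan = condition_orders(18)
counts = position_counts(plan)
```

With 18 participants each of the six orderings is used three times, so every condition appears equally often as the first, second and third run, which is the property that counters sequence effects.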

Setup.

The study was conducted in a generic static driving simulator running the professional driving simulation software SILAB (Note 1). An active steering wheel and active gas and brake pedals from SENSODRIVE (Note 2) were used as driving interfaces, and a sidestick from Stirling Dynamics (Note 3) was used as gear stick.

Regarding the visual interfaces, there was a training condition and three test conditions (see Fig. 5).

In the training condition (Fig. 5(a)), the simulation was visualized via a cave setup consisting of three large projection screens that were arranged at a 90° angle to the sides and to the front. Additionally, two 13″ LCD 720p monitors were used as rear-view mirrors. A third 13″ LCD 720p monitor was used to visualize the speedometer and tachometer.

In the cabin condition (Fig. 5(b)), a wooden vehicle frame was added to the training condition to introduce ambient occlusion at the A- and B-pillars.

In the monitor condition (Fig. 5(c) and (d)), the wooden frame was replaced by a monitor array of five 13″ LCD 720p monitors that cover 160° of the driver’s horizontal field of view. Rear-view mirrors as well as ramp mirrors were integrated as picture-in-picture (PiP) in the forward left and forward right screens (see Fig. 5(d)).

Additionally, in the monitorsystem with augmentation condition (Fig. 5(d)), interaction patterns escalated or deescalated depending on the actions of the driver and the respective situation. As mentioned before, the basic architecture of the generic assistance and automation system is based on the concept of interaction mediation and cooperative guidance and control of highly automated vehicles [6, 7]. In this study, only visual assistance was given via the screens. Therefore the final escalation step of the collision avoidance patterns (Figs. 3 and 4), in which control is shifted from human to automation, was not considered.

The hypothesis of this study is that human behaviour adaptation using interaction patterns via a camera monitorsystem with augmentation will improve situation awareness and the driver's performance, and will reduce the overall task load.

3.4 Evaluation

As mentioned before, the concepts were tested in a simulator experiment in which 18 military drivers took part. The mean age of the participants was 32 (SD = 6.3). All of them held a car and a truck driving licence, eight a motorcycle licence and three a tank licence. Eleven of the participants used their vehicle for private purposes daily, and one participant 3–5 times a week. 50% of the participants had very little simulator experience, and 50% little or rather little. Eleven participants assessed their driving style as safe/experienced, three as dynamic/sportive/brisk, and four as cautious. The experience with driving assistance systems, e.g. lane departure warning systems, was very low. Only with adaptive cruise control systems did 50% of the participants have extensive experience.

In the NASA-TLX (see Fig. 6 and Table 1), the mental demand for driving in the cabin condition was rated higher than for driving in the monitor and the monitorsystem with augmentation conditions.

The physical demand was also rated highest for the cabin condition. The monitorsystem with augmentation had the lowest temporal demand, effort and frustration. The participants were most satisfied with their performance after driving with the monitorsystem with augmentation. For the performance subscale there was a statistically significant difference between the conditions: a repeated measures ANOVA showed F(2, 34) = 7.055, p = .003, partial η² = .293. A Bonferroni-corrected post-hoc test showed a significant difference between the cabin and the monitor condition (p = .009, 95% CI [−22.63, −2.93]). There was also a significant difference between the monitor and the monitorsystem with augmentation condition (p = .005, 95% CI [−19.45, −3.3]).
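The reported degrees of freedom and effect size can be cross-checked from the F value alone. The sketch below uses the standard identity between F and partial η² and assumes that no sphericity correction was applied to the degrees of freedom; the function names are hypothetical.

```python
def rm_anova_dfs(n_participants: int, n_conditions: int):
    """Degrees of freedom of a one-way repeated measures ANOVA (uncorrected)."""
    df_effect = n_conditions - 1
    df_error = (n_participants - 1) * (n_conditions - 1)
    return df_effect, df_error


def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from F: (F * df_effect) / (F * df_effect + df_error)."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


df_effect, df_error = rm_anova_dfs(n_participants=18, n_conditions=3)
effect_size = partial_eta_squared(7.055, df_effect, df_error)
```

With 18 participants and three conditions the degrees of freedom come out as (2, 34), and the effect size rounds to .293, which agrees with the values reported for the performance subscale.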

The monitorsystem with augmentation condition showed the lowest lateral displacement in all parts of the scenarios (see Fig. 7 and Table 2). However, no statistically significant difference was found between the conditions.

The following diagrams (Figs. 8, 9 and 10) show the number of collisions with the respective elements in each condition.

Collisions with infrastructure, cars and mines occurred mainly in the cabin condition. Both the probability of a collision and the absolute number of collisions were higher there. The infrastructure in this study consisted of houses, walls, traffic lights and traffic signs.

4 Discussion and Outlook

This publication described a systematic approach to human behaviour adaptation, based on interaction patterns as a fundamental way to design and describe human machine interaction, and on image schemas as the basic elements of the resulting interaction. This approach was developed in close interplay with the concepts of cooperative guidance and control, where a cooperative automation and a human control a machine together, and of augmented reality, where a natural representation of the world, e.g. in the form of a video stream, is enriched with dynamic symbology. The concept was applied to armoured vehicles, instantiated as the interaction patterns “longitudinal and lateral collision avoidance”, implemented in a fixed-base simulator, and tested with professional operators. The results were a good mix of encouragement and lessons learned, both for the methodical approach of pattern based human machine interaction, and for the application of AR-based cooperative guidance and control.

The use of interaction patterns for collision avoidance, which was tested in the study, showed improvements in several respects. One of the main objectives, reducing the number of collisions with vehicles in close proximity, was reached. Also, the lateral displacement was lowest with the augmentation in all parts of the scenarios, although this difference was not statistically significant. As a result, the driving performance could be enhanced. Furthermore, the task load could be reduced, as driving with the augmentation caused the lowest temporal demand, effort and frustration. The participants were also most satisfied with their performance in this condition.

A possible opportunity for improvement is the integration of live eye tracking to move the driver’s focus, e.g. from one screen to another, or to point out the critical collision area. Physiological metrics might be useful for patterns in other situations, e.g. combining higher assistance and automation degrees with a mode transition pattern when the task load of drivers is too high. Also, the visual figure of the hood could be improved in terms of size and form. Additionally, a multimodal extension of the patterns with haptic or acoustic feedback could be evaluated.

Regarding the AR-based cooperative guidance and control: We gained an increasing understanding of how this cooperative interplay between an automation and humans can be organized, and patterns are an excellent way to describe this organization. We have encouraging results on a couple of patterns, and especially with the link to image schemas, we increasingly understand why some patterns work differently and better than others. With all optimism, we are far from having optimal patterns, and far from having more than a first glimpse of this vast design space of human machine cooperation and technology based reality augmentation.

Regarding the overall approach of linking patterns with image schemas: For us this is the most promising way to link everything that the community has already learned about specific patterns and specific image schemas, and to make this available in the design process. We have a first understanding of how this link can be made; however, we are far from having an optimal way to link patterns and image schemas efficiently. More research, and especially more joint effort, is needed to organize and combine the increasing knowledge that is being built up in different spots in the community, and to make this available in the specific design and engineering situation of real products, so that it can improve the increasingly complex human machine systems, not only in the far future, but right here, right now.

Notes

1. WIVW, https://wivw.de/en/silab.

2. SENSODRIVE, https://www.sensodrive.de.

3. Stirling Dynamics, https://www.stirling-dynamics.com.

References

1. Alexander, C., Ishikawa, S., Silverstein, M., Jacobson, M., Fiksdahl-King, I., Angel, S.: A Pattern Language: Towns, Buildings, Construction. Oxford University Press, New York (1977)

2. Gamma, E., Helm, R., Johnson, R., Vlissides, J.: Design Patterns: Elements of Reusable Object-Oriented Software. Addison-Wesley, Boston (1995)

3. Borchers, J.O.: A pattern approach to interaction design. AI Soc. 15, 359–376 (2001)

4. Flemisch, F., Heesen, M., Hesse, T., Kelsch, J., Schieben, A., Beller, J.: Towards a dynamic balance between humans and automation: authority, ability, responsibility and control in shared and cooperative control situations. Cognit. Technol. Work 14, 3–18 (2011)

5. Altendorf, E., Flemisch, F.: Prediction of driving behavior in cooperative guidance and control: a first game-theoretic approach. In: 3. Interdisziplinärer Workshop: Kognitive Systeme. Duisburg, Germany (2014)

6. Baltzer, M., Altendorf, E., Meier, S., Flemisch, F.: Mediating the interaction between human and automation during the arbitration processes in cooperative guidance and control of highly automated vehicles: basic concept and first study. In: Stanton, N., Landry, S., Bucchianico, G.D., Vallicelli, A. (eds.) Advances in Human Aspects of Transportation Part I, pp. 439–450. AHFE Conference, Krakow (2014)

7. Flemisch, F., Winner, H., Bruder, R., Bengler, K.: Cooperative guidance, control, and automation. In: Winner, H., Hakuli, S., Lotz, F., Singer, C. (eds.) Handbook of Driver Assistance Systems, pp. 1–9. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-09840-1_58-1

8. Hurtienne, J.: Image schemas and design for intuitive use – exploring new guidance for user interface design. Dissertational thesis. Technische Universität Berlin (2011)

9. Baltzer, M., Weßel, G., López, D., Flemisch, F.: Interaction patterns for cooperative guidance and control. In: IEEE International Conference on Systems, Man, and Cybernetics, Banff, Canada (2017)

10. Tolaas, J.: Notes on the origin of some spatialization metaphors. Metaphor Symb. Activity 6, 203–218 (1991)

11. Lakoff, G., Johnson, M.: Philosophy in the Flesh: the Embodied Mind & its Challenge to Western Thought. Basic Books, New York (1999)

12. Baldauf, C.: Metapher und Kognition: Grundlagen einer neuen Theorie der Alltagsmetapher. P. Lang, Frankfurt am Main (1997)

13. Johnson, M.: The Body in the Mind: The Bodily Basis of Meaning, Imagination, and Reason. University of Chicago Press, Chicago (1987)

14. Lakoff, G.: Women, Fire and Dangerous Things. University of Chicago Press, Chicago (1987)

15. Lakoff, G.: Some empirical results about the nature of concepts. Mind Lang. 4, 103–129 (1989)

16. Bolz, R.E.: CRC Handbook of Tables for Applied Engineering Science. CRC Press, Boca Raton (1973)

17. Hart, S.G., Staveland, L.E.: Development of NASA-TLX (Task Load Index): results of empirical and theoretical research. Adv. Psychol. 52, 139–183 (1988)


© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Baltzer, M.C.A., Lassen, C., López, D., Flemisch, F. (2018). Behaviour Adaptation Using Interaction Patterns with Augmented Reality Elements. In: Schmorrow, D., Fidopiastis, C. (eds) Augmented Cognition: Intelligent Technologies. AC 2018. Lecture Notes in Computer Science(), vol 10915. Springer, Cham. https://doi.org/10.1007/978-3-319-91470-1_2

DOI: https://doi.org/10.1007/978-3-319-91470-1_2

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-91469-5

Online ISBN: 978-3-319-91470-1

eBook Packages: Computer Science, Computer Science (R0)