Abstract

We developed a running support system that uses body movements as input for moving through a realistic 3D map. Users control their movement in the 3D map with their own body movements. A depth-camera sensor tracks the user’s body joints as the user moves; the joint location changes are tracked in real time, and the system calculates a speed from them that is used for speed control. As a result, when users run in real life, they also feel as if they are running naturally in the system, and the experience feels realistic because the avatar’s speed changes with the user’s speed. Our system uses a realistic 3D map from Zenrin, which contains detailed features such as traffic signs and train stations, as well as environmental features such as weather, lighting, and shadows. With these, we aim to give users a more realistic and enjoyable experience.

In our system, users wear a head-mounted display (HMD) while running, through which they see the realistic 3D map environment. We aim to provide an immersive experience in which users feel as if they are visiting the real place, which keeps their motivation high. Integrating a realistic 3D map into the system enriches the user’s experience, keeps users motivated, and provides a natural environment similar to the real world. We hope our system can offer users an alternative way of running, in a virtual environment.

1 Introduction

Developments in realistic 3D maps and head-mounted displays (HMDs) open new possibilities for creating interactive and immersive interfaces that let users explore and enjoy new experiences in the virtual world. Meanwhile, the depth camera is a well-known device that can locate body joints and recognize body movements from changes in joint locations. Several depth cameras are on the market, such as the Leap Motion, which specializes in detecting hand and finger movements. We are interested in full-body movement tracking using the Kinect depth camera in a realistic 3D map environment.

Realistic 3D maps have recently become more popular due to the increasing need for virtual environments that resemble the real world. Zenrin, a Japanese map company, has created realistic 3D maps of cities in Japan. The Zenrin 3D map includes detailed information such as traffic and road signs, public spaces, and natural landscapes [1]. Several cities have already been modeled and more will follow, creating opportunities for virtual reality (VR) system development. VR offers many possibilities for developing interactive and immersive applications. Although VR carries risks such as VR sickness [2], it creates opportunities for new applications and provides a unique experience for the user.

Exercise is one of the most important aspects of human life; we need to exercise routinely to keep our bodies healthy and fit. Among the many kinds of exercise, running is popular because people consider it fun and easy to do. Despite its popularity, many people today do not exercise enough to maintain their health. Many factors keep people from exercising routinely, so we aim to create a system that people can use to exercise, especially to run, anytime and anywhere they want.

In this paper, we propose a support system for running in a realistic 3D map. The user’s body movements, captured with a Kinect depth camera, serve as input [3]. The 3D map used in this system replicates the real environment of a city in Japan. We expect the realistic 3D map to keep users motivated and to give them familiarity with the real environment.

2 Goal and Approach

Our aim is to create a running support system in which users control movement in the 3D map with their body movements. When users run in the real world, they should feel as if they are running naturally in the map. Integrating a realistic 3D map enriches the user experience, keeps users motivated, and provides a natural environment.

Systems that use a realistic 3D map as their environment are still limited because suitable environments are lacking. Zenrin’s 3D maps of Japanese cities open new ways of presenting immersive experiences. Body movement is used as input to the system by mapping the user’s body joints onto a character in the system [3]. With body movement as input, users feel more connected to the system, and the way they move and control it feels more realistic. The body joint movement data are used to calculate velocity and recognize actions [4], and the recognized action determines the movement in the system.

Exploring a large virtual environment usually requires a lot of physical space. Consequently, many systems for virtual environment exploration can only be realized at a small scale or rely on a controller for moving through the virtual world. A controller is one solution, but it does not give users a natural feeling. Another possible solution is the walking-in-place principle, which allows users to explore a large virtual environment without a large physical space [5].

We use a 3D map from Zenrin as our virtual world environment. The Zenrin 3D map provides detailed and accurate information (Fig. 2), so users feel as if they are visiting the real place. It should also be easier for users to remember locations and maintain their running performance. Because they feel as if they are running in a real place, their performance is expected to be similar to that of real running.

In this system, body movement is the input. The user’s body movement is tracked and translated into output in the system. We use body movement to give users a natural feeling, so that they move at ease and feel connected to the system through their bodies. The body movement is represented by the real-time movement of the body joints.

Among the available depth cameras, we chose the Kinect because it can capture whole-body data. The Kinect captures the user’s body movement by tracking body joints; the Kinect v2 tracks 25 body joints per person and offers other features such as gesture detection. We want to use whole-body movement for moving through the system, speed control, posture control, and other features that require full-body movement.

Users wear a head-mounted display to look inside the 3D map (Fig. 1a). We chose an HMD to provide an immersive and natural experience. Users can run for longer periods and get a feel for the particular area they explore in the map.

Our running support system has several merits. First, users can exercise while looking around the realistic 3D map, which we expect to increase their performance and keep their motivation high. Second, using body movement instead of other input methods gives users a feeling of connection with the system. Third, the realistic environment gives users insight into the area and helps them remember locations when they later visit the real place.

3 Running Support System

3.1 Realistic 3D Map

We implemented the running support system in a realistic 3D map of a Japanese city provided by Zenrin. We use the 3D map of Akihabara, known as the “Otaku city”, because of its worldwide popularity. The 3D map provides a detailed environment and smooth imagery of the real Akihabara. We downloaded the 3D map file from the Unity Asset Store (Fig. 2).

The 3D map contains detailed infrastructure, public spaces, and natural landscapes; traffic and road signs, train stations, and rivers can be observed just as in the real world. We hope this detailed map keeps users’ motivation high and makes them feel as if they are exploring the real location. The raw file downloaded from the Unity Asset Store still contains several features provided by the company, so to develop our own system we had to delete these features and install our own.

3.2 Moving Forward Mechanism

The first feature of the system is moving forward. The goal is to let users run as if in the real world, by moving their legs and arms and adopting a similar posture. When users make running-like movements, the point of view in the virtual world changes (Fig. 3) as if they were moving in the real world. The system also provides speed control for the running movement; here, speed means the speed at which the avatar moves through the map. Figure 4 illustrates the running-like movement performed by users.

First, we use the depth camera to track the knee joints. Then, we approximate the speed of the knee movements along the x, y, and z axes. We calculate the velocity by comparing the position of each joint between consecutive frames [4] and take the larger of the right and left knee values. This maximum serves as the “speed” approximator.
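
As an illustration of the speed approximator described above, the following Python sketch computes each knee’s speed from its positions in two consecutive frames and keeps the larger value. It is a minimal sketch under stated assumptions, not the Unity implementation used in this work: joint positions are (x, y, z) tuples in meters, consecutive frames are `dt` seconds apart (about 1/30 s at the Kinect v2’s nominal 30 frames per second), and the function names are hypothetical.

```python
import math
from typing import Tuple

# A joint position in camera space, in meters (assumed layout).
Vec3 = Tuple[float, float, float]


def joint_speed(prev: Vec3, curr: Vec3, dt: float) -> float:
    """Speed of one joint (m/s): displacement between two frames divided by dt."""
    if dt <= 0:
        raise ValueError("dt must be positive")
    return math.dist(prev, curr) / dt


def knee_speed(prev_left: Vec3, curr_left: Vec3,
               prev_right: Vec3, curr_right: Vec3, dt: float) -> float:
    """Speed approximator: the larger of the left and right knee speeds."""
    return max(joint_speed(prev_left, curr_left, dt),
               joint_speed(prev_right, curr_right, dt))


# Example: the left knee moves 2 cm along z in one frame at 30 fps; the right knee is still.
speed = knee_speed((-0.1, 0.5, 2.0), (-0.1, 0.5, 2.02),
                   (0.1, 0.5, 2.0), (0.1, 0.5, 2.0), dt=1 / 30)
print(f"{speed:.2f} m/s")  # prints "0.60 m/s"
```

Taking the maximum rather than the average means that whichever knee is currently swinging drives the speed, so the value does not drop to half while the other knee is momentarily still.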

The running speed obtained in our system does not accurately represent the user’s actual running speed. Instead, knee movement is translated into steps in our system as an approximation of running speed. How many steps we interpret is flexible and can be changed; our main point is to reflect changes in the user’s real-world running intensity as changes in moving speed in the system.
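
The text leaves the translation from knee movement to avatar speed adjustable. A minimal Python sketch of one way to express this, assuming a simple linear gain with an upper limit, is shown below; `avatar_speed`, `gain`, and `max_speed` are hypothetical names and values, not parameters reported in this paper.

```python
def avatar_speed(knee_speed: float, gain: float = 2.0, max_speed: float = 6.0) -> float:
    """Map the knee-speed approximator (m/s) to avatar speed (map units per second).

    gain and max_speed are hypothetical tuning parameters: a larger gain makes
    the same knee motion cover more ground in the map, and max_speed caps
    sudden spikes such as tracking glitches.
    """
    if knee_speed < 0:
        raise ValueError("knee_speed must be non-negative")
    return min(gain * knee_speed, max_speed)


print(avatar_speed(0.6))  # 1.2
print(avatar_speed(5.0))  # 6.0 (capped)
```

Changing `gain` corresponds to changing how many steps in the map one unit of knee movement is interpreted as, while the cap keeps a single noisy frame from launching the avatar forward.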

We use knee movement as our input because of human running posture. In our system, users do not run as they would in the real world; instead, they run in place. We observed that the knees are the body parts that move the most when people run, so our system tracks the knee joints and uses their changes over time as the speed control input. Figure 5 illustrates the horizontal knee movement we capture to produce the moving forward mechanism and speed control in our system.

We added a speed controller to realize a realistic running support system. People run at a certain speed for a certain period, and their speed changes for various reasons. Integrating speed into the system gives users a more realistic feeling, so we expect performance over a given duration in our system to correspond to that of real running, with similar benefits.

3.3 Horizontal Rotation

Another feature of the system is horizontal rotation, which lets users change their point of view in the horizontal direction. Because the user’s physical space is limited, we need another way to realize horizontal movement [8]. The horizontal rotation is therefore driven by the user’s orientation relative to the depth camera.

To change direction in the system, users turn their bodies relative to the depth camera. For example, as shown in Fig. 6(a), if the user’s orientation exceeds a threshold angle, the point of view in the system also turns toward that direction (Fig. 6(b)). This feature compensates for the mismatch between the vast 3D map and the limited physical space: users can change direction and explore other areas without permanently changing their direction in real life, and they return to their original orientation when they start running again.

We set a threshold for this control: if the user turns to the left or right by more than the threshold, the point of view in the system changes according to the user’s direction. We set the threshold to 45° so that the system does not easily mistake ordinary movements for a change-direction command. When the angle reaches the threshold, the system sends the command to the GUI object attached to the avatar. We gave the GUI object a command equivalent to mouse movement input and restricted its movement to the x axis; the other axis is already covered by the head-mounted display, which users use to look around the environment.
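
The 45° rule can be sketched as follows. The paper does not say which joints define the user’s orientation; this Python sketch assumes, for illustration, that it is the yaw of the line through the left and right shoulder joints, in a camera space where y points up and z points away from the sensor. When a user facing the camera turns left, the right shoulder swings toward the camera (smaller z); when the user turns right, it moves away. The function names are hypothetical.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) in meters, camera space

TURN_THRESHOLD_DEG = 45.0  # threshold stated in the text


def shoulder_yaw_deg(left_shoulder: Vec3, right_shoulder: Vec3) -> float:
    """Yaw of the shoulder line relative to the camera, in degrees.

    0 means facing the camera; negative means the right shoulder is closer to
    the camera (user turned left); positive means it is farther (turned right).
    """
    dx = abs(right_shoulder[0] - left_shoulder[0])
    dz = right_shoulder[2] - left_shoulder[2]
    return math.degrees(math.atan2(dz, dx))


def turn_command(left_shoulder: Vec3, right_shoulder: Vec3,
                 threshold_deg: float = TURN_THRESHOLD_DEG) -> Optional[str]:
    """Return "left" or "right" once the yaw reaches the threshold, else None."""
    yaw = shoulder_yaw_deg(left_shoulder, right_shoulder)
    if yaw <= -threshold_deg:
        return "left"
    if yaw >= threshold_deg:
        return "right"
    return None


print(turn_command((-0.2, 1.4, 2.0), (0.2, 1.4, 2.0)))  # facing the camera: None
print(turn_command((-0.1, 1.4, 2.2), (0.1, 1.4, 1.8)))  # right shoulder closer: left
```

A large threshold such as 45° keeps the small torso twists that occur naturally while running in place well below the trigger level.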

3.4 Posture Control

Posture control compares the user’s running posture with a standard posture [9]. It helps users maintain good running posture, for example by asking them to keep their shoulders and neck straight (Fig. 7(a)).

In our system, the avatar is connected to the user’s body joints, so we can obtain the position (x, y, and z) of each joint, such as the neck, head, spine, torso, and shoulders. From these positions, we calculate the following angles: the angle between the neck and head, the angle between the torso and neck, and the angle between the shoulders.
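
The three angles can be computed from joint positions with elementary vector geometry, as in the Python sketch below. The paper does not define the angles precisely, so the definitions here are assumptions: the neck-head angle is the tilt of the neck-to-head segment from vertical, the torso-neck angle is the angle between the torso segment (spine base to neck) and the neck-to-head segment, and the shoulder angle is the tilt of the line between the shoulders from horizontal. The dictionary keys are hypothetical names, loosely following Kinect v2 joints such as SpineBase, Neck, Head, ShoulderLeft, and ShoulderRight.

```python
import math
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) in meters, y pointing up


def sub(a: Vec3, b: Vec3) -> Vec3:
    """Vector from point b to point a."""
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def angle_deg(u: Vec3, v: Vec3) -> float:
    """Angle between two vectors in degrees (0 to 180)."""
    dot = sum(p * q for p, q in zip(u, v))
    norms = math.hypot(*u) * math.hypot(*v)
    if norms == 0:
        raise ValueError("zero-length vector")
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norms))))


def posture_angles(j: Dict[str, Vec3]) -> Dict[str, float]:
    """Compute the three posture angles (degrees) from named joint positions."""
    up = (0.0, 1.0, 0.0)
    neck_to_head = sub(j["head"], j["neck"])
    torso = sub(j["neck"], j["spine_base"])
    shoulders = sub(j["shoulder_right"], j["shoulder_left"])
    horizontal = math.hypot(shoulders[0], shoulders[2])
    return {
        "neck_head": angle_deg(neck_to_head, up),
        "torso_neck": angle_deg(torso, neck_to_head),
        "shoulder_tilt": math.degrees(math.atan2(abs(shoulders[1]), horizontal)),
    }


# Upright posture with the right shoulder 10 cm lower than the left:
pose = {
    "spine_base": (0.0, 1.0, 2.0), "neck": (0.0, 1.5, 2.0), "head": (0.0, 1.7, 2.0),
    "shoulder_left": (-0.2, 1.45, 2.0), "shoulder_right": (0.2, 1.35, 2.0),
}
print({k: round(v, 1) for k, v in posture_angles(pose).items()})
# {'neck_head': 0.0, 'torso_neck': 0.0, 'shoulder_tilt': 14.0}
```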

We set a threshold for each angle so that users keep a good running posture. The system compares the user’s posture with the standard running posture; when the user’s posture is bad, the system recognizes it and reminds the user. For example, when the user runs with uneven shoulders, the system reminds the user by displaying a text message in the head-mounted display, as shown in Fig. 7(b).
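
A minimal sketch of the reminder logic, assuming the three angles are already available in degrees: each angle is compared with its own threshold, and a message is produced for every angle that exceeds it. The paper does not report its threshold values or message texts, so the numbers and strings below are placeholders.

```python
from typing import Dict, List

# Placeholder limits in degrees; the paper does not report its thresholds.
DEFAULT_LIMITS: Dict[str, float] = {
    "neck_head": 15.0,
    "torso_neck": 20.0,
    "shoulder_tilt": 10.0,
}

# Placeholder reminder texts to be shown in the head-mounted display.
REMINDERS: Dict[str, str] = {
    "neck_head": "Keep your head up and your neck straight.",
    "torso_neck": "Keep your upper body upright.",
    "shoulder_tilt": "Keep your shoulders level.",
}


def posture_reminders(angles: Dict[str, float],
                      limits: Dict[str, float] = DEFAULT_LIMITS) -> List[str]:
    """Return one reminder for every angle that exceeds its limit."""
    return [REMINDERS[name] for name, limit in limits.items()
            if angles.get(name, 0.0) > limit]


# Uneven shoulders, as in the example in the text:
print(posture_reminders({"neck_head": 5.0, "torso_neck": 3.0, "shoulder_tilt": 14.0}))
# ['Keep your shoulders level.']
```

Keeping one limit per angle lets each aspect of posture be tuned separately; showing a message only after it has persisted for a few frames (not shown) might avoid flickering warnings from single noisy frames.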

3.5 Collision Control

Collision control prevents users from colliding with other objects in the room and keeps them at a proper distance from the Kinect camera. We use the depth data (z coordinate), that is, the user’s distance from the Kinect, together with the x coordinate to track the user’s position relative to the sensor in the room.

The system recognizes the distance between the user and other objects. In our research, we limited the user’s movement to a square area of 1.5 m² in front of the camera. This limitation prevents users from leaving the Kinect’s sensing area and from colliding with, and being injured by, surrounding objects.

We use the depth camera to measure the distance between the user and an object, in this case the table on which the depth camera is placed. Users must stay at least 0.5 m from the table (Fig. 8(a)). When the distance to the table falls below 0.5 m, the system displays a warning text on the screen, which disappears when the user moves back into the safe zone, as illustrated in Fig. 8(b).
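
The safe-zone check can be sketched in Python under stated assumptions: the camera sits at the front edge of the table, so the user’s depth z approximates the distance to the table; the 0.5 m minimum distance comes from the text; and the square area is taken, for illustration only, to be 1.5 m on a side, starting at the 0.5 m line and centered on the camera’s axis. The class name and messages are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SafeZone:
    min_distance: float = 0.5  # from the text: warn below 0.5 m from the table
    side: float = 1.5          # assumption: square side length in meters

    def warning(self, x: float, z: float) -> Optional[str]:
        """Return a warning for the user's floor position, or None if it is safe.

        x is the lateral offset from the camera axis and z the depth from the
        camera (assumed equal to the distance from the table), both in meters.
        """
        if z < self.min_distance:
            return "Too close to the table. Please step back."
        if z > self.min_distance + self.side:
            return "Too far from the camera. Please step forward."
        if abs(x) > self.side / 2:
            return "Please move back toward the center."
        return None


zone = SafeZone()
print(zone.warning(0.0, 0.4))  # Too close to the table. Please step back.
print(zone.warning(0.1, 1.2))  # None
```

Because the check returns None inside the zone, the display logic can simply show the returned text when there is one and hide it otherwise, which matches the disappearing warning described above.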

4 Implementation

We used several devices to implement our system: a Kinect depth camera for capturing full-body skeletal data, a Samsung Gear VR as the head-mounted display for an immersive experience, and a computer with a 2.5 GHz Intel i7 processor and 8 GB of RAM. The system was built in Unity 3D, with Microsoft Visual Studio used for scripting.

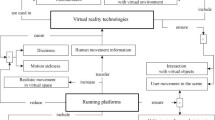

We used several SDKs to integrate the devices: the Microsoft Kinect for Windows SDK, the Kinect Unity SDK, and “Kinect v2 Examples with MS-SDK”. After building the system, we deployed it to the HMD using a server-based system that streams the Kinect data to the HMD in real time. Figure 9 shows an overview of the system software.

We use the Kinect for Windows SDK to connect the depth camera to the Windows environment. For ease of integration, we use “Kinect v2 Examples with MS-SDK”, downloaded from the Unity Asset Store, which works with the official Microsoft SDK. A VR version of this asset connects the head-mounted display with the Kinect. The server-based setup allows the Kinect data to be streamed live to the HMD over an internet connection.
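
The paper does not describe the format in which the server streams Kinect data to the HMD. As a hypothetical illustration only, a skeleton frame could be packed into a compact binary message with Python’s standard `struct` module: a timestamp and a joint count, followed by a joint identifier and x, y, z coordinates for each tracked joint. Such a message could then be sent, for example, as one UDP datagram per frame. The function names, field layout, and joint identifiers are assumptions.

```python
import struct
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]

HEADER = struct.Struct("<dB")  # timestamp in seconds (float64), joint count (uint8)
JOINT = struct.Struct("<B3f")  # joint id (uint8), x, y, z in meters (float32 each)


def encode_frame(timestamp: float, joints: Dict[int, Vec3]) -> bytes:
    """Pack one skeleton frame into a little-endian binary message."""
    parts = [HEADER.pack(timestamp, len(joints))]
    for joint_id, (x, y, z) in sorted(joints.items()):
        parts.append(JOINT.pack(joint_id, x, y, z))
    return b"".join(parts)


def decode_frame(data: bytes) -> Tuple[float, Dict[int, Vec3]]:
    """Unpack a message produced by encode_frame."""
    timestamp, count = HEADER.unpack_from(data, 0)
    joints: Dict[int, Vec3] = {}
    offset = HEADER.size
    for _ in range(count):
        joint_id, x, y, z = JOINT.unpack_from(data, offset)
        joints[joint_id] = (x, y, z)
        offset += JOINT.size
    return timestamp, joints


frame = encode_frame(1.5, {3: (0.0, 1.7, 2.0), 2: (0.0, 1.5, 2.0)})
timestamp, joints = decode_frame(frame)
print(len(frame), timestamp, sorted(joints))  # 35 1.5 [2, 3]
```

In this layout a full Kinect v2 skeleton of 25 joints occupies 9 + 25 × 13 = 334 bytes per frame, small enough for a single datagram; coordinates are stored as float32, so decoded values are rounded to about seven significant digits.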

4.1 Body Movement as Input

We use the “Kinect v2 Examples with MS-SDK” asset to simplify connecting the user’s body joints to the avatar. The asset provides an avatar already integrated with each body joint recognized by the Microsoft Kinect SDK. We import the asset into Unity and position the Kinect so that the system can recognize the user’s body movement. Although the asset integrates body joints with the avatar well, the avatar can move only within a very limited space, the same size as the user’s physical space.

We connect each joint to a character model in the 3D map using Unity and the “Kinect v2 Examples with MS-SDK” asset. The user’s movement is mapped onto the character, as shown in Fig. 10, so that users feel connected and receive “feedback”. However, the asset restricts the avatar’s movement to the user’s physical movement, so moving the avatar through a large virtual world would also require a large physical space [5].

To avoid requiring a large space, we use virtual traveling techniques to cover large distances in the virtual environment [6]. These techniques let users move within only a small physical area. We implement this by having users run in place, which the system recognizes as movement in the virtual environment.
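
Running in place can also be recognized by counting steps from knee heights. The Python sketch below shows one common walking-in-place approach, a threshold with hysteresis on the knee’s height above its standing baseline. It is a swapped-in illustration rather than the knee-speed method used in this paper, and the class name and the lift threshold are hypothetical.

```python
class KneeStepCounter:
    """Counts in-place steps from one knee's height (y, meters) per frame.

    A step is registered when the knee rises more than `lift` above its
    standing baseline and then drops back below `lift / 2`. The gap between
    the two levels (hysteresis) keeps sensor jitter from counting extra steps.
    """

    def __init__(self, baseline_y: float, lift: float = 0.10) -> None:
        self.baseline_y = baseline_y  # knee height while standing still
        self.lift = lift              # hypothetical lift threshold in meters
        self.raised = False
        self.steps = 0

    def update(self, knee_y: float) -> bool:
        """Feed one frame; return True when a step has just been completed."""
        height = knee_y - self.baseline_y
        if not self.raised and height > self.lift:
            self.raised = True
        elif self.raised and height < self.lift / 2:
            self.raised = False
            self.steps += 1
            return True
        return False


counter = KneeStepCounter(baseline_y=0.50)
for y in [0.50, 0.55, 0.62, 0.65, 0.58, 0.52, 0.50, 0.63, 0.51]:
    counter.update(y)
print(counter.steps)  # 2
```

With one counter per knee, the number of steps over a short time window gives a cadence that could serve as an alternative speed signal.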

The remaining problem is how to move the avatar around the virtual environment. We use Unity’s basic First-Person Controller to create the moving forward mechanism. A GUI object in Unity can be moved with a keyboard key: when the key is pressed, the object moves in a certain direction, in our case forward. We then connect this GUI object to our speed control feature, so that when the detected speed exceeds a threshold, the system moves the GUI object attached to the avatar.
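
In Unity this gating would be written in C# around the First-Person Controller; the Python sketch below only models the logic, under illustrative assumptions: the avatar advances along its current heading by speed × dt per frame, but only while the detected speed exceeds a threshold, mimicking a held forward key. The class name, heading convention, and threshold value are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Avatar:
    x: float = 0.0
    z: float = 0.0
    heading_deg: float = 0.0  # 0 = the map's +z direction; changed by turn commands

    def advance(self, speed: float, dt: float, threshold: float = 0.3) -> bool:
        """Move forward like a held forward key, but only above the speed threshold.

        speed is the avatar speed (map units per second) derived from the knees;
        threshold is a hypothetical value below which the avatar stands still.
        Returns True if the avatar moved.
        """
        if speed <= threshold:
            return False
        rad = math.radians(self.heading_deg)
        self.x += math.sin(rad) * speed * dt
        self.z += math.cos(rad) * speed * dt
        return True


avatar = Avatar()
avatar.advance(speed=0.2, dt=1 / 30)  # below the threshold: no movement
avatar.advance(speed=3.0, dt=1.0)     # moves 3 units along +z
avatar.heading_deg = 90.0             # e.g. after a turn command
avatar.advance(speed=3.0, dt=1.0)     # moves 3 units along +x
print(round(avatar.x, 6), round(avatar.z, 6))  # 3.0 3.0
```

The threshold plays the role of the key press: below it the avatar stands still, so small body sway while standing does not move the user through the map.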

4.2 Body Movement-Based Features

Our system includes several features: the moving forward mechanism, speed control, horizontal rotation, posture control, and collision control. All of them use body movement data obtained from Kinect skeletal tracking, which is illustrated in Fig. 11.

Speed control uses the change in knee location over time. Horizontal rotation and posture control use the users’ joint locations to calculate several angles, which are compared with threshold values to trigger a change of point of view (horizontal rotation) or a warning text (posture control). Collision control uses the depth data (z), that is, the distance between the user and the Kinect camera.

5 Related Work

Body movements have been used to control VR systems in the past. Here, body movement means body joints or data derived from them, such as joint location changes [3]. These location changes are tracked by various devices and sensors, some processing the data frame by frame and others in real time. This method is popular in research because it is simple and feasible to realize [6].

In the past, realizing this in real time was difficult due to device limitations [3]. Since the development of the Kinect, however, such systems can run with adequate accuracy and robustness at an affordable cost. The Kinect tracks several body joints and provides them as depth data, so that their positions in the real world can be obtained [7]. Shotton et al. proposed the principle behind the Kinect skeletal tracker, which takes a single input depth image and infers a per-pixel body part distribution [10].

Wilson et al. proposed a similar approach that uses walking in place to explore a large virtual environment (VE) [5]. Using two depth cameras and orientation sensors mounted on top of the HMD, they built a system for exploring the VE by walking in place. In our research, we use a single depth camera without additional sensors.

A realistic 3D map in a VR system has also been used for fire evacuation training [4]. Sookhanaphibarn and Paliyawan use a university-based virtual environment in their system, in which users can walk around the area and train for the evacuation process in case of fire.

Compared with previous related work, our system has several advantages. We use fewer devices than other work, and we use a simpler configuration and simpler algorithms to create our features. We also use a realistic 3D map of a Japanese city provided by a Japanese map company, so the virtual environment in our work opens possibilities for other applications such as travel. The realistic environment also enhances the user experience, as users can enjoy the environment while using the system.

6 Preliminary Evaluation

We conducted a user study comparing actual running with running in our system. Our hypothesis is that users gain at least three major benefits from our system: running exercise, an enhanced user experience, and similarity to the real environment. While our system is clearly different from actual running, we want to understand the differences from the user’s perspective, the benefits, and possibilities for further evaluation.

6.1 Participants and Method

We asked seven participants (4 male and 3 female) to join our user study. All participants were aged approximately 23–26 years, had a routine running or jogging schedule during the week, and had never been to the actual location of our system’s map (Akihabara, Tokyo, Japan).

We asked the participants to perform several tasks, after which we interviewed them and asked several questions about our system to obtain feedback. We wanted the participants to experience different environments and compare them. The tasks were as follows:

1. Run for 10 s using our system.
2. Run for 1 min in the street.
3. Run for 1 min using our system.
4. Run while changing speed.
5. Observe the surrounding area while running.

The first task gives participants a general impression of our system through a short run. The second and third tasks capture their impressions of running for a longer period and the differences between running in the street and in our system. The fourth task checks their impressions of the speed control mechanism and its effect on their performance. The last task captures their experience of the environment while using our system.

6.2 Results

After the trial, participants filled in a questionnaire in which each question was graded from 1 to 5 (1 = least agree, 5 = most agree). After they completed the questionnaire and shared their feedback, we calculated the average grade for each question. Table 1 presents the questionnaire results.

Participants’ responses to question 1 were “agree” or “most agree”, with an average grade of 4.71. The participants felt that they could use our system not only for running but also for looking around the environment. Most participants agreed that the realistic environment in our system encouraged them to repeat the experience and explore the environment further.

Question 2 addresses participants’ attitudes toward using our system for a longer period. The average grade was 4, meaning that participants agreed the system is useful for long sessions. However, most participants also agreed that our system could make them lose balance due to repetitive actions and some system errors. They commented that exhaustion from the repetitive actions and system lag slowed them down and caused some confusion.

Participants’ responses to the speed control feature were positive. Most agreed that having the system follow their speed made them increase it: they tended to run faster because they wanted to reach certain locations sooner and explore more of the area. Just as in real-world running, participants tended to speed up when their stamina was high and slow down when it was low.

The usability of the horizontal rotation feature received an average grade of 4, indicating that participants found it useful. They commented that the feature is simple and useful for changing the moving direction, given that the real-world space is limited. On the other hand, two participants stated that the feature made them slightly uneasy because they had to stop suddenly when performing several sprinting motions in a short time.

Participants’ responses to posture control were positive. They stated that the feature helped them maintain their posture when unwanted changes occurred (e.g., one shoulder dropping lower). Some participants even stated that it felt like real running because the system kept reminding them to correct their posture.

Participants perceived collision control positively and considered it crucial for preventing injury. Before the evaluation began, all participants expressed concern about their safety while running with a head-mounted display. While using our system they could not see their surroundings, and the feature reminded them to move back in a certain direction when they were about to leave the safe zone.

We also received comments on the differences between our system and real running. Participants stated that our system can enhance running only to a certain extent; for example, over a very long period of use it cannot produce the same performance as real running. On the other hand, they considered the realistic environment the system’s major strength, as it made them want to explore and run more. The participants also looked forward to using the system to explore different environments and even to interact with other objects or users in the realistic 3D map environment.

7 Conclusions and Future Work

We designed a running support system in a realistic 3D map that uses body movement as input. Users can explore the vast and detailed 3D map while running. We also reflected more natural body movements in the system through speed control, posture control, and other features. Our system leaves room for further improvement, and participants perceived it as enhancing their experience.

Based on our evaluation with the participants, our system was perceived positively, which suggests that it can motivate users to run with good form. Moreover, participants felt that exploring the realistic 3D map environment somewhat improved their motivation to run. Although we designed our system for running, it could also be applied to other areas such as travel.

In the future, we plan to improve our system by using more of the body movements tracked by the Kinect to implement realistic movement; for example, arm movements could be used to interact with objects in the map. Another concern is improving usability over longer periods of use, given the risk of losing balance. We also plan to implement our system on other platforms such as Google Maps or Google Street View.

References

1. Zenrin 3D Asset. http://www.zenrin.co.jp/product/service/3d/asset/

2. Karina, A., Wada, T., Tsukamoto, K.: Study on VR sickness by virtual reality snowboard. Trans. Virtual Real. Soc. Jpn. 11(2), 331–338 (2006)

3. Charles, S.: Real-time human movement mapping to a virtual environment. In: Region 10 Symposium (TENSYMP), pp. 150–154. IEEE (2016)

4. Sookhanaphibarn, K., Paliyawan, P.: Virtual reality system for fire evacuation training in a 3D virtual world. In: IEEE 5th Global Conference on Consumer Electronics, pp. 1–2 (2016)

5. Wilson, P.T., Nguyen, K., Harris, A., Williams, B.: Walking in place using the Microsoft Kinect to explore a large VE. In: SAP 2013 Proceedings of the ACM Symposium on Applied Perception, pp. 27–33 (2014)

6. Bruder, G., Steinicke, F.: Implementing walking in virtual environments. In: Steinicke, F., Visell, Y., Campos, J., Lécuyer, A. (eds.) Human Walking in Virtual Environments, vol. 10, pp. 221–240. Springer Science+Business Media, New York (2013). https://doi.org/10.1007/978-1-4419-8432-6_10

7. Shotton, J., Fitzgibbon, A., Cook, M., et al.: Real-time human pose recognition in parts from single depth images. In: CVPR, pp. 1297–1304 (2011)

8. Williams, B., McCaleb, M., et al.: Torso versus gaze direction to navigate a VE by walking in place. In: SAP 2013, pp. 67–70. ACM (2013)

9. Tanaka, Y., Hirakawa, M.: Efficient strength training in a virtual world. In: 2016 International Conference on Consumer Electronics-Taiwan, pp. 1–2. IEEE (2016)

10. Taylor, B., Birk, M., Mandryk, R.L., Ivkovic, Z.: Posture training with real-time visual feedback. In: Proceedings CHI 2013 Extended Abstracts on Human Factors in Computing Systems, pp. 3135–3138 (2013)

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Svarajati, A.Y., Tanaka, J. (2018). Using Body Movements for Running in Realistic 3D Map. In: Chen, J., Fragomeni, G. (eds) Virtual, Augmented and Mixed Reality: Interaction, Navigation, Visualization, Embodiment, and Simulation. VAMR 2018. Lecture Notes in Computer Science, vol 10909. Springer, Cham. https://doi.org/10.1007/978-3-319-91581-4_16

DOI: https://doi.org/10.1007/978-3-319-91581-4_16

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-91580-7

Online ISBN: 978-3-319-91581-4

eBook Packages: Computer Science, Computer Science (R0)