Abstract

Virtual reality (VR) can provide robust assessment of cognitive spatial processing skills in individuals with visual impairment. VR combined with objective measures of behavioral performance, such as eye and hand tracking, affords a high degree of experimental control, task flexibility, participant engagement, and enhanced data capture. Individuals with visual impairment typically have difficulties identifying objects in a cluttered environment. Furthermore, these difficulties may differ depending on the type of visual impairment. Specifically, individuals with cortical/cerebral visual impairment (CVI) may show a greater sensitivity to visual task complexity compared to those with ocular-based visual impairment (OVI). We have developed a VR environment with integrated eye and hand tracking that simulates exploring a toy box in order to assess performance on a static object-based visual search task. A grid of toys was displayed for a brief duration while participants found and fixated on a specific toy hidden among others. For a given trial, we manipulated multiple factors: the number of unique distractor toys, the presence of a color/theme matched toy, and the background clutter. Results to date show that both visually impaired groups demonstrate increased variability in search patterns and reaction times as compared to controls. Additionally, performance of the CVI group fluctuates greatly as a function of task difficulty. Findings from the current work demonstrate a successful interaction between individuals with visual impairments and VR simulations in assessing high-level visual function. Further studies will serve as a theoretical foundation for the creation of new assessment and training paradigms for visually impaired individuals.

1 Introduction

This work presents a novel application of virtual reality (VR) environments for assessing and comparing visual search performance and spatial processing in individuals with visual impairment.

The combination of VR with objective measures of behavioral performance affords a high degree of experimental control, task flexibility, participant engagement, and enhanced data capture and characterization. Specifically, parameters of task difficulty, experimental factors, and environmental complexity can all be manipulated, and multiple behavioral performance outcome parameters can be captured immediately [1,2,3]. Recent enhancements in VR technology, including device miniaturization, ease of implementation, increasing realism, and relatively low cost, make it possible to develop more mobile platforms for testing individuals outside of research laboratory and clinical settings.

Previous research has shown that, for tasks requiring visual spatial knowledge acquisition, individuals show equivalent performance and preferences whether tested in the real world or in virtual simulations [4, 5]. Thus, VR can remove conflicting demands related to physical exertion for participants, while incorporating objective performance measures such as eye and hand tracking for naturalistic data collection. In essence, as long as the participant understands the nature and demands of the task, VR-based assessments can be very useful in assessing behavioral performance, even in individuals with physical or developmental disabilities [6].

One arena in which VR based environments could show great utility is in the assessment of cognitive spatial processing of individuals with visual impairment. Specifically, standard clinical testing measurements of visual acuity and visual field perimetry are inherently limited in that they fail to fully capture and characterize visual functional deficits associated with higher level visual processing, particularly in complex real world situations [7]. As an example, individuals with visual impairments often have difficulties recognizing familiar objects while viewing complex visual scenes. Furthermore, the nature of the visual impairment may also impact upon the type and degree of functional visual deficit observed [8].

We conducted a focus group study (see Design section for further details) that revealed that visually impaired children and adolescents often have trouble locating and identifying their favorite toy when it is in close proximity to other toys, but they can locate the same toy easily when it is in isolation. For example, individuals with ocular visual impairment (OVI) (e.g. ocular albinism) often have reduced visual acuity and impaired oculomotor function (i.e. nystagmus) and thus may take longer to find a target object when searching in a complex and cluttered visual scene. In contrast, individuals with developmental damage to visual processing areas of the brain (referred to as cortical/cerebral visual impairment, or CVI) may miss a visual target entirely, despite having the necessary visual acuity to perform the task and unimpaired eye movements. These observations highlight potential differences in visual search and spatial processing strategies related to task demand, as well as the type of visual impairment. While there is extensive research investigating perceptual abilities in ocular based visual impairments, the CVI population remains relatively understudied despite being the leading cause of congenital visual impairment in children in developed countries [9]. Furthermore, there is a growing need to develop novel methods to better characterize visual perceptual deficits beyond what can be achieved with standard clinical testing.

In this direction, we developed a VR testing environment called the “virtual toy box”. This VR platform creates a realistic simulation and testing environment in which a participant is instructed to find a target toy within a grid of other toys. Firstly, by exploiting the aforementioned advantages of VR, factors related to cognitive spatial processing of object complexity and clutter can be experimentally manipulated to characterize their individual effects on visual search performance. Secondly, integrated data capture methods obtained from eye and hand movement tracking provide realistic and objective measures of behavioral performance. Lastly, the flexibility and range of testing parameters mean that the same environment can be used to assess performance across different types of visual impairments.

2 Methodology

2.1 Participants

Preliminary data presented here include three participant groups: individuals with normally developed sight (controls), ocular visual impairment (OVI), and cortical/cerebral visual impairment (CVI). Data from a total of 9 participants are used to illustrate the study design and methodology of the current research. Participants ranged in age from 14 to 28 years (Control mean = 18.3, OVI mean = 25.0, CVI mean = 18.7). All participants had at least a high school level education and were right handed. The OVI and CVI groups had comparable visual acuity, albeit reduced compared to controls. However, all individuals possessed sufficient acuity for the present task (Snellen visual acuity ranging from 20/20 to 20/80).

2.2 Hardware and Software

An Alienware Aurora R6 with an Intel i5 processor, NVIDIA GTX 1060 graphics card, and 32 GB of RAM (Alienware Corporation ©) was used for development and execution of the experiment. A ViewSonic 27″ Widescreen 1080p (1920 × 1080 resolution) LED monitor (ViewSonic Corporation ©) displayed the testing environment. A Tobii 4C Eye Tracker (90 Hz sampling rate) (Tobii AB ©) recorded eye movements. A Leap Motion controller (120 Hz sampling rate) tracked hand motion (Leap Motion, Inc. ©). The testing platform was created in the Unity 3D game engine version 5.6 (Unity Technologies ©). The 3D object models were created using the modeling software Blender (Blender Foundation ®).

2.3 Design and Procedure

A focus group survey was conducted with teachers of the visually impaired prior to the development of the current iteration of the testing platform. The goal of this focus group study was to ensure that the final design of the testing platform accurately addressed the problem of visual search, spatial processing, and visual scene complexity, by taking into consideration the opinion of experts possessing extensive experience working with these visually impaired individuals. After viewing a video that highlighted the VR environment and current design features, six focus group participants rated their responses (using a 7 point Likert scale, with 1 meaning strongly disagree and 7 strongly agree) to a series of predetermined statements (e.g. rating the realism of 3D object models) and also open ended questions (e.g. listing additional factors for consideration). The results were overwhelmingly positive for scores of realism (5.7 out of 7) and feature importance (6.8 out of 7). Open ended feedback was used to improve/modify the design of the tested factors (e.g., addition of a “catch trial” containing a color/theme matched distractor). The survey results revealed unmet needs related to the assessment of visual performance and key factors of interest related to the VR environment realism and effectiveness for further consideration.

Prior to the experimental run, eye tracking calibration was performed for each participant using the Tobii Eye Tracking Software v2.9 calibration protocol, which took about 30 to 45 s. Participants then viewed and selected their favorite toy (i.e. target of interest, or “target toy”) for the search task. There were three possible toys to choose from that varied in color and theme: a blue truck, orange basketball, and yellow duck. Participants viewed each target toy in isolation as it rotated around the Y-axis before choosing. Toy selection was done to enhance the immersive feel of the task and ensure that the participant was able to correctly identify a target of interest.

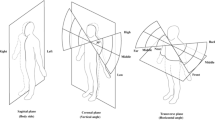

After selecting the target toy, the experimental program began. Participants viewed a toy box from an overhead perspective to simulate looking down into the box (see Fig. 1). Trials started with the box covered and only a fixation point displayed. The cover disappeared after 2 s and revealed a 5 × 5 grid of toys. Each toy within the grid was presented in a conical view (see Fig. 1). Participants had 4 s to visually locate and fixate on the target toy before the trial ended. The cover and fixation point reappeared between trials, before each new grid of toys was shown. The primary factor of interest was the number of other unique toys filling the grid (ranging from 1 to 9 unique toys), which tested the effect of multiple distractors (Fig. 1A and B). As a secondary factor of interest, a color/theme matched toy within the grid (presented in 25% of the trials) served as a “catch trial” designed to test how participants were identifying the target toy (Fig. 1C). An additional factor of interest, a background layer of toys, was present in 50% of the trials to test the effect of background visual complexity (Fig. 1D).

Fig. 1. Visual demonstration of various levels of the experimental factors. (A) Example trial with the minimum number of unique distractors (low), (B) trial with the highest number of unique distractors (high), (C) trial with color/theme matched distractor (circle), and (D) trial with background toys enabled. (Color figure online)

Unique distractor numbers were classified into three levels: “Low” (1 to 3 unique toys), “Medium” (4 to 6 unique toys), and “High” (7 to 9 unique toys), with each level representing 33% of the trials. The color/theme matched distractor varied based on the selected target toy, with the goal of presenting a toy that shared not only the color but also the categorical theme of the target. For example, if a participant selected the blue truck, the color/theme matched toy appeared as a blue helicopter (same color and transportation category – see Fig. 1C).
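The factor structure described in this section can be sketched as a small trial generator. This is only an illustrative sketch and not the Unity implementation used in the study: the helper name `make_run`, the seeding, the cycling through levels, and the independent random draw for each factor are all assumptions. The level ranges, the 25% matched-distractor rate, the 50% background rate, the 5 × 5 grid, and the 35 trials per run are taken from the procedure.

```python
import random

# Level ranges for the number of unique distractor toys (from the text).
DISTRACTOR_LEVELS = {"low": (1, 3), "medium": (4, 6), "high": (7, 9)}


def make_run(n_trials=35, seed=None):
    """Return a shuffled list of trial dicts for one run (hypothetical helper)."""
    rng = random.Random(seed)
    levels = list(DISTRACTOR_LEVELS)
    trials = []
    for i in range(n_trials):
        # Cycle through the levels so each covers about a third of the run.
        level = levels[i % len(levels)]
        lo, hi = DISTRACTOR_LEVELS[level]
        trials.append({
            "level": level,
            "n_unique_distractors": rng.randint(lo, hi),
            # Assumption: each factor is drawn independently per trial.
            "matched_distractor": rng.random() < 0.25,
            "background": rng.random() < 0.50,
            # The target occupies one cell of the 5 x 5 grid.
            "target_cell": (rng.randrange(5), rng.randrange(5)),
        })
    rng.shuffle(trials)
    return trials
```

Because the matched-distractor and background flags are drawn at random here, a single run only approximates the 25% and 50% rates; an exact split would require fixing the counts in advance.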

Participants completed a total of 35 trials per run with 3 runs overall. A run lasted approximately 3.5 min with a brief rest between each run. Response collection was done entirely through eye tracking, as participants were instructed to visually locate and fixate on the target toy with their eyes. The eye tracker collected the (X, Y) coordinate position of the gaze point on the screen at any given moment. The Tobii Unity SDK mediated data collection and data was sampled at the tracker’s default refresh rate of 90 Hz.

Two additional runs mimicked the main experiment but also had the participants reach for the target toy. Hand position tracking by the Leap Motion hand tracker allowed free physical movement and ran in parallel with the eye tracking system. The goal was to elucidate potential differences between visual and kinesthetic/haptic/physical search patterns as well as to observe visuo-motor coordination. Figure 2 shows a picture of a participant using the system.

3 Results and Discussion

3.1 Eye Gaze Data: Heatmaps

The raw coordinate gaze data were smoothed and aggregated over time using Gaussian filtering and histogram regional binning to generate heatmaps [10]. The varying colors in these heatmaps represent differing levels of gaze data density across spatial regions of the screen space, with each color being a ratio of point density. Yellow indicates that a participant spent more time looking in that area whereas blue indicates that a participant spent far less time there. There is approximately a 9:1 ratio of point density between yellow and blue. Given that the target location on the screen changed per trial, gaze data had to be offset on an individual trial basis to center the data from all the trials into one central region of interest. Due to this centering correction, the toy box image displayed behind the following heatmap data is merely a representation of a given condition or factor manipulation and not the actual position of the toy for a given trial. Portions of the images that do not show any color indicate a lack of sufficient data points to meet a minimum data capture threshold and are not indicative of a complete lack of gaze points. The data in the heatmaps are representations of hundreds of trials of eye gaze data. A larger overall distribution area and a shift toward lower-density colors in the heatmap regions signify increased visual searching.
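The centering, binning, and smoothing steps can be sketched as follows. This is a minimal standard-library sketch under stated assumptions and not the toolkit from [10]: the function names, the map half-width, the bin size, and the Gaussian width are hypothetical values chosen for illustration, and the minimum data capture threshold and color mapping are left out.

```python
import math


def gaussian_kernel(sigma):
    """1D Gaussian kernel truncated at 3 sigma, normalized to sum to 1."""
    radius = max(1, int(math.ceil(3 * sigma)))
    weights = [math.exp(-(k * k) / (2 * sigma * sigma))
               for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_rows(grid, kernel):
    """Convolve every row with the kernel; cells outside the grid count as zero."""
    radius = len(kernel) // 2
    out = []
    for row in grid:
        n = len(row)
        new_row = []
        for x in range(n):
            acc = 0.0
            for k, w in enumerate(kernel):
                j = x + k - radius
                if 0 <= j < n:
                    acc += w * row[j]
            new_row.append(acc)
        out.append(new_row)
    return out


def transpose(grid):
    return [list(col) for col in zip(*grid)]


def gaze_heatmap(trials, half_width=400, bin_size=20, sigma_bins=1.5):
    """Build a target-centered density map.

    trials: iterable of (samples, (target_x, target_y)), where samples is a
    list of (x, y) gaze points in screen pixels.
    """
    n_bins = (2 * half_width) // bin_size
    grid = [[0.0] * n_bins for _ in range(n_bins)]
    for samples, (tx, ty) in trials:
        for x, y in samples:
            # Offset each sample so the target sits at the center of the map.
            col = int((x - tx + half_width) // bin_size)
            row = int((y - ty + half_width) // bin_size)
            if 0 <= row < n_bins and 0 <= col < n_bins:
                grid[row][col] += 1.0
    kernel = gaussian_kernel(sigma_bins)
    # Separable 2D Gaussian: blur along rows, then along columns.
    blurred = blur_rows(grid, kernel)
    return transpose(blur_rows(transpose(blurred), kernel))
```

With this layout, a tight cluster of gaze points on the target produces a single narrow peak in the middle of the map, while an extended search spreads the same number of samples over many bins.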

Figure 3 contains heatmaps for two categories of unique distractors (low and high) for each of the three participant groups as a visual demonstration of the collected eye tracking gaze data. The control group, regardless of unique distractors, showed a tight clustering of gaze points. The minimal spread of eye data suggests that the control participants found the target quickly on each trial and maintained their focus on that target. In contrast, the ocular visual impaired group (OVI) showed a more scattered search pattern with less defined gaze density regions that was comparable for both levels of unique distractors. The large spread of gaze data reflected decreased performance as compared to the control group, suggesting slower reaction times, less success finding the target, and fewer periods of strong fixation. The CVI group data also reflected an increased area of gaze data, revealing longer search patterns and reduced focus on the target location during trials as compared to controls. For the given task and condition levels, the CVI group fell somewhere in between the sighted and OVI groups. There was also a noticeable loss in definition of the eye gaze data for the CVI group during the high unique distractor trials, as seen by the decrease in high intensity regions (yellow colors) and increase of low density regions (blue colors).

3.2 Eye Gaze Data: Confidence Ellipses

Ellipses of best fit to the eye gaze data further quantified task performance. Each ellipse represented a 95% confidence region for the gaze data, and its area was expressed as a percentage of the viewing screen (see Fig. 4). Both visually impaired groups showed worse performance than the control group at every level of unique distractors. At the highest level, however, the CVI group exhibited the largest change in performance, indicating that these individuals were more affected by the number of unique distractors around the target object. Confidence ellipse area data also suggested that the threshold for impaired performance lies somewhere between the medium and high numbers of unique distractors.
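One common way to obtain such an area is sketched below. The text does not state how the ellipses were fitted, so this sketch assumes a covariance ellipse for approximately bivariate normal gaze data, scaled by the 95% chi-square quantile for two degrees of freedom; the function name is hypothetical, and the default screen size is the 1920 × 1080 monitor listed in the hardware section.

```python
import math

# 95% chi-square quantile for 2 degrees of freedom, about 5.991.
# For 2 dof the CDF is 1 - exp(-x / 2), so the quantile has a closed form.
CHI2_95_2DOF = -2.0 * math.log(0.05)


def ellipse_area_percent(points, screen_w=1920, screen_h=1080,
                         scale=CHI2_95_2DOF):
    """Area of the covariance ellipse as a percentage of the screen area.

    points: list of (x, y) gaze samples in pixels (hypothetical input format).
    """
    n = len(points)
    if n < 2:
        raise ValueError("need at least two gaze samples")
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    # Sample covariance matrix entries.
    sxx = sum((x - mx) ** 2 for x, _ in points) / (n - 1)
    syy = sum((y - my) ** 2 for _, y in points) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in points) / (n - 1)
    # The ellipse semi-axes are sqrt(scale * eigenvalue), so the area is
    # pi * scale * sqrt(det(covariance)).
    det = max(sxx * syy - sxy * sxy, 0.0)
    area_px = math.pi * scale * math.sqrt(det)
    return 100.0 * area_px / (screen_w * screen_h)
```

Under this definition, doubling the spread of the gaze points in both directions quadruples the reported area, which is why a scattered search pattern yields a much larger value than a tight fixation cluster.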

Similar results are displayed for “catch trials”, when the color/theme matched distractor was present. Figure 5 shows the effect on confidence ellipse areas for trials with and without the matched distractor for the three study groups. The control group performance was noticeably better than that of both visually impaired groups and was relatively consistent between trial types. The OVI and CVI groups demonstrated performance changes, but in opposite directions (increased and decreased performance in relation to the presence of the color/theme matched distractor, respectively). The CVI group showed the largest effect, indicating that the presence of the matched distractor was disruptive to their search patterns.

Results for background enabled versus disabled trials are shown in Fig. 6. While the control group was unaffected by a background within the toy box, the OVI and CVI groups both showed improvement in performance. Early participant feedback may help provide an explanation for this difference, as multiple individuals reported that the background helped provide a feeling of greater depth and separation of the toy grid from the box.

3.3 Reaction Times

For each trial, there were two separate reaction time metrics. The first time that the participant’s eyes came into contact with the target toy on the screen was treated as an initial “hit” reaction. A second “fixate” reaction time was determined after the participant’s eyes remained in contact with the target for 300 ms. The “fixate” reaction time was measured separately as an indicator of sustained fixation rather than simply identifying the target at first glance. If a participant was still searching the screen but had not identified the correct toy, passing over the target may be erroneously scored as an extremely fast “hit”. For this reason, the following results detail the “fixate” reaction time data. A graphical representation of reaction time can be found in Fig. 7.
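The two metrics can be sketched from a time-stamped gaze stream as follows. This is a hedged illustration and not the study's scoring code: the function name, the sample format, and the rectangular target region are assumptions, and the sketch reports the “fixate” time as the moment the 300 ms dwell is completed, which is one reading of the description above.

```python
def reaction_times(samples, target_rect, dwell_ms=300.0):
    """Return (hit_ms, fixate_ms) for one trial; either may be None.

    samples: list of (t_ms, x, y) sorted by time, with t = 0 at grid onset.
    target_rect: (left, top, right, bottom) of the target toy in pixels.
    """
    left, top, right, bottom = target_rect
    hit_ms = None
    fixate_ms = None
    dwell_start = None
    for t, x, y in samples:
        on_target = left <= x <= right and top <= y <= bottom
        if on_target:
            if hit_ms is None:
                hit_ms = t  # first contact with the target
            if dwell_start is None:
                dwell_start = t  # start of a new continuous dwell
            if t - dwell_start >= dwell_ms:
                fixate_ms = t  # gaze stayed on target for the full dwell
                break
        else:
            dwell_start = None  # leaving the target resets the dwell
    return hit_ms, fixate_ms
```

A gaze path that merely sweeps across the target yields an early “hit” but no “fixate” until a later sustained dwell, which mirrors the reason given for reporting the “fixate” measure.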

The control group was the quickest to locate and fixate on the target toy for all three levels of unique distractors. Additionally, the CVI group data reflected a discernible effect of increasing difficulty, similar to that observed with the visual search area quantified by the confidence ellipse area (compare with Fig. 4).

Reaction time data for all three groups based on color/theme match and background (results not shown) followed a similar pattern to the unique distractor data. Performance did not change strikingly with manipulation of either factor. However, the group distinctions observed in the previous measures persisted. Controls consistently found the target toy more quickly than both groups with visual impairment, while the OVI group was quicker than the CVI group.

Finally, reaction time for hand tracking data is presented in Fig. 8. These data represent the time it took the participants to reach for and virtually touch the target toy. Controls reached for the target faster than both visually impaired groups and did not display much of an effect of unique distractor level. The CVI reaction time decreased at the highest number of unique distractors. During debriefing, multiple participants noted that they were using the virtual hand as a reference to help them search the area when the trials became increasingly difficult. This may translate to a real-world training strategy, where the individual uses their physical hand to help parse a complex visual scene. Overall, hand reaction times were slower than the “fixate” reaction times from the eye tracking data for both visually impaired groups. This was expected given the physical time required to execute the reaching movements.

4 Conclusions and Future Work

The present work demonstrates the successful development of a VR testing environment designed to assess and compare object visual search in both cerebral and ocular based types of visual impairment.

The design and development process directly involved input from experts in the field to create a novel assessment method that goes beyond what is typically evaluated in the standard clinical setting. Results thus far are consistent with what we learned from our focus group study, namely that individuals with visual impairment have difficulties identifying familiar objects when placed in a cluttered and complex environment. Furthermore, difficulties were related to the type of visual impairment. Specifically, performance of the group with cortical/cerebral visual impairment (CVI) worsened at higher levels of task difficulty, whereas performance of the group with ocular visual impairment (OVI) was more stable across task difficulty. Overall, individuals with CVI also took longer to find target objects than both control and OVI participants when task difficulty was highest.

It is important to consider that there is currently no cure for CVI, as the visual deficits are due to early developmental brain damage. Therefore, work such as that outlined in the current study is paramount to understanding the underlying cognitive and behavioral mechanisms associated with this type of visual impairment. Further, this same approach may serve as a platform for the development of novel training and rehabilitation techniques. The visuo-motor coordination deficits associated with CVI further the demand for combined eye and hand tracking data collection. Combining VR with eye and hand tracking technologies can provide an entirely novel and more realistic assessment and training toolkit for the clinical and behavioral sciences.

Future work will continue to collect data to validate currently observed behavioral trends while exploring other means of data collection and technologies (e.g. combined electroencephalogram (EEG) recordings and immersive visual presentation with head mounted displays). The end goal is to characterize the behavioral and neurological underpinnings related to visual spatial processing and search in individuals with visual impairment. Compensatory training and simulation strategies may emerge from the foundational observations developed from this research project.

References

1. Loomis, J.M., Blascovich, J.J., Beall, A.C.: Immersive virtual environment technology as a basic research tool in psychology. Behav. Res. Methods Instrum. Comput. 31(4), 557–564 (1999)

2. Zyda, M.: From visual simulation to virtual reality to games. Computer 38(9), 25–32 (2005)

3. Bennett, C.R., Corey, R.R., Giudice, U., Giudice, N.A.: Immersive virtual reality simulation as a tool for aging and driving research. In: Zhou, J., Salvendy, G. (eds.) ITAP 2016. LNCS, vol. 9755, pp. 377–385. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-39949-2_36

4. Richardson, A.E., Montello, D.R., Hegarty, M.: Spatial knowledge acquisition from maps and from navigation in real and virtual environments. Mem. Cogn. 27(4), 741–750 (1999)

5. Kuliga, S.F., et al.: Virtual reality as an empirical research tool—exploring user experience in a real building and a corresponding virtual model. Comput. Environ. Urban Syst. 54, 363–375 (2015)

6. Parsons, T.D., et al.: Virtual reality in paediatric rehabilitation: a review. Dev. Neurorehab. 12(4), 224–238 (2009)

7. Merabet, L.B., et al.: Disentangling how the brain is “wired” in cortical/cerebral visual impairment (CVI). Semin. Pediatr. Neurol. (2017)

8. Martín, M.B., et al.: Cerebral versus ocular visual impairment: the impact on developmental neuroplasticity. Front. Psychol. 7, 1958 (2016)

9. Kong, L., et al.: An update on progress and the changing epidemiology of causes of childhood blindness worldwide. J. Am. Assoc. Pediatr. Ophthalmol. Strabismus 16(6), 501–507 (2012)

10. Gibaldi, A., et al.: Evaluation of the Tobii EyeX Eye tracking controller and Matlab toolkit for research. Behav. Res. Methods 49(3), 923–946 (2017)

Acknowledgements

This work was supported by the Research to Prevent Blindness/Lions Clubs International Foundation and the National Institutes of Health (R01 EY019924-08) to L.B.M.

Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

Cite this paper

Bennett, C.R., Bailin, E.S., Gottlieb, T.K., Bauer, C.M., Bex, P.J., Merabet, L.B. (2018). Virtual Reality Based Assessment of Static Object Visual Search in Ocular Compared to Cerebral Visual Impairment. In: Antona, M., Stephanidis, C. (eds) Universal Access in Human-Computer Interaction. Virtual, Augmented, and Intelligent Environments . UAHCI 2018. Lecture Notes in Computer Science(), vol 10908. Springer, Cham. https://doi.org/10.1007/978-3-319-92052-8_3

DOI: https://doi.org/10.1007/978-3-319-92052-8_3

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-92051-1

Online ISBN: 978-3-319-92052-8

eBook Packages: Computer Science, Computer Science (R0)