Abstract

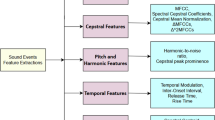

In this paper, we propose a method for recognizing complex activities using audio sensors and machine learning techniques. The method looks for patterns of combined monophonic sounds that characterize a complex activity. It relies only on audio sensors and on machine learning techniques such as Deep Neural Networks (DNN) and Support Vector Machines (SVM). We also develop a novel framework that supports the overall procedure. Implementations of this framework can help improve the quality of life of elderly people.
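To make the idea of recognizing a complex activity from a pattern of combined monophonic sounds concrete, the following is a minimal sketch and not the framework described in the paper. It assumes that monophonic sound events have already been detected in an audio window, encodes them as a multi-hot vector, and assigns the window to the nearest activity centroid. The nearest-centroid rule is a deliberately simple stand-in for the DNN and SVM classifiers named above, and the event names, activity labels, and training samples are all hypothetical.

```python
from collections import Counter

# Hypothetical vocabulary of monophonic sound events (not taken from the paper).
EVENTS = ["water_running", "dish_clatter", "kettle_boil", "tv_speech", "footsteps"]


def encode(detected):
    """Multi-hot vector: 1.0 where the event was detected in the window."""
    present = set(detected)
    return [1.0 if event in present else 0.0 for event in EVENTS]


def fit_centroids(samples):
    """Average the multi-hot vectors of each activity label."""
    sums, counts = {}, Counter()
    for detected, label in samples:
        acc = sums.setdefault(label, [0.0] * len(EVENTS))
        for i, value in enumerate(encode(detected)):
            acc[i] += value
        counts[label] += 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def predict(centroids, detected):
    """Return the activity whose centroid is closest (squared Euclidean distance)."""
    vec = encode(detected)

    def dist(label):
        return sum((a - b) ** 2 for a, b in zip(vec, centroids[label]))

    # Sorting the labels makes tie-breaking deterministic.
    return min(sorted(centroids), key=dist)


# Hypothetical training windows: (detected sound events, complex activity).
TRAIN = [
    (["water_running", "dish_clatter"], "washing_dishes"),
    (["water_running", "dish_clatter", "footsteps"], "washing_dishes"),
    (["kettle_boil", "water_running"], "making_tea"),
    (["kettle_boil", "footsteps"], "making_tea"),
    (["tv_speech"], "watching_tv"),
    (["tv_speech", "footsteps"], "watching_tv"),
]

centroids = fit_centroids(TRAIN)
print(predict(centroids, ["dish_clatter", "water_running"]))
```

In a fuller pipeline, the multi-hot vectors would come from a polyphonic sound event detector run on the audio stream, and the centroid rule would be replaced by a trained SVM or DNN.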

Acknowledgements

This research was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF), funded by the Ministry of Education, Science and Technology (NRF-2016 R1D1A1B03932110).

Copyright information

© 2019 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Kang, J., Kim, J., Kim, K., Sohn, M. (2019). Complex Activity Recognition Using Polyphonic Sound Event Detection. In: Barolli, L., Xhafa, F., Javaid, N., Enokido, T. (eds) Innovative Mobile and Internet Services in Ubiquitous Computing. IMIS 2018. Advances in Intelligent Systems and Computing, vol 773. Springer, Cham. https://doi.org/10.1007/978-3-319-93554-6_66

DOI: https://doi.org/10.1007/978-3-319-93554-6_66

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-93553-9

Online ISBN: 978-3-319-93554-6

eBook Packages: Intelligent Technologies and Robotics (R0)