Abstract

Time series classification (TSC) is a long-standing machine learning problem, with algorithms proposed across many fields. Past approaches include whole-series, interval, shapelet, dictionary-based, and model-based methods. Deep learning approaches, in turn, try to carry the success of neural network (NN) architectures in image classification over to TSC. Deep learning typically requires vast amounts of training data and computational power to produce meaningful results. But what if there were a network inspired not by the biological brain but by mathematics proven in theory? Better yet, what if that network were less computationally expensive than deep learning networks, which can have billions of parameters and need large amounts of training data? The Shepard Interpolation Neural Network (SINN) provides exactly this: a shallow learning approach that needs few training samples and is founded on a statistical interpolation technique. These networks learn metric features that can be explained and understood mathematically. In this paper, we apply the novel SINN architecture to a popular benchmark TSC data set, achieving state-of-the-art accuracy on several of its test sets while remaining competitive with other established algorithms. We also demonstrate that, even when training data is scarce, the SINN outperforms other deep learning algorithms.

Keywords

- Shepard Interpolation

- Time Series Classification (TSC)

- Shallow Learning

- Minimal Training Sample

- Shapelets

1 Introduction

Time series data is among the most practical sources of information for understanding the world around us. Financial markets, ocean tides, astronomical events, communications, supply chain purchasing, hurricanes, and earthquakes all produce time series data that affect our day-to-day lives. Understanding these events (and others) is a crucial step in making sense of a chaotic world observed over time. Time series classification (TSC) differs from ordinary classification problems because the data carry an additional chronological ordering. This ordering makes simple classification techniques, such as a basic nearest neighbors algorithm, perform poorly. More sophisticated machine learning techniques, which give computers a mathematical means of finding patterns in data that humans cannot readily see, can achieve better results on TSC. Machine learning has enjoyed extraordinary popularity in computer science over the last decade because of its accomplishments.

Different machine learning algorithms have been applied to TSC, each showing strengths on particular data sets and weaknesses on others. This is due to the complex nature of time series data: some algorithms are primed for a specific kind of complexity but fail on simpler data. One family of algorithms that attempts to excel on all data is neural networks (NNs), particularly in deep learning frameworks [6]. NNs are biologically inspired by the brain. Deep learning stacks multiple layers of neurons in succession and trains them with backpropagation, loosely simulating how neurons fire in a brain and reinforce the connections involved in remembering, or learning. Deep learning struggles in two areas: first, the amount of data needed to train the algorithm to achieve successful results; and second, the amount of computational power needed to train on all that data. The latter has been eased by today's high performance computing hardware and graphics processing units (GPUs), but this does not address the need for a minimum amount of training data, which cannot be fixed if the data simply does not exist.

In this paper, we explore a shallow learning method that addresses the above issues. The easily trained and computationally efficient Shepard Interpolation Neural Network (SINN) is tested on the University of California, Riverside (UCR) time series classification and clustering repository against several state-of-the-art algorithms [2, 16]. Our proposed algorithm establishes higher benchmark accuracy on several of the data sets and is highly competitive with the leading TSC algorithms across the entire repository, with a mean error rate of 18.8%. In addition to accuracy, the SINN is simpler than other TSC algorithms, specifically deep learning NNs, and achieves high accuracy on data sets that contain fewer training samples than testing samples.

The remainder of this paper is organized as follows. First, we briefly review Shepard interpolation and its uses in machine learning. Second, we describe the mathematical structure of the SINN. Third, we present experimental results on the UCR data set against competing algorithms. Finally, we conclude with our contribution and future work.

2 Related Works

Shepard interpolation is a form of inverse distance weighting interpolation [14]. The method relies on a distance metric that describes how far the known data points are from the point being estimated. The interpolation function depends on the inverse weighting function

\[ w_{i}(x) = \frac{1}{d(x,x_{i})^{p}} \qquad (1) \]

where \(d(x,x_{i})\) is some distance function. Shepard interpolation is defined as

\[ u(x) = \frac{\sum_{i=1}^{n} w_{i}(x)\,u_{i}}{\sum_{i=1}^{n} w_{i}(x)} \qquad (2) \]

The exponent p is typically set to two in Shepard’s method, representing something similar to the Euclidean distance. The known value at a point \(x_{i}\) is denoted \(u_{i}\), while the interpolated value at an unknown point is u(x). The algorithm is simple to implement and has very few parameters that need tuning. It works on data in N dimensions, can interpolate scattered data, and became well known for its ability to handle data on any grid. It is also a versatile and quick interpolation method. It falters, however, when the data set is large, since each evaluation is O(n) (where n is the number of samples), and, being a global method, it overvalues outliers when fitting new points.
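The weighted average described above can be sketched in a few lines of plain Python. This is a minimal illustration and not the authors' implementation: the function name `shepard_interpolate`, the choice of Euclidean distance for \(d(x,x_{i})\), and the toy points are all assumptions made for the example.

```python
import math

def shepard_interpolate(x, known_points, known_values, p=2.0):
    """Estimate u(x) as an inverse-distance-weighted average of known values."""
    weights = []
    for x_i, u_i in zip(known_points, known_values):
        # Euclidean distance is one possible choice of d(x, x_i) (assumption).
        d = math.dist(x, x_i)
        if d == 0.0:
            # x coincides with a known point, so return its value exactly.
            return u_i
        weights.append(1.0 / d ** p)
    total = sum(weights)
    return sum(w * u for w, u in zip(weights, known_values)) / total

# Toy data: three known points in the plane with known values.
points = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
values = [0.0, 1.0, 1.0]

# The query point is equidistant from all three known points,
# so the estimate is the plain average of the three values.
estimate = shepard_interpolate((0.5, 0.5), points, values)
```

Every query loops over all known points, which is the O(n) cost per evaluation noted above.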

A major drawback of classic Shepard interpolation is that all known data points share the same distance function, which is symmetric across all dimensions. For real-world data this assumption rarely holds. For example, consider binary classification of images. The images can be represented as vectors of pixel values, each with an associated value of 1 or 0 for true or false in the classification task. Changing a pixel in the corner has a different impact than changing a pixel in the center of the image, and the same change in pixel value at the same position might not represent an equivalent change when applied to two vastly different images.

Because it works so well on grids, Shepard interpolation has mainly been applied in machine learning to image classification. For instance, Shepard interpolation was used in a convolutional neural network [12], where a Shepard interpolation layer augments architectures used for low-level image processing tasks. Park and Sandberg describe a Radial Basis Function Network (RBFN) that functions in much the same manner as the SINN, in that both are based on exact interpolation and use a single hidden layer [11]. The SINN architecture departs from RBFNs in its implementation as well as in a few characteristics of the activation functions used. For example, RBFNs require the activation function to be radially symmetric, while SINNs place no such constraint on the metric and Shepard activation functions. RBFNs rely on exact interpolation, which honors the data, while the SINN has more flexibility in inferring new data points in the feature space. One advantage RBFNs have over our proposed method is their activation functions, which are non-linear kernels that give some non-linearity to the classification boundaries; the SINN activation functions do not possess this ability. Our proposed algorithm’s architecture has been developed only very recently; as such, there are no other published attempts at improving this shallow learning approach and no attempts at using it for TSC. It has been used in image classification, both in the original framework of raw image classification and with deep features for image classification [7, 16]. The architecture is reviewed in more detail in Sect. 3.

3 Shepard Interpolation Neural Networks

By exploiting the Shepard interpolation method, the architecture of the network can be designed rather than found through exploration, which requires very little hyperparameter tuning and provides increased efficiency. To elaborate, several data points are selected from each output class and are used to deterministically initialize the weights of the two layers. The selection method for these data points has a large impact on the initial performance of the model as well as on the final classification accuracy after training.
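As a rough illustration of the selection step, the sketch below picks a fixed number of known data points per class. The text does not state the selection rule used, so taking the first samples encountered for each class is purely a stand-in assumption, and `select_prototypes` is a hypothetical helper name.

```python
from collections import defaultdict

def select_prototypes(samples, labels, per_class=1):
    """Pick up to `per_class` known data points for every output class.

    The rule used here (first samples encountered) is only a placeholder;
    any other rule could be swapped in.
    """
    by_class = defaultdict(list)
    for sample, label in zip(samples, labels):
        if len(by_class[label]) < per_class:
            by_class[label].append(sample)
    return dict(by_class)

# Toy training set: four short series, two classes.
train_x = [[0.1, 0.2], [0.2, 0.1], [0.9, 1.0], [1.0, 0.8]]
train_y = [0, 0, 1, 1]
prototypes = select_prototypes(train_x, train_y, per_class=1)
```

Because the chosen points initialize the network weights, a different rule here would change the starting point of training.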

By substituting the distance function in Eq. 1 with a function that operates on vectors, the singular point x can be replaced by a vector \(\varvec{x}\). Intuitively, Shepard interpolation can calculate the value of a surface defined by the known data points at an unknown point by taking a weighted average of the known points. These weights are determined by the distance between known and unknown points.

The neural network component of the SINN is built around two types of neurons: metric neurons and Shepard neurons. A single metric neuron encodes a known data point from the training set as well as a distance function unique to that data point. This per-point distance function addresses the drawback of classic Shepard interpolation discussed in Sect. 2. The Shepard neuron encodes the values of the surface at all known data points for a single output function, as well as the local curvature of the surface around each known data point.

SINN have different activation functions than other neural networks or RBFNs. For the metric node, the activation function is defined as

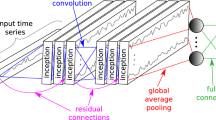

A single metric node is a one-to-one function; at a high level, it is a distance function between the input x and some known point, parameterized by \(\alpha ,\,b\) and w along a single dimension. A collection of metric neurons can calculate the distance between a given vector and a known vector. Since this paper focuses on time series data, this can be seen as the distance from one time series vector to another (or between any two one-dimensional vectors). To encode n features against m known values, the SINN needs \(n \times m\) metric neurons. For example, if a time series data set has three classes and each sample has a length of 100 time steps, then encoding the entire data set requires 100 × 3 = 300 metric neurons. These metric neurons then “belong” to inverse neurons, as shown in Fig. 1: the input consists of the n features being encoded, and m metric neurons are assigned to each input node.

For some input vector \(\varvec{x}=(x_{1},x_{2},\ldots ,x_{n})\), the inverse distance weighting activation function is defined as

Given a vector of Shepard node weights, \(\varvec{u}=(u_{1},u_{2},\ldots ,u_{n})\), the Shepard activation function is

In this formulation, the normalization layer is combined with the Shepard layer; Fig. 1 shows the two layers separately for high-level understanding. In practice, the term \(\frac{w_{i}(\varvec{x})}{\sum w(\varvec{x})}\) is the normalization part of the layer. The Shepard neuron, on the other hand, combines all the weights into a single prediction. It combines the metrics for each of the n features and exponentiates them, yielding a multi-dimensional distance function; the neuron then combines the m multi-dimensional distance values into a single prediction. By allowing the neurons to learn distance functions that are non-symmetric across the dimensions (and unique across the metric neurons), the network can learn the relative importance of features given their values. This allows for more intelligent segmentation of the feature space for classification, owing to the additional inflection points interpolated by the preceding layers. It also gives rise to the shallow learning ability of the SINN, where little training data is needed to perform well on classification tasks. These inflection points can serve as pseudo feature points (or inferred data points), which allow the algorithm to learn discriminatory boundaries more accurately than learning on the original training data alone. Shepard neurons can also learn the local curvature of the surface; this is achieved by tuning the p parameter in Eq. 4, which allows for curve fitting like that seen in a polynomial regression problem [16].
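The flow from metric neurons through inverse, normalization, and Shepard neurons can be sketched as a single forward pass. This is a hedged illustration only. The per-dimension metric \(\alpha (w x + b)^{2}\), the placement of the exponent p, the small constant `eps`, the one-hot Shepard values, and all function names are assumptions introduced for the example; they are not taken from the paper's equations.

```python
def init_metric_params(prototypes):
    """One (alpha, w, b) triple per feature per prototype: n * m metric neurons.

    With alpha=1, w=1, b=-c_j the assumed metric starts as (x_j - c_j)^2.
    """
    return [[(1.0, 1.0, -c_j) for c_j in proto] for proto in prototypes]

def metric(x_j, alpha, w, b):
    # Hypothetical per-dimension metric (an assumption, not the paper's formula).
    return alpha * (w * x_j + b) ** 2

def sinn_forward(x, metric_params, shepard_values, p=1.0, eps=1e-12):
    """Return one score per output class for the input series x."""
    inverse = []
    for neuron_params in metric_params:
        # Sum the per-feature metrics into one multi-dimensional distance.
        d = sum(metric(x_j, a, w, b) for x_j, (a, w, b) in zip(x, neuron_params))
        # Inverse distance weight; eps avoids division by zero (assumption).
        inverse.append(1.0 / (d ** p + eps))
    total = sum(inverse)
    normalized = [v / total for v in inverse]  # normalization step
    # Each Shepard neuron takes a weighted average of its known values.
    return [sum(u * w for u, w in zip(row, normalized)) for row in shepard_values]

# Three known series (one per class), each with three time steps.
prototypes = [[0.0, 0.0, 0.0], [1.0, 1.0, 1.0], [2.0, 2.0, 2.0]]
metric_params = init_metric_params(prototypes)
# Row k holds the value of output k at each known point (one-hot here).
shepard_values = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

scores = sinn_forward([0.9, 1.1, 1.0], metric_params, shepard_values)
predicted_class = scores.index(max(scores))
```

In a trained network the `(alpha, w, b)` triples, the Shepard values, and p would be adjusted by gradient descent; here they stay at their initial values to keep the example short.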

4 Experimental Results

Our algorithm was tested on the time series data set from the University of California, Riverside (UCR). This data set contains multiple test sets, some multi-class and some binary. Most contain more training data than testing data, as is typical, but some contain less training data than testing data.

Nine other state-of-the-art algorithms spanning different fields are compared with the SINN: Baydogan et al.’s time series bag of features (TSBF), Schäfer’s 1-NN Bag of SFA Symbols (BOSS), Lines and Bagnall’s elastic ensemble (PROP), Cetin et al.’s shapelet ensemble (SEI), Bagnall et al.’s flat-COTE (COTE), Nanopoulos et al.’s multi-layer perceptron (MLP), Wang et al.’s fully convolutional neural network (FCN), Karim et al.’s Long Short-Term Memory network (LSTM), and the benchmark, Berndt and Clifford’s dynamic time warping (DTW) [1, 3–5, 8–10, 13, 15]. The above approaches (omitting MLP, FCN, and LSTM) rely heavily on algorithm-specific feature extraction or data preprocessing. This introduces a discrepancy in the accuracy results for these algorithms. Among the non-neural algorithms, COTE is worth noting: it is a collective vote of 35 different classifiers.

4.1 Experimental Settings

In our experiments, no preprocessing is applied to the data; the raw data is fed directly into the SINN. The only parameter to set is the number of nodes in the hidden layer. We set the number of hidden layer Shepard neurons to m (the number of classes in the data set). This means, from Fig. 1, that there are also m inverse nodes, each of which owns m metric nodes for encoding. The SINN was coded in Keras, and the experiments were run on a Dell Inspiron desktop with an Intel Core i5 processor and an Nvidia Titan X graphics processing unit.

4.2 Result

Each test of the SINN was run 100 times and the results were averaged. Table 1 presents the results of the SINN against the other leading TSC algorithms, and Fig. 2 shows them visually. Our proposed algorithm shows strong results, with a mean error rate of 18.8% over all 49 test sets. This beats the benchmark algorithm, DTW, as well as three other algorithms. On individual test sets, the SINN achieved new benchmark performance on four: Coffee, ItalyPower, ProximalPhalanxTW, and UWaveX. These test sets span a wide variety of time series collections. Coffee, MiddlePhalanxOutlineAgeGroup, and ProximalPhalanx are all test sets that contain gridded input (spectrograms and images). Given how well Shepard interpolation performs on data that lies on a grid, this may explain why these test sets yielded such strong results.

COTE, being a combination of many different classifiers with a voting scheme, performs well on the UCR data set. The SINN outperforms COTE on 17 test sets spanning image, device, sensor, motion, and spectrogram data, showing the strength of our algorithm against an ensemble algorithm. The SINN architecture follows the neural network framework closely, and when its results are compared with the other three neural network algorithms, LSTM outperforms all the other techniques. This reinforces the strength of deep learning techniques in time series classification and possibly stems from their success on the image-based time series discussed above.

Our shallow learning approach excels when the training data is significantly smaller than the testing data. To explore this further, we took several of the UCR data sets and reduced the number of training samples substantially. We downsized the training data by 50% and by 75% and retrained our algorithm on the smaller training sets. The results can be seen in Table 2. Note that we tried to keep the same number of samples per class so as not to skew the training results. After retraining, we tested our method on the same testing data set which was used and reported previously in Table 2. Our algorithm performs well considering the reduction in training data, with an average loss of 7% when the training data is halved and 14% when it is reduced by 75%. This shows the ability of shallow learning to create better decision boundaries during training rather than relying on the many layers of typical deep learning methods. It should be noted, though, that the training of the SINN is only as good as the features of the training data. If little data exists but the data is separable in the time domain or feature space, then the SINN can create a relatively good decision boundary for classification. If these features are not well distinguishable to begin with, then the performance of the SINN will suffer. One reason our proposed method performs well when little training data exists is its foundation in Shepard interpolation, whose ability to create and infer new points in the feature space strengthens our performance on TSC.
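The class-balanced reduction of the training set can be sketched as follows. This is an illustrative reconstruction, not the authors' script: the helper name `downsample_per_class`, the random choice within each class, and the fixed seed are assumptions, and the sketch keeps the same fraction of every class rather than a fixed count.

```python
import random
from collections import defaultdict

def downsample_per_class(samples, labels, keep_fraction, seed=0):
    """Keep roughly `keep_fraction` of the samples of every class."""
    rng = random.Random(seed)  # fixed seed so the subset is reproducible
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    kept = []
    for indices in by_class.values():
        # Always keep at least one sample so no class disappears.
        n_keep = max(1, round(len(indices) * keep_fraction))
        kept.extend(rng.sample(indices, n_keep))
    kept.sort()  # preserve the original sample order
    return [samples[i] for i in kept], [labels[i] for i in kept]

# Toy training set: 16 samples, two classes of 8.
train_x = list(range(16))
train_y = [0] * 8 + [1] * 8

half_x, half_y = downsample_per_class(train_x, train_y, keep_fraction=0.5)
quarter_x, quarter_y = downsample_per_class(train_x, train_y, keep_fraction=0.25)
```

Here `keep_fraction=0.5` corresponds to the 50% reduction and `keep_fraction=0.25` to the 75% reduction; the test set is left untouched in both cases.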

5 Conclusion

Time series classification is a difficult machine learning task, not only for algorithms designed specifically for time series data, but also for neurologically inspired classification algorithms. In this paper, a novel approach that combines a mathematical foundation with a neurologically inspired architecture is applied to TSC. Our proposed algorithm proves effective on time series data, achieving new benchmark results on several test sets and maintaining strong results when the training data is cut down. The SINN can be understood mathematically as learning the curvature and distances of the surface in the feature space.

Going forward, several things could be examined to make the SINN better at TSC. As mentioned before, a wider network, or a network that stacks a CNN with a SINN, could be of great benefit. With this in mind, a feature-based input would also be interesting to explore, using time-series-specific features such as wavelets or Fourier representations. The way the data is initialized in the Shepard neurons also needs improvement. An unsupervised clustering algorithm might be able to group points together to initialize the neurons, or the centroid of a cluster could be used. Such clustering algorithms might increase computation time but may improve the results on the test sets. Variants of the SINN that use different distance functions could also be explored.

The SINN has established state-of-the-art results with a new, mathematically grounded, neurally inspired network. This architecture, paired with its theoretical foundation, has the potential to become a valuable asset to the machine learning community working on time series classification.

References

1. Bagnall, A., Lines, J., Hills, J., Bostrom, A.: Time-series classification with COTE: the collective of transformation-based ensembles. IEEE Trans. Knowl. Data Eng. 27(9), 2522–2535 (2015)

2. Bagnall, A.: The UEA & UCR time series classification repository. www.timeseriesclassification.com

3. Baydogan, M.G., Runger, G., Tuv, E.: A bag-of-features framework to classify time series. IEEE Trans. Pattern Anal. Mach. Intell. 35(11), 2796–2802 (2013)

4. Berndt, D.J., Clifford, J.: Using dynamic time warping to find patterns in time series. In: KDD Workshop, vol. 10, pp. 359–370, Seattle, WA (1994)

5. Cetin, M.S., Mueen, A., Calhoun, V.D.: Shapelet ensemble for multi-dimensional time series. In: Proceedings of the 2015 SIAM International Conference on Data Mining, pp. 307–315. SIAM (2015)

6. Funahashi, K.-I.: On the approximate realization of continuous mappings by neural networks. Neural Netw. 2(3), 183–192 (1989)

7. Williams, P., Smith, K.E.: Deep convolutional-Shepard interpolation neural networks for image classification tasks. In: International Conference Image Analysis Recognition. Springer, Cham (2018)

8. Karim, F., Majumdar, S., Darabi, H., Chen, S.: LSTM fully convolutional networks for time series classification. arXiv preprint arXiv:1709.05206 (2017)

9. Lines, J., Bagnall, A.: Time series classification with ensembles of elastic distance measures. Data Min. Knowl. Discovery 29(3), 565–592 (2015)

10. Nanopoulos, A., Alcock, R., Manolopoulos, Y.: Feature-based classification of time-series data. Int. J. Comput. Res. 10(3), 49–61 (2001)

11. Park, J., Sandberg, I.W.: Universal approximation using radial-basis-function networks. Neural Comput. 3(2), 246–257 (1991)

12. Ren, J.S.J., Xu, L., Yan, Q., Sun, W.: Shepard convolutional neural networks. In: Advances in Neural Information Processing Systems, pp. 901–909 (2015)

13. Schäfer, P.: The BOSS is concerned with time series classification in the presence of noise. Data Min. Knowl. Discovery 29(6), 1505–1530 (2015)

14. Shepard, D.: A two-dimensional interpolation function for irregularly-spaced data. In: Proceedings of the 1968 23rd ACM National Conference, pp. 517–524. ACM (1968)

15. Wang, Z., Yan, W., Oates, T.: Time series classification from scratch with deep neural networks: a strong baseline. In: 2017 International Joint Conference on Neural Networks (IJCNN), pp. 1578–1585. IEEE (2017)

16. Williams, P.: SINN: Shepard interpolation neural networks. In: Bebis, G., et al. (eds.) ISVC 2016. LNCS, vol. 10073, pp. 349–358. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-50832-0_34

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Smith, K.E., Williams, P. (2018). Time Series Classification with Shallow Learning Shepard Interpolation Neural Networks. In: Mansouri, A., El Moataz, A., Nouboud, F., Mammass, D. (eds) Image and Signal Processing. ICISP 2018. Lecture Notes in Computer Science(), vol 10884. Springer, Cham. https://doi.org/10.1007/978-3-319-94211-7_36

DOI: https://doi.org/10.1007/978-3-319-94211-7_36

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-94210-0

Online ISBN: 978-3-319-94211-7

eBook Packages: Computer Science (R0)