Abstract

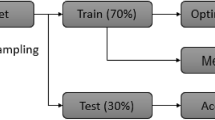

Optimizing hyper-parameters and identifying the learning algorithm best suited to a particular dataset can be exceedingly difficult. Researchers have developed automated methods for selecting an algorithm and its associated hyper-parameters; however, these methods do not necessarily transfer to other datasets. In this paper, we present a method that selects a learning algorithm and sets its hyper-parameters simultaneously in a two-stage process: (1) identification of the important hyper-parameters to streamline the optimization process, and (2) a heuristic formulation based on sequence analysis that limits the tuning time and identifies the best algorithm/hyper-parameter combination. The proposed method greatly reduces training time without a significant loss of performance in classification tasks.
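To make the two-stage idea concrete, the following is a minimal sketch under stated assumptions, not the method of the paper. Stage 1 is approximated by a main-effect variance share computed on a small pilot grid, and stage 2 by a random search that stops after a fixed number of trials without improvement; this simple patience rule stands in for the sequence-analysis heuristic, whose details are not given in the abstract. The search space `SPACE`, the scoring function `toy_score`, and all thresholds are hypothetical.

```python
import math
import random
from itertools import product
from statistics import mean, pvariance

# Hypothetical search space; "seed_offset" is deliberately irrelevant.
SPACE = {
    "learning_rate": [0.001, 0.01, 0.1, 1.0],
    "depth": [2, 4, 8, 16],
    "seed_offset": [0, 1, 2, 3],
}


def toy_score(cfg):
    """Stand-in for a cross-validated accuracy (an assumption, not real data)."""
    lr_term = -0.1 * abs(math.log10(cfg["learning_rate"]) + 2)  # best at 0.01
    depth_term = -0.01 * abs(cfg["depth"] - 8)                  # best at 8
    return 0.9 + lr_term + depth_term


def importance(space, score_fn):
    """Stage 1: share of score variance explained by each main effect."""
    names = list(space)
    configs = [dict(zip(names, values)) for values in product(*space.values())]
    scores = [score_fn(c) for c in configs]
    total = pvariance(scores)
    shares = {}
    for name in names:
        # Mean score at each level of this hyper-parameter, averaged over the rest.
        level_means = [
            mean(s for c, s in zip(configs, scores) if c[name] == level)
            for level in space[name]
        ]
        shares[name] = pvariance(level_means) / total if total > 0 else 0.0
    return shares


def search(space, score_fn, keep, defaults, patience=10, max_trials=200, seed=0):
    """Stage 2: random search over `keep`, stopped after `patience` stale trials."""
    rng = random.Random(seed)
    best_cfg, best_score, stale, trials = None, -math.inf, 0, 0
    while trials < max_trials and stale < patience:
        cfg = dict(defaults)
        cfg.update({name: rng.choice(space[name]) for name in keep})
        score = score_fn(cfg)
        trials += 1
        if score > best_score:
            best_cfg, best_score, stale = cfg, score, 0
        else:
            stale += 1
    return best_cfg, best_score, trials


shares = importance(SPACE, toy_score)
keep = [name for name, share in shares.items() if share >= 0.05]
defaults = {name: values[0] for name, values in SPACE.items()}
best_cfg, best_score, trials = search(SPACE, toy_score, keep, defaults)
print(shares, keep, best_cfg, best_score, trials)
```

In this sketch the irrelevant hyper-parameter is held at its default, so the search in stage 2 explores a smaller space. The choice of learning algorithm could be treated as one more categorical entry in the space, although whether the paper does so is not stated in the abstract.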

Acknowledgments

We are very grateful to the anonymous reviewers and the editor for their detailed comments and suggestions, which helped to improve the paper. This work was sponsored by the Ministry of Economic Affairs, Taiwan, R.O.C., through project No. G301ARY910 conducted by ITRI.

Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Wu, MS., Lu, JY. (2018). Automated Machine Learning Algorithm Mining for Classification Problem. In: Perner, P. (eds) Machine Learning and Data Mining in Pattern Recognition. MLDM 2018. Lecture Notes in Computer Science, vol 10934. Springer, Cham. https://doi.org/10.1007/978-3-319-96136-1_30

DOI: https://doi.org/10.1007/978-3-319-96136-1_30

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-96135-4

Online ISBN: 978-3-319-96136-1

eBook Packages: Computer Science, Computer Science (R0)