Abstract

Automated essay scoring (AES) aims to rate essays automatically using machine learning and natural language processing techniques, with the goal of substantially reducing the manual effort involved. Given a target prompt and a set of essays written for it, established AES algorithms are mostly prompt-dependent: they rely heavily on labeled essays for that particular prompt as training data, which makes the availability and completeness of the labeled essays essential to model performance. With this in mind, this paper investigates the impact of data sparsity on the effectiveness of several state-of-the-art AES models. Specifically, on the publicly available ASAP dataset, the effectiveness of different AES algorithms is compared across different levels of data completeness, which are simulated with random sampling. We show that the classical RankSVM and KNN models are more robust to data sparsity than the end-to-end deep neural network models, but that the latter achieve better performance once trained on sufficient data.
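The random-sampling simulation of data completeness mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not give the sampling levels, the seed handling, or the data layout, so the function name `subsample`, the fractions, and the toy `(essay_text, score)` pairs below are all assumptions made for illustration, not the authors' setup.

```python
import random


def subsample(essays, fraction, seed=0):
    """Return a random subset holding roughly `fraction` of the labeled essays.

    Hypothetical helper: simulates a given level of data completeness by
    sampling without replacement from the full labeled training set.
    """
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    rng = random.Random(seed)  # fixed seed keeps each level reproducible
    k = max(1, round(len(essays) * fraction))
    return rng.sample(essays, k)


# Toy stand-in for labeled essays of one prompt: (essay_text, score) pairs.
labeled = [(f"essay {i}", i % 4) for i in range(100)]

# Assumed completeness levels; the paper's actual levels are not stated here.
for fraction in (0.1, 0.25, 0.5, 1.0):
    train = subsample(labeled, fraction)
    # A model (e.g. RankSVM, KNN, or a neural scorer) would be trained on
    # `train` and evaluated on a fixed held-out set at this point.
    print(fraction, len(train))
```

Keeping the held-out evaluation set fixed while only the training subset shrinks is one way to make scores comparable across completeness levels; repeating each level with several seeds would reduce the variance introduced by sampling.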


References

Alikaniotis, D., Yannakoudakis, H., Rei, M.: Automatic text scoring using neural networks. In: ACL (1). The Association for Computer Linguistics (2016)

Altman, N.S.: An introduction to kernel and nearest-neighbor nonparametric regression. Am. Stat. 46(3), 175–185 (1992)

Attali, Y., Burstein, J.: Automated essay scoring with e-rater® v. 2. J. Technol. Learn. Assess. 4(3), 1–31 (2006)

Chen, H., He, B.: Automated essay scoring by maximizing human-machine agreement. In: EMNLP, pp. 1741–1752. ACL (2013)

Chen, H., Jungang, X., He, B.: Automated essay scoring by capturing relative writing quality. Comput. J. 57(9), 1318–1330 (2014)

Cummins, R., Zhang, M., Briscoe, T.: Constrained multi-task learning for automated essay scoring. In: ACL (1), pp. 789–799. The Association for Computer Linguistics (2016)

Dikli, S.: An overview of automated scoring of essays. J. Technol. Learn. Assess. 5(1) (2006)

Dong, F., Zhang, Y.: Automatic features for essay scoring - an empirical study. In: EMNLP, pp. 1072–1077. The Association for Computational Linguistics (2016)

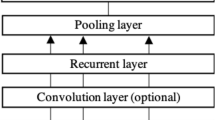

Dong, F., Zhang, Y., Yang, J.: Attention-based recurrent convolutional neural network for automatic essay scoring. In: CoNLL, pp. 153–162. Association for Computational Linguistics (2017)

Foltz, P.W., Laham, D., Landauer, T.K.: Automated essay scoring: applications to educational technology. In: World Conference on Educational Multimedia, Hypermedia and Telecommunications, pp. 939–944 (1999)

Jin, C., He, B., Hui, K., Sun, L.: TDNN: a two-stage deep neural network for prompt-independent automated essay scoring. In: ACL. The Association for Computer Linguistics (2018)

Larkey, L.S.: Automatic essay grading using text categorization techniques. In: SIGIR, pp. 90–95. ACM (1998)

Mcnamara, D.S., Crossley, S.A., Roscoe, R.D., Allen, L.K., Dai, J.: A hierarchical classification approach to automated essay scoring. Assess. Writ. 23, 35–59 (2015)

Phandi, P., Chai, K.M.A., Ng, H.T.: Flexible domain adaptation for automated essay scoring using correlated linear regression. In: EMNLP, pp. 431–439. The Association for Computational Linguistics (2015)

Rudner, L.M.: Automated essay scoring using Bayes’ theorem. Nat. Counc. Measur. Educ. New Orleans La 1(2), 3–21 (2002)

Shermis, M.D., Burstein, J. (eds.): Automated Essay Scoring: A Cross Disciplinary Perspective. Lawrence Erlbaum Associates, Hillsdale (2003)

Taghipour, K., Ngm H.T.: A neural approach to automated essay scoring. In: EMNLP, pp. 1882–1891. The Association for Computational Linguistics (2016)

Tay, Y., Phan, M.C., Tuan, L.A., Hui, S.C.: SkipFlow: Incorporating neural coherence features for end-to-end automatic text scoring. CoRR, abs/1711.04981 (2017)

Williamson, D.M., Xi, X., Jay Breyer, F.: A framework for evaluation and use of automated scoring. Educ. Measur.: Issues Pract. 31(1), 2–13 (2012)

Williamson, D.M.: A framework for implementing automated scoring. In: Annual Meeting of the American Educational Research Association and the National Council on Measurement in Education, San Diego, CA (2009)

Yang, Y., Buckendahl, C.W., Juszkiewicz, P.J., Bhola, D.S.: A review of strategies for validating computer-automated scoring. Appl. Measur. Educ. 15(4), 391–412 (2002)

Yannakoudakis, H., Briscoe, T., Medlock, B.: A new dataset and method for automatically grading ESOL texts. In: ACL, pp. 180–189. The Association for Computer Linguistics (2011)

Zesch, T., Wojatzki, M., Scholten-Akoun, D.: Task-independent features for automated essay grading. In: BEA@NAACL-HLT, pp. 224–232. The Association for Computer Linguistics (2015)

Zou, W.Y., Socher, R., Cer, D.M., Manning, C.D.: Bilingual word embeddings for phrase-based machine translation. In: EMNLP, pp. 1393–1398. ACL (2013)

Acknowledgments

This work is supported by the National Natural Science Foundation of China (61472391).


Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Ran, Y., He, B., Xu, J. (2018). A Study on Performance Sensitivity to Data Sparsity for Automated Essay Scoring. In: Liu, W., Giunchiglia, F., Yang, B. (eds) Knowledge Science, Engineering and Management. KSEM 2018. Lecture Notes in Computer Science, vol 11061. Springer, Cham. https://doi.org/10.1007/978-3-319-99365-2_9


DOI: https://doi.org/10.1007/978-3-319-99365-2_9


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-99364-5

Online ISBN: 978-3-319-99365-2

eBook Packages: Computer Science, Computer Science (R0)