Abstract

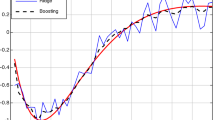

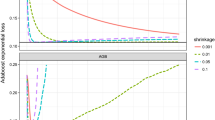

We study two boosting algorithms, Coordinate Ascent Boosting and Approximate Coordinate Ascent Boosting, which are explicitly designed to produce maximum margins. To derive these algorithms, we introduce a smooth approximation of the margin that one can maximize in order to produce a maximum margin classifier. Our first algorithm is simply coordinate ascent on this function, involving a line search at each step. We then make a simple approximation of this line search to obtain our second algorithm. These algorithms are proven to asymptotically achieve maximum margins, and we provide two convergence rate calculations. The second calculation yields a faster rate of convergence than the first, although the first gives a more explicit (still fast) rate. These algorithms are very similar to AdaBoost in that they are based on coordinate ascent, easy to implement, and empirically tend to converge faster than other boosting algorithms. Finally, we attempt to understand AdaBoost in terms of our smooth margin, focusing on cases where AdaBoost exhibits cyclic behavior.
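To make the idea of coordinate ascent on a smooth margin concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the paper's algorithm as specified. The abstract does not give the formula, so the sketch assumes the smooth margin has the form G(λ) = -ln(Σ_i exp(-(Mλ)_i)) / ‖λ‖₁, where M is a matrix with entries M_ij = y_i h_j(x_i) in {-1, +1} and λ ≥ 0 holds the weak-classifier weights. The line search is replaced by a crude grid search, and all function names, the grid, and the stopping rule are hypothetical choices made for this example.

```python
import math

def scores(M, lam):
    """Return M @ lam: one unnormalized margin per training example."""
    return [sum(m * l for m, l in zip(row, lam)) for row in M]

def margin(M, lam):
    """Normalized minimum margin: min_i (M lam)_i / ||lam||_1 (lam >= 0)."""
    return min(scores(M, lam)) / sum(lam)

def smooth_margin(M, lam):
    """Assumed smooth margin: -ln(sum_i exp(-(M lam)_i)) / ||lam||_1."""
    s = scores(M, lam)
    lo = min(s)
    # Log-sum-exp shifted by the smallest score for numerical stability.
    log_f = -lo + math.log(sum(math.exp(-(x - lo)) for x in s))
    return -log_f / sum(lam)

def coordinate_ascent(M, steps=30, alphas=None):
    """Coordinate ascent on smooth_margin with a grid line search (a sketch)."""
    if alphas is None:
        alphas = [0.05 * k for k in range(1, 61)]  # hypothetical step grid
    m, n = len(M), len(M[0])
    lam = [0.0] * n
    best = -math.inf  # smooth margin is undefined at lam = 0
    for _ in range(steps):
        s = scores(M, lam)
        lo = min(s)
        # Example weights proportional to exp(-(M lam)_i), as in AdaBoost.
        w = [math.exp(-(x - lo)) for x in s]
        total = sum(w)
        # Edge of each weak classifier under the current weights.
        edges = [sum(w[i] * M[i][j] for i in range(m)) / total for j in range(n)]
        j = max(range(n), key=edges.__getitem__)
        # Grid line search along coordinate j; accept only an improvement.
        trial = list(lam)
        cand_best, cand_alpha = best, 0.0
        for a in alphas:
            trial[j] = lam[j] + a
            g = smooth_margin(M, trial)
            if g > cand_best:
                cand_best, cand_alpha = g, a
        if cand_alpha == 0.0:
            break  # no grid step improves the smooth margin
        lam[j] += cand_alpha
        best = cand_best
    return lam

# Toy problem: three examples, three weak classifiers, each wrong on one example.
M = [[-1, 1, 1], [1, -1, 1], [1, 1, -1]]
lam = coordinate_ascent(M)
print(smooth_margin(M, lam), margin(M, lam))
```

Under the assumed formula, the smooth margin never exceeds the true normalized margin, so pushing it upward also pushes up a lower bound on the margin. On this toy matrix the largest achievable margin is 1/3, attained by equal weights, which gives a simple sanity check on the printed values.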

This research was partially supported by NSF Grants IIS-0325500, DMS-9810783, and ANI-0085984.

Copyright information

© 2004 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Rudin, C., Schapire, R.E., Daubechies, I. (2004). Boosting Based on a Smooth Margin. In: Shawe-Taylor, J., Singer, Y. (eds) Learning Theory. COLT 2004. Lecture Notes in Computer Science, vol 3120. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-540-27819-1_35

DOI: https://doi.org/10.1007/978-3-540-27819-1_35

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-22282-8

Online ISBN: 978-3-540-27819-1

eBook Packages: Springer Book Archive