Abstract

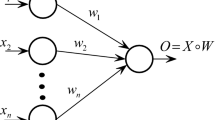

Because all parameters of a conventional fuzzy neural network (FNN) must be adjusted iteratively, learning can be very slow and may get trapped in local minima. To overcome these problems, we propose in this paper a novel FNN that trains quickly and generalizes accurately. We first state the universal approximation theorem for an FNN with random membership function parameters (FNN-RM). Since all membership function parameters are chosen arbitrarily, the proposed FNN-RM algorithm needs to adjust only the output weights of the FNN. Experimental results on function approximation and classification problems show that the new algorithm not only trains thousands of times faster than traditional learning algorithms but also generalizes better than other FNNs.
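The central idea of the abstract is to draw the membership-function parameters at random, keep them fixed, and fit only the output weights. The minimal Python sketch below illustrates that idea; it is not the authors' implementation. Several details are assumptions not taken from the paper: Gaussian membership functions, product inference with normalized firing strengths (fuzzy basis functions), singleton rule consequents, the sampling ranges for centers and widths, and a small ridge term in the least-squares solve. All function names (`random_membership_params`, `fuzzy_basis`, `fit_output_weights`, `predict`) are hypothetical.

```python
import math
import random


def random_membership_params(n_rules, n_inputs, lo, hi, rng):
    """Draw Gaussian membership-function centers and widths at random.

    These parameters are never trained. The sampling ranges are
    illustrative assumptions, not values from the paper.
    """
    span = hi - lo
    centers = [[rng.uniform(lo, hi) for _ in range(n_inputs)] for _ in range(n_rules)]
    widths = [[rng.uniform(0.1, 1.0) * span for _ in range(n_inputs)] for _ in range(n_rules)]
    return centers, widths


def fuzzy_basis(x, centers, widths):
    """Normalized rule firing strengths (fuzzy basis functions).

    A rule's firing strength is the product of its Gaussian memberships,
    i.e. exp(-0.5 * z) with z summed over the inputs. Normalization is done
    in log space (subtracting the max), so it never divides by zero.
    """
    log_w = [
        -0.5 * sum(((xi - ci) / si) ** 2 for xi, ci, si in zip(x, c, s))
        for c, s in zip(centers, widths)
    ]
    m = max(log_w)
    w = [math.exp(v - m) for v in log_w]
    total = sum(w)
    return [v / total for v in w]


def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= f * m[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x


def fit_output_weights(X, t, centers, widths, ridge=1e-6):
    """The only training step: linear least squares for the output weights.

    Solves (H^T H + ridge * I) beta = H^T t, where row n of H holds the
    fuzzy basis values of sample n. The ridge term is an assumption added
    for numerical stability.
    """
    H = [fuzzy_basis(x, centers, widths) for x in X]
    L = len(centers)
    A = [[sum(h[i] * h[j] for h in H) + (ridge if i == j else 0.0) for j in range(L)]
         for i in range(L)]
    b = [sum(h[i] * ti for h, ti in zip(H, t)) for i in range(L)]
    return solve_linear(A, b)


def predict(x, centers, widths, beta):
    """Weighted sum of fuzzy basis functions (singleton consequents)."""
    return sum(bj * hj for bj, hj in zip(beta, fuzzy_basis(x, centers, widths)))


if __name__ == "__main__":
    # Toy usage: approximate sin(x) on [0, 2*pi] with 20 random rules.
    rng = random.Random(0)
    X = [[2 * math.pi * k / 99] for k in range(100)]
    t = [math.sin(x[0]) for x in X]
    centers, widths = random_membership_params(20, 1, 0.0, 2 * math.pi, rng)
    beta = fit_output_weights(X, t, centers, widths)
    rmse = math.sqrt(sum((predict(x, centers, widths, beta) - ti) ** 2
                         for x, ti in zip(X, t)) / len(X))
    print(f"training RMSE: {rmse:.4f}")
```

Because the membership parameters stay fixed, training reduces to a single L×L linear solve (L = number of rules) instead of iterative gradient updates over every parameter, which is what makes this style of training fast. The sketch makes no claim about the speed-up or accuracy figures reported in the paper.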

Copyright information

© 2004 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Wang, L., Liu, B., Wan, C. (2004). A Novel Fuzzy Neural Network with Fast Training and Accurate Generalization. In: Yin, FL., Wang, J., Guo, C. (eds) Advances in Neural Networks – ISNN 2004. ISNN 2004. Lecture Notes in Computer Science, vol 3173. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-540-28647-9_46

DOI: https://doi.org/10.1007/978-3-540-28647-9_46

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-22841-7

Online ISBN: 978-3-540-28647-9

eBook Packages: Springer Book Archive