Abstract

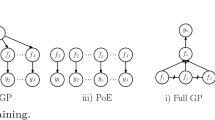

Kernel-based non-parametric models have been applied widely in recent years. However, their computational complexity limits their applicability to problems with large data-sets. In this paper we develop a filtering approach based on a Gaussian process regression model. The idea is to generate a low-dimensional set of filtered data that retains a high proportion of the information contained in the original large data-set. Model learning and prediction are then based on the filtered data, which decreases the computational burden dramatically.
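The idea of predicting from filtered data can be illustrated with a minimal sketch. The abstract does not say how the filter is constructed, so everything below is an assumption made for illustration: the filter `Phi` is taken to be the projection onto the leading `k` eigenvectors of the kernel matrix, the kernel is a squared-exponential with fixed hyperparameters, and the names `rbf_kernel` and `filtered_gp_predict` are hypothetical. Computing a full eigendecomposition is itself cubic in the number of observations, so this sketch only shows where the saving arises at prediction time (a `k × k` solve instead of an `n × n` one); it is not the chapter's algorithm.

```python
import numpy as np


def rbf_kernel(a, b, lengthscale=1.0, variance=1.0):
    """Squared-exponential covariance between 1-D input arrays a and b."""
    d = a[:, None] - b[None, :]
    return variance * np.exp(-0.5 * (d / lengthscale) ** 2)


def filtered_gp_predict(x, y, x_star, k, noise_var=0.01):
    """GP prediction at x_star from k filtered observations z = Phi @ y.

    Assumption (not from the source): Phi holds the top-k eigenvectors of
    the kernel matrix, so its rows are orthonormal.
    """
    K = rbf_kernel(x, x)                      # (n, n) prior covariance

    # eigh returns eigenvalues in ascending order, so the last k columns
    # correspond to the k largest eigenvalues.
    _, eigvecs = np.linalg.eigh(K)
    Phi = eigvecs[:, -k:].T                   # (k, n) linear filter

    z = Phi @ y                               # filtered data, length k

    # Cov(z) = Phi (K + noise_var I) Phi^T; Phi Phi^T = I for orthonormal rows.
    C_z = Phi @ K @ Phi.T + noise_var * np.eye(k)

    # Cov(f(x_star), z) = K(x_star, x) Phi^T
    K_star = rbf_kernel(x_star, x) @ Phi.T    # (m, k)

    # Standard Gaussian conditioning, but with a k x k system.
    mean = K_star @ np.linalg.solve(C_z, z)
    prior_var = np.diag(rbf_kernel(x_star, x_star))
    var = prior_var - np.sum(K_star * np.linalg.solve(C_z, K_star.T).T, axis=1)
    return mean, var


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = np.linspace(0.0, 10.0, 200)
    y = np.sin(x) + 0.1 * rng.standard_normal(x.size)
    x_star = np.linspace(1.0, 9.0, 5)
    mean, var = filtered_gp_predict(x, y, x_star, k=20)
    print(np.round(mean, 2), np.round(var, 4))
```

In this sketch 200 noisy observations are reduced to 20 filtered values before conditioning. How well the reduced set preserves information depends on how fast the kernel eigenvalues decay, which in turn depends on the assumed lengthscale.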

Copyright information

© 2005 Springer-Verlag Berlin Heidelberg

About this chapter

Cite this chapter

Shi, J.Q., Murray-Smith, R., Titterington, D.M., Pearlmutter, B.A. (2005). Filtered Gaussian Processes for Learning with Large Data-Sets. In: Murray-Smith, R., Shorten, R. (eds) Switching and Learning in Feedback Systems. Lecture Notes in Computer Science, vol 3355. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-540-30560-6_5

DOI: https://doi.org/10.1007/978-3-540-30560-6_5

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-24457-8

Online ISBN: 978-3-540-30560-6

eBook Packages: Computer Science, Computer Science (R0)