Abstract

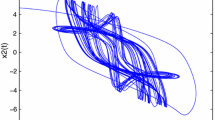

This paper investigates the global asymptotic and exponential stability of a class of neural networks with distributed time-varying delays. Using an appropriate Lyapunov-Krasovskii functional and the linear matrix inequality (LMI) technique, two delay-dependent sufficient conditions in LMI form are obtained that guarantee the global asymptotic and exponential stability of the networks considered. The proposed stability criteria require neither monotonicity of the activation functions nor differentiability of the distributed time-varying delays, so the results generalize and improve on those in earlier publications. An example is given to show the effectiveness of the obtained conditions.

Copyright information

© 2007 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Song, Q., Zhou, J. (2007). Novel LMI Criteria for Stability of Neural Networks with Distributed Delays. In: Liu, D., Fei, S., Hou, ZG., Zhang, H., Sun, C. (eds) Advances in Neural Networks – ISNN 2007. ISNN 2007. Lecture Notes in Computer Science, vol 4491. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-540-72383-7_114

DOI: https://doi.org/10.1007/978-3-540-72383-7_114

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-72382-0

Online ISBN: 978-3-540-72383-7

eBook Packages: Computer Science (R0)